Reproduce Performance Discussion #4

@EthanZhangYi Thanks for your attention and experiments. No, with the default settings this code should achieve >79% mIoU on the val set. I will upload the training log and details of the experimental environment later. I hope this helps you reproduce the result.

Python 3.6.4. The training log is here.

@HqWei

@speedinghzl I also found that the log you supplied does NOT match this repo. Could you retrain the model using exactly this repo?

This repo was ported from our cluster version with minor changes (e.g., module names) that should not affect performance. I will check the differences between the two versions and run this repo. Thanks.

@speedinghzl Thanks for your reply.

This is indeed the reason. You are so great.

@EthanZhangYi Hi! Thanks for sharing your results. Did you use OHEM or the auxiliary loss during training?
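For context on the OHEM question: a common pixel-level variant of online hard example mining computes the loss only over "hard" pixels, i.e. those whose predicted probability for the ground-truth class is at or below a threshold, while always keeping at least a minimum number of pixels. The sketch below shows that selection rule in plain Python; the names `ohem_select` and `ohem_nll`, the default `thresh=0.7`, and the tiny `min_kept` are illustrative assumptions, not this repo's loss code (a real loss would work on PyTorch tensors and usually keeps far more pixels).

```python
import math
from typing import List, Sequence

IGNORE_LABEL = 255  # assumption: Cityscapes-style ignore index


def ohem_select(gt_probs: Sequence[float], labels: Sequence[int],
                thresh: float = 0.7, min_kept: int = 4) -> List[int]:
    """Return the indices of the "hard" pixels that contribute to the loss.

    gt_probs[i] is the softmax probability assigned to the ground-truth
    class of pixel i; labels[i] is that class, or IGNORE_LABEL.
    """
    valid = [i for i, lab in enumerate(labels) if lab != IGNORE_LABEL]
    if min_kept >= len(valid):
        return valid  # too few valid pixels: keep all of them
    threshold = thresh
    if min_kept > 0:
        # Raise the threshold, if needed, so at least min_kept pixels survive.
        kth = sorted(gt_probs[i] for i in valid)[min_kept - 1]
        threshold = max(thresh, kth)
    return [i for i in valid if gt_probs[i] <= threshold]


def ohem_nll(gt_probs: Sequence[float], labels: Sequence[int],
             thresh: float = 0.7, min_kept: int = 4) -> float:
    """Mean negative log-likelihood over the selected hard pixels only."""
    kept = ohem_select(gt_probs, labels, thresh, min_kept)
    if not kept:
        return 0.0
    return sum(-math.log(gt_probs[i]) for i in kept) / len(kept)
```

Easy, confidently classified pixels (probability above the threshold) drop out of the average, so the gradient concentrates on the pixels the model still gets wrong.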

@EthanZhangYi @lxtGH @HqWei Hi, the trained models are now available. You can find the download links in the ReadMe.

@speedinghzl Thanks for your work.

@speedinghzl I tried to evaluate with your uploaded model, but I got an error.

@EthanZhangYi @lxtGH @HqWei @mingminzhen

@sydney0zq @speedinghzl @lxtGH @HqWei @mingminzhen You need to update your code. I am now training the model with the latest code and will report my results later. Regards

@EthanZhangYi What was changed in this update?

@EthanZhangYi @speedinghzl Thanks. With your updated code it indeed reaches the same performance.

You are welcome to share your reproduced performance here.

@speedinghzl @EthanZhangYi I used 4 NVIDIA P100 GPUs to train CCNet with the default settings. The test mIoU on the val set is only 78.58.
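Val numbers like the 78.58 above are mean IoU scores. As a sketch of how such a number is usually computed (not necessarily this repo's evaluation script): accumulate one confusion matrix over all val pixels, skip the ignore label (assumed to be 255 here), then average the per-class IoU = TP / (TP + FP + FN). The helper names below are hypothetical.

```python
from typing import List, Sequence

IGNORE_LABEL = 255  # assumption: Cityscapes-style ignore index


def confusion_matrix(preds: Sequence[int], labels: Sequence[int],
                     num_classes: int) -> List[List[int]]:
    """Rows are ground-truth classes, columns are predicted classes."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for pred, label in zip(preds, labels):
        if label == IGNORE_LABEL:
            continue  # ignored pixels are not counted at all
        cm[label][pred] += 1
    return cm


def mean_iou(cm: List[List[int]]) -> float:
    """Average of per-class IoU = TP / (TP + FP + FN)."""
    n = len(cm)
    ious = []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                       # pixels of class c that were missed
        fp = sum(cm[r][c] for r in range(n)) - tp  # other classes predicted as c
        denom = tp + fp + fn
        if denom > 0:  # skip classes absent from both labels and predictions
            ious.append(tp / denom)
    return sum(ious) / len(ious) if ious else 0.0
```

Summing the matrices over the whole split before dividing is the usual Cityscapes convention and gives a different number than averaging per-image IoUs. How classes absent from both labels and predictions are handled also varies between evaluation scripts.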

Please refer to the ReadMe. You can run the training multiple times to achieve better performance. You are welcome to provide a solution that stabilizes the result.
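Fixing random seeds does not remove seed-to-seed variance, but it makes a given run repeatable, which helps separate genuine training instability from run-to-run noise. A minimal sketch, assuming a PyTorch setup; `seed_everything` is a hypothetical helper name, and some CUDA kernels and multi-worker data loading can remain nondeterministic even with these settings.

```python
import random


def seed_everything(seed: int = 0) -> None:
    """Seed Python, NumPy and PyTorch RNGs (the last two only if installed)."""
    random.seed(seed)
    try:
        import numpy as np
        np.random.seed(seed)
    except ImportError:
        pass
    try:
        import torch
        torch.manual_seed(seed)
        torch.cuda.manual_seed_all(seed)  # silently ignored without CUDA
        # Trade cuDNN autotuning speed for repeatable convolution algorithms.
        torch.backends.cudnn.deterministic = True
        torch.backends.cudnn.benchmark = False
    except ImportError:
        pass
```

Reporting the mean and spread over several seeds is usually more informative than a single best run.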

Hi. Could you guess what makes the performance unstable? Generative models are usually unstable to train, but for segmentation I think training should be stable.

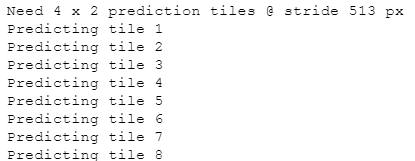

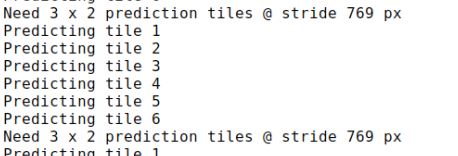

@sydney0zq @speedinghzl @lxtGH @HqWei @mingminzhen

The PSPNet result reported in the paper is 78.5%, which is well reproduced. Checklist:

Env: Python version 3.6.3
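When comparing reproduction results, it helps if everyone posts the same environment fields. A minimal sketch of collecting them; `environment_report` is a hypothetical helper, and the torch/torchvision fields are filled only if those packages are installed.

```python
import platform


def environment_report() -> dict:
    """Collect the environment details usually asked for in reproduction threads."""
    info = {
        "python": platform.python_version(),
        "os": platform.platform(),
    }
    try:
        import torch
        info["torch"] = torch.__version__
        info["cuda"] = torch.version.cuda               # None for CPU-only builds
        info["cudnn"] = torch.backends.cudnn.version()  # None if cuDNN is unavailable
        info["gpus"] = [torch.cuda.get_device_name(i)
                        for i in range(torch.cuda.device_count())]
    except ImportError:
        info["torch"] = None
    try:
        import torchvision
        info["torchvision"] = torchvision.__version__
    except ImportError:
        info["torchvision"] = None
    return info


if __name__ == "__main__":
    for key, value in environment_report().items():
        print(f"{key}: {value}")
```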

@Ethan: The unstable performance is not caused by class imbalance, because all methods are trained on the same dataset. I suspect it comes from the attention. I used attention and ran into the same problem. If you print the attention, you can see that almost all values become zero and only a few reach 0.2 or 0.3. This produces dead features that never learn. Please print it and check whether my point holds.
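The check suggested above can be made quantitative. A minimal sketch, assuming you have already extracted one attention row from the model (one query position's softmax weights over the positions in its row and column; the extraction step is left out). `attention_stats` and the `eps = 1e-3` cutoff for "near zero" are hypothetical choices.

```python
import math
from typing import Dict, List, Sequence


def softmax(scores: Sequence[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_stats(weights: Sequence[float], eps: float = 1e-3) -> Dict[str, float]:
    """Summarize one attention row whose weights sum to 1."""
    n = len(weights)
    entropy = -sum(w * math.log(w) for w in weights if w > 0)
    return {
        "max": max(weights),
        "frac_near_zero": sum(1 for w in weights if w < eps) / n,
        # 1.0 for uniform attention, close to 0.0 for (near) one-hot attention
        "norm_entropy": entropy / math.log(n) if n > 1 else 0.0,
    }
```

A high `frac_near_zero` together with a low `norm_entropy`, averaged over many query positions, would support the "almost all values become zero" observation. A single row says little on its own.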

@John1231983 |

@EthanZhangYi @mingminzhen @John1231983 @sydney0zq Nice discussion!

@speedinghzl Thanks for the reply. I've updated the

@EthanZhangYi Does ID mean you ran it 3 times with the same default setting? I tried this code on PSPNet and got the same results as your ID1. Did you use single-scale crop testing?
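Single-scale crop (sliding-window) testing runs the network on overlapping fixed-size crops of the full-resolution image and averages the predictions where crops overlap. A minimal sketch of the crop grid; the tile size 769 and overlap 1/3 are assumed values, and `sliding_windows` is a hypothetical helper that returns box coordinates only (accumulating and averaging the per-crop logits is left out).

```python
import math
from typing import List, Tuple


def sliding_windows(height: int, width: int, tile: int = 769,
                    overlap: float = 1 / 3) -> List[Tuple[int, int, int, int]]:
    """Return (y1, x1, y2, x2) crops covering an image at a single scale."""
    stride = math.ceil(tile * (1 - overlap))
    rows = max(math.ceil((height - tile) / stride) + 1, 1)
    cols = max(math.ceil((width - tile) / stride) + 1, 1)
    boxes = []
    for r in range(rows):
        for c in range(cols):
            y2 = min(r * stride + tile, height)
            x2 = min(c * stride + tile, width)
            # Shift border crops back inside the image so they stay full-size.
            y1, x1 = max(y2 - tile, 0), max(x2 - tile, 0)
            boxes.append((y1, x1, y2, x2))
    return boxes
```

For a 1024 x 2048 Cityscapes image these assumed settings give a 2 x 4 grid of full-size crops. Whether evaluation uses this, a whole-image pass, or multi-scale/flip testing can easily account for differences of a few tenths of a point.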

Hi everybody, someone has reproduced the performance of CCNet on Cityscapes and COCO. You can find the discussions in the issues "How to use OHEM loss function?" and "Param Initialization". Cheers!

Hi, I noticed that CCNet uses 60000 iterations while PSPNet and DeepLabv3 (in your implementation repo) use 40000 iterations. Can we say that more iterations improve performance?
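One reason the iteration count is hard to compare in isolation: segmentation code of this kind commonly uses the poly schedule lr = base_lr * (1 - iter / max_iter) ** power, so changing max_iter also changes the learning rate at every step. A minimal sketch, assuming power = 0.9 and a base learning rate of 0.01 (both assumptions here, not values confirmed in this thread):

```python
def lr_poly(base_lr: float, it: int, max_iter: int, power: float = 0.9) -> float:
    """Poly decay: base_lr * (1 - it / max_iter) ** power."""
    return base_lr * (1 - it / max_iter) ** power


if __name__ == "__main__":
    base_lr = 0.01  # assumed base learning rate
    for it in (0, 10000, 20000, 30000, 39000):
        print(f"iter {it:>5}: 40k schedule {lr_poly(base_lr, it, 40000):.5f}, "
              f"60k schedule {lr_poly(base_lr, it, 60000):.5f}")
```

At any given iteration the 60000-iteration schedule still uses a higher learning rate than the 40000-iteration one, so a gain from training longer mixes "more updates" with "a slower decay". A fair comparison would train each model under both schedules.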

Thanks for the nice work.

However, after downloading the code and training the model, I could not reproduce the results reported in the paper.

Setting

- Dataset: Cityscapes
- Train with the train split (2975 images).
- Evaluate with the val split.
- Follow all details in this repo.
- Train models with different max_iterations (60000 is the default setting in this repo).

Results in paper

Result

Env

- pytorch 0.4.0
- torchvision 0.2.1
- 4 × TITAN XP

Are there any tricks in the implementation?
