This video demos the use of the GCP ai-platform API through Google's command-line interface. Please unmute the video to hear the sound. Alternatively, the 16 steps are listed below the video, each followed by a screenshot of its execution:

DEPP.434-55.DV6.mp4

https://cloud.google.com/sdk/gcloud/reference/services/enable

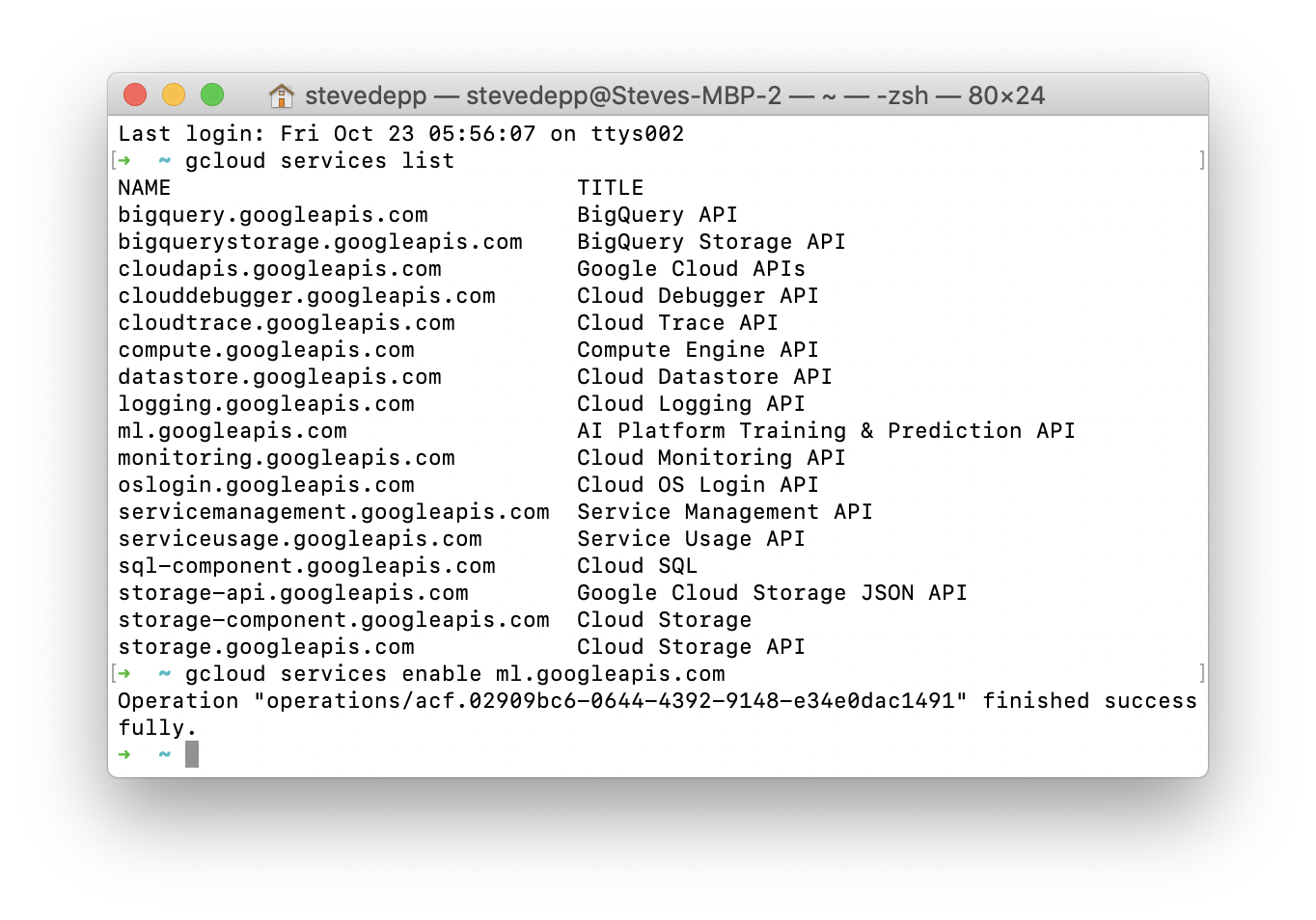

- step 1: Enable the API

gcloud services list

gcloud services enable ml.googleapis.com
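The two commands in step 1 can be folded into a helper that is safe to re-run. This is a minimal sketch, assuming bash and a gcloud that is already authenticated and pointed at the right project; the function name `enable_ml_api` is hypothetical. It lists enabled services by name and only calls `gcloud services enable` when `ml.googleapis.com` is missing.

```shell
#!/usr/bin/env bash
# Hypothetical helper: enable the AI Platform (ml.googleapis.com) API only if needed.
# Assumes gcloud is installed, authenticated and set to the demo project.
enable_ml_api() {
  local service="ml.googleapis.com"
  # List enabled services, one service name per line, and look for an exact match.
  if gcloud services list --enabled --format='value(config.name)' | grep -Fx "$service" >/dev/null; then
    echo "$service is already enabled"
  else
    gcloud services enable "$service"
  fi
}
```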

https://cloud.google.com/sdk/gcloud/reference/ai-platform/models/create

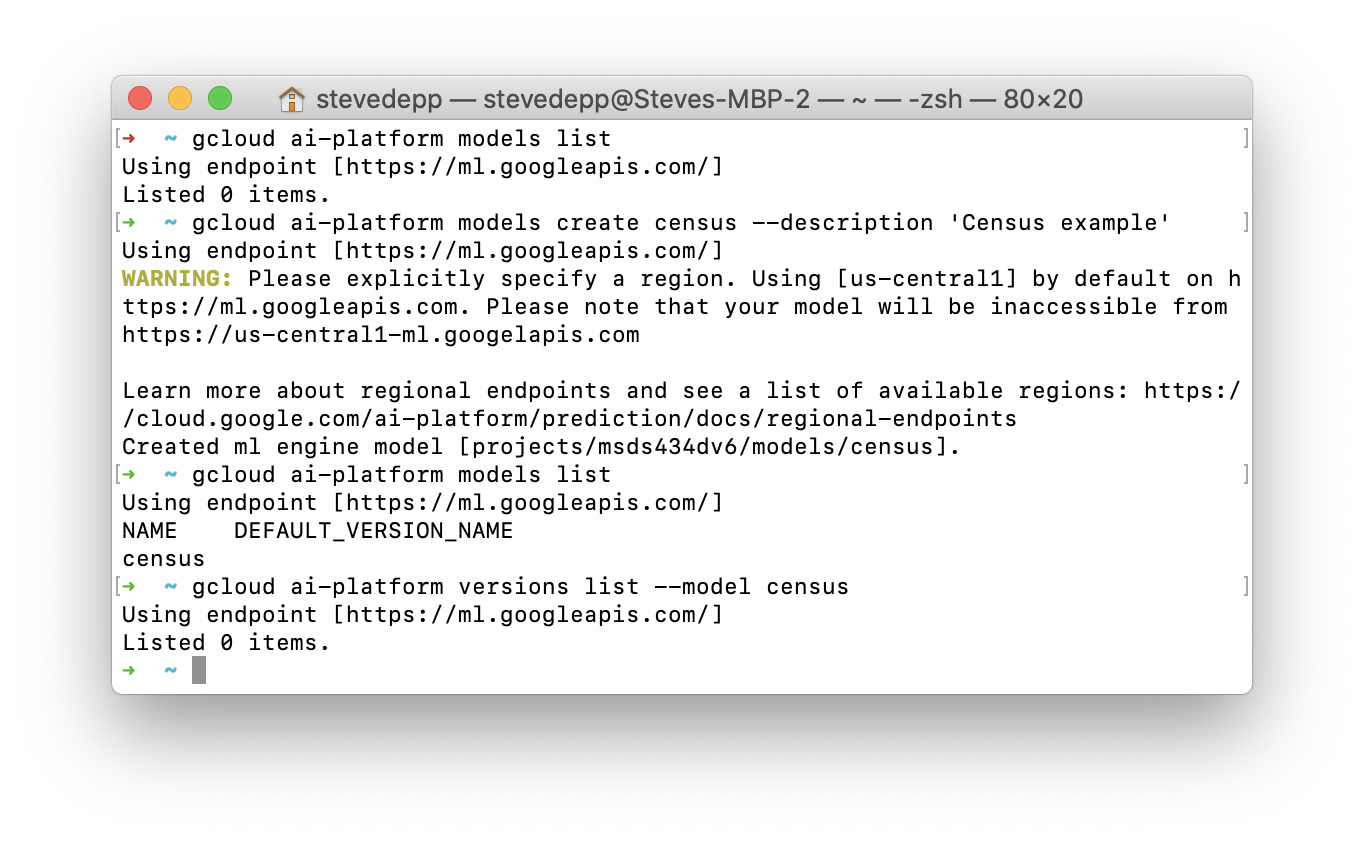

- step 2: create model

gcloud ai-platform models create census --description 'Census example'

gcloud ai-platform models list

gcloud ai-platform versions list --model census
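Step 2 can likewise be made re-runnable. A sketch, assuming bash; `create_model_if_missing` is a hypothetical name, and it treats any failure of `gcloud ai-platform models describe` (not only "not found") as meaning the model still has to be created.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create the model resource only if describe cannot find it.
# Defaults are the model name and description used in step 2.
create_model_if_missing() {
  local model="${1:-census}"
  local description="${2:-Census example}"
  # Any non-zero exit from describe is treated as "model does not exist yet".
  if gcloud ai-platform models describe "$model" >/dev/null 2>&1; then
    echo "model $model already exists"
  else
    gcloud ai-platform models create "$model" --description "$description"
  fi
  # Show the model's versions (none until step 11).
  gcloud ai-platform versions list --model "$model"
}
```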

https://github.com/amygdala/tensorflow-workshop/tree/master/workshop_sections/wide_n_deep

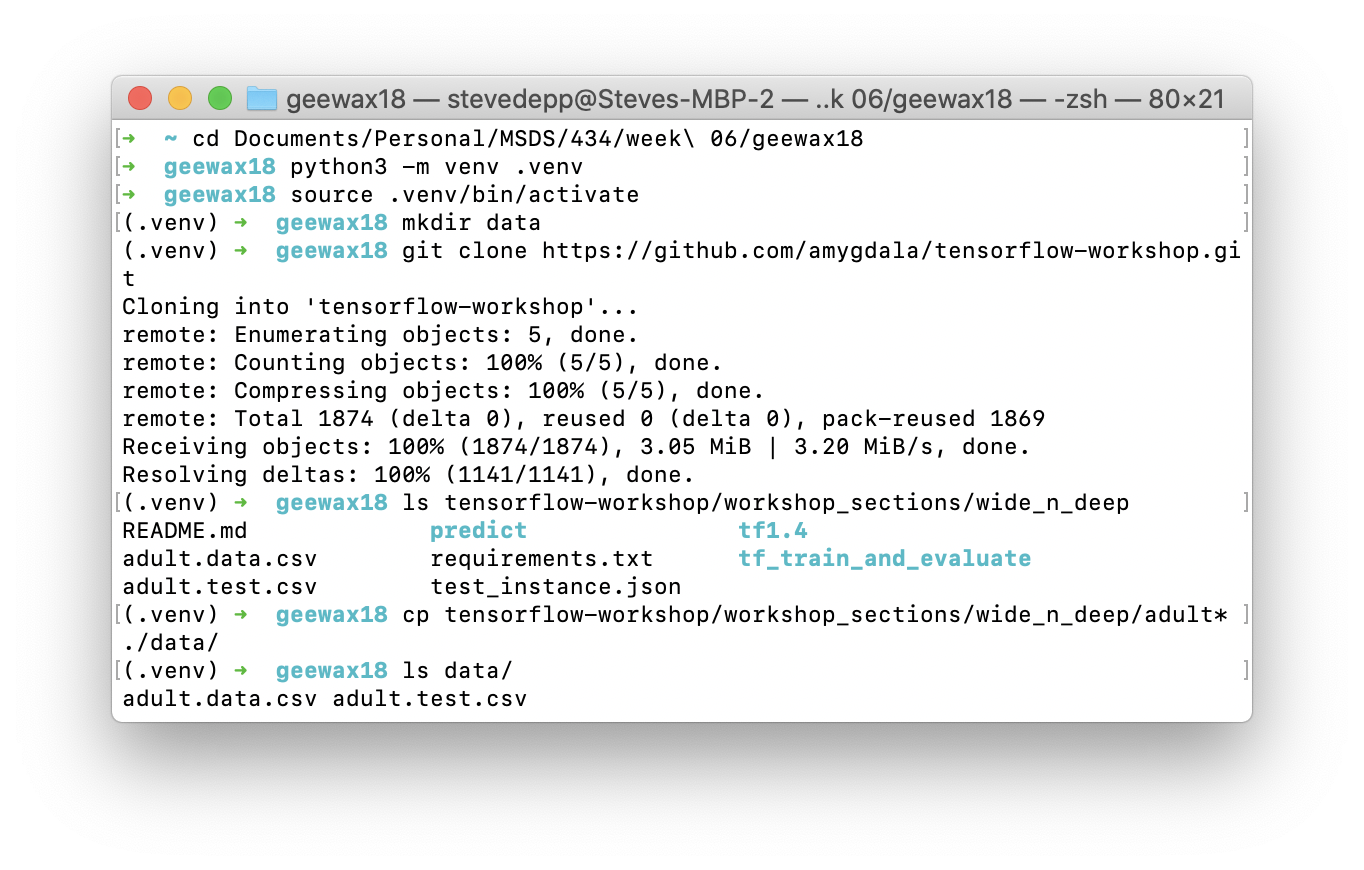

- step 3: set up the environment

python3 -m venv .venv

source .venv/bin/activate

mkdir data

- step 4: retrieve the data

git clone https://github.com/amygdala/tensorflow-workshop.git

ls tensorflow-workshop/workshop_sections/wide_n_deep

cp tensorflow-workshop/workshop_sections/wide_n_deep/adult* ./data/
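Steps 3 and 4 amount to a one-time local setup. The sketch below assumes bash, python3 and git on the PATH and skips work that has already been done; `setup_census_data` is a hypothetical name. Activating the virtualenv (`source .venv/bin/activate`) is left to the interactive shell.

```shell
#!/usr/bin/env bash
# Hypothetical helper for steps 3-4: create a virtualenv and a data folder, then
# copy the census (adult*) files from the tensorflow-workshop repo into ./data.
setup_census_data() {
  local repo_url="https://github.com/amygdala/tensorflow-workshop.git"
  local repo_dir="tensorflow-workshop"
  local src="$repo_dir/workshop_sections/wide_n_deep"

  # Step 3: isolated Python environment plus a local data folder.
  [ -d .venv ] || python3 -m venv .venv
  mkdir -p data

  # Step 4: clone only once, then copy the adult* data files.
  [ -d "$repo_dir" ] || git clone "$repo_url" "$repo_dir"
  cp "$src"/adult* ./data/
  ls data
}
```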

https://cloud.google.com/storage/docs/gsutil/commands/mb

- step 5: move the data to Google Cloud Storage

gsutil cp ./data/adult.test.csv gs://dv-auto-ml-depp/data/

gsutil cp ./data/adult.data.csv gs://dv-auto-ml-depp/data/

gsutil ls gs://dv-auto-ml-depp/data
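Step 5 assumes the bucket already exists; the linked `gsutil mb` page is how it would be created. The sketch below, assuming bash and gsutil, creates the bucket only when `gsutil ls -b` cannot find it and then uploads both CSVs under a `data/` prefix. The helper name and the `us-central1` bucket location are assumptions (chosen to match the training region in step 7).

```shell
#!/usr/bin/env bash
# Hypothetical helper for step 5: ensure the bucket exists, then upload the CSVs.
# The bucket default is the one used in this demo; the location is an assumption.
upload_census_data() {
  local bucket="${1:-gs://dv-auto-ml-depp}"
  local region="${2:-us-central1}"

  # 'gsutil ls -b' fails when the bucket itself does not exist.
  if ! gsutil ls -b "$bucket" >/dev/null 2>&1; then
    gsutil mb -l "$region" "$bucket"
  fi

  # The trailing slash makes data/ act as a destination folder.
  gsutil cp ./data/adult.data.csv ./data/adult.test.csv "$bucket/data/"
  gsutil ls "$bucket/data"
}
```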

https://github.com/GoogleCloudPlatform/cloudml-samples

- step 6: retrieve the canned model

git clone https://github.com/GoogleCloudPlatform/cloudml-samples

ls cloudml-samples/census/tensorflowcore/trainer

cd cloudml-samples/census/tensorflowcore

https://cloud.google.com/sdk/gcloud/reference/ai-platform/jobs/submit/training

https://cloud.google.com/ai-platform/prediction/docs/runtime-version-list

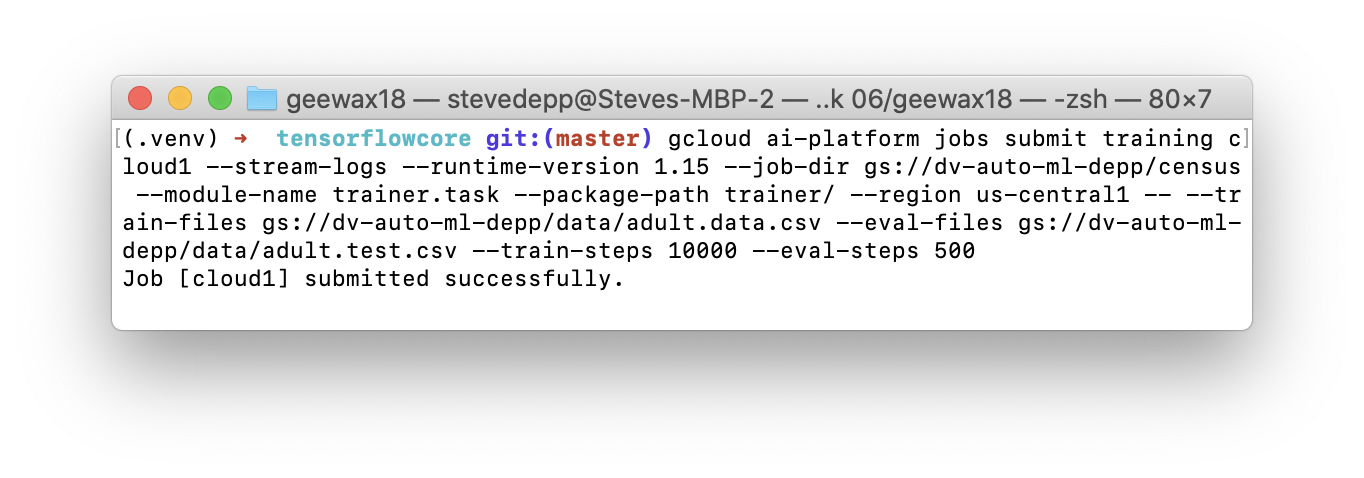

- step 7: submit the job

gcloud ai-platform jobs submit training cloud1 --stream-logs --runtime-version 1.15 --job-dir gs://dv-auto-ml-depp/census --module-name trainer.task --package-path trainer/ --region us-central1 -- --train-files gs://dv-auto-ml-depp/data/adult.data.csv --eval-files gs://dv-auto-ml-depp/data/adult.test.csv --train-steps 10000 --eval-steps 500
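Because an AI Platform job ID cannot be reused, resubmitting step 7 under the same name (`cloud1`) would be rejected. A sketch, assuming bash and that it is run from `cloudml-samples/census/tensorflowcore`: the hypothetical `submit_census_training` helper derives a unique job ID from a timestamp and keeps every flag from step 7. Everything after the bare `--` is handed to `trainer.task` rather than interpreted by gcloud.

```shell
#!/usr/bin/env bash
# Hypothetical helper for step 7; run from cloudml-samples/census/tensorflowcore
# so that trainer/ is the local training package.
submit_census_training() {
  local bucket="${1:-gs://dv-auto-ml-depp}"
  local job
  # Job IDs must be unique, so append a timestamp (letters, digits, underscores only).
  job="census_$(date +%Y%m%d_%H%M%S)"

  # Flags before the bare '--' configure the job; flags after it go to trainer.task.
  gcloud ai-platform jobs submit training "$job" \
    --stream-logs \
    --runtime-version 1.15 \
    --job-dir "$bucket/census" \
    --module-name trainer.task \
    --package-path trainer/ \
    --region us-central1 \
    -- \
    --train-files "$bucket/data/adult.data.csv" \
    --eval-files "$bucket/data/adult.test.csv" \
    --train-steps 10000 \
    --eval-steps 500
}
```

Adding `--python-version 3.7` to the gcloud flags should move the job off the Python 2.7 default noted in step 8; that is an untested suggestion, not something the demo ran.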

- step 8: read the logs

- it took 30 minutes to get the log results

- the logs note old versions of some dependencies

- Python 2.7 reached end of life at Google in January 2020, but it still seems to run

- at 4.65 global steps/sec, 10,000 steps take about 10,000 / 4.65 / 60 ≈ 36 minutes

- we can also see the logs in the GCP console: https://console.cloud.google.com/logs/query;query=resource.labels.job_id%3D%22cloud1%22%20timestamp%3E%3D%222020-10-23T07:43:09Z%22?project=msds434dv6
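The step 8 arithmetic and log viewing can be scripted as well. A sketch, assuming bash and awk; both helper names are hypothetical. `estimate_training_minutes` divides the step count by the observed global steps per second and by 60, and `follow_job_logs` re-attaches to a job's log stream with `gcloud ai-platform jobs stream-logs`, which helps when a job was submitted without `--stream-logs`.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for step 8.

# Rough wall-clock estimate in minutes: steps / (global steps per second) / 60.
estimate_training_minutes() {
  local steps="$1" steps_per_sec="$2"
  awk -v s="$steps" -v r="$steps_per_sec" 'BEGIN { printf "%.1f\n", s / r / 60 }'
}

# Re-attach to the log stream of an already submitted job (default: cloud1).
follow_job_logs() {
  gcloud ai-platform jobs stream-logs "${1:-cloud1}"
}
```

For example, `estimate_training_minutes 10000 4.65` prints `35.8`.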

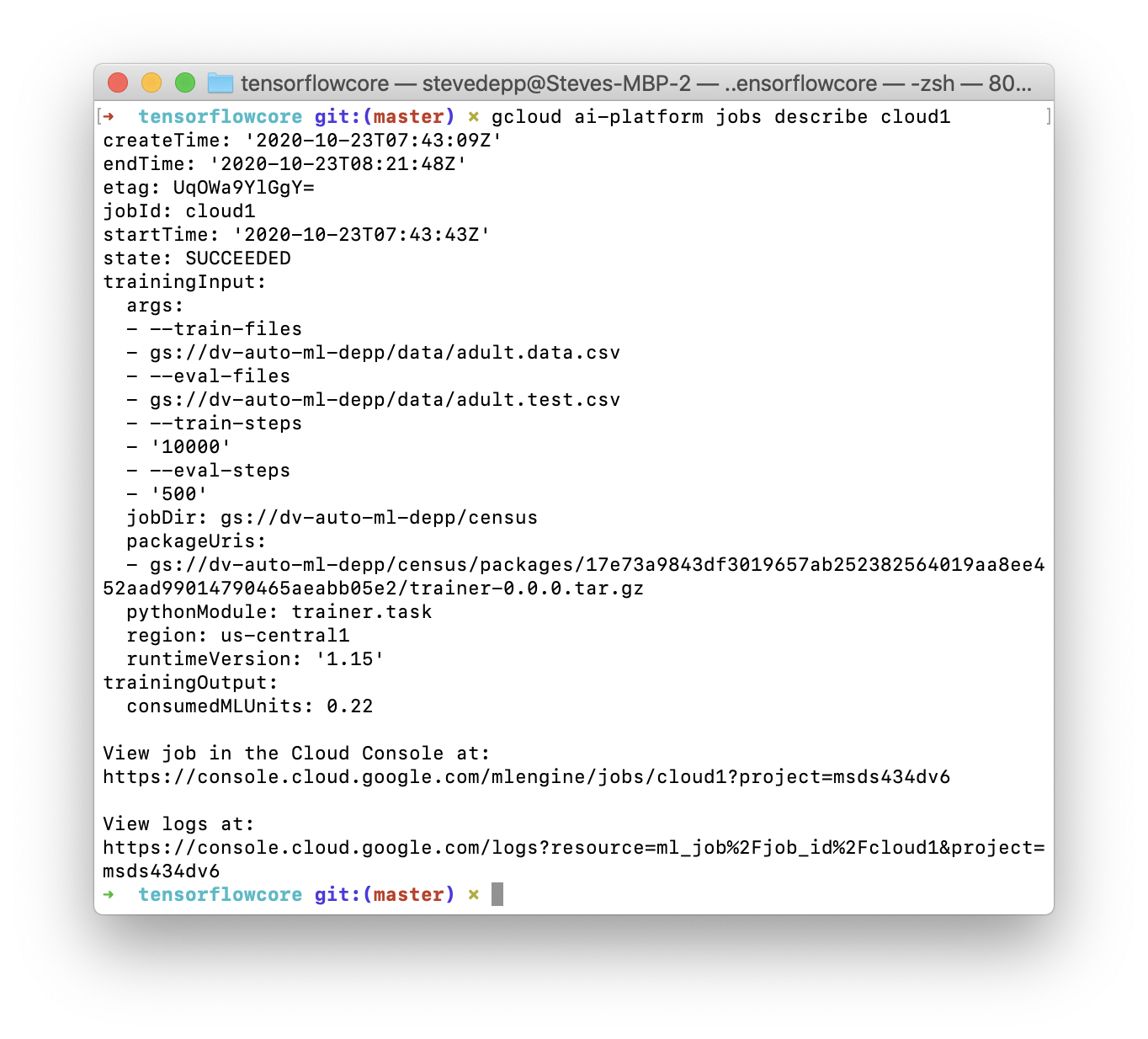

- step 9: review the job

gcloud ai-platform jobs describe cloud1
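Rather than re-running `describe` by hand in step 9, a small polling loop can wait for the job to finish. A sketch, assuming bash; `wait_for_job` and the `POLL_SECONDS` variable are hypothetical. It reads only the job's `state` field and stops on the terminal states SUCCEEDED, FAILED and CANCELLED.

```shell
#!/usr/bin/env bash
# Hypothetical helper for step 9: poll a job's state until it finishes.
# POLL_SECONDS is an assumed environment knob (default 60 seconds).
wait_for_job() {
  local job="${1:-cloud1}" poll="${POLL_SECONDS:-60}" state
  while true; do
    # value(state) prints just the state, e.g. QUEUED, RUNNING or SUCCEEDED.
    state="$(gcloud ai-platform jobs describe "$job" --format='value(state)')"
    echo "$(date +%H:%M:%S) $job ${state:-<unknown>}"
    case "$state" in
      SUCCEEDED) return 0 ;;
      FAILED | CANCELLED) return 1 ;;
      "") echo "could not read the state of $job" >&2; return 2 ;;
    esac
    sleep "$poll"
  done
}
```

For example, `POLL_SECONDS=120 wait_for_job cloud1 && echo done`.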

- step 10: review the output files and model stored in the bucket

gsutil ls gs://dv-auto-ml-depp/census

gsutil ls gs://dv-auto-ml-depp/census/export
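In this demo, `--origin` in step 11 points straight at `census/export`, which implies `saved_model.pb` sits directly in that folder. Estimator-style exports often nest the SavedModel in a timestamped subfolder instead, so a helper that locates the file can save a failed deploy. A sketch, assuming bash and gsutil; `find_saved_model_dir` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Hypothetical helper for step 10: print the directory that holds saved_model.pb,
# i.e. a candidate value for --origin in step 11.
find_saved_model_dir() {
  local export_dir="${1:-gs://dv-auto-ml-depp/census/export}"
  # List every object under the export dir recursively, keep the first
  # saved_model.pb, and strip the file name to get its directory.
  gsutil ls -r "$export_dir" | grep '/saved_model\.pb$' | head -n 1 | sed 's#/saved_model\.pb$##'
}
```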

https://cloud.google.com/sdk/gcloud/reference/ai-platform/versions/create

- step 11: create version 1 of the model

gcloud ai-platform versions create v1 --model census --staging-bucket gs://dv-auto-ml-depp --origin gs://dv-auto-ml-depp/census/export --runtime-version 1.15

- note for step 11: the documentation is a bit sketchy here.

- version_name is the only mandatory argument and --model the only mandatory flag, but errors are thrown unless you also include these flags: --staging-bucket, --origin and --runtime-version

https://cloud.google.com/sdk/gcloud/reference/ai-platform/versions/create#--model

https://cloud.google.com/sdk/gcloud/reference/ai-platform/versions/create#--staging-bucket

https://cloud.google.com/sdk/gcloud/reference/ai-platform/versions/create#--origin

https://cloud.google.com/sdk/gcloud/reference/ai-platform/versions/create#--runtime-version
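Step 11, with the flags that turned out to be required in practice, can be wrapped as follows. A sketch, assuming bash; `create_model_version` is hypothetical and its defaults are the values used in step 11.

```shell
#!/usr/bin/env bash
# Hypothetical helper for step 11: deploy a SavedModel directory as a model version,
# always passing --staging-bucket, --origin and --runtime-version.
create_model_version() {
  local version="${1:-v1}" model="${2:-census}" bucket="${3:-gs://dv-auto-ml-depp}"
  local origin="${4:-$bucket/census/export}"
  gcloud ai-platform versions create "$version" \
    --model "$model" \
    --staging-bucket "$bucket" \
    --origin "$origin" \
    --runtime-version 1.15
}
```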


https://cloud.google.com/sdk/gcloud/reference/ai-platform/predict

- step 12: locate a test.json file to make a single online prediction from version 1 of the model

ls ../test.*

gcloud ai-platform predict --model census --version v1 --json-instances ../test.json

→ the model predicts “<=50k” with 78.4% probability

cat test.json

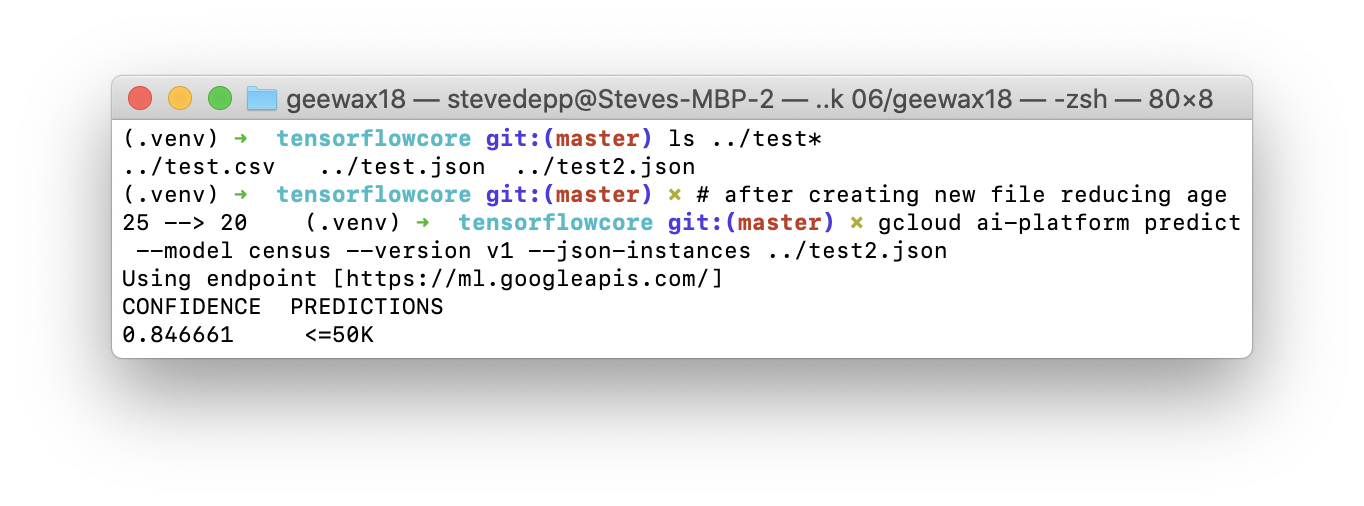

- step 13: copy the test.json file into test2.json and check the model's sensitivity by changing the age feature from 25 to 20

ls ../test*

gcloud ai-platform predict --model census --version v1 --json-instances ../test2.json

→ the model predicts “<=50k” with 84.7% probability

cat test2.json
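Step 13's copy-and-edit can be done without an editor. This sketch assumes bash plus python3, and assumes, as step 13 implies, that each line of the instances file is one JSON object with an `age` key; `set_age` is a hypothetical name. It overrides `age` on every line and writes the result to a new file.

```shell
#!/usr/bin/env bash
# Hypothetical helper for step 13: copy a newline-delimited JSON instances file,
# setting the (assumed) "age" field of every instance to a new value.
set_age() {
  local src="$1" dest="$2" age="$3"
  python3 -c '
import json, sys
age = int(sys.argv[1])
for line in sys.stdin:
    if line.strip():  # skip blank lines
        row = json.loads(line)
        row["age"] = age
        print(json.dumps(row))
' "$age" < "$src" > "$dest"
}
```

For example, `set_age ../test.json ../test2.json 20` reproduces step 13's change, after which `../test2.json` can be passed to `gcloud ai-platform predict --json-instances`.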

https://cloud.google.com/sdk/gcloud/reference/ai-platform/jobs/submit/prediction

- step 14: submit a batch prediction job for a test_batch.json dataset with 11 rows for ages 20 to 70

gcloud ai-platform jobs submit prediction prediction1 --model census --version v1 --data-format text --region us-central1 --input-paths gs://dv-auto-ml-depp/test_batch.json --output-path gs://dv-auto-ml-depp/prediction1-output

- it is somewhat disconcerting that no message appears while the job sits in the QUEUED state for more than 5 minutes

nano test_batch.json
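The 11-row batch for step 14 can also be generated rather than typed in nano. Assuming ages 20 to 70 in steps of 5 (which gives exactly 11 rows) and all other features copied from the single-instance `test.json`, the hypothetical `make_age_batch` writes one JSON object per line, the newline-delimited layout that `--data-format text` reads. The equally hypothetical `submit_batch_prediction` uploads that file and submits the job with step 14's flags.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for step 14.

# Build a batch file from a one-instance template: ages 20, 25, ..., 70 (11 rows).
make_age_batch() {
  local template="$1" out="$2"
  python3 -c '
import json, sys
with open(sys.argv[1]) as f:
    row = json.loads(f.readline())
for age in range(20, 71, 5):  # 20, 25, ..., 70 -> 11 rows
    row["age"] = age
    print(json.dumps(row))
' "$template" > "$out"
}

# Upload the batch file and submit the batch prediction job used in step 14.
submit_batch_prediction() {
  local job="${1:-prediction1}" batch_file="${2:-test_batch.json}" bucket="${3:-gs://dv-auto-ml-depp}"
  gsutil cp "$batch_file" "$bucket/test_batch.json"
  gcloud ai-platform jobs submit prediction "$job" \
    --model census \
    --version v1 \
    --data-format text \
    --region us-central1 \
    --input-paths "$bucket/test_batch.json" \
    --output-path "$bucket/$job-output"
}
```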

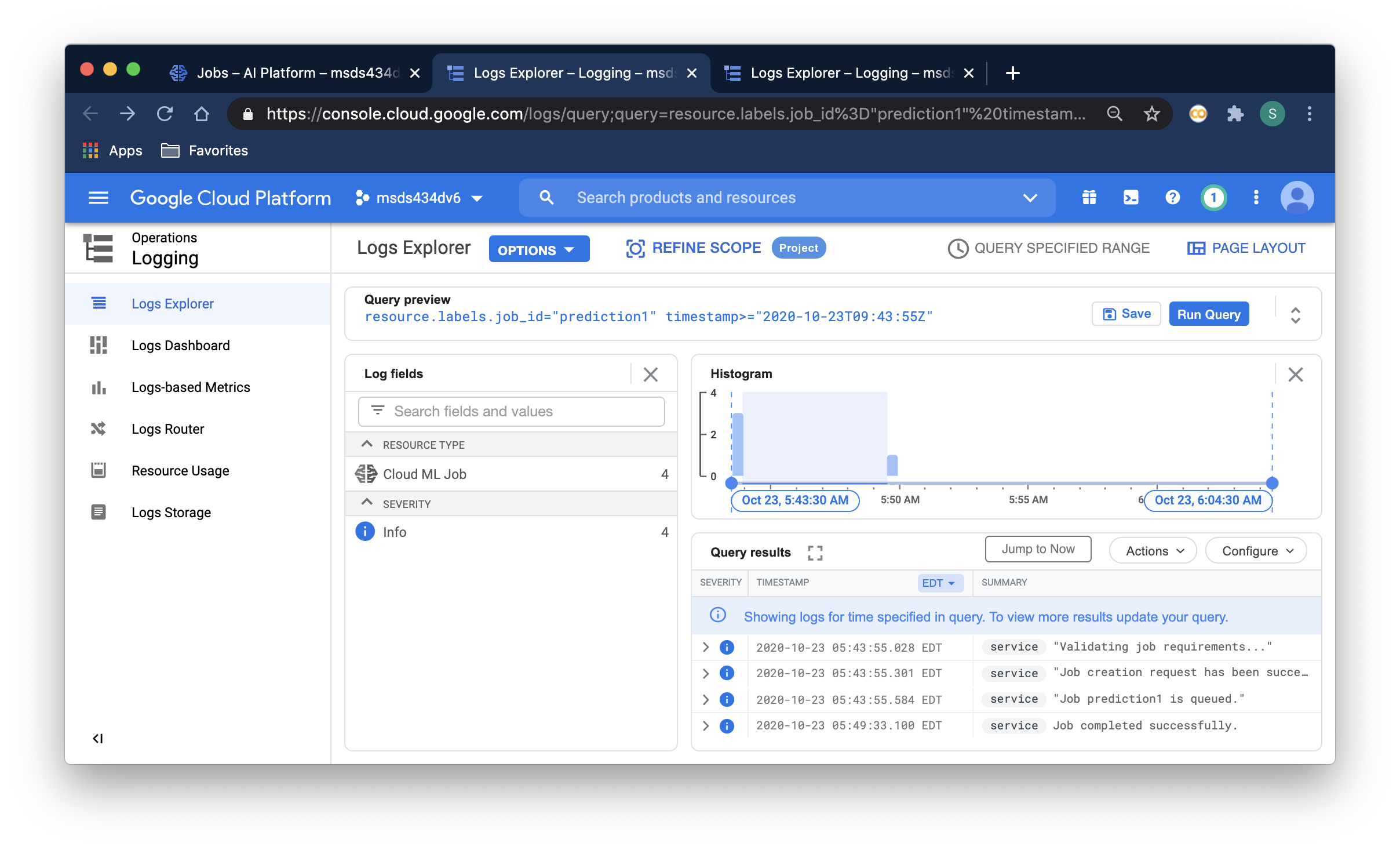

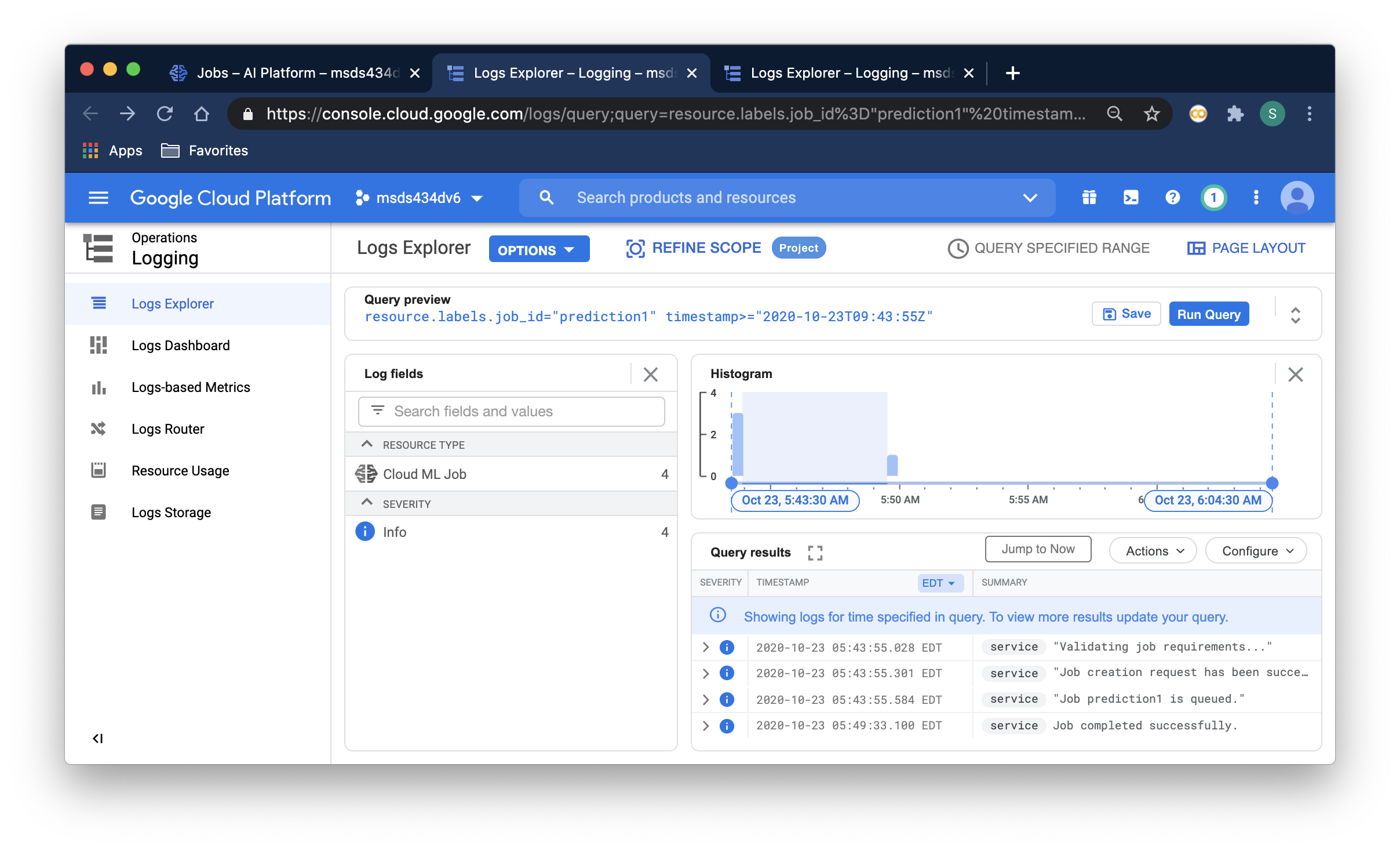

- step 14 continued: Look at the GCP logs for this job


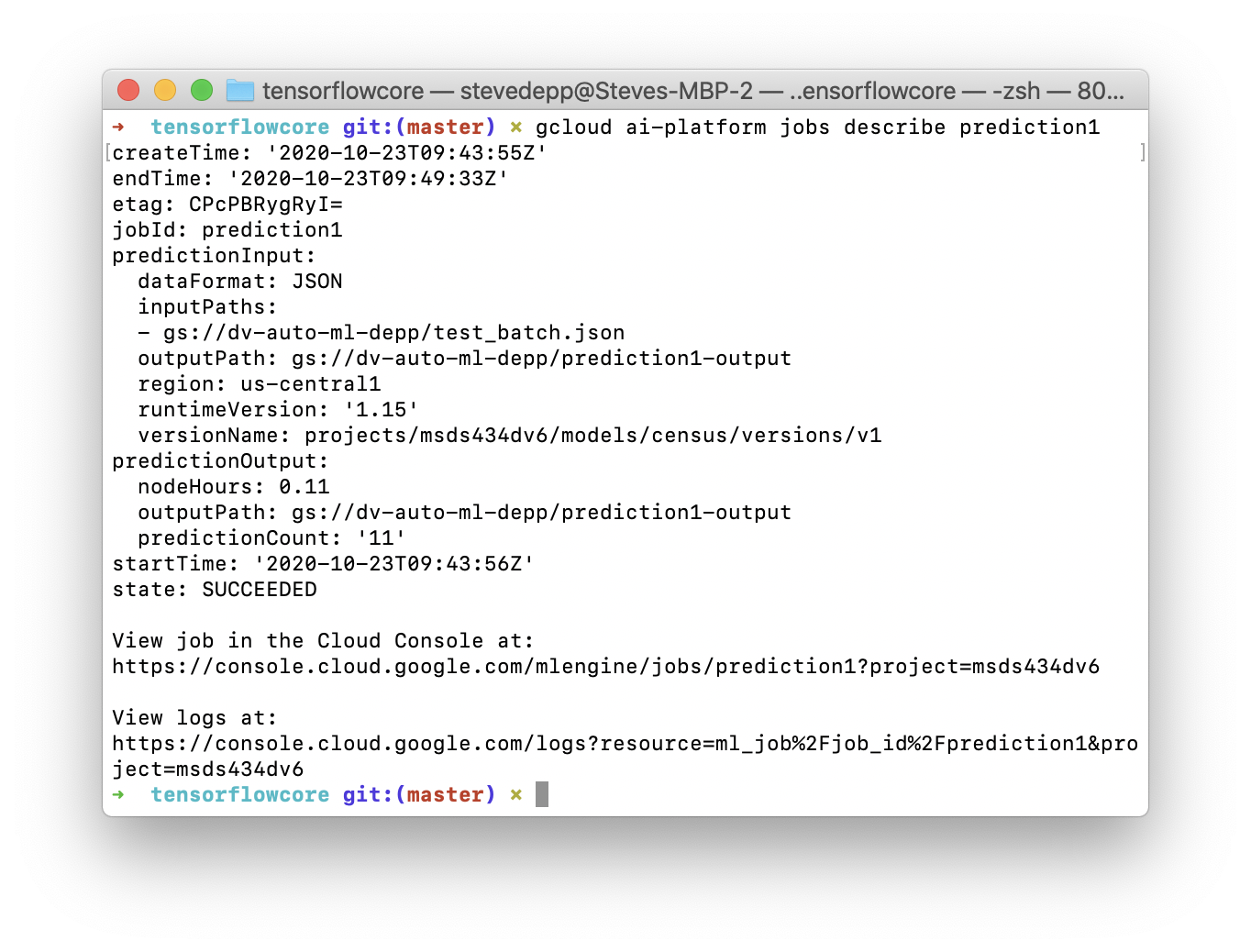

- step 15: review the prediction job output in the bucket and the description of the job

gsutil ls gs://dv-auto-ml-depp/prediction1-output

gsutil cat gs://dv-auto-ml-depp/prediction1-output/prediction.results-00000-of-00001

gcloud ai-platform jobs describe prediction1
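For step 15, all result shards can be read in one go with a `gsutil cat` wildcard instead of naming `prediction.results-00000-of-00001` explicitly. A sketch, assuming bash and gsutil; the helper names are hypothetical. Batch prediction writes one output line per input instance, so for the 11-row batch `count_predictions` should report 11.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for step 15.

# Concatenate every prediction.results-* shard in a job's output folder.
read_prediction_results() {
  local output_dir="${1:-gs://dv-auto-ml-depp/prediction1-output}"
  # The wildcard is quoted so that gsutil, not the local shell, expands it.
  gsutil cat "$output_dir/prediction.results-*"
}

# Count the returned predictions (one JSON line per input instance).
count_predictions() {
  read_prediction_results "$@" | wc -l | tr -d ' '
}
```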

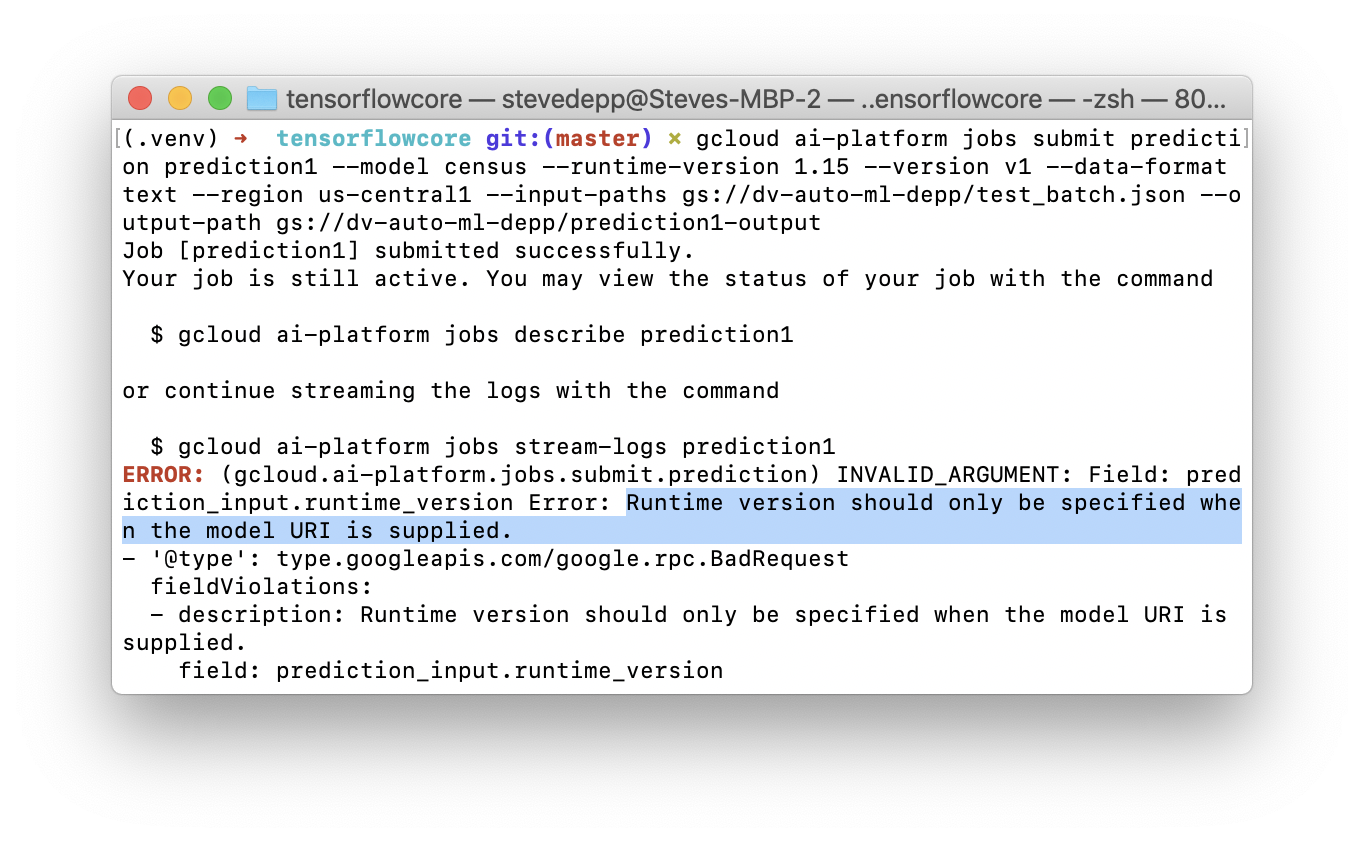

- note: gcloud ai-platform jobs submit prediction …

- again the documentation is a bit confusing: on the one hand, --runtime-version “must be specified unless --master-image-uri is specified”, while on the other hand the error message says “Runtime version should only be specified when the model URI is supplied” https://cloud.google.com/sdk/gcloud/reference/ai-platform/jobs/submit/prediction#--data-format

https://cloud.google.com/storage/docs/deleting-buckets

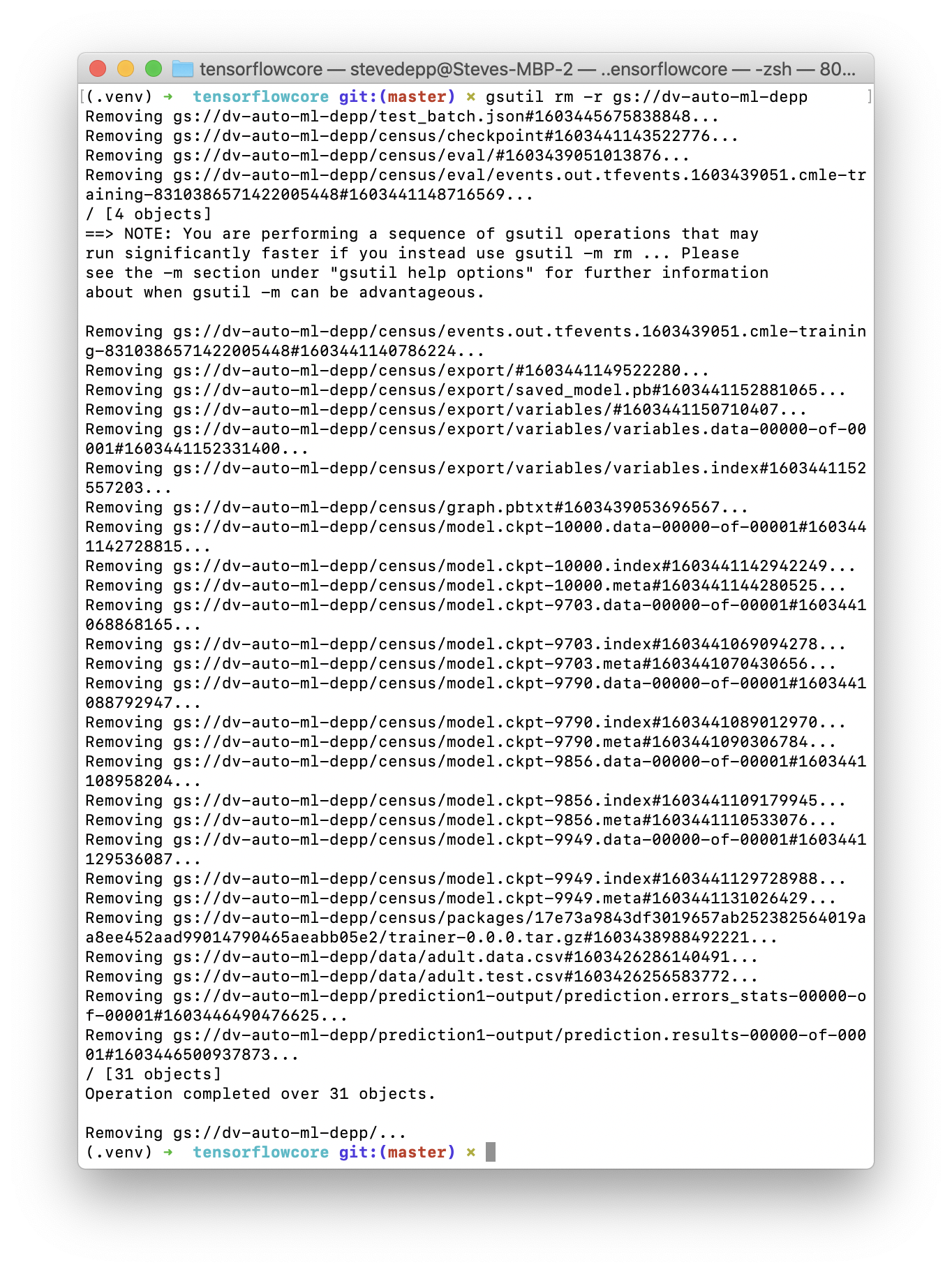

- step 16: don’t overpay: delete the bucket

gsutil rm -r gs://dv-auto-ml-depp

- alternatives:

- gsutil rm -r removes the bucket and all of its contents in one go; gsutil rb removes the bucket only if it is empty

https://cloud.google.com/sdk/gcloud/reference/ml-engine/versions/delete

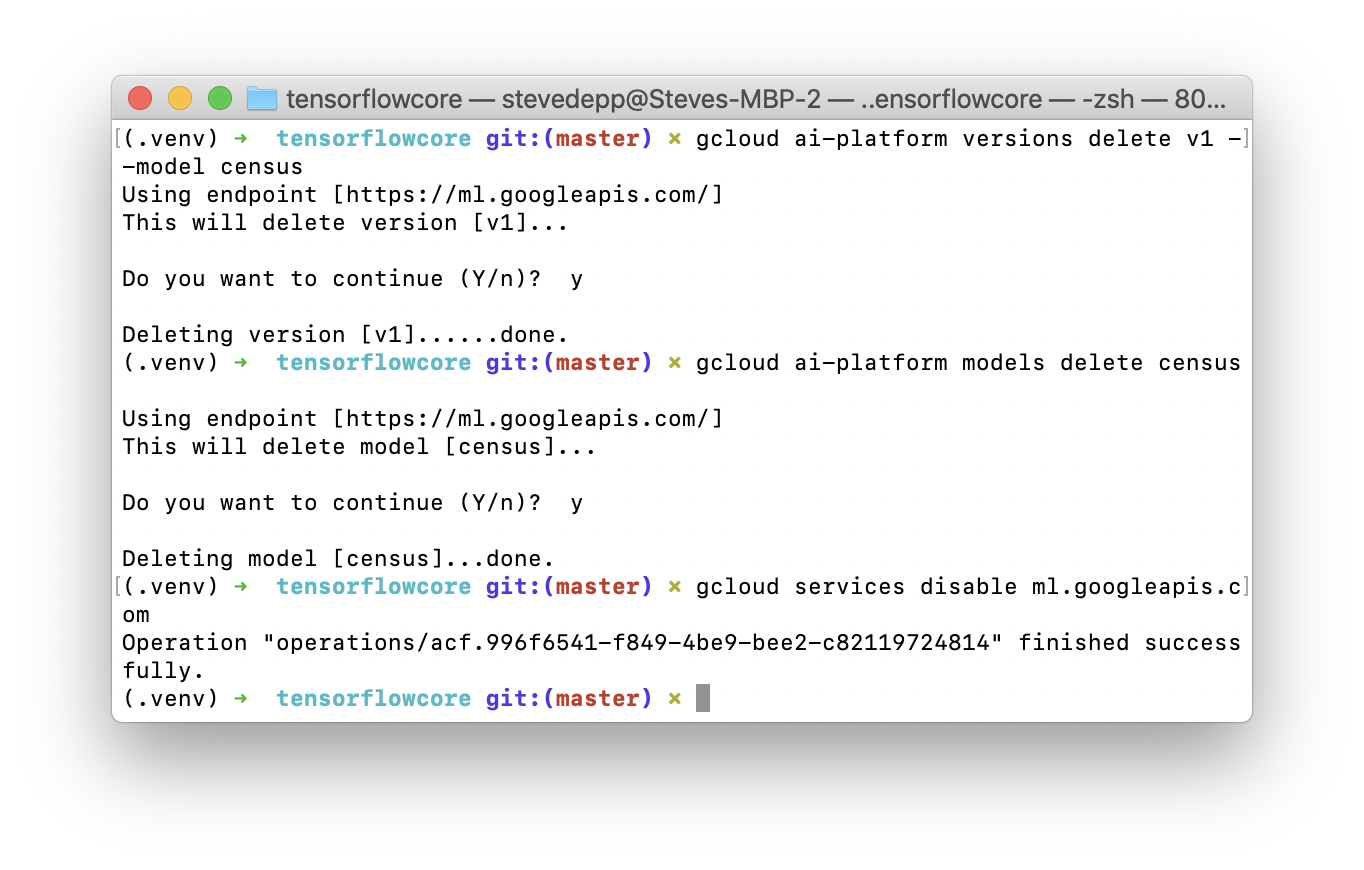

- step 16 continued: delete the version, then the model, then disable the API

gcloud ai-platform versions delete v1 --model census

gcloud ai-platform models delete census

gcloud services disable ml.googleapis.com
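The step 16 clean-up can be collected into a single teardown helper. A sketch, assuming bash; `teardown_census_demo` is hypothetical. It deletes the version and then the model (the order used in "step 16 continued"), then removes the bucket with everything in it and disables the API. The global `--quiet` flag suppresses gcloud's confirmation prompts, so check the names before running it.

```shell
#!/usr/bin/env bash
# Hypothetical helper for step 16: remove everything the demo created.
# --quiet skips gcloud's confirmation prompts, so double-check the arguments first.
teardown_census_demo() {
  local bucket="${1:-gs://dv-auto-ml-depp}" model="${2:-census}" version="${3:-v1}"
  gcloud ai-platform versions delete "$version" --model "$model" --quiet
  gcloud ai-platform models delete "$model" --quiet
  # rm -r deletes every object and then the bucket itself.
  gsutil rm -r "$bucket"
  gcloud services disable ml.googleapis.com --quiet
}
```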