-

-

Notifications

You must be signed in to change notification settings - Fork 1.2k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

AST struct transfer, serialization, deserialization performance enhancement #2175

Comments

|

Yeah, if we can improve performance of passing ast, I expect the js plugin api to be really useful. |

|

Maybe deserialize via https://github.com/rkyv/rkyv in the wasm and convert into JsObject will be more faster. |

|

@Brooooooklyn rkyv looks great, in particular the zero-cost deserialization would be very efficient for getting an AST into But I'm afraid I don't understand your point about WASM. Can you possibly elaborate? |

|

From benchmark results before, the performance bottleneck in this scenario is |

|

Thanks for quick reply. Are you suggesting that SWC would run as WASM in NodeJS, rather than via N-API? Or that you'd have a layer of WASM sitting in between JS and N-API? Please excuse me if I have the terminology wrong. As I mentioned above, this is my first experience of Rust or Node native module development, so I am really just feeling my way here... |

|

And maybe this is a really stupid idea but... Do we need to serialize at all? The AST already exists in Rust's memory in a binary format with a well-defined schema (the Rust types). Could that memory be wrapped in a JsBuffer (without copy), passed to JS, and a deserializer in JS would parse this buffer and convert it into JS objects? The JS deserializer could be generated programmatically from the Rust type definitions. For plugins, the deserializer could be lazy i.e. only deserialize sections of the buffer for AST nodes as and when they are accessed in the plugin's visitors. For plugins which only operate on a subset of AST nodes, the serialization + deserialization overhead could be avoided entirely for the majority of the AST. This sounds too good to be true. Am I totally misunderstanding what you can / can't do with memory across the JS/Rust boundary? |

simd_json has similar appraoch luizperes/simdjson_nodejs#28 |

I expect this to slow down operations by a margin. |

|

I think we can use binary ser/de library like protobuf, which already has js-based deserializer. |

Yes, there'd be no point in lazy-deserializing AST nodes if they're all going to be visited. What I had in mind is to modify the implementation of the plugin system so it minimizes the number of nodes accessed. For example, the I imagined it working something like this: Plugins would be objects rather than functions (more like Babel I suppose): const consoleStripperPlugin = {

visitor: {

CallExpression(e: CallExpression): Expression {

if (e.callee.type !== "MemberExpression") return e;

// ... etc ...

return e;

}

}

}

The plugin can complete its work without accessing the majority of the AST, therefore there's no need to deserialize most of it. Whatever the serialization format, deserialization in JS is going to be the slowest link in the chain, so it seems worthwhile to avoid as much of that work as possible. I don't know if you'd be open to changing how plugins work in this direction? Implementation in SWC is more complex, but I imagine could be much more performant and, from a consumer point of view, probably a bit more familiar from Babel. |

|

@kdy1 I tried using serde to serialize to bincode instead of JSON, but was getting errors. I'll give protobuf a go. Can anyone advise if my idea of using the binary representations of AST nodes in Rust's heap as the source for deserializing is (in theory at least) possible? Maybe it's a bad idea, but I'm curious as to whether it's in the realm of possibility, or whether it falls under "no, it doesn't work like that". |

|

Here is a similar discussion happened in Deno: denoland/deno#2121 |

|

deno used similar techniques to optimize in the past, but their structure is much simpler than the AST structure of SWC. |

|

Thanks very much for this. Really interesting. I'll dig into it. |

|

@overlookmotel Can you make a PR if it's good enough? |

|

@kdy1 Yes I'd be happy to. And thanks for offer of help with the Rust code. My plan was:

I'm very busy with day job, so this may all take me some weeks/months, but I'm really interested so will progress it whenever I get a chance. One question: In my mind, the ideal end point is:

i.e. aim of the game is compatibility with existing JS ecosystem, and for writing SWC plugins in JS to be a viable (and still fairly speedy) option rather than "if you don't know Rust, you can't do it". However, I'm new to the project, so I'm not aware what your vision/priorities for SWC are. Is the above compatible with the direction you want to take? |

|

Sorry, one other question: Are the Typescript type definitions in types.ts and Visitor class in Visitor.ts written by hand, or programmatically generated somehow from the Rust type definitions? If the latter, can you point me to the code which does this please? |

Yes, I think compatibility with babel plugin is the most important. But I don't expect js plugins to be speedy as rust plugins, because they can't be called in threads.

It's written by hand. I actually tried generating it, but I stopped because rust proc macro api is limited. |

That's great to hear. I think my direction is in line with yours then.

Ah of course, I hadn't thought of that. I suppose if plugins were specified as paths (i.e. Anyway, I have more questions and comments, but I think best for now I just start with step 1 and benchmark the potential serialization methods. I'll let you know any findings. I hope you don't mind if I come back with questions if I'm having trouble with the Rust. |

|

I absolutely do not mind :) |

|

There is another way, that is, we modify the fake AST in the node layer, and then generate the smallest instruction set and pass it to Rust. This is also a basic strategy of cross thread communication in the past. Its core point is to generate the minimum instruction set, anyway. |

|

@yisar I don't think it will work. Even if it's implemented, it will be very slow becuase such api means we have to cross language boundaries frequently. |

|

@kdy1 In fact, there is another another way, that is, If we can find a way so that Rust can communicate directly with V8 and bypass JS, which is also very good. I don't know if node can do it, but deno does it. https://github.com/denoland/serde_v8 However, please note that the structure of deno is very simple. It is usually just a queue containing function name and arguments. |

|

If using v8 api directly, we will lost ABI-stable which provided by Node-API |

|

I read this again, and I think the performance will be bad again if we fully imlplement the parser. JIT is very good for this kind of microbenchmarks. |

|

PS My intent is not to go nuts trying to squeeze every last drop of performance and posting about this forever. I feel like it's moving towards putting together a PR pretty soon. However, it does feel like there's some low-hanging fruit which could yield significant gains, so I'd be keen to try to resolve those things if possible. Unfortunately they're on the Rust side, so unlikely I can figure them out myself without a bit of help. |

Sorry for the interruption — I just read through most of this thread and wanted to say it's delightful to see how fast you went from "I don't know any Rust D:" to practicing the Dark Arts. (I actually came to suggest exactly that, but then noticed your link was broken and saw that you had already done it in the meantime.) I'm investigating swc for an imminent work project, and mostly what I was wondering is whether I can start using the released/documented version in a couple of weeks and upgrade relatively seamlessly down the road, or if I'll need to eventually rewrite my plugins in Rust. It sounds like the JS API will stay put, but any perf improvements over the status quo would be dependent on the work you're doing @overlookmotel (and aren't a big priority for the swc team in any event)? If there's anything I can do to help, please feel free to ping me! |

|

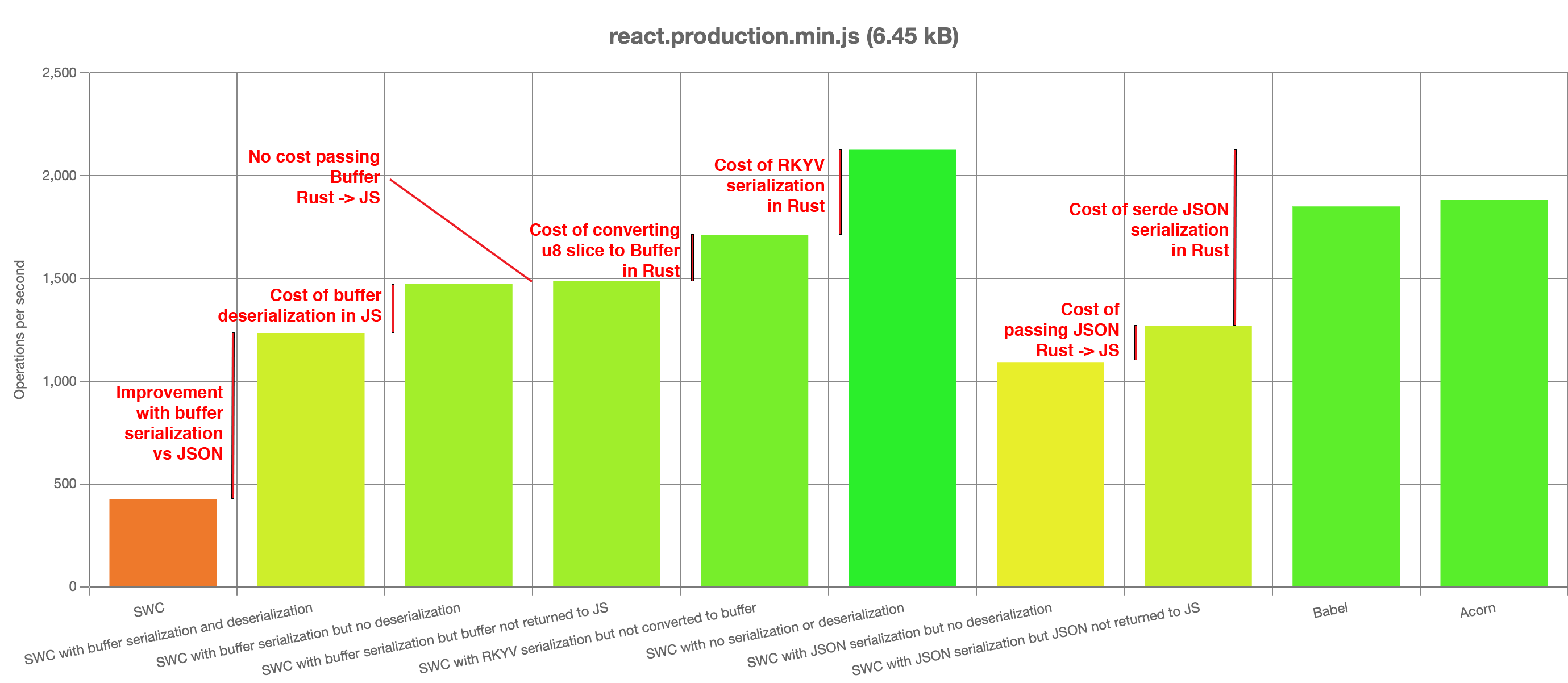

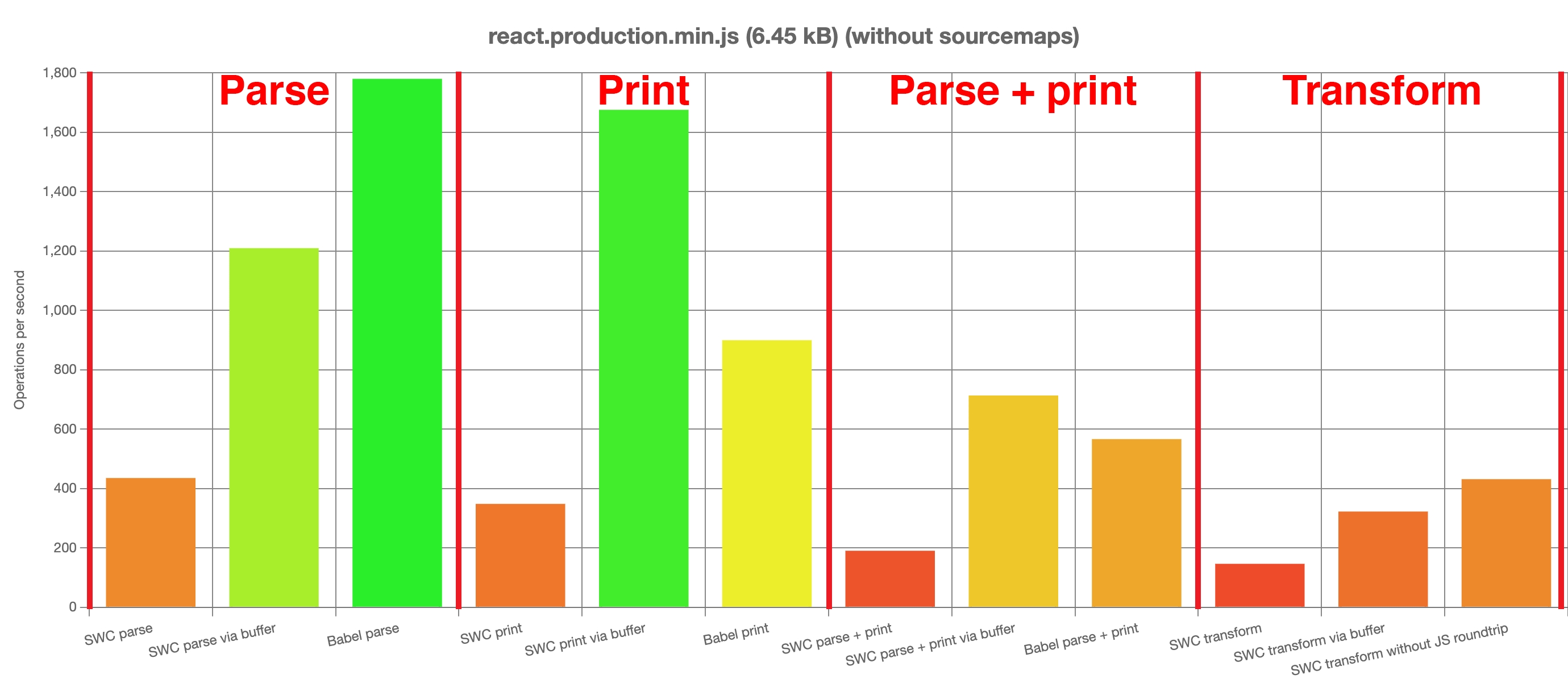

Some more progress: ParseI've speeded up the deserializer quite a bit. Buffer-based implementation is now 2.9x faster than current SWC. The Javascript side is now probably fast enough. The bottleneck is on Rust side:

I have also now implemented a serializer in JS and re-implemented For a round of parse-and-print, this new implementation is now faster than Babel. Finally! I've also prototyped using RKYV serialization/deserialization for passing AST in/out of JS for a JS plugin. There is still a sizeable penalty, but the gap is closing. NB This is a comparison of transform with JS plugin vs no plugin. I've not compared to a WASM plugin. But as most of the cost is in RKYV's serialization, I suspect it may be competitive. NB Benchmark below is without sourcemaps, but performance with sourcemaps enabled is much the same. AST implementationI've implementated JS Classes in serializer/deserializer. All that remains now is support for JSX and TypeScript. Update 9/7/22: JSX is implemented now too. Only TS remaining. Faster on M1The above benchmarks are on an Intel-based Mac. Interestingly, on an M1 Pro processor, the new implementation pulls ahead of current SWC even more (parse is 3.6x faster on M1 vs 2.9x on Intel). I guess M1 processors are optimized to run JS. Next stepsAlmost there... Can I ask for some help from the community at this point?

For someone who understands TypeScript, it should not be too difficult. It would involve:

If someone would be able to help with TypeScript, it would be a huge help in getting this over the finish line. Please please pretty please! Update 10/7/22: I've completed the TypeScript implementation. But it stills need tests. That's tricky for me to do because I have no idea what all these |

|

@dannymcgee Sorry for slow reply. Day job (which has nothing to do with compilers, or indeed programming) went nuts for a while, and then I got Covid! Well I still don't think I can say I know Rust! I'm not so much "practicing the dark arts" as flinging darkness at the compiler until something works. I am really enjoying getting to know Rust, but it's a slow process. My feeling is that in the end JS plugins can be made fast enough to be practical. However, the work discussed in this thread is only phase 1. There's potential to make them much faster than the above by implementing partial/lazy serialization/deserialization, and I think this could get them to the point of being almost as fast as WASM plugins in a lot of cases. However, there are some complications due to the fact that AST nodes cannot be repeated (i.e used more than once) in Rust, whereas they can in JS. So that needs to be handled. So overall, I do think it's possible, but I only have so much time to work on it. It could take a long time before it comes to fruition. If you do have some time to lend a hand, any help with the two points above would be massively appreciated. Implementing TypeScript support is now the main blocker on completing this "phase 1" work (I'm going to implement JSX, but I really don't want to do TypeScript). |

|

I'm not able to dig deeper into the findings & suggestions for now, just want to double clarify one thing: This issue is strictly trying to pursue serialization / deserialization + transfer performance improvement and would love to adapt any changes that can improve those. But this doesn't mean having this automatically SWC core team will try to pursue to enable JS plugin as first class citizen. Performance / ergonomics around JS plugin have a lot more aspects to consider, which we made a very careful and deliberate decision to pursue wasm plugin instead. I'm not going to say there's no future reevaluation at all or something, but also we don't have answers to a lot of questions if we want to revisit this. In short, I'd expect this issue will be the place for improving existing node bindings interfaces like |

|

@kwonoj Thanks for your swift reply. I totally understand your position and have largely avoided discussion of JS plugins for this reason. I only wanted to respond to @dannymcgee's question. My intent is to finish up this work and then open a new issue about JS plugins. But the impression I've got is that the best way to try to convince you and the other maintainers that it's even worth considering is to demonstrate some halfway-decent performance, since that was the main objection originally. So that's what I'm working towards. Just to repeat: I'm very clear that the maintainers don't see that at all as a priority, and consequently I don't expect any help. I hope you don't mind me communicating with others who have the same interest though. |

|

@overlookmotel Thanks for sharing context and understanding.

My impression is before thinking about any js plugin and other new discussion, it'd be great if there's working PR against to existing interfaces (

Yes sure, that's the whole purpose of keeping opening this issue for the tracking purpose. |

Absolutely. One question: If I get to the point where everything is done except TypeScript support, would you accept a PR for a more performant As you probably gathered from the above, I have very little appetite to implement TypeScript support, and part of me feels it would feel fair enough on an open source project to leave that as a follow-on for someone else who does have the appetite. I know that wouldn't be entirely ideal, but otherwise it's going to be the blocker for all the rest - could result in it taking many months to get to the PR stage, rather than weeks. |

I really can't say without having details, but it sounds like a possible path if there aren't regressions (by properly falling back to current execution path) & if we can replace into new implementation entirely in a reasonable timeframe. It is not uncommon for us to have several staged PR to make a new feature / refactoring to land. |

|

@overlookmotel just found out about this and want to say one thing: Thank you 🙇 This is hugely important work. For reference I built Tamagui which makes extensive use of babel to do all sorts of optimizations, but that has so much logic all over. The flexibility of JS is imperative to being able to support those features, it's a big technical advantage for shipping and there's still a bunch more that could be done. I had brought this idea up with babel folks but didn't get positive response, so very glad to see maybe I can port to SWC in the future. Specifically most of the features can be implemented with something like: traverse({

JSXElement() {}

CallExpression() {}

}) |

|

JS plugin will be dropped in v2 |

|

@kdy1 Can I ask if you could please re-open this? This issue relates to speeding up existing APIs - I've done a huge amount of work on this to date and it's close to the finish line. True, it has taken me a very long time, but please bear in mind that (a) I have had to learn Rust from nothing and (b) my day job has nothing at all to do with coding, so I can only work on it in my spare time which, due to the demands of my job, is very minimal for large chunks of the year. The implementation I have is working, and what I've been (slowly) working on more recently is making the codegen for the JS-side serializer/deserializer be generated from the Rust types automatically, so that it's future-proof for any changes to the Rust code. My intention with that is to ensure it's not a maintenance burden for you and the other maintainers. I really am trying to do this the right way. As the benchmarks above show, the approach I've taken makes significant performance improvements, and I would very much appreciate the opportunity to get this over the finish line. I hope you can reconsider. |

|

@kdy1 could we get some discussion on why the JS plugin being dropped / have a chance to discuss it somewhere? |

|

There were multiple discussions regarding plugins at https://github.com/swc-project/swc/discussions , though it is not a single umbrella discussion so you may need some archeology to find some details. In short summary, things like performances including serialization and runtime both, supporting various platforms like native cli / wasm host, ergonomics concerns of ast visitor that cannot replicate rust behavior 100%, ecosystem fragmentations. There is no such things like 100% impossible so we may revisit this one day depends on the circumstances, but for now we are focusing to improve current wasm based pluing improvements. |

|

For the cleanup purpose, I'm going to close this: not because of we will not trying to adapt suggestions in this issue, more of actionable perspective. Currently this issue does not have clear actionable item on @swc/core side and it's hard to keep the issue as opened indefinitely. If there are outcomes from this investigation as a PR, happy to accept it. Or either, if there are an actionable suggestions instead - please file an RFC. |

|

This closed issue has been automatically locked because it had no new activity for a month. If you are running into a similar issue, please create a new issue with the steps to reproduce. Thank you. |

As mentioned in #2123 and #1392,

parse()is currently quite slow.SWC's Rust parser is of course extremely fast, but the bottleneck is passing ASTs back into JS.

I've done some experiments, and believe I may have the beginnings of a solution to speed it up by 5x or more, enough so it out-performs Babel at least.

https://github.com/overlookmotel/swc-parse-test

The problem

There are 2 bottlenecks involved in transferring AST from Rust to JS:

JSON.parse())Potential solution

I've attempted to alleviate these pinch points by outputting a buffer (

JsBuffer) from Rust instead of a JSON string. The buffer is a binary serialization of the AST, and I've written a matching deserializer in JS.This has 2 advantages:

JsBuffercan be created in main thread's memory so passing it from Rust to JS is zero cost.The results appear to be pretty good.

Provisos

The implementation is only a proof of concept. So far it can only deal with

const x = 1;statements. I see no reason why it shouldn't maintain it's speed when support for all AST node types is included, but I could be wrong.It may also be naive. Until this weekend, I've never written a line of Rust before, so I may be totally missing a reason why it's not possible to integrate this approach into SWC.

The Rust code I've written is, for the same reason, quite embarrassingly bad. The JS code is also hacked together very quickly. So potentially it could get significantly faster with some optimization.

Questions

Does this seem like a promising direction?

If

parse()(andprint()) can be made much faster, might this make it feasible to maintain support for plugins written in Javascript?As mentioned above, I don't know Rust at all, so I have limited ability to help with an effort to flesh out these experiments into fully-fledged features. However, I would be very happy to help out on the JS side (the deserializer etc).

The text was updated successfully, but these errors were encountered: