Official implementation of

Accelerating Guided Diffusion Sampling with Splitting Numerical Methods (2023)

by Suttisak Wizadwongsa and Supasorn Suwajanakorn.

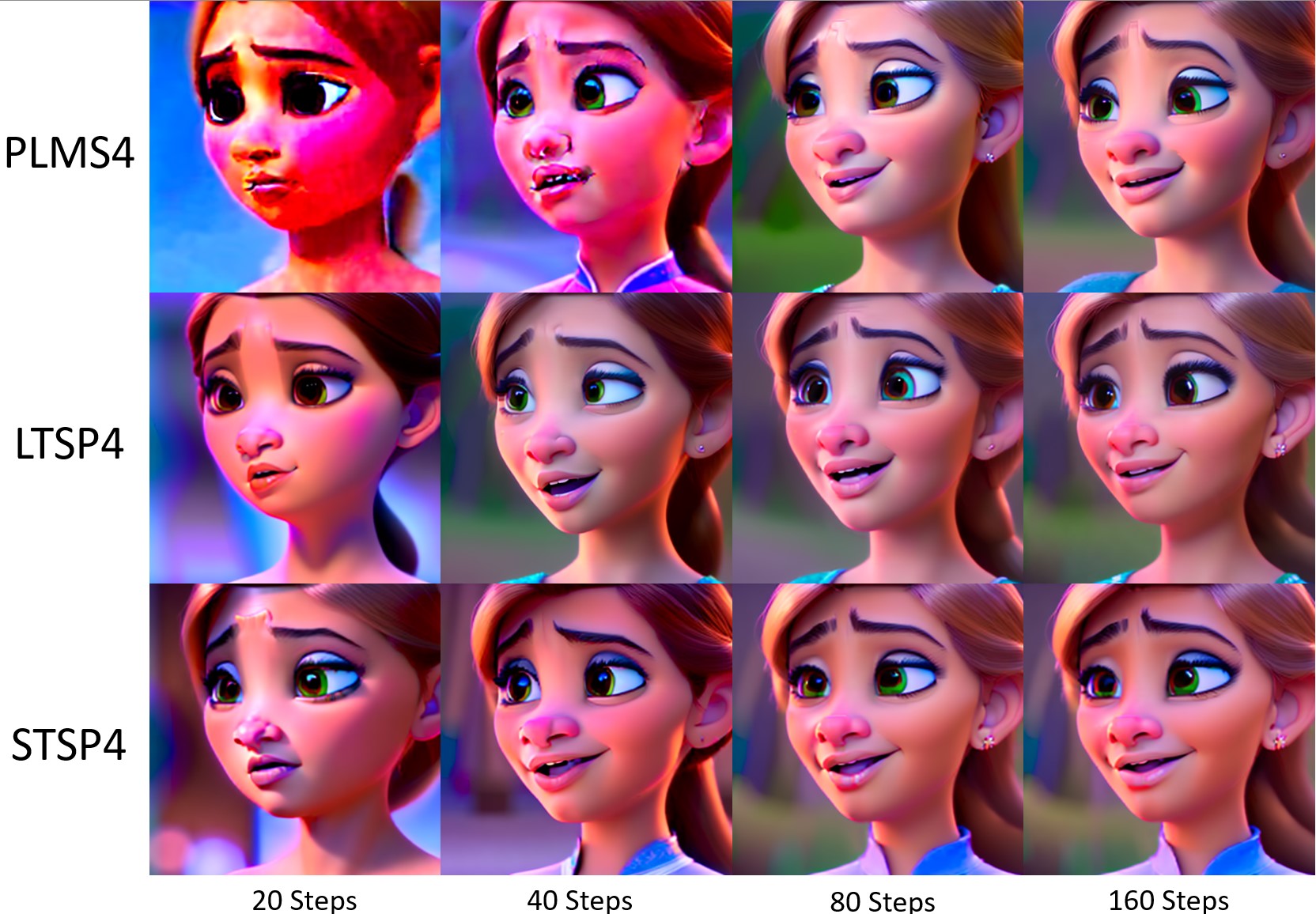

Abstract: Guided diffusion is a technique for conditioning the output of a diffusion model at sampling time without retraining the network for each specific task. One drawback of diffusion models, however, is their slow sampling process. Recent techniques can accelerate unguided sampling by applying high-order numerical methods to the sampling process when viewed as differential equations. On the contrary, we discover that the same techniques do not work for guided sampling, and little has been explored about its acceleration. This paper explores the culprit of this problem and provides a solution based on operator splitting methods, motivated by our key finding that classical high-order numerical methods are unsuitable for the conditional function. Our proposed method can re-utilize the high-order methods for guided sampling and can generate images with the same quality as a 250-step DDIM baseline using 32-58% less sampling time on ImageNet256. We also demonstrate usage on a wide variety of conditional generation tasks, such as text-to-image generation, colorization, inpainting, and super-resolution.
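The operator-splitting idea in the abstract can be illustrated on a toy problem that is independent of this codebase. The sketch below is a generic textbook example, not the paper's sampler: it splits the linear ODE dx/dt = (A + B)x into a rotation part A and a decay part B, solves each part exactly, and compares first-order Lie-Trotter splitting with second-order Strang splitting against the closed-form solution. The matrices, step sizes, and function names are illustrative assumptions.

```python
import math

# Toy linear ODE dx/dt = (A + B) x, split into two non-commuting parts:
#   A = [[0, -1], [1, 0]]  (rotation; exact flow rotates by angle t)
#   B = diag(-1, -2)       (decay; exact flow scales each coordinate)
# These matrices are illustrative choices, not taken from the paper.


def flow_a(v, t):
    """Exact flow of dx/dt = A x: rotate v by angle t."""
    x, y = v
    c, s = math.cos(t), math.sin(t)
    return (c * x - s * y, s * x + c * y)


def flow_b(v, t):
    """Exact flow of dx/dt = B x: decay each coordinate at its own rate."""
    x, y = v
    return (x * math.exp(-t), y * math.exp(-2.0 * t))


def lie_trotter(v, h, n):
    """First-order splitting: a full A step followed by a full B step."""
    for _ in range(n):
        v = flow_b(flow_a(v, h), h)
    return v


def strang(v, h, n):
    """Second-order splitting: half A step, full B step, half A step."""
    for _ in range(n):
        v = flow_a(flow_b(flow_a(v, h / 2), h), h / 2)
    return v


def exact(v, t):
    """Closed-form x(t) = exp(t M) v for M = A + B = [[-1, -1], [1, -2]].

    M has eigenvalues mu +/- i*omega with mu = -1.5 and omega = sqrt(0.75),
    and N = M - mu*I satisfies N @ N = -omega**2 * I, so
    exp(t M) = exp(mu t) * (cos(omega t) I + sin(omega t) / omega * N).
    """
    mu, omega = -1.5, math.sqrt(0.75)
    x, y = v
    nx, ny = 0.5 * x - y, x - 0.5 * y  # N @ v with N = [[0.5, -1], [1, -0.5]]
    c, s = math.cos(omega * t), math.sin(omega * t) / omega
    scale = math.exp(mu * t)
    return (scale * (c * x + s * nx), scale * (c * y + s * ny))


def global_error(method, h, t_end=1.0, v0=(1.0, 0.0)):
    """Euclidean error at t_end after integrating with step size h."""
    n = round(t_end / h)
    approx = method(v0, t_end / n, n)
    ref = exact(v0, t_end)
    return math.hypot(approx[0] - ref[0], approx[1] - ref[1])


if __name__ == "__main__":
    for h in (0.02, 0.01):
        print(f"h={h}: Lie-Trotter {global_error(lie_trotter, h):.2e}, "
              f"Strang {global_error(strang, h):.2e}")
```

Halving h should roughly halve the Lie-Trotter error and roughly quarter the Strang error. Presumably, in the paper's setting the two sub-problems are the unconditional diffusion update and the conditional (guidance) term, each handled by a solver suited to it; refer to the paper for the actual algorithm.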

This repository is based on openai/improved-diffusion and crowsonkb/guided-diffusion, with modifications to the sampling methods.

Clone this repository and run:

pip install -e .

This should install the Python package that the scripts depend on.

All checkpoints of diffusion and classifier models are provided in this.

For this code version, you need to download the pretrained models and set their locations in config.py.

The output directory can be changed in scripts/classifier_sample.py.

To sample, run, for example:

python scripts/classifier_sample.py --model=u256 --method=stsp4 --timestep_rp=20

Example --method options include stsp4, stsp2, ltsp4, ltsp2, plms4, plms2, and ddim.

Supported --model values:

- 128x128 model: --model=c128
- 256x256 model: --model=c256
- 256x256 model (unconditional): --model=u256
- 512x512 model: --model=c512
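Since several --method and --model values are available, a small driver can help compare them. The script below is a hypothetical helper, not part of this repository: it only assembles the classifier_sample.py command from the flags listed in this README and, unless dry_run is left on, launches each run with subprocess. It assumes it is run from the repository root after config.py points at the downloaded checkpoints; the helper names build_command and sweep, and the reading of --timestep_rp as the number of sampling steps, are assumptions.

```python
import subprocess
import sys

# Flag values copied from this README. Interpreting --timestep_rp as the
# number of sampling steps is an assumption, not documented behavior.
METHODS = ("stsp4", "stsp2", "ltsp4", "ltsp2", "plms4", "plms2", "ddim")
MODELS = ("c128", "c256", "u256", "c512")


def build_command(model, method, timestep_rp=20):
    """Hypothetical helper: argv list for one run of classifier_sample.py."""
    return [
        sys.executable,
        "scripts/classifier_sample.py",
        f"--model={model}",
        f"--method={method}",
        f"--timestep_rp={timestep_rp}",
    ]


def sweep(model, methods=METHODS, timestep_rp=20, dry_run=True):
    """Hypothetical helper: print each command, and run it if dry_run is False."""
    commands = [build_command(model, m, timestep_rp) for m in methods]
    for cmd in commands:
        print(" ".join(cmd))
        if not dry_run:
            # check=True stops the sweep if any sampling run fails.
            subprocess.run(cmd, check=True)
    return commands


if __name__ == "__main__":
    # Dry run by default: prints the commands without launching any sampling.
    sweep("u256")
```

Keeping dry_run on by default makes it cheap to inspect the commands before committing GPU time; pass dry_run=False to actually launch the runs one after another.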