Possible memory leak? #1567

Comments

Where are you suggesting the memory leak is coming from?

I'm not sure whether there is a memory leak. I've disabled the calls to this part of the code to see whether memory still increases. If it stops increasing, I would guess this code is the cause:

```typescript
const queue = await getQueue(queueName as QueueNames);
const completedJobs = await queue.getJobs(["completed"], 0, quantity, false);
const failedJobs = await queue.getJobs(["failed"], 0, quantity, false);
const waitingJobs = await queue.getJobs(["waiting"], 0, quantity, false);
```

How much memory does the instance you're hosting it on have?

This server is running with 2 GB of memory.

The graph you are showing above does not imply a memory leak. That's what memory looks like with a GC system like NodeJS. Unfortunately, it is in fact very difficult to find memory leaks in Node.
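
A rough way to separate the normal GC sawtooth from a genuine leak is to look only at heap usage measured right after a full collection: if that floor keeps climbing over many hours, memory is being retained. The helpers below are a hypothetical sketch, not part of Node or BullMQ; `heapSlope` and `looksLikeLeak` are illustrative names, and they assume the readings come from `process.memoryUsage().heapUsed` in a process started with `node --expose-gc`, calling `global.gc()` before each reading.

```typescript
// Least-squares slope of a series of heap readings, in bytes per sample.
// Assumption: each reading was taken right after a forced full GC, so the
// normal GC sawtooth is gone and only memory that survived collection remains.
function heapSlope(samples: readonly number[]): number {
  const n = samples.length;
  if (n < 2) return 0;
  const meanX = (n - 1) / 2; // x is the sample index 0..n-1
  const meanY = samples.reduce((sum, y) => sum + y, 0) / n;
  let num = 0;
  let den = 0;
  samples.forEach((y, x) => {
    num += (x - meanX) * (y - meanY);
    den += (x - meanX) ** 2;
  });
  return num / den;
}

// Hypothetical heuristic: call it a leak only if the post-GC heap keeps rising
// by more than `bytesPerSample` on average; the threshold is up to you.
function looksLikeLeak(samples: readonly number[], bytesPerSample: number): boolean {
  return heapSlope(samples) > bytesPerSample;
}
```

For example, `looksLikeLeak([100, 200, 300], 50)` is `true`, while an up-and-down series such as `[300, 100, 300, 100]` has a negative slope and is not flagged.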

In particular, the BullMQ code you are using in this example is quite unlikely to leak memory, as it consists of simple getters.

Thanks for the help with this. I hope the issue is just something I'm doing that is unrelated to BullMQ.

@manast What do you mean by "That's what memory looks like with a GC system like NodeJS"? I disabled uptimerobot, so nothing has called the code above for the last day. This is the memory graph:

It seems clear that this endpoint is likely causing the memory to increase. I agree it does seem odd that calling a few simple getters would cause this memory issue. I'll run another test. First, I will re-enable the uptimerobot calls, with no code changes, and see whether the memory growth resumes. If it does, I will remove the other code from the endpoint (shown below) and see whether that changes anything.

```typescript
app.get(
  "/queueStatus/:queue/:percentage/:quantity",
  wrapAsync<any, any, any, {queue: string; percentage: string; quantity: string}>(
    async (req, res) => {
      const {queue: queueName} = req.params;
      const quantity = 500;
      const queue = await getQueue(queueName as QueueNames);
      const completedJobs = await queue.getJobs(["completed"], 0, quantity, false);
      const failedJobs = await queue.getJobs(["failed"], 0, quantity, false);
      const waitingJobs = await queue.getJobs(["waiting"], 0, quantity, false);

      const totalJobCount = completedJobs.length + failedJobs.length;
      const completedJobCount = completedJobs.length;
      const failedJobCount = failedJobs.length;
      const waitingJobCount = waitingJobs.length;

      const statusCode = 200;
      const data = {
        totalJobCount,
        completedJobCount,
        failedJobCount,
        waitingJobCount
      };

      return res.status(statusCode).json(data);
    }
  )
);
```

Let me know if you would like me to run any other tests. I'll report back.
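
Since the endpoint only uses the `.length` of each list, a lighter variant could ask Redis for counts instead of loading up to `quantity` full `Job` objects per state on every check. The sketch below assumes BullMQ's `Queue#getJobCounts(...types)`, which resolves to an object keyed by state name; `JobCountSource`, `QueueStatus` and `getQueueStatus` are hypothetical names, and the structural interface is only there so the logic can be exercised without Redis.

```typescript
// Minimal structural type for the one getter used here. Assumption: a BullMQ
// Queue instance satisfies it via Queue#getJobCounts(...types).
interface JobCountSource {
  getJobCounts(...types: string[]): Promise<Record<string, number>>;
}

interface QueueStatus {
  totalJobCount: number;
  completedJobCount: number;
  failedJobCount: number;
  waitingJobCount: number;
}

// Fetches only the per-state counts instead of materializing Job objects
// just to read `.length` from each list.
async function getQueueStatus(queue: JobCountSource): Promise<QueueStatus> {
  const counts = await queue.getJobCounts("completed", "failed", "waiting");
  const completedJobCount = counts.completed ?? 0;
  const failedJobCount = counts.failed ?? 0;
  const waitingJobCount = counts.waiting ?? 0;
  return {
    // Same definition as the original endpoint: completed + failed.
    totalJobCount: completedJobCount + failedJobCount,
    completedJobCount,
    failedJobCount,
    waitingJobCount,
  };
}
```

Inside the route, the three `getJobs` calls would become `res.status(200).json(await getQueueStatus(queue))`. The numbers are not identical to the original: `getJobCounts` reports the full size of each state, while the `getJobs` calls are capped by the `0..quantity` range.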

Basically, if you suspect a memory leak in BullMQ, you should set up a test that is independent of third-party libraries such as Express, and the test must show the leak unequivocally. Determining whether there really is a memory leak is very time-consuming, so I need very clear proof before I look deeper into it.
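
A framework-free reproduction along those lines might look like the sketch below. It is not a BullMQ tool: `sampleHeap` and `HarnessOptions` are hypothetical names, and the caller supplies the operation under test (for example a single `queue.getJobs(["completed"], 0, 500, false)` call), a heap reader such as `() => process.memoryUsage().heapUsed`, and optionally `global.gc`, which Node only exposes when started with `--expose-gc`.

```typescript
// Hypothetical harness: run `op` many times with no HTTP framework involved,
// forcing a GC before each heap reading so only retained memory is measured.
interface HarnessOptions {
  op: () => Promise<unknown>; // e.g. one queue.getJobs(...) call
  readHeap: () => number;     // e.g. () => process.memoryUsage().heapUsed
  forceGc?: () => void;       // e.g. global.gc when run with --expose-gc
  batches: number;            // how many heap samples to take
  callsPerBatch: number;      // operations to run between samples
}

async function sampleHeap(opts: HarnessOptions): Promise<number[]> {
  const samples: number[] = [];
  for (let b = 0; b < opts.batches; b++) {
    for (let i = 0; i < opts.callsPerBatch; i++) {
      await opts.op();
    }
    opts.forceGc?.(); // skipped if GC is not exposed
    samples.push(opts.readHeap());
  }
  return samples;
}
```

If the returned samples keep climbing across many batches even though a full GC runs before each reading, that is the kind of evidence asked for here; a sawtooth in overall process memory is not.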

After more testing, I think the memory issue is caused by the server returning status code 500 errors and is unrelated to BullMQ. If I run the above code without the 500 errors, it works fine. Sorry for the false alarm.

@manast is it possible that not calling

I see. In my example above we call This means that we create a new queue instance every time the endpoint is called. In this case, should we close the queue or maybe call

You should only call
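
One way to avoid creating a new queue instance on every request, as described in the question above, is to create each named queue once and reuse it for the lifetime of the process. The helper below is a hypothetical sketch (`createInstanceCache` is not part of BullMQ); it only assumes the cached objects expose an async `close()`, which BullMQ's `Queue` does. Each `new Queue(name, { connection })` typically holds its own Redis connection when `connection` is given as options, which is why per-request construction without `close()` is worth avoiding.

```typescript
// Anything with an async close(), e.g. a BullMQ Queue.
interface Closable {
  close(): Promise<void>;
}

// Hypothetical cache: build each named instance once and hand back the same
// object on later calls instead of constructing a new one per request.
function createInstanceCache<T extends Closable>(factory: (name: string) => T) {
  const instances = new Map<string, T>();
  return {
    get(name: string): T {
      let instance = instances.get(name);
      if (instance === undefined) {
        instance = factory(name);
        instances.set(name, instance);
      }
      return instance;
    },
    // Close every cached instance, e.g. from a SIGTERM handler on shutdown.
    async closeAll(): Promise<void> {
      await Promise.all(Array.from(instances.values()).map((q) => q.close()));
      instances.clear();
    },
  };
}
```

Usage would be along the lines of `const queues = createInstanceCache((name) => new Queue(name, { connection }));` at module level, then `queues.get(queueName)` inside the route handler.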

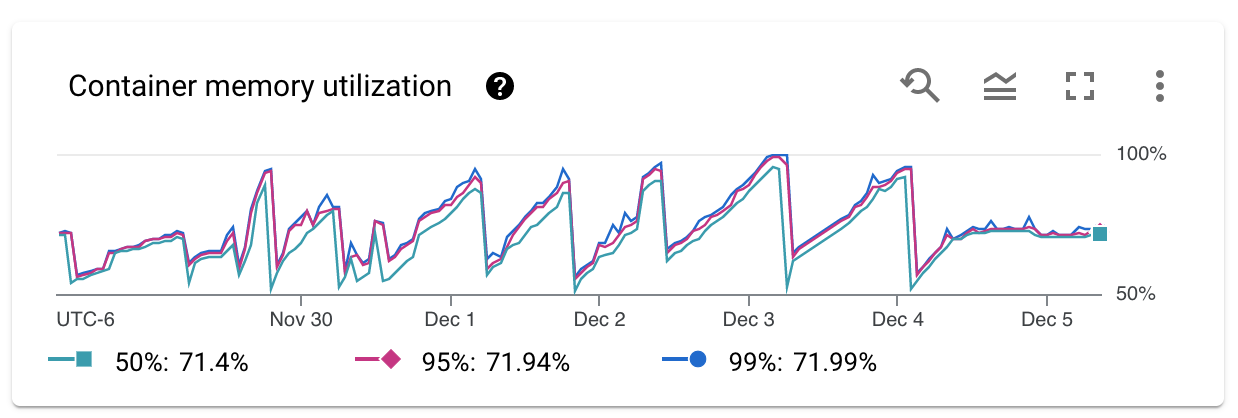

We added an endpoint, checked with uptimerobot.com, to track failures and so on. The code still needs tweaking, but the general idea is working well. However, we are seeing what looks like a memory leak.

Here's a graph of the server memory after deploying the code below. We have about 50 endpoints, each called once every 5 minutes.

Here is the endpoint code:

We are running Redis 6.2.6 and BullMQ 3.2.4.