
SSD Object Detection Mappings? #107

Comments

|

The 1917 are the predictions made relative to the box priors (anchor boxes) from the different layers in the model. The CoreML example strips preprocessing, box generation and postprocessing (such as non-maximum suppression) from the original model. However, the preprocessing can be achieved by creating the model with: What I need, though, is the specification of the anchor-box layout used when creating the TensorFlow model, so that I can correctly map coordinates in the box generation. Is there any information on that?
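To illustrate where 1917 comes from, here is a minimal Python sketch of SSD anchor generation. It assumes the default ssd_mobilenet_v1 settings for a 300x300 input (feature maps of 19, 10, 5, 3, 2 and 1 cells, min_scale 0.2, max_scale 0.95, aspect ratios 1, 2, 0.5, 3 and 0.3333, and only three boxes in the lowest layer), modeled loosely on the MultipleGridAnchorGenerator logic. The constants and function names are my own assumptions, not values read from the converted model. The count works out as 19·19·3 + (10·10 + 5·5 + 3·3 + 2·2 + 1·1)·6 = 1083 + 834 = 1917.

```python
import math

# Assumed ssd_mobilenet_v1 defaults for a 300x300 input (not read from the model).
FEATURE_MAP_SIZES = [19, 10, 5, 3, 2, 1]
MIN_SCALE, MAX_SCALE = 0.2, 0.95
ASPECT_RATIOS = [1.0, 2.0, 0.5, 3.0, 0.3333]


def box_specs():
    """Return, per layer, the list of (scale, aspect_ratio) pairs."""
    n = len(FEATURE_MAP_SIZES)
    scales = [MIN_SCALE + (MAX_SCALE - MIN_SCALE) * i / (n - 1) for i in range(n)]
    scales.append(1.0)  # extra scale used only for interpolation
    specs = []
    for layer in range(n):
        scale, scale_next = scales[layer], scales[layer + 1]
        if layer == 0:
            # The lowest layer gets only three boxes.
            specs.append([(0.1, 1.0), (scale, 2.0), (scale, 0.5)])
            continue
        layer_specs = []
        for ratio in ASPECT_RATIOS:
            layer_specs.append((scale, ratio))
            if ratio == 1.0:
                # Extra square box between this scale and the next one.
                layer_specs.append((math.sqrt(scale * scale_next), 1.0))
        specs.append(layer_specs)
    return specs


def generate_anchors():
    """Return anchors as normalized (y_min, x_min, y_max, x_max) tuples."""
    anchors = []
    for size, layer_specs in zip(FEATURE_MAP_SIZES, box_specs()):
        stride = 1.0 / size
        for row in range(size):          # top to bottom
            for col in range(size):      # left to right
                cy, cx = (row + 0.5) * stride, (col + 0.5) * stride
                for scale, ratio in layer_specs:
                    h = scale / math.sqrt(ratio)
                    w = scale * math.sqrt(ratio)
                    anchors.append((cy - h / 2, cx - w / 2, cy + h / 2, cx + w / 2))
    return anchors


print(len(generate_anchors()))  # 1917
```

Placing the interpolated square box directly after the 1.0 aspect ratio reproduces the anchors printed later in this thread for indices 1911 and 1914. Other versions of the Object Detection API may order the boxes differently, so exporting the anchors from the graph is still the safer option.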

|

Thanks for the tip. I tried adding the image_scale and biases to the convert method, but I still get the same output. I am using this example image from the SSD conversion notebook: Also, why does the Object Detection API example output numbers from 0 to 1, i.e. fractions of the input dimensions? That is much more sensible, so how are they doing that? It's the same model, no? The same happens in this iOS example, which uses TensorFlow Mobile: To me it looks like the boxRect vector contains non-negative, sensible locations that can simply be passed to drawRect. Maybe this repo will shed some light on how the boxes are created: But why is the conversion destroying the useful output of the TensorFlow ProtoBuf version? @vonholst are you able to make sense of the following NumPy arrays, with respect to the above DOG image used in the Object Detection API example?

Scoring: the same goes for the scores; shouldn't they be non-negative? When I look at the Object Detection API example, I see this: This makes sense: there are the top 100 probabilities and their classes. The classes are rounded floats, so there's no way to mistake them. I don't see any rounded floats in the output of the CoreML model, nor can I see any "probability"-looking values in the scores. This looks like garbage: @vonholst Do you understand how to interpret that NumPy array? Any help would be greatly appreciated.

|

@madhavajay I believe this "garbage" is what you get before applying some sort of activation function. The example has extracted only the feature generator; the complete TensorFlow graph contains the post-processing required to make sense of these numbers. coreml_box_encodings: "1": "2": "3": "4": "5": I don't know if this is correct, but the numbers add up. I don't know the shapes and sizes of these, though, and I also don't know which activation function makes sense of the numbers. As for coreml_scores, I would guess that a softmax makes sense of them.

|

Ah yes, that makes a lot more sense now. It might help to bold/emphasize the important line in the README: "The full MobileNet-SSD TF model contains 4 subgraphs: Preprocessor, FeatureExtractor, MultipleGridAnchorGenerator, and Postprocessor. Here we will extract the FeatureExtractor from the model and strip off the other subgraphs, as these subgraphs contain structures not currently supported in CoreML. The tasks in Preprocessor, MultipleGridAnchorGenerator and Postprocessor subgraphs can be achieved by other means, although they are non-trivial." @vonholst If this is something you are also trying to achieve in Swift, perhaps we can work together to figure it out? I guess the first step would be to create a working interpretation in Python, which can then be ported to any language. So we need to create a MultipleGridAnchorGenerator and a Postprocessor? Do you have any idea how we can get both the scores AND the class numbers from the score output? My idea was to feed the same input image to both the original ssd_mobilenet_v1_android_export ProtoBuf model and the feature-extracted CoreML model, so that as we apply mappings and transformations we can compare against something meaningful. Also, if anyone on the Google team could shed any light, that would be appreciated! 👍

|

@madhavajay Yes, I am trying to get this working in CoreML as well. I have run a similar YOLO version on MobileNet in CoreML, and I would like to see how this compares to that implementation. I think much of the post-processing is equivalent, but I need to decode the boxes. As for the classes, I expect that they follow the order of the txt file. And I think you can get the scores by applying a simple softmax. I think we could make a guess at the default boxes generated by the SSD method in the following:

|

I did a lot of work on this over the weekend, and I now have a working understanding of the outputs produced by the converted CoreML model. I'll try to walk through what I found; please let me know if anything is unclear. Anyway, the CoreML model outputs two

Here, 91 refers to the index of the class labels (0 = background, 18 = dog). There are also a total of 1917 anchor boxes.

Postprocessing

The postprocessing goes like this:

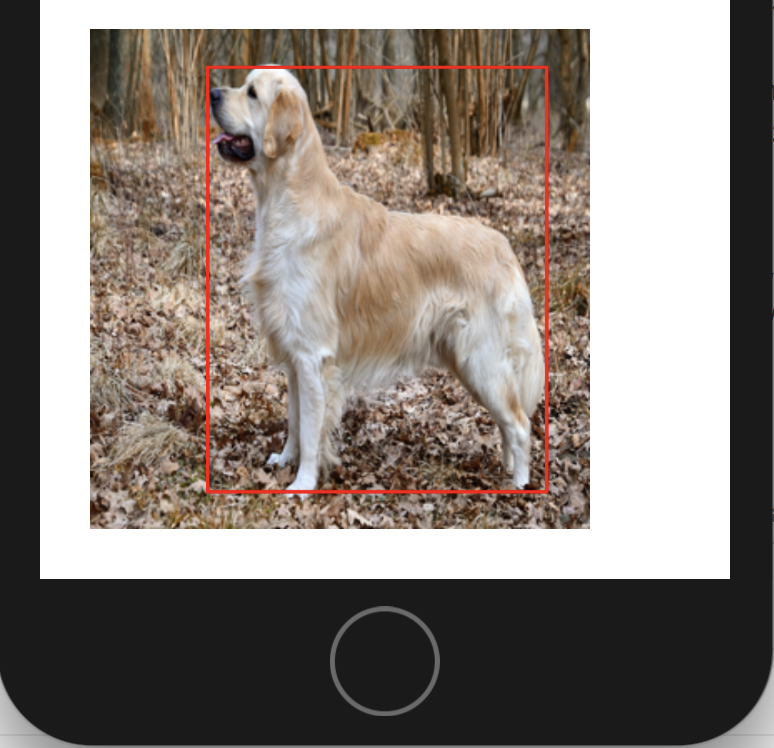
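One simple way to get a class and a score for each anchor is to take, per anchor, the highest-scoring class while skipping the background class at index 0. This is a minimal sketch, assuming the scores have already been reshaped into a nested list indexed as scores[class][anchor] (91 × 1917); the layout and the function name are illustrative assumptions. Keeping only the best class per anchor is a simplification; a per-class NMS would keep candidates for every class.

```python
def best_class_per_anchor(scores):
    """Pick the best non-background class for every anchor.

    `scores[c][k]` is the raw score of class c for anchor k, with class 0
    assumed to be background. Returns one (class_index, score) per anchor.
    """
    num_classes, num_anchors = len(scores), len(scores[0])
    results = []
    for k in range(num_anchors):
        column = [scores[c][k] for c in range(1, num_classes)]
        best_score = max(column)
        best = column.index(best_score) + 1  # +1 because background was skipped
        results.append((best, best_score))
    return results


# Tiny made-up example: 3 classes (background + 2) and 2 anchors.
print(best_class_per_anchor([[5.0, 0.0], [1.0, 2.0], [3.0, -1.0]]))
# [(2, 3.0), (1, 2.0)]
```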

Now, the non-maximum suppression part is pretty easy to understand. You can read about it here, but the basic gist is that you sort the boxes by score in descending order, then weed out any box that overlaps more than 50% with a more highly scored box.

The trickiest part here is computing the bounding boxes. To do that, you need to take the output of the CoreML model and use it to adjust a base set of anchor boxes. This set of 1917 anchor boxes tiles the 300x300 input image. The output of the k-th CoreML box is: You take these and combine them with the anchor boxes using the same routine as this Python code. Note: you'll need to use the scale_factors of 10.0 and 5.0 here.

Now, the anchor boxes themselves are generated using this logic. I followed the logic to the bitter end, but instead of trying to reimplement it in Swift, I just exported the anchors out of the TensorFlow graph from the … The logic for combining the box prediction and the anchor boxes is written up here.

Hope this helps! Again, this was a lot of blood, sweat, and tears, reading a ton of TensorFlow code and going through all of the logic. Honestly thought I would stab my eyeballs out. 😭 In the end, I was able to reproduce the bounding box for the golden retriever:
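The box-combining step can be sketched as follows. This is a minimal Python version of what I understand the Object Detection API's box-coder decode to do, assuming each encoding is ordered (ty, tx, th, tw), as in the anchorEncoding printouts later in the thread, and that anchors are (y_min, x_min, y_max, x_max) in normalized coordinates. The function name is hypothetical.

```python
import math

# Assumed (y, x, h, w) scale factors: 10.0 for the centers, 5.0 for the sizes.
SCALE_FACTORS = (10.0, 10.0, 5.0, 5.0)


def decode_box(encoding, anchor, scale_factors=SCALE_FACTORS):
    """Decode one (ty, tx, th, tw) encoding against one anchor.

    Both `anchor` and the result are (y_min, x_min, y_max, x_max) in
    normalized image coordinates.
    """
    ty, tx, th, tw = (t / s for t, s in zip(encoding, scale_factors))
    a_ymin, a_xmin, a_ymax, a_xmax = anchor
    ha, wa = a_ymax - a_ymin, a_xmax - a_xmin
    cya, cxa = a_ymin + ha / 2, a_xmin + wa / 2

    # Shift the anchor center, then scale its height and width exponentially.
    cy, cx = ty * ha + cya, tx * wa + cxa
    h, w = math.exp(th) * ha, math.exp(tw) * wa
    return (cy - h / 2, cx - w / 2, cy + h / 2, cx + w / 2)


# A zero encoding returns the anchor unchanged (up to float rounding).
print(decode_box((0.0, 0.0, 0.0, 0.0), (0.1, 0.2, 0.5, 0.6)))
```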

|

@vincentchu: Big thanks. I had the same strategy, minus the time, so I am glad you shared your solution. It saved me a weekend. If I can find the time, I will implement the generator in Swift, so it will be more convenient to support other configurations. Thanks again.

|

@vincentchu Out of curiosity, how did you find the scale_factors = [10.0, 10.0, 5.0, 5.0] needed by KeypointBoxCoder?

|

@vincentchu you are a legend!!! 👍 I read the SSD paper, and my next task was to compare the outputs side by side and see if I could find the implementation of the boxes, but exporting the values from the graph makes a lot more sense. Are you able to explain how you did that? I tried loading the protobuf in TensorBoard but couldn't see how to actually view the post-processing step that modifies the values. I think reverse engineering the inputs and outputs of exported graphs is a really important skill, particularly when converting models between formats. Thanks so much for your hard work; I am going to try your Swift code and see what I can get working. 🎉

|

@madhavajay @vonholst I spent most of my time inside an IPython notebook, just dumping the contents of tensors and using TensorBoard. I cleaned it up and added some comments; you might be interested in checking out this notebook. In it, I:

In terms of exporting values out of the graph, it's not super sophisticated. I just evaluate the tensor I want to dump, and then I literally code-genned the Swift code and
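A minimal sketch of such a code-gen step, assuming the anchor tensor has already been evaluated into Python floats (for example with a TF1 session.run) as (y_min, x_min, y_max, x_max) tuples. The helper name and the Swift tuple-array layout are hypothetical choices, not vincentchu's actual output format.

```python
def to_swift_array(name, boxes, fmt="{:.6f}"):
    """Render 4-tuples of floats as a Swift array-of-tuples literal."""
    rows = ",\n".join(
        "    (" + ", ".join(fmt.format(v) for v in box) + ")" for box in boxes
    )
    return f"let {name}: [(Float, Float, Float, Float)] = [\n{rows}\n]"


print(to_swift_array("anchors", [(0.025, 0.025, 0.975, 0.975)]))
```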

|

That's awesome, this is what I was going to do, but you have done the work, so now I can play with the notebook and see how it's done! 👍 If you have a blog, this would make a really, really super awesome blog post. Thanks so much for sharing your hard work!

|

@vincentchu Just tried the code. The first issue was a possible range issue, and it crashed: Maybe it's best to use the ..< range operator, or alternatively provide it as a default of 90 inside the class? Thoughts?

|

@vincentchu I am seeing some weird scoring. Do we need to apply a softmax like @vonholst suggested? For example, score: 1.3203125? It also seems to have trouble recognizing things: I am seeing stop signs okay, but many other objects fail to be recognized.

Prediction(klass: 65, index: 1911, score: 0.5908203125, anchor: OpenCV91.BoundingBox(yMin: 0.025000009685754776, xMin: 0.025000009685754776, yMax: 0.97500002384185791, xMax: 0.97500002384185791, imgHeight: 300.0, imgWidth: 300.0), anchorEncoding: OpenCV91.AnchorEncoding(ty: 0.07094370573759079, tx: -0.027406346052885056, th: 0.076941326260566711, tw: 0.13402754068374634))

Prediction(klass: 65, index: 1914, score: 1.3203125, anchor: OpenCV91.BoundingBox(yMin: -0.17175143957138062, xMin: 0.16412428021430969, yMax: 1.1717514991760254, xMax: 0.8358757495880127, imgHeight: 300.0, imgWidth: 300.0), anchorEncoding: OpenCV91.AnchorEncoding(ty: 0.019769947975873947, tx: 0.005826219916343689, th: -1.5519936084747314, tw: 2.0325460433959961))

Also, I couldn't see any way you were returning the object class information, so I added the klass property to the Prediction struct and passed it through in the initializer like so:

|

@madhavajay It should be … The array, however, has 91 slots because I want the indexing to match the labels instead of having to remember to add an offset everywhere.

|

@vincentchu Okay, well, 1...90 and 1..<91 are the same thing, so either way works. 👍

|

@madhavajay In terms of scoring, I don't think you necessarily have to transform the scores. You can just treat them as "higher = better". So you start with anything … Then I think you could just take, say, the top 20 of those candidates by score...
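The "higher = better" filtering can be sketched as a threshold followed by a top-k cut. The threshold value in the comment above did not survive, so `threshold` here is a placeholder parameter, and the (score, class_index, anchor_index) tuple layout is an assumption.

```python
def top_candidates(predictions, threshold=0.0, k=20):
    """Keep predictions scoring above `threshold`, best first, at most `k`.

    Each prediction is a (score, class_index, anchor_index) tuple; the
    threshold is a placeholder value, not one taken from the model.
    """
    kept = [p for p in predictions if p[0] > threshold]
    kept.sort(key=lambda p: p[0], reverse=True)
    return kept[:k]


print(top_candidates([(0.5, 1, 0), (2.0, 3, 1), (-1.0, 2, 2), (1.0, 1, 3)], k=2))
# [(2.0, 3, 1), (1.0, 1, 3)]
```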

|

Okay, but what if I want to convert them to a scale of 0 -> 100%?

|

@madhavajay Yes, you will have to process them further. I didn't dig too much into it, but I think the loss function is here. My impression is that the logits are per anchor box, so you'd normalize the logits across classes for a fixed box index?

|

@madhavajay Then you might as well apply a sigmoid. In the config there are some parameters related to score thresholds, and they mention sigmoid as the score converter. I'm not sure exactly how this config is used in model generation, though.
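Both score conversions discussed here are short. In this minimal sketch, `sigmoid` converts each class score independently (the score converter the config mentions), while `softmax` normalizes one anchor's scores across all classes. Which one matches the trained model is an assumption to verify against the original graph.

```python
import math


def sigmoid(logit):
    """Map one raw class score to (0, 1); written to avoid exp overflow."""
    if logit >= 0:
        return 1.0 / (1.0 + math.exp(-logit))
    z = math.exp(logit)
    return z / (1.0 + z)


def softmax(logits):
    """Normalize one anchor's raw scores across classes so they sum to 1."""
    m = max(logits)  # subtracting the max keeps exp() from overflowing
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


print(sigmoid(1.3203125))
```

With the sigmoid, the raw score of 1.3203125 quoted earlier maps to roughly 0.79.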

|

@vincentchu @vonholst Great, I will look into this soon, and if I can figure it out I'll post here! :) I am still seeing some strange output with the bounding boxes, though:

Prediction(klass: 13, index: 1914, score: 1.28515625, anchor: OpenCV91.BoundingBox(yMin: -0.17175143957138062, xMin: 0.16412428021430969, yMax: 1.1717514991760254, xMax: 0.8358757495880127, imgHeight: 300.0, imgWidth: 300.0), anchorEncoding: OpenCV91.AnchorEncoding(ty: -0.01125398650765419, tx: 0.0046060904860496521, th: -1.5216562747955322, tw: 2.0175662040710449))

ACCEPTED klass: 13 label: stop sign

The CGRect I get is: Negative values again? Also, if the input image is 300x300, how am I getting a height over 300?
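Negative values and sizes over 300 are plausible before clipping: the anchor at index 1914 already spans y from about -0.17 to 1.17, and a large positive tw widens the decoded box further, so it can extend past the image edges. Below is a minimal sketch of clipping a normalized box to [0, 1] before scaling it to pixels, assuming a 300x300 input. As far as I can tell the TensorFlow postprocessor also clips boxes to the image window, but treat that as an assumption. The function name is hypothetical.

```python
def to_pixel_rect(box, width=300.0, height=300.0):
    """Clip a normalized (y_min, x_min, y_max, x_max) box to [0, 1] and
    return (x, y, w, h) in pixels, the same fields a CGRect uses."""
    y_min, x_min, y_max, x_max = (min(max(v, 0.0), 1.0) for v in box)
    return (x_min * width, y_min * height,
            (x_max - x_min) * width, (y_max - y_min) * height)


print(to_pixel_rect((-0.17, 0.16, 1.17, 0.84)))
```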

|

@vincentchu Also, any chance you could share your example Xcode project so I can debug mine against your working version? 😊

|

@madhavajay @vincentchu I used the code provided by vincentchu and applied a sigmoid to the scores to bound them to (0, 1). I think there are some issues with the non-max-suppression algorithm (it not being implemented is the main issue, I guess 😊). I used hollance's implementation. If we want to discuss this further, I think we should continue in another forum, since the issues here should mainly focus on functional issues with tf-coreml. Another tip: hollance has some other useful code for handling MLMultiArrays and object detection that might speed up your code as well. I used those for other implementations.

|

@vonholst Amazing! The NMS should be pretty easy: we just have to write an implementation to compute the IOU (Jaccard index) of two boxes, then weed out any box that has >50% overlap with another, higher-scoring box. Could I ask a favor? This is actually my first iOS learning project, so I think I would benefit quite a bit from seeing your code. I was trying to get this to work in real time, rather than just on the golden retriever picture, and am having some struggles. It would help me a ton if I could look at how you did the real-time inference. Maybe you could create a new repo with the code, and we could submit PRs to improve/implement the NMS and have our discussion there?
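The NMS described above can be sketched as a greedy loop: visit boxes from highest to lowest score and keep a box only if its IOU with every already-kept box is at most 0.5. This is a minimal Python sketch, assuming (y_min, x_min, y_max, x_max) boxes; whether to run it separately per class is left open here.

```python
def iou(a, b):
    """Intersection over union (Jaccard index) of two (y_min, x_min, y_max, x_max) boxes."""
    inter_h = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_w = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_h * inter_w
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: return indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep box i only if it does not overlap too much with any kept box.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


boxes = [(0.0, 0.0, 1.0, 1.0), (0.0, 0.0, 1.0, 0.9), (2.0, 2.0, 3.0, 3.0)]
print(non_max_suppression(boxes, [0.9, 0.8, 0.7]))  # [0, 2]
```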

|

@vonholst @vincentchu Yes, I am also trying to implement this as a working camera demo and am having some similar issues. It would be great if we worked on a shared git repo to provide a working implementation for iOS and CoreML. @vonholst do you mind committing your code to a repo and putting a cut-down demo app in there that we can all iterate on?

|

Absolutely. I will need to clear some stuff from the code because some parts were developed for a client. I will try to make a clean project that I can safely share. You have most of the code, though; I just filled in the blanks in vincentchu’s code. Hollance also has some complete Xcode projects with NMS implemented if you are in a hurry. Otherwise, I will fix this as soon as I can.

|

@vincentchu @madhavajay I created a clean (still kinda messy) project that I can share. We can continue the discussion there if there is any trouble: SSDMobileNetCoreML

|

Awesome! 🎉

|

@vonholst @madhavajay @vincentchu Thank you so much! This is the only detailed solution I found on the internet for using a TensorFlow SSD model with CoreML, and it has indeed been a great help!

|

@vonholst and @vincentchu did all the work; I just started the issue. 🤓 Also, for anyone else: Andrew Ng's Deep Learning CNN series covers the YOLO algorithm, sliding windows and non-max suppression really well: Anyone attempting to understand the output of the SSD should watch those videos to understand what the output vectors really contain.

|

@vonholst @vincentchu Hey guys, there are no issues on the example repo: I was tracking the TF Lite progress here: From andrewharp, the developer at Google: It seems like there might be a chance to reuse the post-processing from the SSDMobileNet_CoreML demo and add some preprocessing to get the TF Lite iOS demo working.

|

@madhavajay Interesting! I don’t have a device at hand to try the Android implementation, but it seems much of the iOS code should be similar. I'm swamped right now but will try as soon as I get a chance.

|

@vonholst Yeah, no worries, I have an Android device so I could help with debugging. What's the main thing you want to know? The inputs/outputs of the Android TF Lite model in Android Studio? I might also try to load the model in TF and poke around to understand it a bit better. I'm also super busy, but this seems like it could be a good code contribution to the TensorFlow repo. :)

|

@vonholst SSDMobileNet_CoreML is awesome! Thanks for sharing. I am trying to replicate your conversion of the pb file to mlmodel using: I updated the conversion: But I am still seeing issues when running with the new mlmodel. Do you have any idea what I might be missing? Thanks in advance!

|

@vonholst Hi, I see the image_scale=2./255 part in the model inference, but I don't see any image-scaling preprocessing step in the TensorFlow Object Detection API demo code. https://github.com/tensorflow/models/blob/master/research/object_detection/object_detection_tutorial.ipynb

|

It’s a required preprocessing step if you use a pretrained MobileNet. If it’s not included in the model, then you need to do it manually at inference.

|

Here is some description on topic: https://github.com/tf-coreml/tf-coreml/blob/master/examples/ssd_example.ipynb

I wonder if there is any example of postprocessing and preprocessing in Python/C++, i.e. for the parts that are removed from the graph?

|

@mrgloom, from your link:

Preprocess the image - normalize to [-1,1]
img = img.resize([300,300], PIL.Image.ANTIALIAS)
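The [-1, 1] normalization is a single affine map per channel. As I understand Core ML's image preprocessing, it computes scale * pixel + bias, so image_scale=2./255 together with a bias of -1 on each channel (the converter's red_bias, green_bias and blue_bias arguments) should give the same result. A minimal sketch of the arithmetic; the function name is hypothetical.

```python
def normalize_pixel(value, image_scale=2.0 / 255.0, bias=-1.0):
    """Map an 8-bit channel value in [0, 255] to [-1, 1]
    using the scale * value + bias form."""
    return value * image_scale + bias


print([normalize_pixel(v) for v in (0, 127.5, 255)])
```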

|

hi.

|

@Davari393 Check out this source code which does the mapping from 1917 to anchor boxes and scores: https://github.com/vonholst/SSDMobileNet_CoreML |

Hi pal. Are ty, tx, th, tw equal to prediction[-1][:4] of the model, respectively?

Hi. |

Can anyone explain the mappings of the two output tensors produced by the ssd_mobilenet_v1_android_export model, which the example converts to a .mlmodel file?

When I look at this example:

https://github.com/tensorflow/models/blob/master/research/object_detection/object_detection_tutorial.ipynb

Which uses:

ssd_mobilenet_v1_coco_2017_11_17

You can see the code does this:

Looks good!

So I assume what we have here is the first 100 boxes, with 4 coordinates each.

I traced the values and code and did this:

These are fractions of the entire image, so multiplying them by the input size of 300 should give the original pixel locations.

This all makes sense.

However, I need the model in CoreML, so I followed this guide:

https://github.com/tf-coreml/tf-coreml/blob/master/examples/ssd_example.ipynb

Which uses: ssd_mobilenet_v1_android_export

I assume from the README that it's the same model:

https://github.com/tensorflow/models/tree/master/research/object_detection

Obviously it's slightly different, but I don't know to what degree. Can someone clarify?

Now, after the export process, I load it into Xcode, and when I run the model I get this kind of output from the tensor.

The boxes are in concat__0 (i.e. concat:0).

concat:0 are the bounding-box encodings of the 1917 anchor boxes

Why are there negative values in the bounding box coords?

[ 0.35306236, -0.48976013, -2.5883727 , -4.0799093 ]

[ 0.8760979 , 1.1190459 , -2.6803727 , -1.5514386 ]

[ 1.3935553 , 0.85614955, -0.92042184, -2.7950268 ]

Also, can anyone explain what the 1917 are? There are 91 categories in COCO, but why 1917 anchor boxes?

I even looked at the Android example, and it's not much easier to understand:

Even better would be an explanation of the CoreML file's output tensors.

It would be great if the end of the example mapped the output in Python to show what it represents.

Perhaps draw the boxes and show the category labels, just like object_detection_tutorial.ipynb does; that would be great! :)

I am completely lost with this so any help would be greatly appreciated!
