Tianrun Chen, Lanyun Zhu, Chaotao Ding, Runlong Cao, Yan Wang, Shangzhan Zhang, Zejian Li, Lingyun Sun, Papa Mao, Ying Zang

KOKONI, Moxin Technology (Huzhou) Co., LTD, Zhejiang University, Singapore University of Technology and Design, Huzhou University, Beihang University.

In Proceedings of the IEEE/CVF International Conference on Computer Vision (pp. 3367-3375).

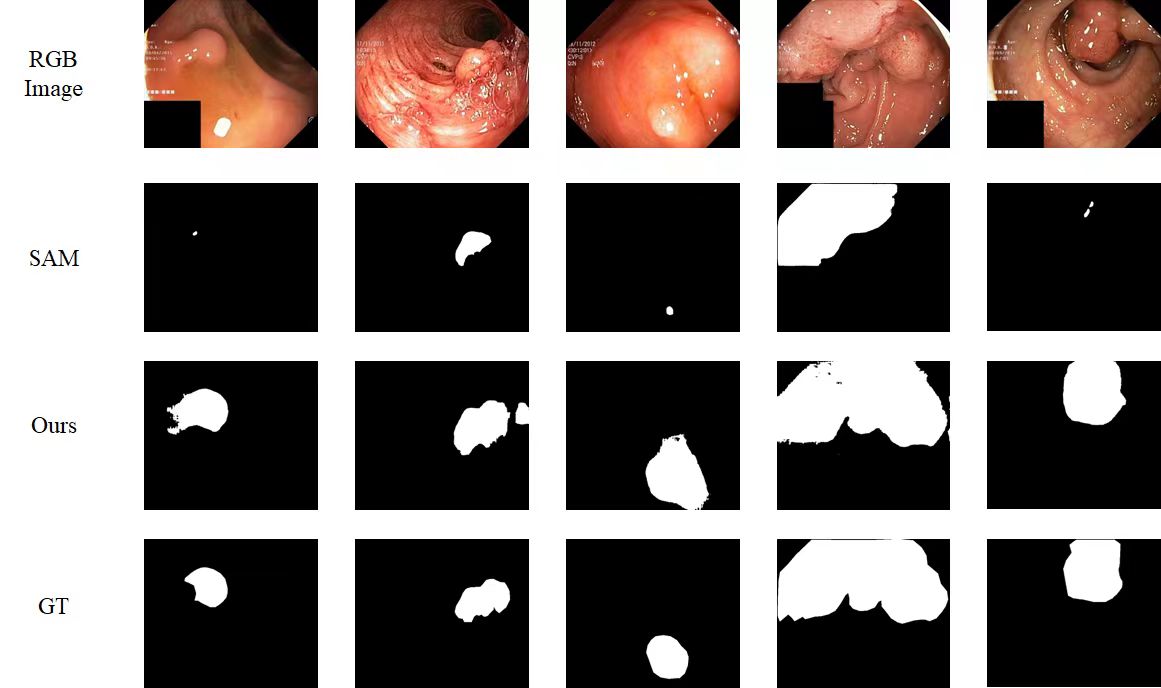

Update on 28 April: We tested our approach on polyp segmentation to show that it also works on medical datasets.

This code was implemented with Python 3.8 and PyTorch 1.13.0. You can install all the requirements via:

pip install -r requirements.txt

- Download the dataset and put it in ./load.

- Download the pre-trained SAM(Segment Anything) and put it in ./pretrained.

- Training:

CUDA_VISIBLE_DEVICES=0,1,2,3 python -m torch.distributed.launch --nnodes 1 --nproc_per_node 4 loadddptrain.py --config configs/demo.yaml

Please note that the SAM model consumes a lot of memory. We use four A100 GPUs for training. If you run out of memory, try GPUs with more memory.

- Evaluation:

python test.py --config [CONFIG_PATH] --model [MODEL_PATH]

Training with your own config:

CUDA_VISIBLE_DEVICES=0,1,2,3 python -m torch.distributed.launch --nnodes 1 --nproc_per_node 4 train.py --config [CONFIG_PATH]

Update on 30 July: As mentioned by @YunyaGaoTree in issue #39, you can also try the command below for (probably) faster training.

!torchrun train.py --config configs/demo.yaml
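With `python -m torch.distributed.launch`, launcher options such as `--nnodes` and `--nproc_per_node` need to appear before the script name; anything after the script name is passed on to the training script itself. The sketch below shows one way to assemble the command from Python so that this ordering is kept. The helper names `build_launch_cmd` and `build_env` are hypothetical and not part of this repository; the script and config names are the ones used in this README.

```python
import os
import shlex
import sys


def build_launch_cmd(script, config, nproc_per_node, nnodes=1):
    """Return the argv list for a torch.distributed.launch run.

    Launcher options come first, then the script, then the script's own options.
    """
    return [
        sys.executable, "-m", "torch.distributed.launch",
        "--nnodes", str(nnodes),
        "--nproc_per_node", str(nproc_per_node),
        script,
        "--config", config,
    ]


def build_env(gpu_ids):
    """Copy the current environment and restrict the visible GPUs."""
    env = dict(os.environ)
    env["CUDA_VISIBLE_DEVICES"] = ",".join(str(i) for i in gpu_ids)
    return env


if __name__ == "__main__":
    gpus = [0, 1, 2, 3]  # assumption: four GPUs, as in the commands above
    cmd = build_launch_cmd("loadddptrain.py", "configs/demo.yaml", len(gpus))
    # Print the command instead of running it, so the sketch has no side effects.
    print("CUDA_VISIBLE_DEVICES=" + build_env(gpus)["CUDA_VISIBLE_DEVICES"], shlex.join(cmd))
```

To actually start training, the list and environment could be handed to `subprocess.run(cmd, env=build_env(gpus), check=True)`.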

https://drive.google.com/file/d/1MMUytUHkAQvMRFNhcDyyDlEx_jWmXBkf/view?usp=sharing
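Before launching a multi-GPU job, it can save time to confirm that the dataset and the SAM checkpoint are where the setup steps put them. The following is a minimal sketch, assuming only the `./load` and `./pretrained` directory names from the steps above; the function `missing_inputs` is hypothetical and not part of this repository, and it only checks that each directory exists and is non-empty, not that its contents are complete.

```python
from pathlib import Path


def missing_inputs(root="."):
    """Return the expected directories that are absent or empty under root."""
    expected = ["load", "pretrained"]  # directory names from the setup steps
    problems = []
    for name in expected:
        path = Path(root) / name
        # A directory counts as present only if it exists and holds something.
        if not path.is_dir() or not any(path.iterdir()):
            problems.append(name)
    return problems


if __name__ == "__main__":
    missing = missing_inputs()
    if missing:
        print("Missing or empty:", ", ".join(missing))
    else:
        print("Dataset and checkpoint directories look populated.")
```

Run it from the repository root; an empty result means both directories exist and contain at least one entry.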

If you find our work useful in your research, please consider citing:

@misc{chen2023sam,
      title={SAM Fails to Segment Anything? -- SAM-Adapter: Adapting SAM in Underperformed Scenes: Camouflage, Shadow, and More},
      author={Tianrun Chen and Lanyun Zhu and Chaotao Ding and Runlong Cao and Shangzhan Zhang and Yan Wang and Zejian Li and Lingyun Sun and Papa Mao and Ying Zang},
      year={2023},
      eprint={2304.09148},
      archivePrefix={arXiv},
      primaryClass={cs.CV}
}

Part of the code is derived from Explicit Visual Prompt.