Injection BSOD on W7x64 #576

Comments

|

You mean you close a process within the guest itself? |

|

Yes. First I started the process with the injector, then I connected over VNC and clicked the exit button on the target process window. After that I got a BSOD. |

|

Did the injector exit correctly? |

|

Yes. |

|

I can't reproduce this on my end |

+1 |

|

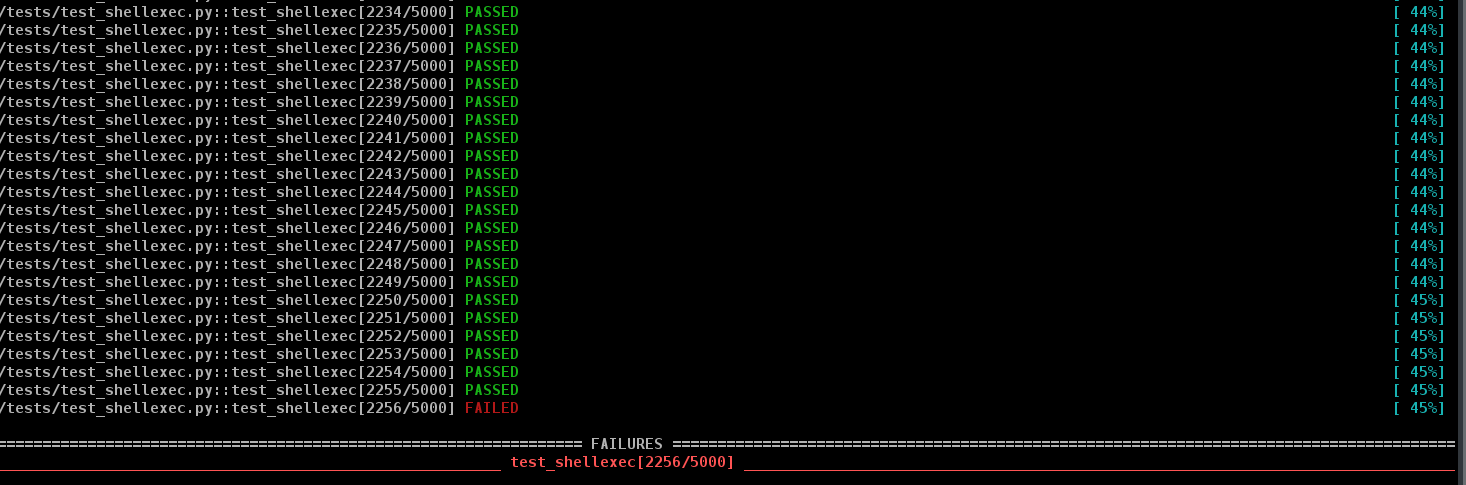

Hi, I think I can shed some light on this issue, because I have been able to reproduce this kind of behavior on

Testing

I have developed a test suite to evaluate I have found it to be very stable, in most cases. However, I'd like to mention that the VM has only 1 VCPU, and the more VCPUs you have, the more likely the bug is to appear (race condition?). Here, it crashes much faster with 4 VCPUs:

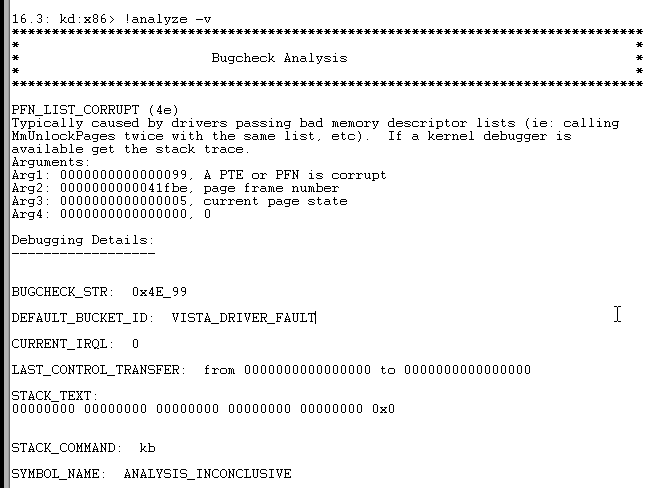

Even if the process successfully executed in the VM, the injection failed, and a BSOD followed, with message

Windbg analysis

Analyzing the bug with What's really surprising is that the instruction pointer ( However, the disassembly is wrong since

Since you implemented the project and dug into Xen's API, do you have an idea or a lead that we could follow to debug this? Thanks. |

It alters the guest state for the whole duration of DRAKVUF being active, but these changes should not be visible to the guest other than what we discuss in http://dfrws.org/conferences/dfrws-usa-2018/sessions/who-watches-watcher-detecting-hypervisor-introspection. The injector is even more intrusive, and it was not designed to be stealthy. The breakpoints it uses still are stealthy by default, but the stack modifications, for example, are not. That said, it should not bluescreen your machine. If you can trigger the BSOD consistently, then we may stand a chance to debug it. |

Yes that's what I meant.

Luckily, the answer is yes, this bug is 100% reproducible. Would you be interested in me sharing this test suite for Drakvuf? |

We don't modify the guests' pagetables anywhere.

That doesn't sound very easily reproducible to me :)

Perhaps, but at this point it's very unlikely I would have the time to play with it. Of course, if you can open-source it, it may help someone else with more time to dig into the issue. |

|

Hi, I have made progress regarding what part of the code can trigger the BSOD

1 - breakpoint insert/remove

Since the plugins dealing with syscalls need to insert breakpoints during the analysis to capture their return value, I decided to disable that feature and see if it changes the BSOD. I made the following changes:

    diff --git a/src/plugins/procmon/procmon.cpp b/src/plugins/procmon/procmon.cpp
    index a008838..2d9fe77 100644
    --- a/src/plugins/procmon/procmon.cpp
    +++ b/src/plugins/procmon/procmon.cpp
    @@ -448,6 +448,9 @@ static event_response_t terminate_process_hook(
     static event_response_t create_user_process_hook_cb(drakvuf_t drakvuf, drakvuf_trap_info_t* info)
     {
    +    PRINT_DEBUG("NtCreateuserProcess cb !\n");
    +    return VMI_EVENT_RESPONSE_NONE;
    +
         // PHANDLE ProcessHandle
         addr_t process_handle_addr = drakvuf_get_function_argument(drakvuf, info, 1);
         // PRTL_USER_PROCESS_PARAMETERS RtlUserProcessParameters
    @@ -457,6 +460,9 @@ static event_response_t create_user_process_hook_cb(drakvuf_t drakvuf, drakvuf_t
     static event_response_t terminate_process_hook_cb(drakvuf_t drakvuf, drakvuf_trap_info_t* info)
     {
    +    PRINT_DEBUG("NtTerminate cb !\n");
    +    return VMI_EVENT_RESPONSE_NONE;
    +
         // HANDLE ProcessHandle
         addr_t process_handle = drakvuf_get_function_argument(drakvuf, info, 1);
         // NTSTATUS ExitStatus
    @@ -558,6 +564,9 @@ static event_response_t open_process_return_hook_cb(drakvuf_t drakvuf, drakvuf_t
     static event_response_t open_process_hook_cb(drakvuf_t drakvuf, drakvuf_trap_info_t* info)
     {
    +    PRINT_DEBUG("NtOpenProcess cb !\n");
    +    return VMI_EVENT_RESPONSE_NONE;
    +
         auto plugin = get_trap_plugin<procmon>(info);
         if (!plugin)
             return VMI_EVENT_RESPONSE_NONE;
    @@ -607,6 +616,9 @@ static event_response_t open_process_hook_cb(drakvuf_t drakvuf, drakvuf_trap_inf
     static event_response_t protect_virtual_memory_hook_cb(drakvuf_t drakvuf, drakvuf_trap_info_t* info)
     {
    +    PRINT_DEBUG("NtProtectVirtualMemory cb !\n");
    +    return VMI_EVENT_RESPONSE_NONE;
    +
         gchar* escaped_pname = NULL;
         // HANDLE ProcessHandle
         uint64_t process_handle = drakvuf_get_function_argument(drakvuf, info, 1);

Well, it turns out that Drakvuf is much more stable then:

Remember that it was crashing after ~20 tests before. Doing extended testing, I still get a BSOD at some point:

Conclusions so far:

I would like to reimplement that in the little

Would you like to briefly explain where you set the breakpoint on syscall return, once you are at the syscall entry handler? I saw this:

    auto trap = plugin->register_trap<procmon, process_creation_result_t<procmon>>(
        drakvuf,
        info,
        plugin,
        process_creation_return_hook,
        breakpoint_by_pid_searcher());

    struct breakpoint_by_pid_searcher
    {
        drakvuf_trap_t* operator()(drakvuf_t drakvuf, drakvuf_trap_info_t* info, drakvuf_trap_t* trap) const
        {
            if (trap)
            {
                access_context_t ctx =
                {
                    .translate_mechanism = VMI_TM_PROCESS_DTB,
                    .dtb = info->regs->cr3,
                    .addr = info->regs->rsp,
                };

so

2 - Teardown code

As explained before, it is impossible to crash the VM while drakvuf is simply monitoring it for hours. I managed to reproduce the bug with a single Drakvuf run.

    $processes = "notepad", "mspaint", "powershell", "cmd"
    For ($i=1; $i -le 10; $i++) {
        Write-Host "batch $i"
        $proc_running = New-Object System.Collections.ArrayList
        For ($j=1; $j -le 10; $j++) {
            $proc_name = $processes[(Get-Random -Maximum ([array]$processes).count)]
            Write-Host "[$i] $j - $proc_name"
            $proc = Start-Process -FilePath "$proc_name" -PassThru -WindowStyle Minimized
            $proc_running.Add($proc) | Out-Null
        }
        foreach ($proc in $proc_running) {
            Stop-Process -InputObject $proc
        }
    }

The idea is that there is a bug in the teardown code: if the previously breakpointed/remapped pages are hit while drakvuf shuts down, the BSOD triggers. However, I quickly looked at the teardown code, and it is protected by

If you want to test it, just copy the code into your VM. Thanks |
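To make the teardown hypothesis above concrete, here is a toy model of what "a breakpointed page is hit while the monitor shuts down" could look like. It is only a sketch under stated assumptions: `ToyGuest`, `ToyMonitor`, `execute` and `Outcome` are hypothetical names invented for illustration and are not part of DRAKVUF, LibVMI or Xen, and the model ignores remapped pages and multiple VCPUs entirely. It only shows that a software breakpoint byte left in guest code after the handler has detached would be executed with nobody to handle it.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <vector>

// Toy model only: none of these types exist in DRAKVUF or LibVMI.
constexpr uint8_t INT3 = 0xCC;  // x86 software breakpoint opcode

struct ToyGuest {
    std::vector<uint8_t> memory;  // stand-in for guest code bytes
    bool monitor_attached;        // is something still handling int3 events?
};

struct ToyMonitor {
    ToyGuest& guest;
    std::map<std::size_t, uint8_t> saved;  // address -> original byte

    // Patch an int3 into guest code and remember the byte it replaced.
    void insert_breakpoint(std::size_t addr) {
        // emplace keeps the first saved byte if addr is already patched,
        // so a second insert never records 0xCC as the "original".
        saved.emplace(addr, guest.memory[addr]);
        guest.memory[addr] = INT3;
    }

    // Detach from the guest. With restore_all == false this models the
    // suspected bug: patched bytes are left behind.
    void teardown(bool restore_all) {
        if (restore_all) {
            for (const auto& kv : saved)
                guest.memory[kv.first] = kv.second;
            saved.clear();
        }
        guest.monitor_attached = false;
    }
};

enum class Outcome { Ok, HandledByMonitor, GuestCrash };

// What happens when the guest executes the byte at addr.
Outcome execute(const ToyGuest& g, std::size_t addr) {
    if (g.memory[addr] != INT3)
        return Outcome::Ok;
    return g.monitor_attached ? Outcome::HandledByMonitor
                              : Outcome::GuestCrash;
}
```

In this model, calling `teardown(false)` after `insert_breakpoint(1)` makes `execute(guest, 1)` return `Outcome::GuestCrash`, while `teardown(true)` makes it return `Outcome::Ok`. Whether the real teardown path can actually leave a breakpoint or remapped page visible to a running VCPU is exactly the open question in this thread.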

Hi

At first, the injector works successfully and can inject an executable into the target VM,

but when I try to close the process on the target VM, the system goes to a BSOD.
