Several Questions #383

Thanks for your explanations, I totally got your points right there. Further, I have several issues here, would you mind taking a look:

Actually, I don't understand what the param sparse_shape means here. Am I doing it right by just passing the coordinate range into it, or is there a better way of doing this?

Again, thank you so much for your help.

x = x.replace_feature(F.relu(self.bn(x.features)))

Actually, we can rewrite BatchNorm so that it accepts a SparseConvTensor, and then just use nn.Sequential. Note: you shouldn't use Point2VoxelCPU3d because it can't be pickled; use spconv.pytorch.utils.PointToVoxel instead.

The same question applies to the param coors_range_xyz in PointToVoxel: passing two different large range lists yields two different numbers of voxels as output, so is there a suggested strategy for choosing coors_range_xyz?

The "PointToVoxel" process is actually a quantization of the real point cloud. It converts points from real-valued coordinates to quantized coordinates that start at [0, 0, 0] and end at the spatial shape.
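The quantization described above can be sketched in plain Python. This is only an illustration of the idea, not spconv's implementation: the helper name `quantize_points` and its argument layout are hypothetical, with `coors_range` assumed to be (xmin, ymin, zmin, xmax, ymax, zmax). It also suggests why two different ranges can give two different voxel counts: points outside the range are dropped, and the range sets the origin of the grid.

```python
import math


def quantize_points(points, voxel_size, coors_range):
    """Map real-valued xyz points to integer voxel coordinates.

    Hypothetical helper for illustration only; not part of spconv.
    coors_range is assumed to be (xmin, ymin, zmin, xmax, ymax, zmax).
    Returns the grid size per axis and a dict mapping each occupied
    voxel coordinate to the indices of the points that fall inside it.
    """
    mins, maxs = coors_range[:3], coors_range[3:]
    # Number of voxels along each axis: this plays the role of the spatial shape.
    grid = [int(round((hi - lo) / vs)) for lo, hi, vs in zip(mins, maxs, voxel_size)]
    voxels = {}
    for i, p in enumerate(points):
        # Shift by the range minimum so coordinates start at [0, 0, 0].
        coord = tuple(math.floor((c - lo) / vs) for c, lo, vs in zip(p, mins, voxel_size))
        # Points outside the range are discarded.
        if all(0 <= c < g for c, g in zip(coord, grid)):
            voxels.setdefault(coord, []).append(i)
    return grid, voxels
```

With this sketch, the same points quantized with the ranges (-1, -1, -1, 1, 1, 1) and (-1, -1, -1, 0, 0, 0) generally produce different numbers of occupied voxels, so the range is best chosen to tightly cover the region of interest.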

@qsisi this is due to this line:

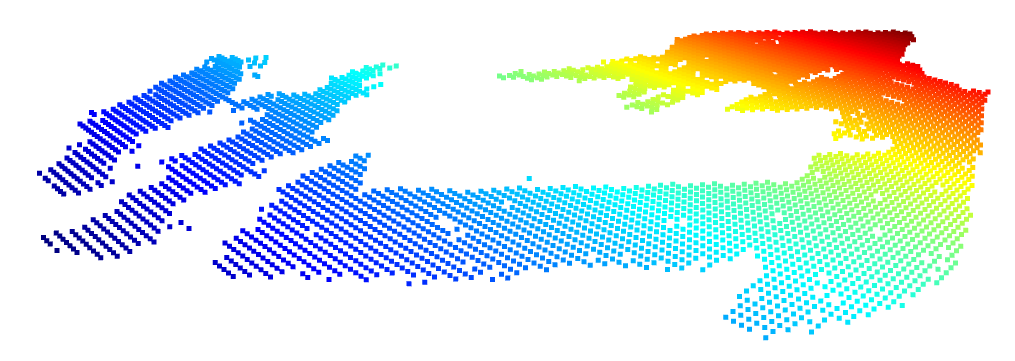

Thank you so much for your help! Now it works for me. I also have a naive question: can spconv be used for dense feature extraction or point cloud semantic segmentation? This is a little confusing to me because, for every input point cloud, the voxelization process removes some points from the original point cloud, say from N to N' during voxel quantization, and an encoder-decoder network will then output only N' features. So how can the resolution be recovered from N' to N at the output end? Thanks for taking the time to answer these questions.

@qsisi

To achieve the method above, I need to add a voxel_id_of_pc output to PointToVoxel; it will be added in the next bug-fix release (v2.1.12).
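One common way to go from N' voxel features back to N point features is to let each point copy the feature of the voxel it fell into. The sketch below assumes a per-point voxel index, like the voxel_id_of_pc output mentioned above, is available. The function name `gather_point_features` and the convention that a negative id marks a point dropped during voxelization are assumptions made for illustration, not confirmed spconv behavior.

```python
def gather_point_features(voxel_features, voxel_id_of_point, fill_value=0.0):
    """Expand N' voxel features to N point features by index lookup.

    Hypothetical helper for illustration only; not part of spconv.
    voxel_features: list of N' feature vectors (one per voxel).
    voxel_id_of_point: list of N ints, the voxel index of each point.
        A negative id is assumed to mean the point was dropped.
    """
    dim = len(voxel_features[0]) if voxel_features else 0
    point_features = []
    for vid in voxel_id_of_point:
        if vid >= 0:
            # Every point inside a voxel shares that voxel's feature.
            point_features.append(list(voxel_features[vid]))
        else:
            # Dropped points get a constant placeholder feature.
            point_features.append([fill_value] * dim)
    return point_features
```

With PyTorch tensors, the same lookup for valid ids can be written as a single indexing operation, `voxel_features[voxel_ids]`, which avoids a slow Python loop.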

Thanks for your answer! Actually, I had already done this when I was using MinkowskiEngine as my network backbone, and Mink has an API left for situations like this: As for spconv, I assumed there had to be another (better) way of doing this, so I just came straight here to ask.

That would be a nice way to avoid writing a slow Python interface for such a querying operation. Looking forward to that functionality.

@qsisi The voxel id is available now. See this example

Thank you so much for the quick support, can't wait to try it out.

I noticed that with spconv==2.1.13 this error appears again, and downgrading spconv to 2.1.11 fixes it.

My mistake: I added "get_cuda_stream" back into the CPU voxel generator in spconv 2.1.12...

Hello! Thanks for open-sourcing this amazing repository. I have several fundamental questions,

Hoping to get your answers!
