How to evaluate norfair on my own dataset? #42

Comments

|

The first step would be to generate videos and check the results by looking at them. A more sophisticated solution would be to make your data compatible with https://github.com/tryolabs/norfair/tree/master/demos/motmetrics4norfair and get the results there. |

|

Hello @joaqo, do I need to have the ground truth and detection files for my video data and then use the above script to check the results? Which files are necessary to check the performance of norfair on my dataset? Could you please elaborate in detail? |

|

Hi @vis58, that is exactly it. You need detections and labels. Regarding the format, you just need to format your data the same way the MOTChallenge people do. The easiest way to see this is to download the detections and labels for their 2017 datasets, as detailed in the link I provided above. Here is the relevant command though: curl -O https://motchallenge.net/data/MOT17Labels.zip # To download Detections + Ground Truth (9.7 MB)

unzip MOT17Labels.zip |

|

Hello @joaqo, below is an example of how the gt file looks in the MOT challenge dataset. There are 9 values in total. The values are 1. frame, 2. id, 3. <bb_left>, 4. <bb_top>, 5. <bb_width>, 6. <bb_height>. What are the 7th, 8th and 9th values? |

|

I think @aguscas can help you with this, as he was in charge of writing the parsing for these files. Agus, any idea on what these values mean? |

|

Hello @vis58, the 7th value is a confidence score (it can only be 0 or 1). A value of 0, as you have, means that the instance will be ignored in the evaluation (i.e. the object is marked as inactive), so it will count neither as a true positive nor as a false negative (for example, you may want this if the object corresponds to a reflection). If you want to set that particular instance as active (so that it is taken into account), this value should be set to 1. The 8th value indicates the class of the annotated object. You can find a table with the value corresponding to each class in the following paper: https://arxiv.org/pdf/1906.04567.pdf The 9th value is the visibility ratio of the bounding box (your box may be occluded by another object, or it might be cropped by the frame boundary). |
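To make the nine columns concrete, here is a minimal sketch of a parser for one gt.txt line. The names `GroundTruthRow` and `parse_gt_line` are made up for illustration (they are not part of norfair), and the sample line is only illustrative. It assumes comma-separated values in the order described above.

```python
from dataclasses import dataclass


@dataclass
class GroundTruthRow:
    """One line of a MOTChallenge-style gt.txt file (hypothetical helper type)."""
    frame: int
    track_id: int
    bb_left: float
    bb_top: float
    bb_width: float
    bb_height: float
    active: bool       # 7th value: 1 = evaluated, 0 = ignored in the evaluation
    class_id: int      # 8th value: object class, see the table in the MOT paper
    visibility: float  # 9th value: visibility ratio of the bounding box


def parse_gt_line(line: str) -> GroundTruthRow:
    """Split one comma-separated gt.txt line into its nine named fields."""
    fields = line.strip().split(",")
    if len(fields) != 9:
        raise ValueError(f"expected 9 values, got {len(fields)}: {line!r}")
    left, top, width, height = (float(value) for value in fields[2:6])
    return GroundTruthRow(
        frame=int(fields[0]),
        track_id=int(fields[1]),
        bb_left=left,
        bb_top=top,
        bb_width=width,
        bb_height=height,
        active=int(fields[6]) == 1,
        class_id=int(fields[7]),
        visibility=float(fields[8]),
    )


# Illustrative line only: the 7th value is 0, so this box would be ignored.
row = parse_gt_line("1,1,912,484,97,109,0,7,1")
print(row.active, row.class_id, row.visibility)
```

If you label your own data, setting the 7th value to 1 for every object you want evaluated is the part that is easiest to get wrong.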

|

Thank you @aguscas, I have a couple of doubts regarding the script that helps in evaluating norfair on the MOT challenge dataset. They are ,

|

|

Right, when using --save_pred you will save your predictions in multiple text files (one text file per sequence). The predictions are made by a norfair tracker, using the detections provided by the challenge (the det.txt files). The gt.txt files are only used to compute the metrics (after all predictions have been generated). These predictions are stored in the format described in https://motchallenge.net/instructions/ . As we are only doing 2D tracking, the last 4 columns are all set to -1. When using --save_metrics, it will save a text file with the metrics obtained by comparing your predictions with the ground truth files. It is not comparing the 'gt' files with the 'det' files; instead, it is comparing the predictions (produced by norfair using the 'det' files) with the 'gt' files. If you don't use --save_metrics, it will compute these metrics anyway and the results will be displayed in the terminal, but won't be saved in a text file. |
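As a rough illustration of that prediction format, the sketch below builds one text row from a tracked box. `format_prediction_row` is a hypothetical helper, not the function norfair uses internally, and it assumes the box is given as its top-left and bottom-right corners in pixels. The last 4 columns are filled with -1 as described above.

```python
def format_prediction_row(frame, track_id, top_left, bottom_right):
    """Build one comma-separated prediction row for a 2D tracked box.

    Columns: frame, id, bb_left, bb_top, bb_width, bb_height,
    followed by 4 columns that are all -1 because tracking is 2D only.
    """
    left, top = top_left
    width = bottom_right[0] - left
    height = bottom_right[1] - top
    fields = [frame, track_id, left, top, width, height, -1, -1, -1, -1]
    return ",".join(str(value) for value in fields)


# One row for object 3 in frame 1, with corners (10, 20) and (50, 100).
print(format_prediction_row(1, 3, (10, 20), (50, 100)))
```

Writing one such row per tracked object and per frame, into one file per sequence, gives files shaped like the ones --save_pred produces.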

|

Thank you @aguscas, but how do I test norfair on my own dataset? I only have the gt.txt file, which I labeled myself, but not the det.txt files. |

|

Right now, norfair doesn't provide a direct way of doing this, but of course there is always a way :) . I will assume you already have a detector and that you also have a python script in which you are creating your predictions with a norfair tracker. Also, for each video you are processing, you should have a folder (I will call the path to this folder as

So, to test norfair on your dataset, you can initialize an When you finish processing every video, you should tell the accumulator to compute your metrics and to display them (or save them in a file). In order to do this, you should do If you want to check it, this is the exact same way we used the |

|

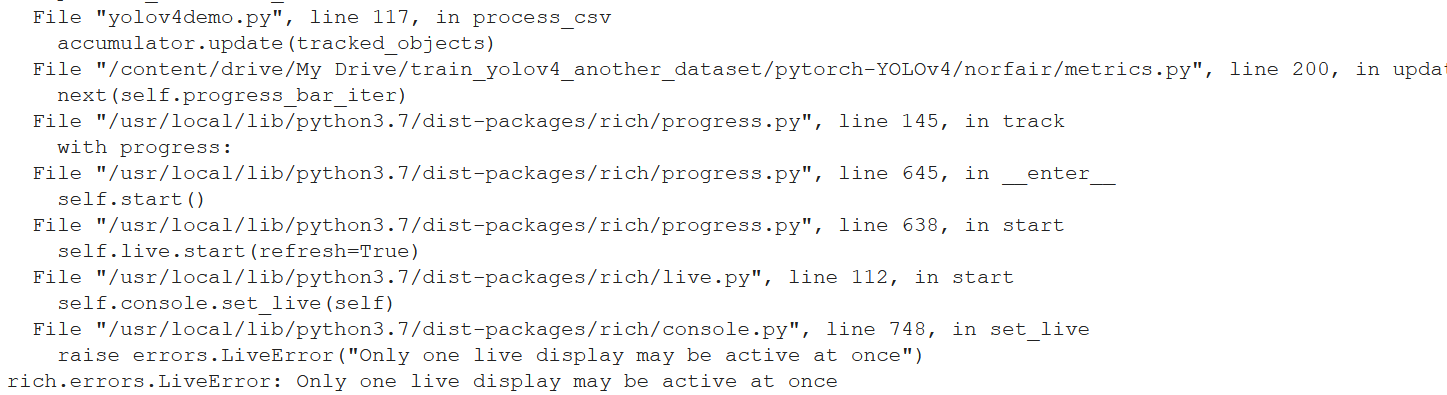

Hello @aguscas, thank you. I am trying to evaluate norfair the same way, but I have encountered the following error when updating predictions. Can you please help me with this? |

|

That seems to be an error with rich (the progress bar and general CLI tool we use); it seems it can't access wherever it needs to print its output. It looks to be unrelated to the metrics issues being discussed here. Are you running things in a Jupyter notebook? Maybe you reached a weird unstable state in your notebook and rich is having issues with that? |

|

Hello @joaqo, yes, I was using a Jupyter notebook, but I have now also tried in Visual Studio Code and encountered the same issue. I think line 200 in metrics.py is triggering the error? |

|

Hello @joaqo, I was able to avoid the above error; however, I am encountering another error at accumulator.update(). Can you please help me with this? |

|

What is the format of your positions? You can see this by printing |

|

Hello @aguscas, my object estimate looks like this: estimate [[1226.25415129 767.96535106]] This is because, while estimating the centroid, I have only taken the image width and height points. This is shown below. def get_centroid(yolo_box, img_height, img_width): For now I avoid this error as well, just by commenting out the obj.estimate[1, 0] - obj.estimate[0, 0], |

|

You need 4 numbers to determine a box in a frame, it is not enough information to provide only those 2 numbers. Our |
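To illustrate the point about needing 4 numbers, here is a minimal sketch that turns a YOLO-style box into two corner points instead of a single centroid. `get_box_corners` is a hypothetical helper, and it assumes `yolo_box` holds (x_center, y_center, width, height) normalized to the range 0 to 1. If your detector outputs something else, the arithmetic needs to change accordingly.

```python
def get_box_corners(yolo_box, img_height, img_width):
    """Convert a normalized YOLO box into two corner points in pixels.

    Assumes yolo_box = (x_center, y_center, width, height), each in [0, 1].
    Returns [[x_min, y_min], [x_max, y_max]], i.e. 2 points and 4 numbers,
    so that estimate[1, 0] - estimate[0, 0] is the box width.
    """
    x_center, y_center, width, height = yolo_box
    half_width = width * img_width / 2
    half_height = height * img_height / 2
    center_x = x_center * img_width
    center_y = y_center * img_height
    return [
        [center_x - half_width, center_y - half_height],
        [center_x + half_width, center_y + half_height],
    ]


# A box centred in a 200x100 image, covering 20% of the width and 40% of the height.
corners = get_box_corners((0.5, 0.5, 0.2, 0.4), img_height=100, img_width=200)
print(corners)
```

Wrapping the result in a NumPy array gives a 2x2 set of points for the detection, so the tracked estimate also carries both corners and the width and height can be recovered from it.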

|

@vishnuvardhan58 @vis58, how did you solve the error you were facing? Can you please give more details? |

Hello,

I am trying to use norfair together with yolov4 on my own dataset. I want to know how well norfair is tracking objects in my dataset. Can you please help me evaluate norfair?
