-

-

Notifications

You must be signed in to change notification settings - Fork 3.4k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

build_targets function #8

Comments

|

Ah yes. I did not comment this area sufficiently, I will do a commit to better explain this. This section assigns the closest grid point My first technique to do this was to select unique rows from the n x 3 u = torch.cat((gi, gj, a), 0).view(3, -1).numpy()

_, first_unique_slow = np.unique(u[:, iou_order], axis=1, return_index=True) # first unique indicesbut during profiling I found np.unique was slow in selecting unique rows, so I merged the 3 columns into a single column by the dot product of a random 1 x 3 vector (the 3 random constants you see here). This produced much faster np.unique results. u = gi.float() * 0.4361538773074043 + gj.float() * 0.28012496588736746 + a.float() * 0.6627147212460307

_, first_unique = np.unique(u[iou_order], return_index=True) # first unique indicesI tested the two methods for accuracy using this line, and found differences extremely rare... but wait these are both sorted, and the output used later on is not sorted. Ok I will look into this some more to see the effect of the sorting. print(((np.sort(first_unique_slow) - np.sort(first_unique)) ** 2).sum()) |

|

Yes I've confirmed there is is a bug here. Thank you very much for spotting this! So numpy returns sorted unique indices by default, whereas I assumed they retained the original order. There exists an open numpy issue on the topic: I've corrected this by reverting to the unique row method (first example above), which does appear to retain the original order. Commit is 6116acb. |

|

Thank you. |

|

@glenn-jocher Could you please explain how this will affect results because it seems like results will be the same whichever method we use. |

|

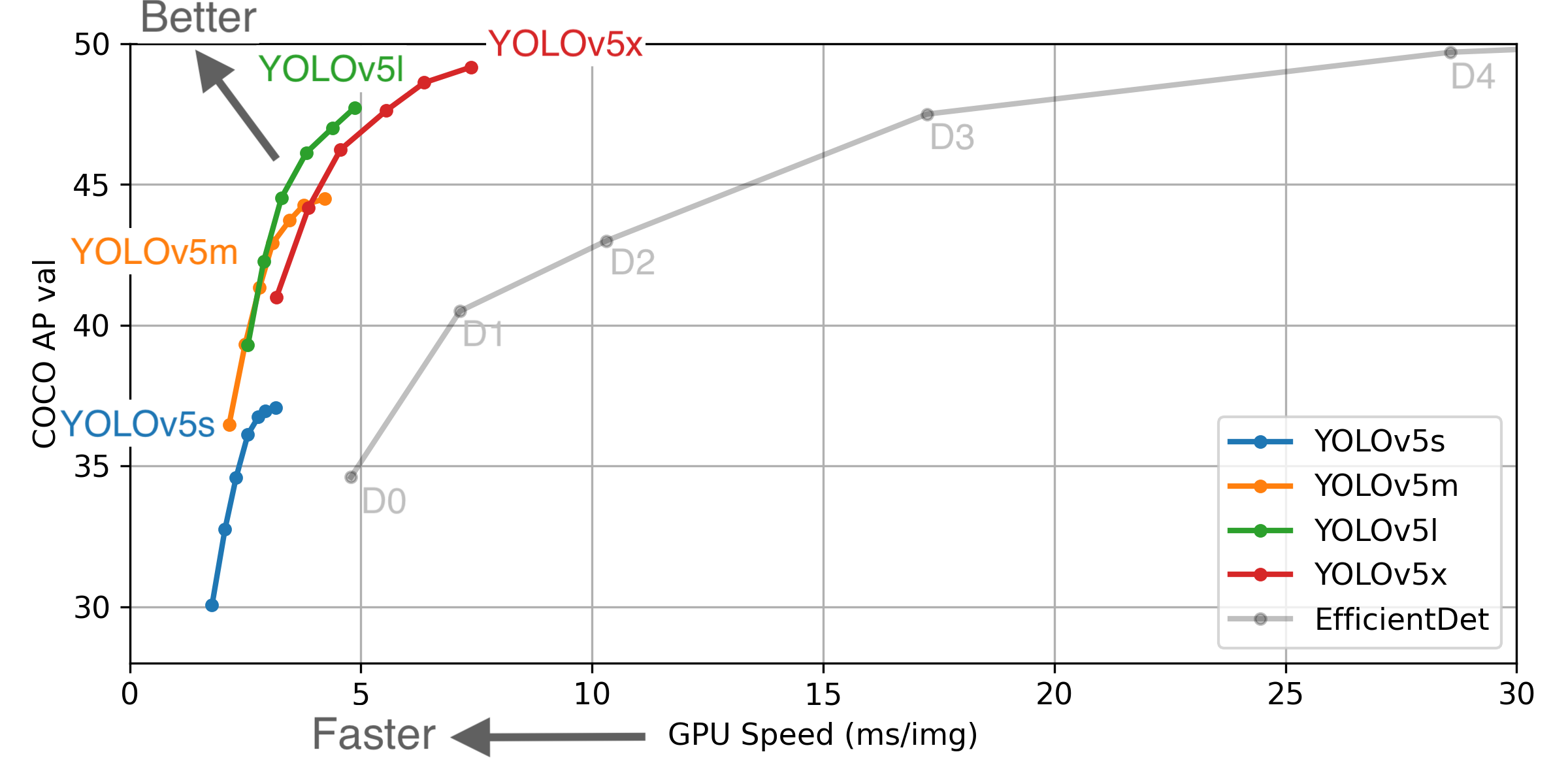

@Bilal-Yousaf Ultralytics has open-sourced YOLOv5 at https://github.com/ultralytics/yolov5, featuring faster, lighter and more accurate object detection. YOLOv5 is recommended for all new projects.

** GPU Speed measures end-to-end time per image averaged over 5000 COCO val2017 images using a V100 GPU with batch size 32, and includes image preprocessing, PyTorch FP16 inference, postprocessing and NMS. EfficientDet data from [google/automl](https://github.com/google/automl) at batch size 8.

Pretrained Checkpoints

** APtest denotes COCO test-dev2017 server results, all other AP results in the table denote val2017 accuracy. For more information and to get started with YOLOv5 please visit https://github.com/ultralytics/yolov5. Thank you! |

|

|

@Bilal-Yousaf the use of np.unique with sorted output would not affect the results in this specific scenario, as it is handled properly in the existing code. Both methods would yield the same results in this implementation. Thank you for bringing this up, and feel free to reach out if you have any more questions or concerns! |

Can you explain why have you used the following constants? I have inspected a few different yolov3 implementation but none had a similar operation.

yolov3/utils/utils.py

Line 183 in a284fc9

The text was updated successfully, but these errors were encountered: