Performance

When we talk about the performance of Eywa, we usually mean how many devices/connections it can track concurrently on limited hardware. This page shows how Eywa performs in that sense and describes the steps to reproduce the benchmark.

v0.9.1

Server: 8 CPU / 16 GB RAM, Ubuntu 14.04.3 x64, 160 GB SSD, San Francisco

    sysctl net.ipv4.ip_local_port_range="11000 61000"
    sysctl net.ipv4.tcp_fin_timeout=1
    sysctl -w fs.file-max=12000500
    sysctl -w fs.nr_open=20000500
    ulimit -n 20000000
    sysctl -w net.ipv4.tcp_mem='10000000 10000000 10000000'
    sysctl -w net.ipv4.tcp_rmem='512 512 512'
    sysctl -w net.ipv4.tcp_wmem='512 512 512'
    sysctl -w net.core.rmem_max=16384
    sysctl -w net.core.wmem_max=16384

Eywa is memory-bound, and most embedded devices have limited memory themselves, so we set the TCP buffers to 512 bytes. We also raise the open-file limits (12 million system-wide, 20 million per process), which is more than enough for our benchmark.
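The local port range set above also bounds how many outgoing connections one machine can hold to a single server address and port, because each such connection needs a distinct local port. The following is a minimal Go sketch of that count. `portRangeSize` is a hypothetical helper written for this page, not part of Eywa or its benchmark tool.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// portRangeSize parses a value in the "low high" form used by
// net.ipv4.ip_local_port_range and returns how many local ports the
// range contains, counting both ends.
// Hypothetical helper for illustration only.
func portRangeSize(value string) (int, error) {
	fields := strings.Fields(value)
	if len(fields) != 2 {
		return 0, fmt.Errorf("expected two ports, got %q", value)
	}
	low, err := strconv.Atoi(fields[0])
	if err != nil {
		return 0, err
	}
	high, err := strconv.Atoi(fields[1])
	if err != nil {
		return 0, err
	}
	if high < low {
		return 0, fmt.Errorf("invalid range %d-%d", low, high)
	}
	return high - low + 1, nil
}

func main() {
	n, err := portRangeSize("11000 61000")
	if err != nil {
		panic(err)
	}
	fmt.Println("local ports available:", n) // prints 50001
}
```

With the range used here, each machine has about 50,000 local ports, which lines up with the 50,000 connections each benchmark client opens.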

    service:
      host: localhost
      api_port: 8080
      device_port: 8081
      pid_file: /var/eywa/eywa.pid
      assets:
    security:
      dashboard:
        username: root
        password: waterISwide
        token_expiry: 24h
        aes:
          key: abcdefg123456789
          iv: 123456789abcdefg
      ssl:
        cert_file:
        key_file:
      api_key: dRiftingcLouds
    connections:
      registry: memory # deprecated in versions after v0.9.1
      nshards: 16 # deprecated in versions after v0.9.1
      init_shard_size: 4096 # deprecated in versions after v0.9.1
      http:
        timeouts:
          long_polling: 600s
      websocket:
        request_queue_size: 8
        timeouts:
          write: 8s
          read: 600s
          request: 8s
          response: 16s
        buffer_sizes:
          read: 512
          write: 512
    indices:
      disable: true
      host: localhost
      port: 9200
      number_of_shards: 8
      number_of_replicas: 0
      ttl_enabled: false
      ttl: 0s
    database:
      db_type: sqlite3
      db_file: /var/eywa/eywa.db
    logging:
      eywa:
        filename: /var/eywa/eywa.log
        maxsize: 1024
        maxage: 7
        maxbackups: 5
        level: info
        buffer_size: 512
      indices:
        filename: /var/eywa/indices.log
        maxsize: 1024
        maxage: 7
        maxbackups: 5
        level: warn
        buffer_size: 512
      database:
        filename: /var/eywa/db.log
        maxsize: 1024
        maxage: 7
        maxbackups: 5
        level: warn
        buffer_size: 512

As you can see, indexing is disabled, because indexing performance is not part of our project. Each connection is also allocated a 512-byte read buffer and a 512-byte write buffer.

Clients: 20 machines, each with 2 CPU / 4 GB RAM, Ubuntu 14.04.3 x64, 60 GB SSD, San Francisco.

The clients use the same TCP and system tuning as the server.

    ./benchmark -host=<server ip> -ports=8080:8081 -user=root -passwd=waterISwide -fields=temperature:float -c=50000 -p=50 -m=50 -r=3600s -w=20s -i=300000 -I=100 -s=5s

On each client machine this opens 50,000 connections to the server. Each connection pings the server 50 times and sends 50 messages, at intervals chosen randomly up to 5 minutes. This mimics the average behavior of home automation devices. Each machine starts a new connection after a randomized delay of up to 100 milliseconds.

For details about the benchmark options, run ./benchmark -h or read the source code.

Let's take a look at some graphs.

The trending data can be downloaded here.

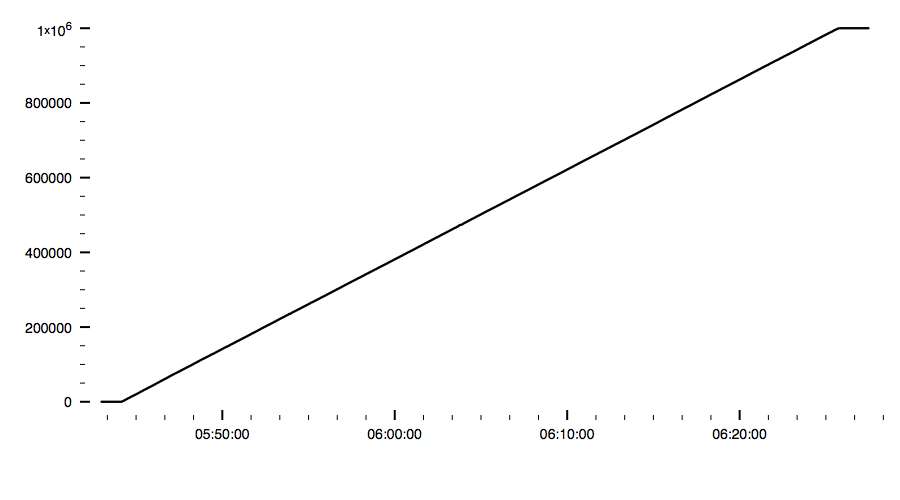

It took 40 minutes for the clients to create 1 million connections.

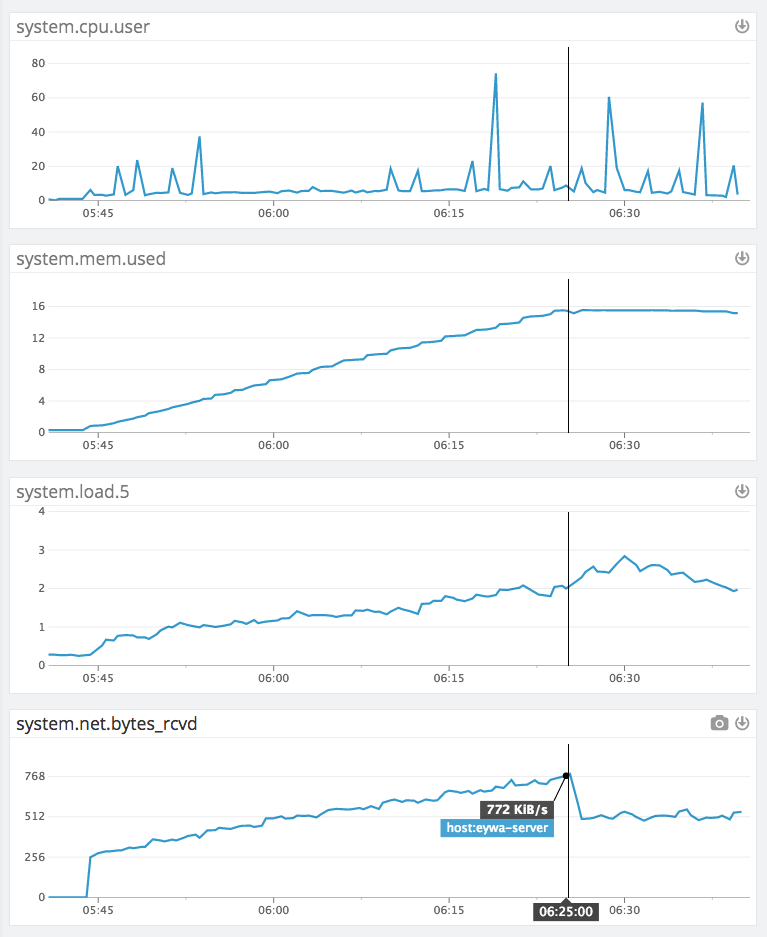

Below are system graphs collected by Datadog.

These graphs clearly show that memory is the bottleneck for the number of connections on a single server, while the CPU has plenty of headroom.

Thanks to Go's exceptional concurrency model, Eywa can keep track of 1 million connections with tuned TCP settings on a 16 GB machine. CPU is not the limiting factor; memory consumption is roughly 15 KB per connection.
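The per-connection figure can be turned into a back-of-the-envelope capacity estimate for other machine sizes. This is only a sketch: `maxConnections` is a hypothetical helper, it treats 1 KB as 1024 bytes, and it assumes the per-connection cost stays constant as the connection count grows, which the benchmark does not verify.

```go
package main

import "fmt"

const (
	kb = int64(1024)
	gb = 1024 * 1024 * kb
)

// maxConnections returns how many connections fit into memBytes when
// each connection costs perConnBytes and reservedBytes is set aside
// for the operating system and other processes.
// Hypothetical helper; the constant per-connection cost is an assumption.
func maxConnections(memBytes, reservedBytes, perConnBytes int64) int64 {
	if perConnBytes <= 0 || memBytes <= reservedBytes {
		return 0
	}
	return (memBytes - reservedBytes) / perConnBytes
}

func main() {
	// 16 GB machine, nothing reserved, 15 KB per connection.
	fmt.Println(maxConnections(16*gb, 0, 15*kb)) // prints 1118481

	// The same machine with 1 GB reserved for everything else.
	fmt.Println(maxConnections(16*gb, 1*gb, 15*kb))
}
```

With nothing reserved, the upper bound is about 1.1 million connections on 16 GB, which is consistent with memory being nearly exhausted at 1 million connections.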