
Get "AssertionError: can only test a child process" when using distributed TPU cores via Pytorch Lightning [CLI] #1994

Comments

|

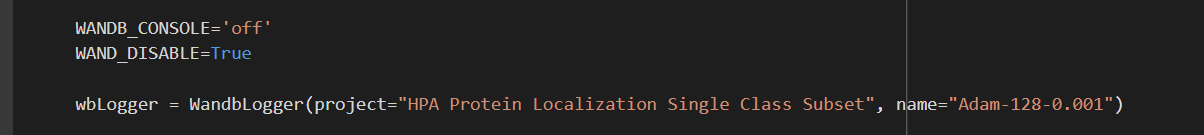

@adamDhalla, I am trying to reproduce the error, but for now (based on that exception) I suggest a temporary workaround: turn console logging off using the environment variable:

I'm having the same error. I found this issue reported on the huggingface page, and this comment helped me fix it temporarily: huggingface/datasets#847 (comment)

Thanks! But -

You need to use

@adamDhalla I didn't use … I commented out the backend-process liveness check in `_publish`:

```python
def _publish(self, record: pb.Record, local: bool = None) -> None:
    # Liveness check disabled as a temporary workaround:
    # if self._process and not self._process.is_alive():
    #     raise Exception("The wandb backend process has shutdown")
    if local:
        record.control.local = local
    if self.record_q:
        self.record_q.put(record)
```

@prash-p Removing that check should be fine in the case of console output, but for other types of data the code is trying to prevent a use case we haven't fully tested yet, which could lead to corrupted or incomplete data that we want to avoid. We are still trying to reproduce this failure so that we can provide a safe workaround (and get the fix into a release ASAP).

@borisdayma oh god yeah of course! Don't know what I was thinking. Still kind of a coding noob, but thank you.

@raubitsj If it helps, I had this error when running my script as a notebook in VS Code, using tqdm on my PyTorch DataLoader. The exact same script ran without errors when running from the command line.

Any progress on this?

I encounter the same error when training ViT with torch_xla using TPUs. Any progress?

Hi @khvmaths and @adamDhalla, we're working on a solution. This may require changes to the wandb logger in PyTorch Lightning, which could take a couple of weeks to get into a release. We'll let you folks know here when a branch is ready.

This issue is stale because it has been open 60 days with no activity.

@KyleGoyette Hi, any progress on this issue? I didn't use pytorch-lightning; I got this issue while using transformers and pytorch-xla.

@prikmm Either the next release or the one after it will have a new experimental mode of running that supports this case.

This issue is stale because it has been open 60 days with no activity.

I also have this error when using tqdm on my PyTorch DataLoader.

Hey, I've still got this issue training on TPUs with torch XLA.

I'm still getting this issue using pytorch-lightning in jupyter-lab... any updates?

Still getting this issue in Kaggle notebooks when using PyTorch Lightning.

Thumbs up, same issue.

Hi, and thanks for reporting this issue. We are currently working on improvements to our multiprocessing support and were wondering if you would be interested in trying them out. The new logic should be more robust and allow more flexibility when using multiprocessing with … If you are interested in trying it out, see this link: https://github.com/wandb/client/blob/master/docs/dev/wandb-service-user.md

Note that this is still experimental: although we have tested it, it is still being actively developed and improved.

Hi, we just introduced a tentative fix. To try it, upgrade wandb and install PyTorch Lightning from GitHub:

```shell
pip install --upgrade wandb
pip install --upgrade git+https://github.com/PytorchLightning/pytorch-lightning.git
```

This unfortunately does not work for me; I'm still getting the error.

Could you confirm which versions you are using and whether you have reproducible code?

I still have the same issue on Colab. Reproducible Colab code:

Hi @borisdayma, I ran into the same problem with

@leoleoasd I think you can just add

Also just encountered this issue, with

@zplizzi Glad to hear that this solved your issue. By the way, we have a pre-release where the service is activated by default if you want to try it out: https://docs.wandb.ai/guides/track/advanced/distributed-training#wandb-service-beta

The wandb service is enabled by default [1][2] starting with wandb sdk 0.13.0. This commit adjusts the requirements accordingly. The setup call is still necessary, since we later initialize wandb in subprocesses. [1] wandb/wandb#1994 (comment) [2] https://docs.wandb.ai/guides/track/advanced/distributed-training#w-and-b-sdk-0.13.0-and-above

Hi, I got the same issue using PyTorch Lightning in Google Colab!

Hi All,

I'm getting an error when trying to log my metrics and hyperparameters to W&B via PyTorch Lightning while running on 8 TPU cores.

I first initialize the Weights and Biases run and project using Lightning's `WandbLogger` class, which essentially runs `wandb.init()`. That goes fine. But when I then run the `Trainer` on 8 TPU cores with the keyword argument `logger=my_WandbLogger`, I get the error `AssertionError: can only test a child process`. Note that I tried this on a single TPU core, and that went fine and dandy, so it seems to be a problem with the distributed processing part of things.
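A likely explanation, sketched with plain Python rather than wandb or Lightning code: in CPython, `multiprocessing.Process.is_alive()` asserts that it is called from the process that started the child, and the assertion message is exactly `can only test a child process`. The `_publish` check quoted in the comments calls `self._process.is_alive()`, so if the run is set up in the main process and TPU worker processes later inherit that process handle, the check would raise in the workers but not in a single-process run. The sketch assumes a POSIX system where the `fork` start method is available; `fake_backend` and `probe_from_worker` are hypothetical stand-ins for the wandb backend process and a TPU worker, not real wandb or torch_xla code.

```python
import multiprocessing as mp
import time


def fake_backend() -> None:
    # Hypothetical stand-in for a long-running backend process.
    time.sleep(30)


def probe_from_worker(backend_proc, results) -> None:
    # Runs in a *different* child process than the one that started
    # backend_proc, like a worker spawned after the logger was set up.
    try:
        results.put(("ok", backend_proc.is_alive()))
    except AssertionError as exc:
        results.put(("error", str(exc)))


def demo():
    # "fork" lets the worker inherit backend_proc without pickling it (POSIX only).
    ctx = mp.get_context("fork")
    backend_proc = ctx.Process(target=fake_backend)
    backend_proc.start()
    try:
        # Single-process case: the creator may test its own child.
        from_parent = backend_proc.is_alive()

        # Multi-process case: a sibling process tests the same handle.
        results = ctx.Queue()
        worker = ctx.Process(target=probe_from_worker, args=(backend_proc, results))
        worker.start()
        from_worker = results.get(timeout=10)
        worker.join()
    finally:
        backend_proc.terminate()
        backend_proc.join()
    return from_parent, from_worker


if __name__ == "__main__":
    print(demo())
```

Running it should print `(True, ('error', 'can only test a child process'))`: the process that created the handle can probe it, while a sibling process holding the same handle trips the assertion.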

How to reproduce

This isn't my code, but someone had the same issue a while back, although I couldn't find their solution. It's done using the bug-reproducer template ('The Boring Model') that Pytorch Lightning uses. Reproduction HERE.

I'm running things on Google Colab, with PyTorch Lightning version 1.2.4 (the most recent) and W&B version 0.10.22 (one version behind the latest).

Here's the full error stack trace if you're curious

I'm wondering if there are any temporary workarounds for now since I need to find a way to connect and things are a bit time-sensitive!
