
Error: invalid input 'encoder_attention_mask' #23

Comments

Could you provide the command used to convert it? Which task did you use? It may be because you are not exporting using The command I would use is:

Yep, that's the exact command I used. If you'd like, I could upload the files to your HuggingFace repo (https://huggingface.co/Xenova/transformers.js/tree/main) to make sure they look ok.

|

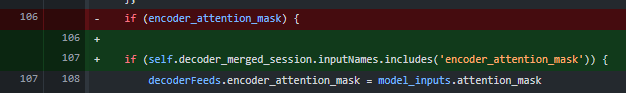

No worries - I'll convert it on my side. Then it's most likely something I'd need to fix. The strange thing is, other model types (with the same model type, i.e., "bart") need the encoder attention mask (vs. what you are showing, which doesn't). |
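
One way to guard against this kind of mismatch (some exported "bart" models declaring an `encoder_attention_mask` input and others not) is to pass only the inputs the session actually declares. The sketch below is an illustration, not necessarily the fix that was pushed: `filterFeeds` is a hypothetical helper, the feed names other than `encoder_attention_mask` are placeholders, and it assumes the session object exposes `inputNames` as ONNX Runtime's JavaScript `InferenceSession` does.

```javascript
// Hypothetical helper: keep only the feeds that the session declares as inputs.
// `session` is assumed to expose `inputNames` (an array of strings), as an
// ONNX Runtime InferenceSession does; any object with that shape works here.
function filterFeeds(session, feeds) {
  const allowed = new Set(session.inputNames);
  return Object.fromEntries(
    Object.entries(feeds).filter(([name]) => allowed.has(name))
  );
}

// Illustration with a stand-in session whose decoder does not take the mask.
// The input names below are placeholders, not taken from a real export.
const fakeSession = { inputNames: ['input_ids', 'encoder_hidden_states'] };
const feeds = {
  input_ids: 'ids-tensor',
  encoder_attention_mask: 'mask-tensor',
  encoder_hidden_states: 'hidden-tensor',
};
const safeFeeds = filterFeeds(fakeSession, feeds);
// `safeFeeds` no longer contains `encoder_attention_mask`, so passing it to
// the session would not trigger an "invalid input" error for that name.
```

With a session that does declare the mask, the same call leaves it in place, so one code path can serve both kinds of export.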

Got it working :) Will push changes soon.

Looks like the commit automatically closed the issue. Whoops. Can you confirm the changes work (I think you can install an npm package from GitHub), and if so, I will push this in version 1.2.6.

Sorry for the late response. It works great! Side note, any plans to set up TypeScript types, and/or support onnxruntime-node? Both would be super useful. I'd be open to helping implement it as well.

Great!

Absolutely! We currently have a couple of people working on the TypeScript side of things (#26 and #28). For onnxruntime-node, I suppose it would be a question of how to support both web and node versions of onnxruntime without duplicating code. If you have any ideas, or know how to do that, feel free to open up a PR!
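
As a rough idea of how the two runtimes could share one code path: both `onnxruntime-node` and `onnxruntime-web` expose the same `InferenceSession` and `Tensor` interface, so the package could be chosen once at startup and everything else written against that shared interface. This is only a sketch under that assumption; `pickBackendName` and `loadOnnxRuntime` are hypothetical names, not part of this project, and a bundler may need extra configuration to handle the dynamic import.

```javascript
// Hypothetical helper: decide which ONNX Runtime package to load.
// `proc` defaults to the global `process` object, which is undefined in browsers.
function pickBackendName(proc = globalThis.process) {
  const isNode = Boolean(proc && proc.versions && proc.versions.node);
  return isNode ? 'onnxruntime-node' : 'onnxruntime-web';
}

// Hypothetical loader: imports the chosen package lazily.
// `importer` is injectable so the selection logic can be exercised
// without either package being installed.
async function loadOnnxRuntime(
  importer = (name) => import(name),
  proc = globalThis.process
) {
  const ort = await importer(pickBackendName(proc));
  // CommonJS packages loaded via dynamic import arrive under `default`.
  return ort.default ?? ort;
}
```

The rest of the library would then call `loadOnnxRuntime()` once and use the returned module's `InferenceSession` and `Tensor` without caring which package it came from.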

I'm trying to run a slightly larger model (https://huggingface.co/facebook/bart-large-cnn). It's a BART model, and I converted it to .onnx successfully using your script. The size comes out to ~1 GB.

I get this error when running the summarization and text2text generation pipelines:
