
face_template values #14

Comments


These look like coordinates of five points. See the comment above the line which you quoted:

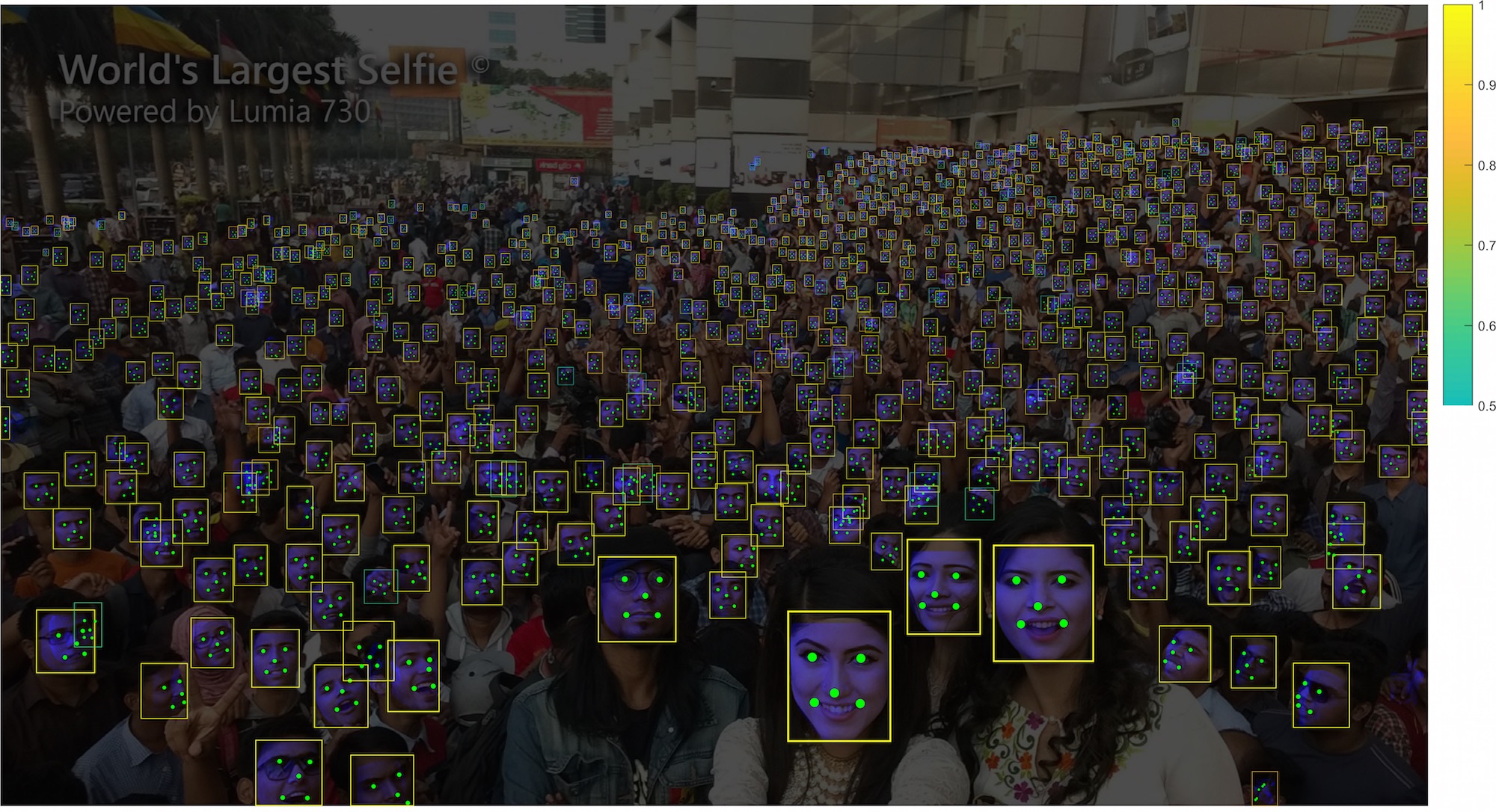

See the 5 colored dots on this image:
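For anyone wondering how five (x, y) points like these get used: a typical pipeline (an assumption here, not a reading of this repo's code) detects the same five landmarks on each face, fits a similarity transform (rotation, uniform scale, translation) that maps them onto the template, and warps the image with it. The sketch below does the least-squares fit in plain Python by treating points as complex numbers. `EXAMPLE_TEMPLATE`, `fit_similarity`, `apply_similarity` and `to_affine_2x3` are hypothetical names, and the template values are placeholders, not the repo's `face_template`.

```python
from typing import List, Tuple

Point = Tuple[float, float]

# Placeholder 5-point template for a 112x112 crop, in (x, y) pixels:
# eye, eye, nose tip, mouth corner, mouth corner (image left to right).
# Illustrative values only, NOT the repo's face_template.
EXAMPLE_TEMPLATE: List[Point] = [
    (38.0, 52.0), (74.0, 52.0), (56.0, 72.0), (42.0, 92.0), (71.0, 92.0),
]


def fit_similarity(src: List[Point], dst: List[Point]) -> Tuple[complex, complex]:
    """Least-squares similarity transform with dst ~= a * src + b.

    Points are treated as complex numbers x + iy: the complex factor `a`
    holds rotation and uniform scale, `b` holds the translation.
    """
    if len(src) != len(dst) or len(src) < 2:
        raise ValueError("need at least two corresponding points")
    zs = [complex(x, y) for x, y in src]
    ws = [complex(x, y) for x, y in dst]
    zm = sum(zs) / len(zs)
    wm = sum(ws) / len(ws)
    num = sum((z - zm).conjugate() * (w - wm) for z, w in zip(zs, ws))
    den = sum(abs(z - zm) ** 2 for z in zs)
    a = num / den
    return a, wm - a * zm


def apply_similarity(a: complex, b: complex, pts: List[Point]) -> List[Point]:
    """Map each (x, y) through w = a * z + b."""
    mapped = [a * complex(x, y) + b for x, y in pts]
    return [(w.real, w.imag) for w in mapped]


def to_affine_2x3(a: complex, b: complex) -> List[List[float]]:
    """Same transform as a 2x3 matrix [[A, -B, tx], [B, A, ty]]."""
    return [[a.real, -a.imag, b.real], [a.imag, a.real, b.imag]]


# Fake "detected" landmarks: the template rotated, scaled and shifted.
detected = apply_similarity(complex(0.8, 0.6), complex(30, 12), EXAMPLE_TEMPLATE)
a, b = fit_similarity(detected, EXAMPLE_TEMPLATE)
aligned = apply_similarity(a, b, detected)  # approximately EXAMPLE_TEMPLATE
```

The 2x3 matrix uses the layout OpenCV's `cv2.warpAffine` expects (convert it to a float NumPy array first); `cv2.estimateAffinePartial2D` is an off-the-shelf alternative for the fit itself.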


Thanks Woctezuma! I see it says it's for FFHQ. Can I use these with LFW? I'm still learning... thank you.


That comment got me worried 🤣


For FFHQ, face alignment uses the dlib library and is based on 68 landmarks. See: http://dlib.net/face_landmark_detection_ex.cpp.html

So the use of 5 landmarks is not specific to FFHQ at all. I think I saw it first in RetinaFace.
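If your detector gives dlib's 68 landmarks rather than five, one common reduction (assumed here; as far as I know, FFHQ's own alignment script uses the eye and mouth landmarks to build an oriented crop rectangle rather than a five-point template) relies on the 0-based iBUG 300-W indices that dlib's 68-point model follows: average points 36 to 41 and 42 to 47 for the two eye centers, and take point 30 as the nose tip and points 48 and 54 as the mouth corners. `dlib68_to_five` is a hypothetical helper.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def _mean(points: Sequence[Point]) -> Point:
    """Centroid of a group of (x, y) points."""
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def dlib68_to_five(landmarks: Sequence[Point]) -> List[Point]:
    """Reduce 68 dlib landmarks (0-based iBUG 300-W indexing) to 5 points.

    Output order, in image coordinates: eye on the image's left, eye on the
    image's right, nose tip, left mouth corner, right mouth corner.
    """
    if len(landmarks) != 68:
        raise ValueError(f"expected 68 landmarks, got {len(landmarks)}")
    eye_left = _mean(landmarks[36:42])   # points 36..41
    eye_right = _mean(landmarks[42:48])  # points 42..47
    nose_tip = tuple(landmarks[30])
    mouth_left = tuple(landmarks[48])
    mouth_right = tuple(landmarks[54])
    return [eye_left, eye_right, nose_tip, mouth_left, mouth_right]
```

With dlib's Python bindings the input would typically come from `[(p.x, p.y) for p in shape.parts()]`, where `shape` is the result of calling a `dlib.shape_predictor` on a face rectangle.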


Perfect! Thank you so much for answering all my questions!

How do I calculate these numbers?

I want to calculate them for LFW and other datasets. I'm very new, so I would appreciate it if you could show how to calculate them. 🍵

Thank you so much for your amazing library!
