
Loss is very unstable during training and the character error rate decreases slowly #35

Comments


This should be normal. Which dataset are you training on?

The WenetSpeech dataset plus the first three small open-source datasets.


The character error rate was already 0.57 at the start? What batch size are you using?


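That is normal. There is usually a large drop somewhere after 20 epochs. You can also use VisualDL to inspect the training logs and check whether the loss is trending downward. Look at the overall trend rather than any single value, because the audio clips vary in length.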
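One common way to read the overall trend instead of individual noisy values is an exponential moving average (EMA) over the logged per-step losses. The sketch below is a generic illustration, not VisualDL's or this project's code; the function name `ema_smooth`, the smoothing weight of 0.8 and the sample loss values are all hypothetical.

```python
from typing import Iterable, List, Optional


def ema_smooth(values: Iterable[float], weight: float = 0.9) -> List[float]:
    """Exponential moving average: each output blends the previous smoothed
    value (with factor `weight`) and the new raw value (with 1 - weight)."""
    smoothed: List[float] = []
    last: Optional[float] = None
    for v in values:
        # The first point has no history, so it is used as-is.
        last = v if last is None else weight * last + (1.0 - weight) * v
        smoothed.append(last)
    return smoothed


if __name__ == "__main__":
    # Hypothetical per-step losses that jump around, e.g. because some
    # batches contain much longer audio clips than others.
    raw = [40.0, 5.0, 30.0, 2.0, 25.0, 1.5, 20.0, 1.0]
    for step, (r, s) in enumerate(zip(raw, ema_smooth(raw, weight=0.8))):
        print(f"step {step}: raw={r:5.1f} smoothed={s:6.2f}")
```

A higher `weight` gives a smoother but more lagging curve; if the smoothed curve keeps falling, training is progressing even when individual steps swing between 1 and 40.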


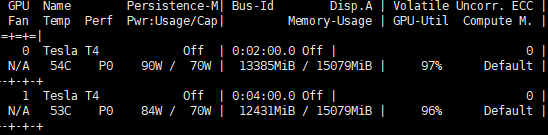

Training is taking quite a while. How much GPU memory does it use during training?

The two GPUs use a little over 27 GB in total, and training really is rather slow: one epoch takes 37 or 38 hours.


Then there is no problem. Wait and see how things change once training passes 20 epochs.
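To put "wait until after 20 epochs" in wall-clock terms, here is a quick estimate using the figures from this thread (14 epochs done, 37 to 38 hours per epoch). Treating epoch 20 as the target is an assumption standing in for "20-odd", and `remaining_hours` is a hypothetical helper.

```python
def remaining_hours(done_epochs: int, target_epochs: int, hours_per_epoch: float) -> float:
    """Wall-clock hours still needed to go from done_epochs to target_epochs."""
    return max(target_epochs - done_epochs, 0) * hours_per_epoch


if __name__ == "__main__":
    # 14 epochs done and 37 to 38 h/epoch come from this thread;
    # the target of 20 epochs is an assumed lower bound.
    for hpe in (37.0, 38.0):
        h = remaining_hours(14, 20, hpe)
        print(f"{hpe:.0f} h/epoch -> {h:.0f} h remaining (about {h / 24:.1f} days)")
```

Six more epochs at this speed comes to roughly 222 to 228 hours, i.e. a bit over nine days.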

OK, thanks for clearing that up.


Any change?


By the way, a larger model is now available; see Line 15 in 5141986.


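The model has been trained for 14 epochs so far. During training the loss fluctuates frequently, from a low of 1 to over 40, and the character error rate has dropped from an initial 0.57 to 0.49. Is this normal?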
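For reference, character error rate (CER) is conventionally the character-level edit distance (substitutions + insertions + deletions) between the hypothesis and the reference, divided by the number of reference characters; over a test set the distances and reference lengths are summed before dividing. The sketch below implements that standard definition. It is an assumption that this project computes its reported CER the same way (text normalization such as removing spaces or punctuation may differ), and the function names are hypothetical.

```python
from typing import Iterable, Tuple


def levenshtein(ref: str, hyp: str) -> int:
    """Minimum number of character substitutions, insertions and deletions
    needed to turn hyp into ref (dynamic programming with two rows)."""
    prev = list(range(len(hyp) + 1))
    for i, rc in enumerate(ref, start=1):
        cur = [i] + [0] * len(hyp)
        for j, hc in enumerate(hyp, start=1):
            cur[j] = min(
                prev[j] + 1,               # reference char missing from hyp
                cur[j - 1] + 1,            # extra char in hyp
                prev[j - 1] + (rc != hc),  # substitution (or match, cost 0)
            )
        prev = cur
    return prev[-1]


def cer(ref: str, hyp: str) -> float:
    """CER for one utterance: edit distance / reference length."""
    if not ref:
        raise ValueError("reference transcript must be non-empty")
    return levenshtein(ref, hyp) / len(ref)


def corpus_cer(pairs: Iterable[Tuple[str, str]]) -> float:
    """CER over a set of (reference, hypothesis) pairs: total edits / total reference chars."""
    edits = total = 0
    for ref, hyp in pairs:
        edits += levenshtein(ref, hyp)
        total += len(ref)
    return edits / total


if __name__ == "__main__":
    # One substitution (气 -> 汽) and one deletion (很) out of 6 reference characters.
    print(cer("今天天气很好", "今天天汽好"))
```

Under this definition, going from 0.57 to 0.49 means roughly 8 fewer character errors per 100 reference characters.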
