As the global manufacturing industry enters its fourth revolution, innovations such as robotics, automation, and artificial intelligence (AI) are set to take over. The number of active industrial robots worldwide is increasing by approximately 14% year on year, and automation creates new types of robots with improved utility and function. Factories of the future will likely feature robots and humans working side-by-side to meet consumer demand—a new world for which business owners should be prepared.

Based on current projections, AI is expected to have the ability to increase labor productivity by up to 40% by 2035, and robots are generally designed to take on repetitive tasks and allow workers to focus on more intensive duties. A major benefit of robots in automating large manufacturing operations is that some tasks could effectively be completed 24/7, thereby boosting production output without any additional labor costs.

RT Knits Ltd bought a 6dof robot to retrieve garments from printing machines in the Printing Department. It is the first robot of the brand Borunte which the company invested in. Hence, I wanted to take this opportunity to explore the mathematical and physical models of a 6-axis industrial robot and build a Digital Twin of the machine for simulation of future processes.

Through this project, I want to provide a basic understanding of how robots work and develop models to control them. We will find many different kinds of robots with different axes and different mechanical configurations in the industry. However, the 6-axes one is the most commonly used, and also, it is one of the most complicated ones. Hence, if we understand how this one works, we should be able to solve models for all the others.

Simulation is an extremely important part of the development of a robot, and in general of any kind of industrial machine or process. Creating a simulated version of our machine, a so-called digital twin, will give you many advantages:

- Model Validation: We can validate the

correctnessof our model. For example, after we write the inverse kinematics of our robot we can check that the results are correct in simulation, before we go on the real machine. This makes testing muchsaferandcheaper. - Design Planning: We can also plan and

optimizeour entire production line design. In simulation we can make sure our robots are in the best position to avoid singularities and have time-optimal trajectories for the tasks to be completed. - Process Monitoring: We can remotely connect the simulation to the real machine, and

controlit or simply view it from our office.

The main goal of this project is to understand the mathematical concepts behind a standard 6-axes industrial manipulator and build a Digital Twin in Unity which will allow us to simulate any other tasks.

A special thanks to Prof. Fabrizio for the guidance on this project. Most of the concepts explained below are based on his materials.

- Research on Industrial Robots

- Coordinate Frames and Vectors

- Forward Kinematics

- Inverse Kinematics

- Path Planning

- Workspace Monitoring

- Trajectory Generation

- Mechanics

- Implementation

Most industrial robots are mechanical arms controlled by electric servo motors and programmed to perform a specific task. This task is mainly repetitive, tedious and boring such that robots are more fitted to perform these tasks than humans. It is important to note here that these robots only perform actions that it was programmed for and does not utilizes any form of intelligence. It has no visual inputs and no AI that can allow it to take decisions on the go. However, a lot of research is being done that uses Reinforcement Learning so that the robot teaches itself to perform task such as grabbing objects of different sizes and shapes.

Fig. Industrial robots in automobile industry.

Constituting of mechanical arms, the industrial robots can lift objects ranging from 1Kg to 400 Kg. The mechanical arm robots can be found in all sorts of factories but they are mainly suited in metal working and automobile industry for cutting, painting, welding and assembling tasks. At RT Knits, we will use the robot for packaging, palletizing and handling of goods.

Industrial robots differ in various configurations however, they can further be segmented into two main parts: Serial Kinematic and Paralell Kinematic.

Serial kinematics consists of an open chain of links similar to the human arm. The should, arm, wrists and fingers all form a chain of links and joints. This configuration is normally used for higher payloads and have a much more flexibility to reach a desired target location with more than one possible orientations. The flexibility is measured w.r.t the number of degrees of freedom(dof). Our robotic arm has 6 dof: 3 in position and 3 in orientation. Serial kinematics also allows for larger workspaces such that the robot can reach object far from its base. However, their two main disadvantage sare that they are slower and less accurate than the paralell kinematic system. This happends because each link adds some weight and errors to the chain hence, the total error is cummulative of the number of joints. It is possible to build a robot with more joints however, we also increases the complexity to control the entity.

Fig. Da Vinci's drawing of human arm(left) and Serial kinematic drawing of robotic arm(right).

To sum up about Serial Kinematics:

- Higher payloads(5 Kg - 300 Kg)

- Higher number of dof

- Larger workspace

- Slower movements

- Lower accuracy

In a paralell manipulator, the actuated joints are not all attached to the ends of the other actuated joints. Instead the actuated joints are placed in paralell such that each joint is connected both to the end-effector and to the ground in the manipulator. This configuration is mainly used in setting where the payload to be lifted is lower(<10Kg) and the required dof is only 3 or 4. However, there exists robots which can lift higher payloads and have 6 dof such as the Gough-Stewart platform but these are not common in industrial setting. In contrast to serial kinematics, paralell manipulators have a limited workplace but can move much faster and have a higher accuracy because errors do not add up between joints.

Fig. Figure shows 6 actuated joints connected in paralell.

To sum up about paralell kinematic:

- Lower payload(<10 Kg)

- Fewer dof

- Smaller workplace

- Faster movements

- Higher accuracy

The video below shows the distinction between serial and paralell manipulators.

KINEMATICS._.Serial.robot.vs.Parallel.robot.This.is.not.CGI.mp4

Fig 1. ([Video by Oleksandr Stepanenko](https://www.youtube.com/watch?v=3fbmguBgVPA))

Our 6-axis robot is a serial kinematic structure with 6 links connected together by 6 joints. The robot starts with a base fixed to the ground. It has a motor underneath to allow rotation in the horizontal plane. It has a first axis connected to the base to allow the rotation motion - it is called J1 which stands for Joint 1. Then we have a second and a third axis called J2 and J3. Note here that J1, J2 and J3 is called the arm of the robot.

We finish with joints J4, J5 and J6 which forms the wrist of the robot. It is important to observe here that the rotation of the wrist does not affect the motion of the arm however, the inverse is not true.

We also have a Mounting Point(MP) which shows where the tool is mounted on the robot and a Tool Center Point(TCP) which is the very last edge of the robot, including the tool, which is also called the End-Effector. If there is no addtional tool, then MP = TCP.

The figure below shows the joints and links of the robot.

Fig. Description of 6-axis Anthropomorphic Robotic Arm.

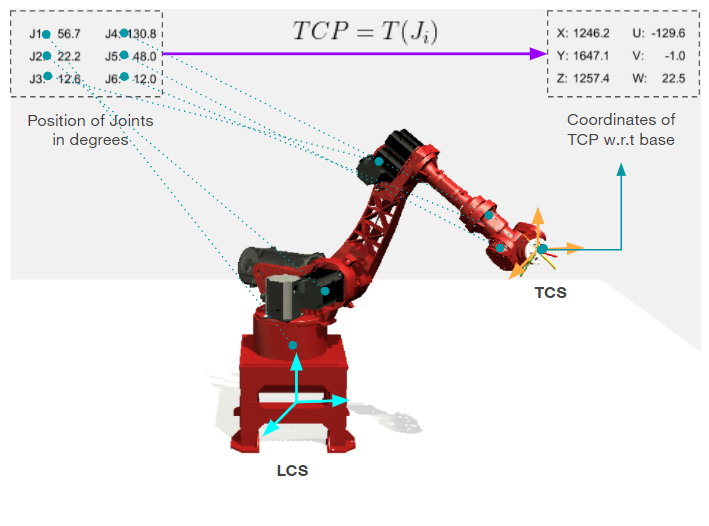

The TCP has 6 coordinates: 3 of them describes its position(XYZ) in space and the other 3 describes its orientation(UVW). The position of the joints axes and the position of the TCP is related to each other. The process of finding position and orientation of TCP given Joints values is called Forward or Direct Kinematics. Vice versa, finding our Joints values corresponding to a given TCP position and orientation is called Inverse Kinematics.

A key requirement in robotics programming is keeping track of the positions and velocities of objects in space. A frame which is essentially a coordinate system with a specific orientation and position. For example, consider the situation whereby we ask a robot to detecta blue ball in a room. It will be futile if the robot report to us that the blue is 1m from the robot because now we want to know where in the room is that blue ball. Providing this information requires us to know each of the following:

- The position of the blue ball relative to the camera.

- The position and orientation of the camera relative to the base of the robot.

- The position of the robot in the room.

We need to develop a framework for representing these relative locations and using that information to translate coordinates from one frame of reference to another. In robotics, we have 4 main geometrical frameworks:

The Global Coordinate System defines the origin and orientation of our world. If our workspace consists of various robots then it is useful to define a unique global reference system so that everyone understands that same global coordinate.

The World coordinate frame is indicated with the subscript w. This coordinate frame is fixed at a known location in space and assumed not to move over time.

Fig. Global Coordinate System(GCS) in green.

In a workspace with several robots each machine will have its own local coordinate system(LCS or MCS) which is fundamental in programming each individual robot. However, the other robots will not understand and interact with a point specified in a machine's local coordinate system. If we have only one robot then; LCS = GCS.

The machine coordinate frame is indicated with the subscript r. We can envision this coordinate frame as being attached to the base of the robot so that the origin of the frame moves as the robot moves. In other words, the robot is always located at (0, 0, 0) in its own coordinate frame.

Fig. Local Coordinate System(LCS) of each robot in blue and GCS in green.

The origin of the Tool Coordinate System is located at the TCP of the robot. If we have no tool attached to the robot, then the TCS is at the Mounting Point(MP) of the robot.

Fig. Tool Coordinate System(TCS) in yellow and GCS in green.

The most important frame for the operator in the Workpiece Coordinate System or Product Coordinate System. This frame is what the operator's sees and considers as the origin of its workplace and all the programmed points and movements refer to this system. We may have several products with which the robot will interact, hence we will have several WCS with their own origin and orientation.

Fig. TCS in yellow, GCS in green, LCS in blue and Workpiece Coordinate System(WCS) in pink.

Our goal is to understand how to move from one frame to another and how to transform coordinate points across different frames.

Before we dive into frame operations, it is best we establish some conventions that we will use throughout the project. We have a left-handed coordinate system and a right-handed coordinate system. In a right-handed coordinate system we determine the direction of the z-axis by aiming the pointer finger of the right hand along the positive x-axis and curling our palm toward the positive y-axis. The thumb will then point in the direction of positive z-axis as shown below. Note that there is no way to rotate these two coordinate systems so that they align. They represent two incompatible ways of representing three-dimensional coordinates. This means that whenever we provide coordinates in three dimensions we must specify whether we are using a left-handed or right-handed system.

Fig. Left and right-handed coordinate systems and Right-hand rule for determining the direction of positive rotations around an axis.

We also need to establish a convention for describing the direction of a rotation. Again, we will follow a right-hand rule. To determine the direction of positive rotation around a particular axis we point the thumb of the right hand along the axis in question. The fingers will then curl around the axis in the direction of positive rotation.

The position and orientation of an object in space is referred to as its pose. Any description of an object's pose must always be made in relation to some to

some coordinate frame. It is possible to translate a point between any two coordinate frames. For example, given the coordinates of the blue ball in the camera coordinate frame, we can determine its coordinates in the robot coordinate frame, and then in the room coordinate frame. In order to accomplish this we need to specify how poses are represented.

Suppose we have a point p in space and we have two different observers based in frame and

respectively. Both observers are looking at point

p and the first observer will record the coordinates of the point according to its frame as and consecutively the second observer records point

p as . Now, the question is if we know the coordinate of point

p in the frame then how do we find the position of that point from the perspective of frame

. If we want to convert

to

then we need to know how the frames

and

are related to each other. As such, the three frame operations are:

- Translation

- Rotation

- Translation + Rotation

Suppose we are based in frame and we observe our point

p with coordinates . Its position from this perspective is

with coordinates

. We then move our based to a new frame

which is translated by an offset

. That same point

p when observed from that new frame has a new position called

with coordinates

. Translation is a

linear operation therefore, when coordinate frames are separated by a pure translation transforming points between frames is straightforward: we only need to add or subtract the three coordinate offsets.Hence, the new position of p is simply:

Breaking up for each coordinates, we get:

The offset expresses how the old frame

is seen from the new frame

. In the example below, we have a negative offset along the y-axis.

Specifying orientation in three dimensions is more complicated than specifying position. There are several approaches, each with its own strengths and weaknesses.

In Euler angles orientation is expressed as a sequence of three rotations around the three coordinate axes. These three rotations are traditionally referred to as roll, pitch and yaw. When working with Euler angles it is necessary to specify the order that the rotations will be applied: a 90o rotation around the x-axis followed by a 90o rotation around the y-axis does not result in the same orientaion as the same rotations applied in the opposite order. There are, in fact, twelve valid rotation orderings: xyz, yzx, zxy, xzy, zyx, yxz, zxz, xyx, yzy, zyz, xzx, and yxy.

As convention, each rotation is performed around the axes of a coordinate frame aligned with the earlier rotations which is referred as relative or intrinsic rotations.

To find out how the position of our p changes when rotating our base frame, we need to pre-multiply the position coordinates with a rotation matrix. The content of the matrix depends around which axis we rotate and the angle of rotation . Below shows the rotation matrices when rotating around each individual axes:

- X-axis:

- Y-axis:

- Z-axis:

In all cases, when the angle of rotation is 0 degrees, the rotation matrix reduces to the Identity matrix. That is the diagonal of the matrix becomes one and the rest becomes zero and that is the reason we have cosine along the diagonal and sines outside of it.

Furthermore, when we tranpose the rotation matrix we find the same matrix but with an opposite rotation angle, i.e, the rotation in the opposite angle.

It is possible to represent any orienation as a product of three rotation matrices around the x, y and z axes. It is straightforward to convert from an Euler angle representation to the corresponding rotation matrix. For Euler angles represented using relative rotations, the order is reversed.That is, a 45° roration about the x-axis, followed by 30° about the -axis and a 75° about the z-axis is represented as

In general, rotation of robots are usually given in A,B and C angles arounf the X,Y and Z coordinate system. That is we first rotate of an angle A around the x-axis, then an angle B about the y-axis and finally an angle C about the z-axis. The notation is referred as Improper Euler angles, or RPY angles or Cardan angles.

Two important properties of rotation matrices are that:

- Any orientation can be achieved composing Euler angles which is relatively easy to perform.

- Any rotation matrix can be decomposed in Euler angles although that is is not that straightforward.

The rotation matrix then becomes:

Note that when decomposing that matrix to find the angle A,B and C we can get two results for an angle. That is, we can reach the same global rotation with different individual rotations around the base axes. Euler angles are not unique! Combined with the choice of axis orderings, there are 24 possible conventions for specifying Euler angles.

When ,

. The matrix is simplied but now A and C are no longer

linearly independent. We are facing a singularity which is a partivcular configuration whreby an infinite number of solutions exist. We can only compute the sum and difference of A and C but not their individual values.

Some important properties of the rotation matrix:

- The rotation matrix is an

orthogonalmatrix, i.e, its transpose is equal to its inverse. This is important for us and calculating the inverse of the matrix can be difficult whereas transposing it is eaxy and quick.

- The

determinantof the matrix is equal to1, a property which is useful when checking if the rotation matrix has the correct input. If the determinant is not1then it is not a correct rotational matrix however, that does not mean that any matrix with determinant1is a rotational matrix.

- The product of the matrixes are

associativebut notcommutative. This means that rotating about x first and then z is not the same thing as rotating about z first and then x.

To sum up:

Euler angles are relatively easy to visualize, but they are inconvenient to work with from a mathematical point of view. The key problem is that the mapping from spatial orientations to Euler angles is discontinuous: small changes in orientation may cause big jumps in the required representation. This can cause difficulties when we need to smoothly update the orientation of a moving object over time. A possible solution to this would be to use Quaternions. As a general rule in robotics, we use Euler angles only for the interface of the operator(Visualization) but we use Quaternions for all internal calculations(Interpolation).

Now we need to put translation and rotation together into an individual transformation. The Homogeneous transformation matrix T, combines the rotation matrix R, for example around the Z-axis, but can be any generic rotation around the XYZ frame, and then the translational offset Δ, which can also be any generic translation along the XYZ frame. The combination of the two is a 4x4 matrix, filled with zeros under the rotation matrix and with a 1 under the translational offset. Combining the two operations together means that with a single matrix multiplication we can perform both translation and rotation of our frame at the same time.

It is important to remember that the order is not commutative: we first rotate, and then translate. If we would first translate and then rotate, the result would be totally different.

If we have a point in one frame and want to find out its coordinates as seen from a different frame, we simply pre-multiply by the homogenous matrix. Note that because the matrix is a 4x4 and the point only has three coordinates, we need to pad its vector with a

1 at the end. The final expression for looks like that. If we only have a translation and no rotation, the cosine elements along the diagonal of the rotation part will all be

1s and the elements outside the diagonal will be 0s, so that we fall back to a simple linear offset addition for the translational effects. Similarly, if the translation offset is zero, then we only have the rotation elements left.

Example:

Imagine we have a very simple mechanical structure with only one rotating axis. Note that the position of the TCP indicated by the white dot keeps changing following the rotating axis. Now we want to find the position of the TCP in the fixed robot base frame. For that we need some geometrical parameters: the length of the arm l, and its height h. The position of the TCP as seen from the mobile frame of the robot’s moving link is actually constant. We call it with coordinates

.

Now, to find out from

, we need to find out what transformation modifies the old mobile frame into the new fixed frame at the base. We first have a rotation by an angle θ around the Z-axis, and then a translation of

h along Z. Note that both are positive as seen from the new frame. So we can write down the homogenous transformation using an offset h along the Z-axis and a rotation θ around the same Z axis. h is always constant, but the angle θ can change all the time because it is driven by the rotating axis. So we pre-multiply by the matrix

T to find .

To sum up:

-

If we have the position of a point given in one frame and want to find its position from a different frame we need to apply some transformations.

-

The first and simplest is a

linear translation, which mathematically is a simpleadditionof anoffset. -

If the frames are rotated with respect to each other, we need to use a

rotation matrix, which is built as a combination of rotations aroundindividualaxes. -

Finally, if both

translation and rotationsare present, we can use ahomogeneous matrix, which combines both operations into asingleone.

After studying frame operations, we can now build a geometrical model that will allow us to calculate the position and orientation of each link. This model is knows as a Kinematic model. There are two types of kinematic model: forward transformation(or Direct transformation) and inverse transformation.

Forward kinematics is defined as the process of calculating the position and orientation of the TCP given the current values of the joint axes. We know the position of the joints from the values obtained from the encoders of the electric servo motors. Using these values, we need to calculate the position XYZ and orientation UVW of the TCP always w.r.t the base frame. This function is a simple transformation between the base frame and the TCP frame.

The final transformation matrix is a homogeneous transformation that depends on all the joints positions and mechanical parameters of the robot. What we do is simply compose a chain of frames translations and rotations from the base to the TCP.

The final point can be expressed in the base frame as its local coordinates

pre-multiplied by the homogeneous frame transformation. Note that now all those frames can rotate and translate with respect to each other, according to the joints values, so their angles are not constant, but

parameterized.

So what are these parameters of our kinematic model? That is, what translations and rotations we observe between the different frames?

-

we have all the

rotationsintroduces by the movements of the joints: from joint1to6. These are not constant in time and change accordingly to the motors’ movements. -

we have all the

translationaleffects due to the mechanical size of the arm. The distance from each joint to the next as shown in the picture above, from the base to the first joint, all the way to the last one. Unlike the rotational parameters, the translational parameters are actually constant, because the mechanical size of the arm does not change in time.

We will now build our kinematic model step by step from the base to the TCP.

We have a translation and a rotation along the Z-axis. The homogeneous transformation, , for this first step is built from the rotation matrix

and the offset

.

- Translation:

- Rotation J1 around Z:

- Homogeneous Equation:

The second step goes from the first to the second joint. Here we have two translations, one along X and one along Z. Plus the rotation of the second joint around the Y axis. We build the homogeneous transformation matrix accordingly.

- Translation:

- Rotation J2 around Y:

- Homogeneous Equation:

From J2 to J3 we have a vertical translation along the Z axis: a3z. This is usually the longest and heaviest arm of the robot and the motor at joint 2 needs to be quite large. We build the homogeneous transformation matrix using the translation along the

Z-axis and then the rotation around the Y-axis.

- Translation:

- Rotation J3 around Y:

- Homogeneous Equation:

The actual mechanical configuration can change according to the robot we are working on. Here, we have only two offsets, one along Z and another one along X. In other robots, there might also be one offset along Y. The homogeneous equation is build using the translation a4x and a4z and the rotation matrix about X.

- Translation:

- Rotation J4 around X:

- Homogeneous Equation:

With the next step we reach the middle of the wrist called the wrist center point. We simply add a translation along the X axis and a rotation around Y.

- Translation:

- Rotation J5 around Y:

- Homogeneous Equation:

We finally reach the end of the robot with the last step: one additional translation and rotation along X.

- Translation:

- Rotation J6 around X:

- Homogeneous Equation:

The XYZ coordinates of the TCP as seen from the base frame can be obtained by pre-multiplying the position of the TCP in its local frame, which is zero, the origin of the TCP frame, by all the homogeneous transformations(T1 to T6) we obtained for each frame in the previous six steps. The advantage of this solution is that it can be applied to any open kinematic chain.

For the rotation coordinates ABC of the TCP we do the same thing, using the rotation matrices we built in the previous steps. Since the product is associative, we can split the calculations in two:

- The

first,secondandthirdjoints determine the rotation of thearm, from thebaseto thewrist center point. - Then the

fourth,fifthandsixthjoints determine the rotation of thewristfrom thewrist center pointto theTCP.

- Position:

- Rotation:

So far we have calculated the position and orientation of the TCP as seen from the local robot’s frame or Machine Coordinate System(MCS). Now we want to know the position of the TCP in the Global Coordinate System(GCS) so that other robots can also work with it.

For the position, we need to pre-multiply by the homogeneous matrix that describes the transformation between local machine coordinate(MCS) system and global world coordinate system(GCS).

For the orientation, it is enough to pre-multiply by the rotation matrix.

Now if the TCP is not the mounting point but instead we have a tool at the end of our MP then we need to add the homogeneous matrix and the rotation matrix after the multiplication chain.

- Position:

- Rotation:

In practical, there are axes of the robot that are internally mechanically coupled. For example, a movement of J5 causes a movement of the J6 even if the motor of J6 is not moving. Hence, we need to compensate for this effect in our calculation.

In the picture below, only J1 is moved and J2 is remained fixed. However, due to the mechanical coupling, J2 is forced to move even if the motor was not activated. The white dotted lines shows the difference between the actual positions reached by the arm with and without mechanical coupling. That difference is a function of the coupling coefficient between J1 and J2.

We normally solve the direct transformations between joints and TCP with the forward kinematic function. But the joints positions are what we read from the encoders, they do not represent the actual position of the joints angles if an additional mechanical coupling is present.

For our 6-axis robot, we introduce coupling coefficients in the forward kinematic model so that the actual position of the TCP is calculated correctly. Before we send those joints values from the encoders to the forward kinematics, we need to adjust them by the coupling coefficient: for example the actual position of J5 and J6 might be influenced by the position of the J4. In that case the final value is what the motor sees plus the coupling factor function of J4.

Up until now, we have studied forward kinematic whereby we are given the joint angles(J1 to J6) of the axes and we need to calculate the position and orientation of the TCP or End-Effector. We showed how we could use the Homogeneous transformation matrix H, to find the location of our end-effector.

In inverse kinematics we are given the TCP location and we are asked to find the joints angles(J1 to J6) that result in that particular TCP location. Inverse kinematics is more useful for us as this is the kind of situation that we will be dealing with in real-world. That is, we may know a particular object location that our end-effector will need to grab and we will then need to find out the desired joints angles of our robots to achieve this task of grabbing the object. Remember that the TCP coordinates are expressed relative to the base frame.

Robots are programmed by the operator in path space coordinates (XYZ), which are clearly understood. But motion control software acts directly on the motors, which move the joints of robots. So we need a way to transform back from path space to the joints space. More explanation about these two spaces are found below:

An industrial robot can be controlled in two spaces:

Joints spaceandCartesian space. Hence, there are two sets of position-mode motion commands that make an industrial robot move. For joint-space motion commands (sometimes incorrectly calledpoint-to-point commands), you simply specify — directly or indirectly — adesired set of joint positions, and the robot moves by translating or rotating each joint to the desired joint position, simultaneously and in a linear fashion. For Cartesian-space motion commands, you specify adesired pose for the end-effectorAND adesired Cartesian path(linear or circular). To find the necessary joint positions along the desired Cartesian path, the robot controller must calculate theinverse positionandvelocity kinematicsof the robot. Singularities arise when this calculation fails (for example, when you have division by zero) and must therefore be avoided.

Try jogging a six-axis robot arm in

Joint space, and the only time the robot will stop is when a joint hits alimitor when there is amechanical interference. In contrast, try jogging the same robot inCartesian spaceand the robot will frequently stop and refuse to go in certain directions, although it seems to be far from what you think is the workspace boundary. A robot singularity is a configuration in which the robot end-effector becomes blocked in certain directions.

Unfortunately, the inverse kinematics problem is so hard that sometimes it is even impossible to solve the problem in closed-form, that is we cannot write down equations to derive the joints axes values and a numerical approach is required. In other times, we will need a couple of assumptions and even geometrical intuition in order to solve the inverse kinematics.

For a 6-axes robot the condition that allows for a closed-form solution is the configuration of the wrist. We have two kinds of wrists: spherical and non-spherical wrist robots.

In the spherical wrist, the three rotating axes J4,J5,J6 all intersect at one point at the wrist center point. This configuration is the most common in the industry and allows for a nice closed-form solution.

In the non-spherical wrist, the rotation axes do not intersect at one point. This configuration requires a numerical solution(trial-and-error procedures - approximation to the solution of a mathematical equation) so it is mathematically more complex and computationally more expensive. However, it does not suffer from singularity issues and can always perform movements at constant speed. For that reason it is used often in painting applications, where a constant speed is strictly required to spray a uniform layer of paint.

Another problem that makes solving inverse kinematics complicated, is the fact that, given a particular target position and orientation of the TCP, there might be more than one way for the joint axes to configure in order to reach that pose. In other words, the solution to the inverse kinematics is not always unique!

In this example we have a target position which is same for both robot. Both robots reached the same target with two different configurations. Observe that the TCP position and orientation are absolutely the same in both cases. But the joint axes configurations are different!

We call the two options UP and DOWN. This is also a problem for the operator while programming the robot. In our software interface we need to provide a way for the operator to choose the preferred option, either up or down, or maybe we can automatically select the solution closest to the current joints configuration.

The next example is even more extreme. We move the two robots to the same target TCP pose and we notice how they select completely different solutions whereby the second motion is absolutely redundant and overly complex.

The main difference here is J1, which can either point in the front or the back of the robot, hence, the name FRONT and BACK.

The next example is more subtle. The final configurations look the same but they are not. While J1, J2 and J3 are in the same position and give the same pose to the arm, the last three joints are in different positions and give a different configuration to the wrist. Notice that the J5 has opposite values in the two cases, hence, we call one POSITIVE and the other NEGATIVE.

In all these previous examples we were able to find two distinct solutions to the inverse kinematics problem.

Now, we will see that there are cases where an infinite number of solutions are available. These critical points are called singularities, and occur for some specific target poses of the TCP. There are 3 types of singularities: Shoulder Singularity, Wrist Singularity and Elbow Singularity.

In the shoulder singularity, the wrist center point from J4, J5 and J6 aligns with the center point of J1. If we try to move from this position we will notice that the J1 and J6 will start to rotate very fast in opposite direction. The TCP will remain almost still causing a very inefficient robot motion. We can find a fixed solution for most joints, except for J1 and J6. These two are linearly dependent and we can only find their sum or difference. Therefore, an infinite number of possible solutions are available.

Recall that a matrix is singular when its determinant is zero. The associated linear system has an infinite number of solutions because two or more variables are dependent on each other.

In the wrist singularity, J5 is 0, while J4 and J6 are not independent anymore. We can move them around without modifying the final pose of the TCP. We have an infinite number of solutions. Normally, we manually pick a value for one of the two joints, for example J4, and then the other joint is automatically fixed.

Imn the elbow singularity, J2, J3 and the wrist center point aligns together. When these 3 points are aligned, the elbow of the robotics arm will get stuck in the so called elbow singularity no matter where the TCP is. Elbow singularities look like the robot has “reached too far”, causing the elbow to lock in position.

To sum up:

- the inverse kinematic problem is more complex than forward kinematics, not only because it is difficult to find a solution, but because sometimes there are many, or even infinite possible solutions we need to pick from.

- the definition of singularity is when the robot has an

infinitenumber of kinematic possibilities to reach the same end position - an infinite number of ways to get to thesamespot. - what a robot's kinetic algorithm is trying to do is solve a

complex equationof where to put all our axes(J1 to J6) to get our tool where we need it to be. The operator will just drag this robot somewhere and say record and we just take for granted that it gets there. But if there are aninfinitenumber of solutions to get there then it does not know which one to take hence, we find singularity and the robotfaults out.

Below is a video showing the singularities explained above:

Y2Mate.is.-.What.are.robot.singularities-lD2HQcxeNoA-480p-1641578742646.mp4

[Video by Mecademic Robotics](https://www.youtube.com/watch?v=lD2HQcxeNoA)

It is kind of hard to tell you where we are likely to find the most singularities since they are not at a given position, they are relative to the robot configuration. In simpler words, singularities occur when a robot loses a degree of freedom.

There are two ways we can find these singularities:

- Inspection: Here we just observe and determine what would be a singulafrity for that robot.

- Calculation: We write the Forward Kinematic equations, take the derivative of the velocity, find the Jacobian matrix and set its determinant equal to zero to find the joints angles that would make the determinant = 0.

Singularities most occur in nature when we place objects to be grabbed by the TCP at right angles and having the object below at the base of the robot. The image below shows that J5 is 2 degrees away from zero. The table is too low and the object is too close to the base of the robot. The robot would go pick the object but if we go a little bit back then we hit a singularity. There are many places singularities can occur becasue anytime J5 is 0 I can hit a singularity. One important thing to remember is that when in a situation as depicted in the image below, the programmer is powerless. There is nothing robotics programmers can do as they are bounded by unsolvable mathematical equations. However, we can redesign the workplace to avoid such occurances as explain below.

Below are 6 ways to avoid singularities:

1. Choose another robot: We can only get a singularity with a 6-axis or more robot. If we have a four axis robot, there is no such thing as singularity. It cannot happen because any place we want to go there are no two axes fighting each other hence, we have only one solution.

2. Singularity Avoidance package: This is a package when buying a robot that imbed some built-in intelligence to the robot to avoid these singularities. It's going to make movements that will result in only one solution.

3. Re-design workplace: We could relocate the robot by lowering it relative to the picking surface or change the table's postion, i.e, we increased the height and placed it a bit further from the base and this result in a change in the articulation of the robot arm. We now have a nice bend between J4 and J5(57.6°).

4. Offsetting the tool: By offsetting the tool as shown below, we force the robot to be further away from the object. In that way we induce a small angle(about 15 degrees) whereby we used to have only about a 2 degrees angle. Offsetting the bracker allows us to gain some reach - our J6 can not swing - and it also allow us to manipulate the tool as per our needs.

5. Pivot the tool: We can also pivot our tool such that when picking the object, the tool is perpendicular to the object but we have about 30 degrees angle at our J5. We are far away from zero therefore far away from a singularity.

6. Tilt robot: The next solution would be to tilt the robot - about 30 degrees - such that the robot is fixed on a wedge block and we have a nice J4 and J5 interaction with a bend of 24 degrees.

To sum up:

- it is mostly the mechanical engineer work to find a suitable solution for the robot to avoid singularities. The programmer should already plan this on his software.

- it is important to plan ahead of the position of the robot or workbench or tool so as to avoid redundant changes in the physcial robot.

- robot singularities can only be avoided by properly designing our robotic cell (and that includes the design of the adaptor plate for our end-effector).

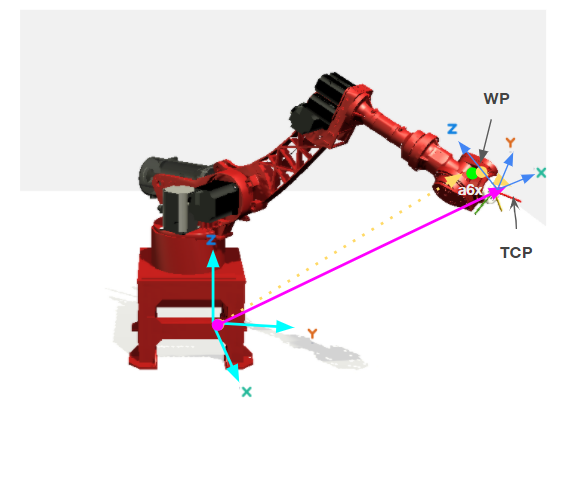

Earlier we discussed that our robot is a spherical wrist robot as J4,J5 and J6 all intersect at the wrist center point(WP). That point is crucial because it is dependent only on J1, J2 and J3. That is, only a movement of J1, J2 and J3 can change the position of WP and not J4, J5 and J6. Hence, we can split the robot into two parts: arm and wrist. We will process as follows:

- Given the position of the WP, we can find J1, J2 and J3.

- Then gicen the position and orientation of the TCP, we find J4, J5 and J6.

This process is called decoupling, i.e, we decouple the wrist from the arm and this process is only possible for spherical wrist and J4, J5 and J6 do not affect the position of WP.

We have shown before that the difference between WP and TCP is a6x which is the offset between J5 and J6. However, this offset runs along the x-axis of the local TCP frame, which we call in the robot’s base frame.

- Given the orientation of the TCP in Euler angles, we know how to compose the equivalent

rotation matrixby multiplying together all the individual rotations around the XYZ axes.

- We then take the rotation matrix

R, multiply it for the localxvectorand we find how this vector is seen from the base.

- From the multiplication above, we get the first column of R, which is

. We subtract

a6xtimesfrom the

TCPposition to find out theWPposition.

After finding WP, we can now find J1, J2 and J3.

The position of J1 only affects the X() and Y(

) coordinates of the wrist point, not the vertical position Z(

). So we can reduce the problem to a

2-dimensional planar problem and observe that J1 is the angle between the X and Y coordinates of WP. The solution is simply to take the arctangent.

Recall that two solutions are possible for this problem, depending on the pose that we select: either front or back. In the first case J1 is what the arctangent function returns; in the second case we need to add 180 degrees.

Finally, if both X and Y are zero, the wrist point is straight above the base origin and we have a shoulder singularity. Any position for J1 would be a correct solution. We usually fix J1 equal to the actual position of the joint.

When J1 is fixed, movements of J2 and J3 only happen on a vertical plane. So we can simplify the problem by removing one dimension and reduce the workspace to a 2D geometry as shown below. We need to focus on the green triangle to find J2 and J3.

- First using pythagoras theorem, we unified the X and Y coordinates into a single XY(

) component.

- We will focus on the green traingle to solve for J2 and J3. One of its side is

and its horizontal and vertical projections are

handlrespectively. Since we know the mechanical size of the robot we can easily findhandl. We then using pythagoras theroem to find.

- We already know another side of the green triangle:

a3z. We know need the last side which isb4z. We observe that it is the hypothenus of the yellow triangle on top with legsa4zanda4x+a5x. - In order to avoid a situation whereby the robotic arm tries to reach an object toor far from the TCP, we need to apply the

triangle inequality. We limit the value ofusing this rule.

The sum of the lengths of any two sides of a triangle has to be greater than the length of the third side and the difference of the lengths of any two sides of a triangle has to be less than the length of the third side.

- Now we need to find

using the arctan of

handl. - We find

using the cosine rule.

- We observe that J2 +

+

= 90°. We solve for J2. Note that it could also be plus

, if we were to choose the

DOWNpose instead, with the second link pointing downwards and the third upwards. - We find

using arctan.

- For the top yellow traingle, we observe that J3 +

+

is 180°. We solve for J3.

The first three joints(J1, J2 and J3) determine the position of the wrist point and also the orientation of the arm from the base up to the wrist center.

We call that rotation matrix The rotation of the TCP is given by the matrix

R, which we already found composing the ABC Euler angles. The missing rotation in between those two is given by the matrix.

Ris the product oftimes

, we can solve for

pre-multiplying both sides by

and simplifying the right side.

is then the product between

transpose and

R.- Since

is an orthogonal matrix, we know that its inverse is equal to its transpose.

- Remember that is

the rotation matrix from the

baseto the frame created byJ1,J2andJ3. Hence, we need to compose the rotations of the jointJ1around theZ-axis, and ofJ2andJ3around theY-axis. - The

Rmatrix is found fromA,B,Chence we can now derive.

- After finding

, we need to decompose it into its individual rotations of

J4,J5andJ6. Remember thatJ4rotates aroundX,J5aroundYandJ6aroundXagain. - We then need to decompose the

matrix into Euler angles to find J4, J5 and J6.

- However, if

cosJ5 = 1thenJ5 = 0. We will then have awrist singularity. There isnorotation around theY-axis, only two consecutive rotations aroundX. There is no way to separateJ4andJ6anymore. Only thesumof their values is known, because it is essentially asinglerotation about theX-axis. The,matrix then becomes:

- The solution is to fix one of the two angles, for example

J4equal to its current value, and findJ6accordingly. - We always try to minimize the robot’s movement’s distances to reach a target position. Hence, since angles are periodic we can add or subtract

to get the most convenient solution.

We must remember to adjust for our coupling joints. The output of the inverse kinematics model is the positipm of the real joints. They must be adjusted to calculate the correct motor target positions.

To sum up:

- Manipulator tasks are normally formulated in terms of the desired position and orientation.

- A systematic closed-form solution applicable to robots in general is not available.

- Unique solutions are rare, multiple solutions do exist.

- Inverse kinematics: Given a desired posiion X,Y,Z and orientation A,B,C, we find values of J1,J2,J3,J4,J5 and J6.

In path planning, we will study the geometrical description of the robot’s movement in space. The geometrical curve to move between points is what we call the path. If we have to points in space - A and B - we can have several ways to travel from A to B. We can have the shortest route which is the displacement - a straight line from A to B or we could have some random curve path joining the two points. Once we fix the geometry we want, we also need to generate a time-dependent trajectory to cover the path.

- The easiest way to move from one point to another one, is by simply moving the joints axes of the robot to their target positions. This is called

point-to-point(PTP)movement. PTPs are fast but they do not provide any specific control over the geometrical path of the TCP. - If we want control over the geometry of the path, then we need an

interpolated movement, for example aline. Other interpolated movements are thecircle, and thesplinewhich is a smooth interpolation through some target points in space.

Point-to-point is a linear interpolations of the joint axes of the robot. How does it work? Suppose we start from point , where all the joints have certain values and we want to reach point

, where the joints have different values. We simply

linearly increase or decrease the joint angles, from their starting to their target values.

- We use the parameter

tto describe the equation of this movement. t = 0at the starting point andt = 1at the end of the movement.- If we pick any value between

0and1, the joint axes have a value of.

- when

t = 0,and when

t = 1,.

Note:

tis nottime.tis just a parameter describing the curve from0to1. It describes thepathbut not thetrajectory. Here, we only plan thegeometry, not thespeedof the movement. So no time is involved.- This linear interpolation happens in the

Jointspace. The joint angles move linearly from their starting position to their target value. For example, jointJ1could move from0to80degrees. However, this linear movement of thejointsdoes not translate at all into a linear movement of theTCP. If we imagine the first joint moving from0to80degrees: the TCP actually moves around acirclein space(remember that J1 is a rotation around the Z-axis.)! - While PTP movements are very simple to program and fast to execute, they also have no control over the actual path of the end effector of the robot. At planning time we do not know what the

positionandorientationof the TCP will look like.

Although the TCP movement is not a line, the formula we need to calculate this movement is a linear interpolation between and

. As

t increases, . As shown from the graphs above, the value of

J1 changes linearly as t increases.

-

If we want to reconfigure the arm, e.g. change from

UPtoDOWN, the only way we can do that is with aPTPmovement, where the joint axes arefreeto moveindependentlyfrom each other to their final target value. -

A

path-interpolatedmovement doesnotallow that. If we start a line in space with anUPconfiguration we will arrive at the end of the line with the sameUPconfiguration. That is because thegeometryof the arm isrestrictedduring path movements. It cannot stretch and reconfigure itself in a different way without sending the TCP out of its planned path. -

PTPmovements, on the other hand, do not care about the TCP path and can essentially do whatever they want to the geometry of the links.

To sum up, PTP movements are time-optimal, that is, they always take the shortest time possible to complete from start to end. Finally, and most importantly, a PTP movement is the only movement that allows a modification of the actual joint configuration.

In order to describe a target pose for our TCP in space, we need 6 coordinates hence, the reason for our robot to be a 6dof robot. Suppose we have a starting point with coordinates

XYZ and we need to move to our next position with coordinates

X'Y'Z'. This time we are expressing the joints in the Path space instead of the Joint space. Moving from one point to the next can be done linearly, i.e, one unit top and one unit to the right. And to return back we have two options; either one unit down and one unit left or one unit left and then one unit down. Either way we will return to our starting position! This is because we are working in a Euclidean space, where vectors add up linearly. The process can be seen below:

However, this is not the case for our TCP angles UVW because Euler angles do not interpolate linearly. This happens because Euler angles do suffer from Gimbal lock where the order in which we apply the three rotations affects later rotations in the sequence.

Below shows the process of first rotating around X of 90 degrees, then rotate around Z also of 90 degrees to reach . And then we go back, X of -90 and Z of -90. If rotations did add up linearly like positions we would go back to the initial pose but this not what happened!

A better way to interpolate these orientations is to convert our Euler angles into another representation before interpolating them. Rotation matrices is one solution but matrices are not suitable for interpolations as they cannot be parameterized with one single variable running from 0 to 1. A better solution would be to use Quaternions.

Since Euler angles suffer from singularities at 90 degrees, we need another way in order to interpolate angles: Quaternions. The general expression for quaternion is:

where is a vector and

w is a scalar. i,j and k are complex numbers hence:

The 4 elements x,y,z,w define the quaternion and are the number of elements it needs to describe an orientation, as opposed to 3 elements for Euler angles and 9 for a rotation matrix. We typically work with unit quaternions: that means a normalized form, where the norm of the quaternion is simply the Euclidean size of the vector of elements x,y,z,w.

Suppose we have a vector v in 3-dimensional space and we want to rotate it about point p at an angle .

We will use quaternion to rotate it and we start by defining our quaternion and its complement:

If we are rotating at an angle of , then

a,b,c,d are defined as :

Any given orientation is described by one unique quaternion. That is unlike Euler angles, with which many representations were possible for the same orientation in space. After defining a,b,c,d, our corresponding unit quaternion is defined by:

If p is the point around which we are rotating, we write p as a quaternion:

And finally the rotation is :

- Compared to Euler angles, quaternions do not suffer from

singularities: they offer auniqueundisputable way to identify rotations. - They also offer an easy way to interpolate

linearlybetween orientations.

- Quaternions are more compact: using only

4elements instead of9. - Matrices are also impossible to

interpolate, and they are not numerically stable. - It is possible that a rotation matrix undergoes several computations and comes out

non-orthogonalbecause of numerical approximations, that is, its determinant is not1anymore. In that case the matrix is very difficult to recover.

Given two orientations, represented by the two quaternions and

what is the shortest and simplest way to go from one point to the other? Since we move along a sphere, there are infinite ways to do this but we are interested in the optimal path - the

torque-optimal solution which uses the minimum energy to travel from the start to the end.

The solution is defined by the formula below, which is equivalent to a linear interpolation in Euclidean space, but this time we move on a sphere. We still use the parameter t, which goes from 0 to 1.

We can travel along the rotation at constant angular speed. This kind of interpolation is called Slerp. Alpha is the angle between the two quaternions, and it is calculated the same way as the angle between vectors on plane:

Note that the problem with the arccosine is that it can return two different angles between the quaternions: either the shortest one of the opposite one. To guarantee that we always use the shortest angle we need to make sure that the dot product between the two quaternions is positive before taking the cos inverse. If we happen to have a negative dot product then we should invert it by inverting one of the two quaternions, i.e, multiple one of the quartenion by -1. We will still reach the same rotation, just using a different path.

Also, if the angle between the two orientations is very small we could face numerical instabilities with that at the denominator. Observe that a small arc on the sphere essentially degrades into a

linear segment, so we can use the standard linear interpolation instead, and have more stable calculations.

As shown below, when moving the TCP around a particular axis, only the orientation of the TCP chnages and not its position, i.e, the XYZ values remain constant.

quaternion.mp4

We have the start() orientation of the robot and the user provides a

target() orientation. We cannot interpolate the ABC Euler angles directly so we interpolate quaternions using

Slerp.

- We first transform the

Eulerangles intoRotationmatrices and from there intoQuaternions. - Then we apply

SLERPto interpolate betweenand

- But because we control the motors of the robot we need to solve the

inversekinematics to find out the values of thejointaxes. So we need to transformqback into aRotationmatrix and thenEulerangles as input to our inverse kinematic function.

To sum up:

- There are a few advantages of using quaternions over Euler angles or rotation matrices, the most important of all for our purposes being the possibility of interpolate them. When we move the robot from one pose to another we need to change its orientation from the initial one to the final one. This smooth change over time is called

interpolation. We cannot interpolate matrices or Euler angles directly. Quaternions can do that easily withSLERP.

The simplest interpolation in the path space is a line. We start from point with coordinates

and want to reach

with coordinates

. The formula for linear interpolations is as explained before:

, with

t going from 0 to 1.

We may have two situation in practice:

- In the first case the starting and target points have the same orientations, so the interpolation only happens on the position coordinates

XYZ. - In the second case the initial and final orientations are different. So, while the position coordinates interpolate

linearly, the orientation interpolates usingSLERP. The two interpolations happen inparallelat the same time.

Two points are not enough to define a circle in space. We need three points: the starting point , an intermediate point

and the final target point

. The parametric formula for the position of a point describing a circle in space is the following:

- C: center point

- r: radius

-

U: normalized vector from the center to the starting point

-

V: a vector perpendicular to U, lying on the circle plane

-

t: the parameter that runs around the circle, from zero to the ending angle

Note: We need to check the points are not collinear, i.e, no points lie on the same line in space. The vectors U and V are perpendicular to each other and form the analogue of the X, Y axes on a plane.

So starting from t = 0 we point along the vector U and we then rotate around using the formula in 2D: rcos(t) + rsin(t). This is a group of three individual equations: one in x, one in y, and one in z. We are only focusing on position here, because the orientation will follow the SLERP interpolation: from the start orientation to the middle orientation and to the ending one.

To sum up:

- We need to find all the points between

(where the movement starts) and

(where the movement ends) along the circle.

- If we take a simple

2Dcase - the X,Y plane - the parametric equation of a circle centered in the origin isp = r cos t + r sin t. By moving the value oftfrom0to any angle we want (positive or negative), we will find the coordinates of the pointpalong the circle. - If the circle is not centered in the

origin, we need to add the coordinates of its center point:p = C + r cos t + r sin t. - Finally, if we move to the

3Dspace and the circle does not lie on the X,Y plane we need to generalize its axes to the vectors U,V. In the 2D case the U,V vectors were simply[1,0]and[0,1]. The generic formula for a circle in 3D becomesp = C + U r cos t + V r sin t. U and V are perpendicular to each other and both lie on the same plane where the circle lies.

Suppose we have a starting point and a target point and we want to join these 2 points smoothly, i.e, not with a straight line. We will then use a spline to join these 2 points. Splines are functions built up by composing polynomials piece-by-piece. In our case, we will use a Bézier Curve for the spline and to be more precise, we will use Cubic Bézier Curve.

Bezier Curve is parametric curve defined by a set of control points. Two points are ends(starting and ending points) of the curve. Other points determine the shape of the curve. The Bézier curve equation is defined as follows:

- t: [0,1]

- P(t): Any point lying on the curve

: ith control point on the Bézier Curve

- n: degree of the curve

: Blending fucntion =

where

.

The degree of the polynomial defining the curve segment is one less than the total number of control points. Since we will be using a cubic curve, our number of control points will be 4.

Substituting for n = 3 in the above equation, we get:

and

are control points used to define in what direction and with what magnitude the curve moves away from the 2 edge points. We have a total of

4 control points because we decided to use a cubic spline in the parameter t where t = [0,1]. Every point P on curve is a linear combination of the four control points, with the weights being the distances from them. Note that the control points are always placed tangential to the starting and ending points respectively.

Note: A common rule of thumb is to keep the control points distance at about 1/3 of linear distance between start and end point.

Bézier curve is a valuable function for robot’s operators: they can just teach the position of some points where the robot is required to go through and we automatically generate the whole path in between. For example, if we we need to move our robot from one point to the other but we have an obstacle in between, then we just need to define a third point above the obstacle and let the algorithm calculate a smooth path between the three points as shown below:

Also, while the spline runs the position of the TCP, we can interpolate the orientation in parallel using quaternions.

We have studied different geometries to connect two points in space. Now we need to study how to connect those geometries with each other. The point between two consecutive segments of a path is called a transition. The first problem we encounter when observing transitions is that there might be a sharp angle between them. These sharp edges are bad because when we travel along the path we have to decelerate, stop at the transition and then start accelerating again along the next section. This behavior not only wastes a lot of time but is also hard on the mechanics. A smoother movement would be much more desirable. One possible solution is to introduce a round edge as a path smoothing transition. You notice that the path is smoother, which allows for higher travelling speed because no stop is required, and it is also gentle on the mechanics. Hence, the reson for using a Bézier curve.

Suppose we have two perpendicular consecutive paths section then we will need a round edge for a smooth transition.

- First we need to limit our radius

Rby setting it half the length of the segment. This is incase we have another round edge at the next transition thus, we leave some spave for that. - Once we have R, we need to define two control points: starting(

) and ending(

).

- Instead of using a cubic spline, we will use a

quarticspline hence, we have5control points. - We place one(

) at the transition and the two(

) left are placed in middle of the other control points.

- This configuration ensures

geometrical continuityof the spline with the original line up to thesecond derivative: i.e, the two sides have same direction and also same curvature. The transition between line and round edge is smooth.

We need the path to be smooth but how do we define smoothness? In order to check for smoothness, we need to first ensure that our path is continuous. What is important is to find the direction of the tangents at the transition points. In general:

A function f(x) is smooth if it is continuous and its derivative is also continuous.

The figure below shows a continuous graph as we have two paths which meet at one point. The tangent of the two paths point in two different directions as shown in

blue below. The edge is sharp and not smooth.

In the next figure, the two curves touch at the transition point. We say we have continuity as the tangents of the curves and surfaces are vectors with both the magnitude and direction of the tangent vectors being

identical.

The class

consists of all differentiable functions whose derivative is continuous; such functions are called

continuously differentiable. Thus, afunction is exactly a function whose derivative exists and is of class

.

The second derivative represents the smoothness of the curve. Although we have a transition of a circle with a line, it is not smooth, i.e, we do not have a continuity. As described above, having the same tangential direction implies only

continuity, which translates into same speed of movement, but different accelerations. The fact that this transition between line and circle is not

means that if we travel at constant speed across the transition, the acceleration along the vertical axis changes instantaneously: from zero along the line, to a sudden finite positive value when entering the circle. In practive we will hear a "click" sound on the axes, which can be detrimental to the mechanics.

The continuity between two curves must be satisfied at higher order of differentiation so as to have a smooth dynamic profile and avoiding slowing down or, worse, stopping the trajectory. Thus, the reacon for using continuity.

The figure below shows continuity as they have the same tangential direction and the same curvature.

We do not always need transition but we need to check that the transition angle between consecutive sections is small enough so as to increase production speed and to avoid excessive force on the mechanics.

Earlier, we introduced a round-edge between two lines as a quartic spline and said that the transition is smooth up to the second derivative. Note that this round edge is not a circle! The transition between line and circle is not continuous, as we mentioned before. The circle has a

constant radius of curvature, which means a constant finite second derivative. When crossing from line to circle the curvature jumps suddenly. But the round edge is a quartic spline, not a circle. Its radius of curvature is not constant: it starts at zero, increases in the middle and goes back to zero at the end, so that the transitions with the previous and next segments are smooth.

Let's prove the smoothness mathematicaly: We start with a line segment which parametric equation is as follows:

First derivative w.r.t t:

Second derivative w.r.t t:

This clearly shows that a line has zero curvature!

Now if we take the second derivative of our quartic spline we get:

Note that both first and second derivative are not constant: they both vary over the interval from t = 0 to t = 1. Physically, it means that the direction and curvature of the round edge change along the path from start to end. That is obvious for the direction, but very important to underline the curvature. A varying curvature means that we can control the amount of acceleration we require from the physical axes during the movement. That is unlike the case of a simple circle, where the curvature is constant and the centripetal acceleration is also constant, leading to discontinuities at transitions.

If we set t = 0 and t = 1 and we require the curve to have zero curvature, i.e, set the second derivative equal to zero, then we get the position of the points and

.

That explains why we decided to place the control points of the quartic spline in the middle of the two segments. With that configuration we achieve a geometrically and physically smooth transition between two path sections.

If we need to calculate our path length then it is quite straightforward if our path is a straight line, a circle or an arc of a circle. If it is a straight line then we just need to apply Euclidean's distance formula in 3-dimension:

If it is an arc then we need the radius R and the angle in between the two radii bouding the arc. The formula is as follows:

If we have a PTP or a spline, then calculating the path is not as straight forward. We just need to divide our path in many small segments of equal length whereby the small segments are considered to be straight lines. We just need to sum up the number of segments to get a numerical solution of the overall length:

To sum up:

The various order of parametric continuity can be described as follows:

- C0: zeroth derivative is continuous (curves are continuous)

- C1: zeroth and first derivatives are continuous

- C2: zeroth, first and second derivatives are continuous

- Cn: 0-th through n-th derivatives are continuous

While planning lines, circles and splines for the robot, we need to restrict its range of movements to specific areas in space. The allowed space of operation is called workspace. The main reason to monitor the workspace of a robot is safety.

A safe zone is usually defined to keep the entire robot inside a virtual cage, where it is safe for it to move around without colliding with other elements of the environment. The rule is that no part of the robot can be outside the safe zone at any time.

If both starting and ending points or even one of the points of the movement are outside the zone, then for sure part of the movement is violating our safety area. How do we check mathematically that a point is inside a box? Given the point P and defining the cuboid by two points B1 and B2, the condition is that P must be smaller than the maximum between B1, B2, and at the same time must be larger than the minimum between B1, B2. Since P,B1 and B2 are all 3-dimensional vectors, so this condition must be verified along all three axes XYZ.

A forbidden zone precludes the robot from entering certain areas of space, usually, because they are already occupied by something else.

Note that we can also have a forbidden area inside a safe area. The resulting allowed workspace will be the difference between the safe and the forbidden area.

It is important to remember that we plan our workspace in order to avoid collison. Hence, it is much wiser and safer to monitor the path while planning it, not while executing it. Monitoring only the start and end points of a movement is not enough: they might both be in safe area, but the actual path could intersect a forbidden space. So the entire path needs to be monitored closely.

It is easy to predict collisions if all we have is a straight line. Complex movements are normally decomposed in linear segments, similarly to what we did in order to calculate their path lengths.

The condition here is that a line should not intersect the box.

- Recall that a line is defined by a parametric equation

, starting from P0 at t = 0 and ending at P1 at t = 1.

- We first consider the three edges of the cuboid closer to the point P0 and we calculate the values of t for which the line intersects them.

- If the closest edges to P0 is the point B with coordinates Xb,Yb,Zb, we plug in those coordinates one at the time in the above equation and find three values for tx,ty and tz. We take the largest one, which represents the latest intersection between the line and the closest planes of the cuboid and we call it t0.

- We repeat the same for the three edges farthest away from P0. We calculate the three intersecting values and take the smallest one, which represents the earliest intersection between line and the furthest planes of the cuboid. We call it t1.

- If

then the line

intersectsthe box. - If

then there is

no intersection.

So far we have monitored only the position of the TCP. However, there exist cases when the TCP is inside the Safe zone but the part of the body of the robot is outside that zone. So we need to monitor all the parts of the robot which can be complicated depending on the shape of the robot. One solution would be to wireframe the model, i.e, represent each part of the robot with points and lines as shown below and then monitor those points and lines.

Because the position of those points depend on the actual values of the joint axes, they are calculated using direct kinematics and constantly updated during movements. Then we can use the techniques learned so far to check whether the wire-model (points and connecting lines) cross forbidden zones or move outside safe zones.

There are cases whereby we also need to monitor the orientation of the TCP such that it does not face towards people - for example a robot during welding or laser cutting. We don't want the TCP to face upwards even though the TCP is inside the Safe zone. A safe zone for orientation is called a Safe cone. We need to define a central working orientation, usually the vertical Z axis, and a maximum deviation angle. The resulting geometry is a cone, outside which the orientation of the TCP is considered unsafe.

Recall from the SLERP interpolation that when expressing orientations with quaternions the angle between two orientations is given by the following formula:

Thus, we can monitor, both at planning time and at real-time, whether the current TCP orientation lies inside the safe cone.

Another zone whereby the TCP must not come into contact with is the body of the robot itself. In other words, we want to monitor and prevent self-collisions. The solution is to generate forbidden zones around each of the links (usually corresponding to the wire-frame model), starting from the first up to the last. The se capsules act like a raincoat which will cover it safely and prevent self-collisions. Note that we use capsules since they are computationally cheap but we could have used the cuboids also. The only difference with standard forbidden zones is that their position changes over time and must be constantly updated. We generate the position of the zones automatically given the actual position of the joints, using direct kinematics, one joint at the time.

A capsule is geometrically defined as the set of points that are all at the same distance from a given segment. It is composed by a cylinder with two semi-spheres at the extremes. The segment is at the same distance r across the capsule. Usually, r is given by the user, depending on the size of the mechanical arm of the robot. Imagine we have a point Q and we need to find the distance from the segment:

- We P0,P1 as vector n:

- Observe that the projection of Q onto P0P1 is

Pand depends ont, so we call.

- If t=[0..1] then P is on the segment, otherwise it is on the line before P0 if t is negative or after P1 if t is greater than 1.

- We need to find t and then from there P and the distance PQ. We call

and

.

- We can immediately find d as the difference between the vectors v and tn:

- Q is joined to P using a perpendicular line so

.

- Replacing d in terms of v and tn we get t.

- Once we have t, we can find P and then PQ, which is the distance we were looking for.

So far we have monitored the workspace of a single robot through its position and orientation. Now we need to monitor many robots working together in the same environment and sharing the same workspace.

One example could be a robot welding, then the other cuting and another one screwing. Or, we could have multiple robots along a conveyor belt picking objects. We need to monitor their position relative to each other to avoid collisions. Below we describe two functions to perform workspace monitoring for multiple robots.

Exclusive zones are regions of space within which only one robot (or at least its TCP) can lie at a time. Each exclusive zone is associated to a flag bit, which is visible to all robots, and warns of the presence of a robot inside that space. If the TCP of a second robot is programmed to enter the same exclusive zone, it will have to check the status flag first, and will only be allowed to enter if the zone is free. Otherwise the movement is paused until the first robot leaves the exclusive zone. At that point the second robot can resume its movement and enter the region. Now the zone becomes locked by the second robot and the first cannot enter it any more. And so on.

However, we must be careful when we have more than two robots because there might be many robots waiting in line and we can only show the green flag to one of them, usually the first in line, otherwise they will all rush into the zone and collide with each other or the workpiece.

In the second solution, we need to monitor the possible intersection between a robot’s body and another one. The user normally defines a minimal distance between robots, which we need to monitor in real-time. Just as explained before, we build bounding capsules around the joints and evaluate all possible collisions between each capsule of a robot against all the capsule of the other robot. Two capsules intersect if the distance between the capsules' segments is smaller than the sum of their radii.

What is the difference between path and trajectory? Suppose we have a static path s, then, the trajectory transforms this static path into a function of time s(t) by sampling points from the geometry along time.