08 / 50

Learning a Discriminative Feature Network for Semantic Segmentation

CVPR 2018 Spotlight

- Changqian Yu 1

- Jingbo Wang 2

- Chao Peng 3

- Changxin Gao 1�

- Gang Yu 3

- Nong Sang 1

- 1 Key Laboratory of Ministry of Education for Image Processing and Intelligent Control, School of Automation, Huazhong University of Science and Technology

- 2 Key Laboratory of Machine Perception, Peking University

- 3 Megvii Inc. (Face++)

Inofficial Code1 Inofficial Code2

Official in Chinese | 旷视科技Face++提出用于语义分割的判别特征网络DFN 论文阅读 - Learning a Discriminative Feature Network for Semantic Segmentation (CVPR2018)

To remedy the imbalance of different classes, focal loss [22] is used here to supervise the output of the Border Network.

- [22] T.-Y. Lin, P. Goyal, R. Girshick, K. He, and P. Doll´ar. Focal loss for dense object detection. In IEEE International Conference on Computer Vision, 2017.

We use a parameter �lamada to balance the segmentation loss

Intra-class inconsistency and inter-class indistinction is two important problems in semantic segmentation.

2. [Contribution / Method] What's new in this paper? / How does this paper solve the above problems?

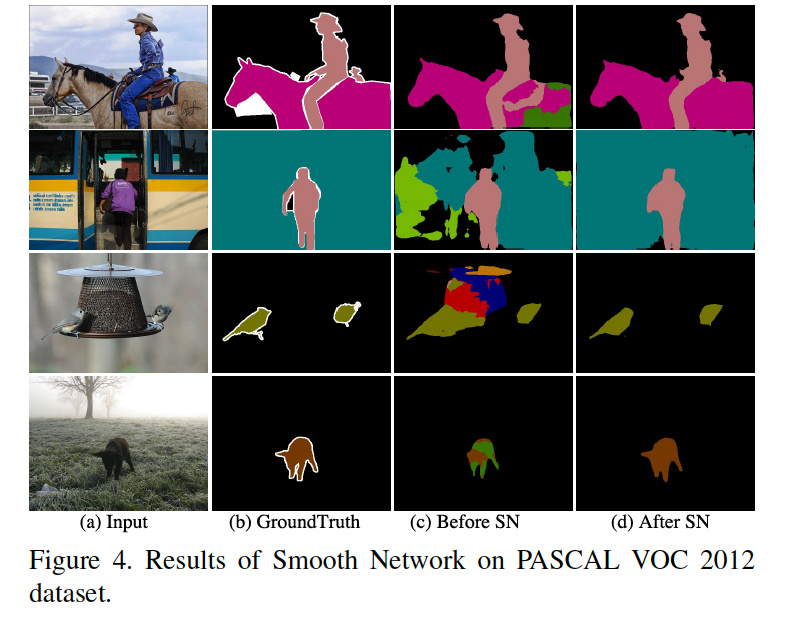

- intra-class inconsistency problem: a Smooth Network with Channel Attention Block and global average pooling to select the more discriminative features.

- multi-scale features are used to enrich the information: U-net structure

- global average pooling is used to capture the global information

- CAB is designed to use the high level information to guide the low layer.

- inter-class indistinction: To amplify the distinction of features, a Border Network is proposed to make the bilateral features of boundary distinguishable with deep semantic boundary supervision.

Using Canny algorithm on the semantic ground truth to obtain the supervisory signal, which is used to refine the low stage feature.

- PASCAL VOC 2012

- Cityscapes

- multi-scale data augmentation

Data augmentation is necessary!

mIoU

Smooth Network

Detailed performance comparison of our proposed Smooth Network. RRB: refinement residual block. GP: global pooling branch. CAB: channel attention block. DS: deep supervision.

We can find that the biggest improvementa are coming from RRB, GP and CAB.Border Network

The improvement of BN is not as big as RRB, GP and CAB.

#### 3.5 What is the ranking of the experiment results?This paper was accepted as the spotlight paper in CVPR 2018. Here are some reasons I think important:

- The ablation study is sufficient.

- The idea of refinement the low stage feature (DS: deep supervision) is interesting. Though DS doesn't improve a lot performance, just 0.43% according to Table 2, this idea is easy to touch the reviewers and readers.

The parameter lamada actually can be set to some value(like 0) at first, and gradually be learned to a proper position during the learning.