New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

how to interpret output #9

Comments

|

Ok i think i got it And now to use it But for example the bounding boxes is 2d level data, first one is box index and second one are the 4 coords (tensor: shape=[1 100 4]), and tensorToVector flatterns it to 1D vector of 400 size, so i think it has to be But the boxes are not correctly positioned.... im doing this last step wrong? Note i tried 2 differents models and output is the same so obviously im doing something wrong, just can find what Thanks |

|

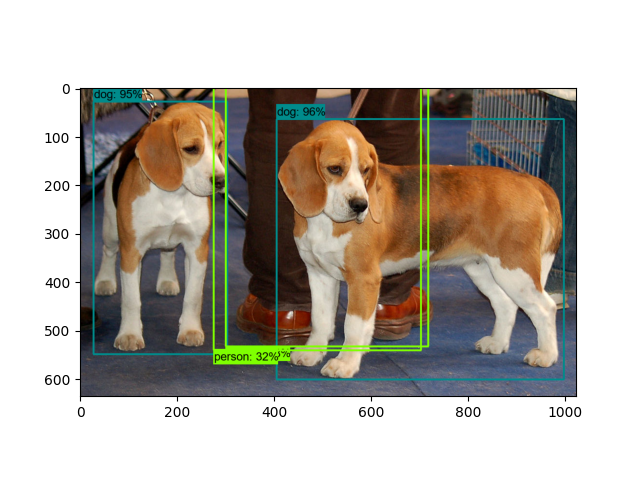

Seems like is Curious that bounding boxes for the dogs are not exact the same as tf example But with other image seems spot on so assume code is now ok Would you accept a PR with a sample to use an object detection model?¿ I will be glad to contribute |

|

Ping @bytosaur |

|

Just to note box difference was fixed replacing tensorflow lib, can also contribute providing a updated tf lib for linux to use with cuda 11.1 as i need to recompile it to use on my machine. |

|

To which version? |

|

tf 2.5.1 |

|

We have td 2.4.2 in the |

|

hey @natxopedreira! Your workflow seems to be inline with our expertise and recommendations. Thanks for tying out and reading it so carefully :) As for the TF version, I will look into using the latest CUDA (11.4) and TF (2.6.0) today. Unfortunately, we don't have a Docker Image for OpenFrameworks so I ll need to modify my current setup which may take some time. I ll to come back to you when I fixed all warnings. |

|

Hello No problem at all, bounding boxes differences where fixed when replaced the lib.... i know....no much sense at all. In fact the second image of beach boxes where correct so not sure what happened here but is fixed. I did a docker image to build the library so if need to build any version and want help, ping me! |

|

Cool! Do you have the Dockerfile somewhere on GitHub? This might be very cool for testing new TF or Cuda releases. |

|

Sorry i dont have a Dockerfile but i can publish the docker if you want to quay or similar. I will try to add more examples and will do a PR |

|

I just updated the downlaodscript for libtensorflow to 2.6. I didnt encounter any problems using CUDA 11.4 |

|

Nice, thanks! I will try to prepare some samples: object detection, face detection, something like posenet.... and make a pr. I think that do a small one training your own model will be also useful for people, i know your examples are more "art" oriented and what i'm doing is more "tools" oriented so if those do not fit in your concept please let me know |

|

Human Pose Estimation would be a huge contribution! Unfortunately, I couldn't find a single model that does the job in a pleasing way. |

|

I'm still on basics :) But i will help on what i can

What about this one for pose estimation? |

|

Hey @natxopedreira! |

|

@bytosaur model is from february i think, inference is ver fast, multiple users 40fps on cpu i7 and more than 60 on my 1060gpu. And i read somewhere in octuber tf will release next iteration of the model with a depth estimation i have to clean the code, make a readme and will do the pr. Model is very nice! |

|

Also, don't commit the model to the repo. If you can send us the model as a zip, we can add it to the models share folder and the download script. |

|

No need to send it. I ll grab it from tf hub and upload it to our cloud storage :) |

|

Ok, code is ready, only need to to readme. I use a video from pexels so no copyright, is ok to upload? |

|

@bytosaur @danomatika Please take a look here https://github.com/natxopedreira/ofxTensorFlow2/tree/main/example_Movenet to see if is ok for you, i can also add the object detection sample if you want |

|

hey @natxopedreira, Thanks for the upload!

is it useful when i make a PR first too your repo and then merge it afterwards in ours? |

I will do that in another branch if you dont mind as i prefer to have a separated class.... just for my use Dont understand what you want to say here :) |

|

The MP4 is tiny, so not a problem. I would just make sure proper attribution for it is included in the readme. |

|

Personally, I'm fine with the separate class. We already have subclasses of the Model anyway. Additionally, we can further modularize it by passing in a pixels or ofBaseVideo reference etc to the |

|

Just upload all changes except to move all back to main.... It just receive a ofPixels reference void update(ofPixels & pxs) |

|

Nice. As long as the design is easy for a beginner to follow, I don't think it's bad to have a simple class wrapper for some (complicated) examples. |

|

@natxopedreira Can you rename the folder with lowercase? ie. |

|

Done I tried to code it clean to easy understand and comment code, so people can take this as a reference and extend it adding a tracker... etc etc |

|

I would also suggest adding your own attribution to the readme and as a header in the source files. At a minimum something like "Example by Natxopedreira 2021" with a URL etc. We will probably edit a bit here and there but the core of the contribution is yours. (Thanks by the way!) |

|

Thanks :) I added a small footer with a comment a link to my github Note im open to make changes if you want, not a problem. I'm happy to contribute and i hope people will like this one!!!! |

|

As a follow up, we refactored and threaded the ofxMovenet class: https://github.com/zkmkarlsruhe/ofxTensorFlow2/blob/develop/example_movenet/src/ofxMovenet.h |

|

Thanks for let me know Now looks better, with comments on each method.... and the thread |

|

I only tested on my macOS system with TF CPU, no GPU. Please test on your setup and let me know if toggling the threading with the t key works and if there is no major loss in FPS. On this Intel Macbook Pro 16 inch, the non-threaded processing slows the app down to about 10 fps. If I turn threading on, the app runs at 60 but the tracking lags a bit as it's still slow in the background thread. In any case, your system should be able to run it fast with or without the thread. UPDATE: The current work is in the |

|

i will test and let you know, give me some hours. Note that here "old version" i get 40fps on CPU i7... 10fps sounds really low |

Laptop CPU :P |

|

no lag here on CPU and solid 60fps, need more tests? im going to start training so computer will be used but can wait to test anything |

|

No, sounds good. I just wanted to be sure I didn't break anything. |

|

Oook! If you have more ideas about other doing others examples, please let me know, those are good exercises to learn tf |

Hello!!

Im playing with this to help me to understand how tensorflow c pi works.

I have a centernet model that has one input and 4 outputs

I load the model and set input and output names

Add one dimension to input to follow model requeriments

And now how i acces to the output data? I tried this but dont work, i expected that the return of runModel was a vector of vectors.... i tried reading the cppflow doc but no luck

Thanks!

The text was updated successfully, but these errors were encountered: