
Bounding Box generation Error #150

Comments


The rest of the code, in case it's needed:


That is a weird result for sure. The code looks fine. What do the depth and segmentation images look like for those weird bounding boxes?
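One way to answer that question is to recompute the box directly from the segmentation image and compare it with the annotation file. Below is a minimal sketch in plain Python. It assumes the segmentation image has already been loaded as rows of (R, G, B) tuples and that the die is painted in a single known color. `bbox_from_mask` is a hypothetical helper, not part of zpy.

```python
from typing import List, Optional, Sequence, Tuple

Color = Tuple[int, int, int]


def bbox_from_mask(
    seg: Sequence[Sequence[Color]], target: Color
) -> Optional[Tuple[int, int, int, int]]:
    """Return an [x, y, width, height] box around every pixel of `target` color.

    `seg` is row-major: seg[row][col] is an (R, G, B) tuple.
    Returns None when the color does not appear in the image.
    """
    xs: List[int] = []
    ys: List[int] = []
    for y, row in enumerate(seg):
        for x, pixel in enumerate(row):
            if tuple(pixel) == target:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    x_min, y_min = min(xs), min(ys)
    # +1 so that a single matching pixel yields a width and height of 1.
    return (x_min, y_min, max(xs) - x_min + 1, max(ys) - y_min + 1)


# Tiny 4x4 "segmentation image": black background, red 2x2 die.
BG, DIE = (0, 0, 0), (255, 0, 0)
seg = [
    [BG, BG, BG, BG],
    [BG, DIE, DIE, BG],
    [BG, DIE, DIE, BG],
    [BG, BG, BG, BG],
]
print(bbox_from_mask(seg, DIE))  # (1, 1, 2, 2)
```

If the recomputed box matches the segmentation but not what appears in your rendered overlay, the problem is more likely in the drawing step than in the annotations.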


Interesting. Are you using your own visualization tools to show the bounding box over the image? There are a couple of different styles of bounding boxes; zpy uses
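For reference, the two most common conventions are COCO-style `[x, y, width, height]`, where (x, y) is the top-left corner, and corner-style `[x_min, y_min, x_max, y_max]`, which many drawing functions expect. This thread does not say which one zpy writes, so treat that as something to confirm in zpy's documentation. The helpers below are hypothetical and simply convert between the two conventions:

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]


def xywh_to_xyxy(box: Sequence[float]) -> Box:
    """[x, y, width, height] -> [x_min, y_min, x_max, y_max]."""
    x, y, w, h = box
    return (x, y, x + w, y + h)


def xyxy_to_xywh(box: Sequence[float]) -> Box:
    """[x_min, y_min, x_max, y_max] -> [x, y, width, height]."""
    x_min, y_min, x_max, y_max = box
    return (x_min, y_min, x_max - x_min, y_max - y_min)


coco_box = (120.0, 80.0, 50.0, 40.0)
print(xywh_to_xyxy(coco_box))  # (120.0, 80.0, 170.0, 120.0)
```

Mixing the two up is an easy mistake. If a visualizer reads `(120, 80, 50, 40)` as corners, its "max" corner (50, 40) lies above and to the left of its "min" corner, and the drawn rectangle no longer tracks the object.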


Yes, I use my own script, but I believe I'm using the style you describe. Here is my script:


Well, yeah, it was my fault. That Sigh. Sorry, my head was somewhere else when I wrote this. Thanks for your support!


All good! Glad I could help.

I've created a Blender scene containing a die, and I'm using zpy to generate a dataset of images by rotating the camera around the object and jittering both the die's position and the camera. Everything seems to work properly, but the bounding boxes written to the annotation file get progressively worse with each image.

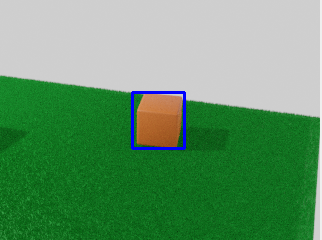

For example this is the first image's bounding-box:

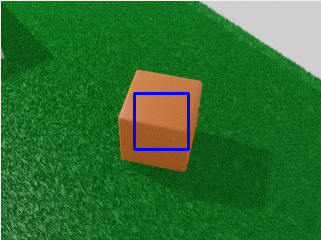

This one we get halfway through:

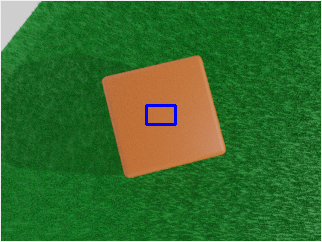

And this is one of the last ones:

This is my code (I've cut some parts; for some reason I can't paste all of it):

Is this my fault or an actual bug?
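The capture setup described in the issue (orbiting the camera around the die with random jitter) can be sketched without Blender. The helper below is hypothetical and only computes camera positions. Applying them to a Blender camera, or using zpy's own jitter utilities, is deliberately left out because those calls are not shown in this thread.

```python
import math
import random
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def orbit_positions(
    center: Vec3,
    radius: float,
    height: float,
    n_frames: int,
    jitter: float = 0.0,
    seed: int = 0,
) -> List[Vec3]:
    """Camera positions evenly spaced on a circle around `center`.

    Each frame gets fresh uniform jitter in [-jitter, jitter] per axis,
    drawn around the ideal orbit point rather than the previous frame's
    position, so offsets do not accumulate over the sequence.
    """
    rng = random.Random(seed)  # seeded for reproducible datasets
    cx, cy, cz = center
    positions: List[Vec3] = []
    for i in range(n_frames):
        angle = 2.0 * math.pi * i / n_frames
        x = cx + radius * math.cos(angle) + rng.uniform(-jitter, jitter)
        y = cy + radius * math.sin(angle) + rng.uniform(-jitter, jitter)
        z = cz + height + rng.uniform(-jitter, jitter)
        positions.append((x, y, z))
    return positions


for pos in orbit_positions((0.0, 0.0, 0.0), radius=5.0, height=2.0, n_frames=4, jitter=0.1):
    print(tuple(round(c, 3) for c in pos))
```

The design choice worth noting is that the jitter is always relative to a fixed reference. Adding each new offset to the previous frame's position would turn the jitter into a random walk that drifts further over the sequence.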
