PyTorch Code for 'Deep k-Means: Re-Training and Parameter Sharing with Harder Cluster Assignments for Compressing Deep Convolutions'

PyTorch implementation of our ICML 2018 paper "Deep k-Means: Re-Training and Parameter Sharing with Harder Cluster Assignments for Compressing Deep Convolutions".

In our paper, we proposed a simple yet effective scheme for compressing convolutions by applying k-means clustering to the weights. Compression is achieved through weight sharing: only the K cluster centers and the per-weight cluster assignment indexes are recorded.
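The weight-sharing idea above can be illustrated with a minimal NumPy sketch. It is not the repository's code and rests on our own assumptions. It clusters the individual scalar weights of a single layer with plain Lloyd's k-means, whereas the repository lists libKMCUDA for GPU k-means, and the clustering granularity used in the paper may differ. The helper names `kmeans_share_weights` and `reconstruct` are hypothetical. The compressed layer consists only of the `centers` codebook and the integer `indexes`, and `reconstruct` expands them back into a dense tensor.

```python
import numpy as np


def kmeans_share_weights(weights, k, n_iter=50, seed=0):
    """Cluster scalar weights into k shared values with Lloyd's k-means.

    Hypothetical helper (illustration only). Requires at least k distinct
    weight values. Returns (centers, indexes): centers has shape (k,) and
    indexes has the same shape as `weights`, holding each weight's cluster id.
    """
    flat = weights.reshape(-1).astype(np.float64)
    rng = np.random.default_rng(seed)
    # Initialise the centers from k distinct, randomly chosen weight values.
    centers = rng.choice(np.unique(flat), size=k, replace=False)
    for _ in range(n_iter):
        # Assignment step: index of the nearest center for every weight.
        idx = np.argmin(np.abs(flat[:, None] - centers[None, :]), axis=1)
        # Update step: each center moves to the mean of its members;
        # an empty cluster keeps its previous center.
        new_centers = centers.copy()
        for j in range(k):
            members = flat[idx == j]
            if members.size:
                new_centers[j] = members.mean()
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    # Final assignment against the converged centers.
    idx = np.argmin(np.abs(flat[:, None] - centers[None, :]), axis=1)
    return centers, idx.reshape(weights.shape)


def reconstruct(centers, indexes):
    """Rebuild the shared-weight tensor from the codebook and the indexes."""
    return centers[indexes]
```

For example, clustering a random `(16, 8, 3, 3)` array with `k=4` gives a reconstructed tensor of the same shape that contains at most 4 distinct values. Each weight is replaced by its nearest center.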

We then introduced a novel spectrally relaxed k-means regularization, which encourages hard assignments of convolutional layer weights to K learned cluster centers during re-training.
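One common form of a spectrally relaxed k-means penalty is sketched below. It illustrates the general technique under stated assumptions and is not necessarily the paper's exact formulation. Suppose the N items being clustered are the columns of a matrix W (d x N). The k-means objective then equals Tr(W^T W) - Tr(F^T W^T W F) for a normalized cluster-indicator matrix F. If F is relaxed to any N x K matrix with orthonormal columns, the optimal F is given by the top-K right singular vectors of W. The sketch also assumes that F is recomputed only occasionally and held fixed in between. Under that assumption the penalty is a quadratic in W with gradient 2W(I - FF^T). The reshaping of convolution weights into W and the function names are hypothetical.

```python
import numpy as np


def top_k_right_singular_vectors(W, k):
    """Relaxed indicator matrix F (N x k): top-k eigenvectors of W^T W.

    Hypothetical helper. The right singular vectors of W are the
    eigenvectors of W^T W, ordered by decreasing singular value.
    Requires k <= min(W.shape).
    """
    _, _, vt = np.linalg.svd(W, full_matrices=False)
    return vt[:k].T


def spectral_kmeans_reg(W, F):
    """Relaxed k-means penalty Tr(W^T W) - Tr(F^T W^T W F) and its gradient.

    W: (d, N) matrix whose N columns are the items being clustered.
    F: (N, k) matrix with orthonormal columns, held fixed while W is trained.
    """
    WF = W @ F
    # Tr(W^T W) = ||W||_F^2 and Tr(F^T W^T W F) = ||W F||_F^2.
    value = np.sum(W * W) - np.sum(WF * WF)
    # d/dW [||W||_F^2 - ||W F||_F^2] = 2 W (I - F F^T)
    grad = 2.0 * (W - WF @ F.T)
    return value, grad
```

When F comes from `top_k_right_singular_vectors`, the penalty equals the sum of the squared singular values of W beyond the K-th. In a re-training loop, `grad` would be added to the task-loss gradient, scaled by a hypothetical weight λ. With F fixed, a descent step shrinks the component W(I - FF^T) that F does not capture.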

We additionally proposed an improved set of metrics for estimating the energy consumption of CNN hardware implementations. Their estimates were verified to be consistent with those of a previously proposed energy estimation tool extrapolated from actual hardware measurements.

Finally, we evaluated Deep k-Means on several CNN models in terms of both compression ratio and energy consumption reduction, observing promising results without incurring accuracy loss.
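The storage arithmetic behind weight sharing can be sketched as below to show roughly where a compression ratio comes from. The sketch assumes 32-bit float weights, a fixed-width index of ceil(log2 K) bits per weight, and one codebook of K 32-bit centers per layer. It ignores biases, any entropy coding, and other bookkeeping, so it is not necessarily how the paper's reported ratios were computed. The function name is hypothetical.

```python
import math


def weight_sharing_compression_ratio(num_weights, k, float_bits=32):
    """Ratio of dense storage to codebook-plus-index storage for one layer.

    Hypothetical helper. Assumes each weight is a `float_bits`-bit float
    before compression and that every index is stored with a fixed width
    of ceil(log2(k)) bits (at least 1 bit).
    """
    index_bits = max(1, math.ceil(math.log2(k)))
    original_bits = num_weights * float_bits
    compressed_bits = num_weights * index_bits + k * float_bits
    return original_bits / compressed_bits
```

For example, a 3x3 convolution with 64 input and 64 output channels has 36,864 weights. With K = 16 it needs 4-bit indexes, so the ratio is 1,179,648 / 147,968, or about 7.97x.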

Models:

- Wide ResNet

- LeNet-Caffe-5

Dependencies:

- Python 3.5

- PyTorch 0.3.1

- libKMCUDA 6.2.1

- sklearn

- numpy

- matplotlib

- Wide ResNet

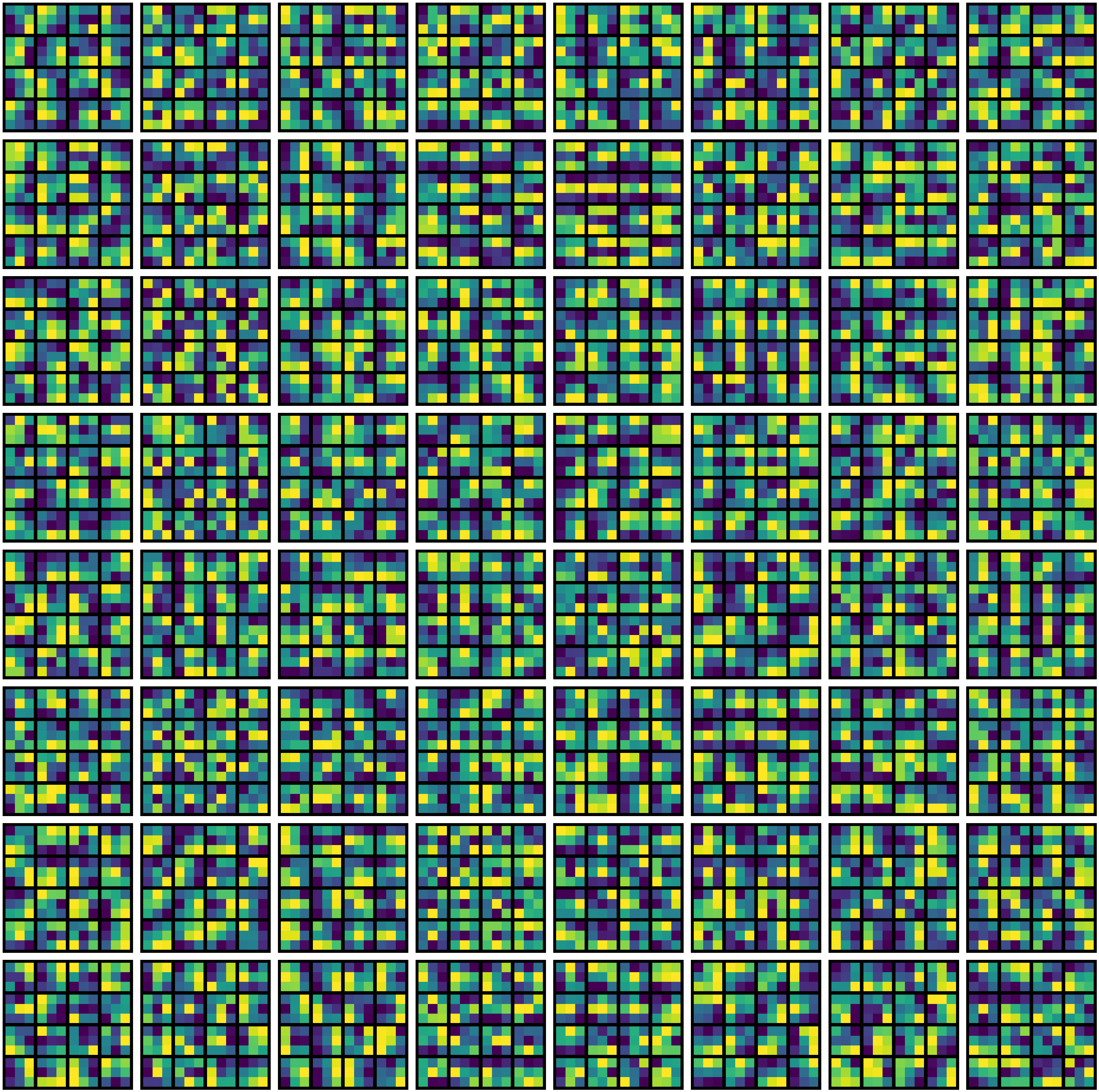

python WideResNet_Deploy.pySample Visualization of Wide ResNet (Conv2)

Panels: Pre-Trained Model (Before Comp.), Pre-Trained Model (After Comp.), Deep k-Means Re-Trained Model (Before Comp.), Deep k-Means Re-Trained Model (After Comp.).

If you find this code useful, please cite the following paper:

@article{deepkmeans,
  title={Deep k-Means: Re-Training and Parameter Sharing with Harder Cluster Assignments for Compressing Deep Convolutions},
  author={Wu, Junru and Wang, Yue and Wu, Zhenyu and Wang, Zhangyang and Veeraraghavan, Ashok and Lin, Yingyan},
  journal={ICML},
  year={2018}
}

We would like to thank the author of libKMCUDA, a CUDA-based k-means library, without which we would not have been able to run large-scale k-means efficiently.