A PyTorch implementation of:

- Single Shot MultiBox Detector from the 2016 paper by Wei Liu et al.

- Variants of SSD with ResNet-50/MobileNet v1/MobileNet v2 backbones.

- Single-Shot Refinement Neural Network for Object Detection from the 2017 paper by Shifeng Zhang et al.

- Pruning Filters for Efficient ConvNets from the 2017 paper by Hao Li et al.

- Model training and evaluation on various datasets, including VOC, XLab, and WEISHI (COCO support is still in progress).

- Installation

- Datasets

- Train and Test

- Evaluate

- Prune and Finetune

- Performance

- Demos

- Future Work

- Reference

- Clone this repository.

- Note: We currently only support Python 3+ and PyTorch 0.3.

- Note: You can directly use the Tencent Docker image `youtu/akuxcwchen_pytorch:3.0` for environment setup.

- Then download the dataset by following the instructions below.

- We now support Visdom for real-time loss visualization during training!

- To use Visdom in the browser:

```shell
# First install Python server and client
pip install visdom
# Start the server (probably in a screen or tmux)
python -m visdom.server
```

- Then (during training) navigate to http://localhost:8097/ (see the Train section below for training details).

- Note: For training and evaluation, COCO is not supported yet.

To make things easy, we provide bash scripts to handle the dataset downloads and setup for you. We also provide simple dataset loaders that inherit torch.utils.data.Dataset, making them fully compatible with the torchvision.datasets API.

Please refer to `config.py` (in `ssd.pytorch.tencent/data`) and update the dataset roots if necessary. Note that the dataset root used for validation must be set in `config.py`, while the dataset root used for training can be overridden with command-line arguments (e.g. `--dataset_root`) when running a script.
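The VOC and VOC-like loaders read one XML annotation file per image. As a rough, stdlib-only sketch (not the repository's loader, which inherits `torch.utils.data.Dataset` and may additionally shift or normalize coordinates), the standard PASCAL VOC XML layout (`object/name`, `object/difficult`, `object/bndbox/xmin`...) can be parsed as below. The function name `parse_voc_annotation` and its `keep_difficult` parameter are hypothetical.

```python
import xml.etree.ElementTree as ET


def parse_voc_annotation(xml_text, keep_difficult=False):
    """Return a list of (class_name, xmin, ymin, xmax, ymax) tuples from VOC-style XML.

    Hypothetical helper for illustration; coordinates are kept as integer pixels.
    """
    root = ET.fromstring(xml_text)
    boxes = []
    for obj in root.iter("object"):
        difficult = obj.find("difficult")
        is_difficult = difficult is not None and int(difficult.text) == 1
        if is_difficult and not keep_difficult:
            continue  # VOC flags hard-to-recognize objects as "difficult"
        name = obj.find("name").text.strip().lower()
        bbox = obj.find("bndbox")
        coords = [int(float(bbox.find(tag).text)) for tag in ("xmin", "ymin", "xmax", "ymax")]
        boxes.append((name, *coords))
    return boxes


SAMPLE = """<annotation>
  <size><width>500</width><height>375</height><depth>3</depth></size>
  <object><name>dog</name><difficult>0</difficult>
    <bndbox><xmin>48</xmin><ymin>240</ymin><xmax>195</xmax><ymax>371</ymax></bndbox></object>
  <object><name>person</name><difficult>1</difficult>
    <bndbox><xmin>8</xmin><ymin>12</ymin><xmax>352</xmax><ymax>498</ymax></bndbox></object>
</annotation>"""

print(parse_voc_annotation(SAMPLE))  # [('dog', 48, 240, 195, 371)]
```

Dropping "difficult" objects by default mirrors the common VOC convention; pass `keep_difficult=True` to keep them.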

PASCAL VOC: Visual Object Classes

```shell
# specify a directory for dataset to be downloaded into, else default is ~/data/
sh data/scripts/VOC2007.sh # <directory>
# this dataset already exists in /cephfs/share/data/VOCdevkit on the Tencent server
```

```shell
# specify a directory for dataset to be downloaded into, else default is ~/data/
sh data/scripts/VOC2012.sh # <directory>
# this dataset already exists in /cephfs/share/data/VOCdevkit on the Tencent server
```

XLab: a VOC-like dataset produced by X-Lab.

This dataset already exists in `/cephfs/share/data/VOC_xlab_products` on the Tencent server.

WEISHI: this dataset uses a one-to-one matching format, with the image/annotation pairs listed in a jpg-xml file.

This dataset already exists in `/cephfs/share/data/weishi_xh` on the Tencent server.

Microsoft COCO: Common Objects in Context

```shell
# specify a directory for dataset to be downloaded into, else default is ~/data/
sh data/scripts/COCO2014.sh
# this dataset already exists in /cephfs/share/data/coco_xy on the Tencent server
```

- All required backbone weights already exist in the `ssd.pytorch.tencent/weights` directory. They are modified versions of the original models (ResNet-50, VGG, MobileNet v1, MobileNet v2) adapted to our own model design.

```shell
# navigate to them:
mkdir weights
cd weights
```

- To make backbone preloading more convenient, we turn every backbone model into a class inheriting from `nn.Module` in PyTorch:
  - ResNet: `ssd.pytorch.tencent/models/resnet.py`
  - VGG for SSD + RefineDet: `ssd.pytorch.tencent/models/vgg.py`
  - MobileNet v1: `ssd.pytorch.tencent/models/mobilenetv1.py`
  - MobileNet v2: `ssd.pytorch.tencent/models/mobilenetv2.py`

Based on this design, all backbone layers returned by the functions in `backbones.py` already have their pretrained weights loaded.

- To train SSD, run the train script and specify the parameters listed in `train_test_vrmSSD.py` as flags, or change their default values manually.

```shell
# Use the VOC dataset by default

# Train + test the SSD model with a VGG backbone
python3 train_test_vrmSSD.py --evaluate True # testing while training
python3 train_test_vrmSSD.py # training only

# Train + test the SSD model with a ResNet backbone
python3 train_test_vrmSSD.py --use_res --evaluate True # testing while training
python3 train_test_vrmSSD.py --use_res # training only

# Train + test the SSD model with a MobileNet v1 backbone
python3 train_test_vrmSSD.py --use_m1 --evaluate True # testing while training
python3 train_test_vrmSSD.py --use_m1 # training only

# Train + test the SSD model with a MobileNet v2 backbone
python3 train_test_vrmSSD.py --use_m2 --evaluate True # testing while training
python3 train_test_vrmSSD.py --use_m2 # training only

# Use the WEISHI dataset (append to the commands above)
--dataset WEISHI --dataset_root _path_for_WEISHI_ROOT --jpg_xml_path _path_to_your_jpg_xml_txt

# Use the XL dataset (append to the commands above)
--dataset XL --dataset_root _path_for_XL_ROOT
```

- To train RefineDet, run the train script and specify the parameters listed in `train_test_refineDet.py` as flags, or change their default values manually.

```shell
# Use the VOC dataset by default

# Train + test the RefineDet model
python3 train_test_refineDet.py --evaluate True # testing while training
python3 train_test_refineDet.py # training only

# Use the WEISHI dataset (append to the commands above)
--dataset WEISHI --dataset_root _path_for_WEISHI_ROOT --jpg_xml_path _path_of_your_jpg_xml

# Use the XL dataset (append to the commands above)
--dataset XL --dataset_root _path_for_XL_ROOT
```

- Note:

- For training, an NVIDIA GPU is strongly recommended for speed.

- For instructions on Visdom usage/installation, see the Installation section.

- You can resume training from a checkpoint by passing its path as one of the training parameters (again, see `--resume` for options).
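To illustrate how the flags used above could be declared with Python's `argparse`, here is a minimal sketch. The flag names come from the commands in this README; the defaults, the `choices` list, the `str2bool` helper, the mutual exclusivity of the backbone switches, and the `backbone_name` function are assumptions, not code copied from `train_test_vrmSSD.py`. Note that `--evaluate True` passes the string `"True"`, so it needs an explicit string-to-bool conversion.

```python
import argparse


def str2bool(value):
    """Convert strings such as 'True'/'false'/'1' into a bool (hypothetical helper)."""
    if isinstance(value, bool):
        return value
    if value.lower() in ("true", "t", "yes", "1"):
        return True
    if value.lower() in ("false", "f", "no", "0"):
        return False
    raise argparse.ArgumentTypeError("Boolean value expected, got %r" % value)


def build_parser():
    # Flag names mirror this README; defaults and choices are assumptions.
    parser = argparse.ArgumentParser(description="SSD training (sketch)")
    parser.add_argument("--dataset", default="VOC", choices=["VOC", "XL", "WEISHI"])
    parser.add_argument("--dataset_root", default=None)
    parser.add_argument("--jpg_xml_path", default=None)
    parser.add_argument("--evaluate", type=str2bool, default=False)
    parser.add_argument("--resume", default=None, help="checkpoint path to resume from")
    # Backbone switches (assumed mutually exclusive); VGG is used when none is given.
    group = parser.add_mutually_exclusive_group()
    group.add_argument("--use_res", action="store_true")
    group.add_argument("--use_m1", action="store_true")
    group.add_argument("--use_m2", action="store_true")
    return parser


def backbone_name(args):
    """Map the parsed switches to a backbone label (hypothetical helper)."""
    if args.use_res:
        return "resnet"
    if args.use_m1:
        return "mobilenetv1"
    if args.use_m2:
        return "mobilenetv2"
    return "vgg"


args = build_parser().parse_args(["--use_res", "--evaluate", "True"])
print(backbone_name(args), args.evaluate)  # resnet True
```

Parsing an explicit list keeps the example independent of `sys.argv`; a real script would call `parse_args()` with no arguments.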

To evaluate a trained network or checkpoint (on VOC or VOC-like datasets only), use `eval_voc_vrmSSD.py`:

```shell
# Evaluate vggSSD on VOC separately
python3 eval_voc_vrmSSD.py --trained_model weights/_your_trained_SSD_model_.pth

# Evaluate resnetSSD on VOC separately
python3 eval_voc_vrmSSD.py --use_res --trained_model weights/_your_trained_SSD_model_.pth

# Evaluate mobileSSD v1 on VOC separately
python3 eval_voc_vrmSSD.py --use_m1 --trained_model weights/_your_trained_SSD_model_.pth

# Evaluate mobileSSD v2 on VOC separately
python3 eval_voc_vrmSSD.py --use_m2 --trained_model weights/_your_trained_SSD_model_.pth

# Note: .pth files saved by different versions may differ; this can be solved by adapting the state_dict
```

Use `eval_voc_refineDet.py` to evaluate RefineDet:

```shell
# Evaluate refineDet on VOC separately
python3 eval_voc_refineDet.py --trained_model weights/_your_trained_refineDet_model_.pth

# Note: .pth files saved by different versions may differ; this can be solved by adapting the state_dict
```

For other datasets, please refer to the Test part of the `train_test_*.py` files and extract the `test_net()` function accordingly.
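Adapting a `.pth` file from a different version usually means rewriting its state_dict keys. For example, a checkpoint saved from `nn.DataParallel` prefixes every key with `module.`. Below is a minimal, framework-free sketch of such a rewrite; the function name `rename_state_dict_keys` and its `prefix_map` parameter are hypothetical. With PyTorch you would pass in the dict returned by `torch.load(...)` and hand the result to `model.load_state_dict(...)`.

```python
from collections import OrderedDict


def rename_state_dict_keys(state_dict, prefix_map):
    """Return a new OrderedDict whose keys have their first matching prefix rewritten.

    prefix_map maps an old prefix to a new one, e.g. {'module.': ''} strips the
    prefix added by nn.DataParallel. Values (tensors) are passed through untouched.
    """
    renamed = OrderedDict()
    for key, value in state_dict.items():
        for old, new in prefix_map.items():
            if key.startswith(old):
                key = new + key[len(old):]
                break  # apply at most one rewrite per key
        renamed[key] = value
    return renamed


# Plain numbers stand in for tensors so the sketch runs without PyTorch.
ckpt = OrderedDict([("module.vgg.0.weight", 1), ("module.L2Norm.weight", 2), ("extras.0.bias", 3)])
print(list(rename_state_dict_keys(ckpt, {"module.": ""})))
# ['vgg.0.weight', 'L2Norm.weight', 'extras.0.bias']
```

Keeping an `OrderedDict` preserves the original parameter order, which makes the result easy to compare against `model.state_dict().keys()`.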

Pruning scripts: `prune_weights_refineDet.py`, `prune_weights_resnetSSD.py`, `prune_weights_vggSSD.py`

```shell
# Use the absolute-weights-based criterion for filter pruning on refineDet (vgg)
python3 prune_weights_refineDet.py --trained_model weights/_your_trained_model_.pth

# Use the absolute-weights-based criterion for filter pruning on vggSSD
python3 prune_weights_vggSSD.py --trained_model weights/_your_trained_model_.pth

# Use the absolute-weights-based criterion for filter pruning on resnetSSD (resnet50)
python3 prune_weights_resnetSSD.py --trained_model weights/_your_trained_model_.pth
```

**Note:** Due to a limitation of PyTorch, if you really need to prune a left-path conv layer, then after running this script please call `prune_rbconv_by_number()` MANUALLY to prune all the following right-bottom layers affected by your pruning.
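The absolute-weights-based criterion follows Li et al. (Pruning Filters for Efficient ConvNets): each output filter of a conv layer is scored by the sum of the absolute values of its weights (its L1 norm), and the lowest-scoring filters are removed. The stdlib-only sketch below shows just that ranking step, with weights as nested lists shaped `[out_channels][in_channels][kh][kw]`. The function names and the `num_to_prune` parameter are hypothetical; the pruning scripts presumably work on PyTorch conv weights and must also shrink the input channels of the following layer.

```python
def filter_l1_norms(conv_weight):
    """Sum of absolute weights for each output filter.

    conv_weight is nested lists shaped [out_channels][in_channels][kh][kw].
    """
    return [
        sum(abs(w) for channel in filt for row in channel for w in row)
        for filt in conv_weight
    ]


def filters_to_prune(conv_weight, num_to_prune):
    """Indices of the num_to_prune filters with the smallest L1 norm, in ascending index order."""
    norms = filter_l1_norms(conv_weight)
    ranked = sorted(range(len(norms)), key=lambda i: norms[i])
    return sorted(ranked[:num_to_prune])


def keep_filters(conv_weight, pruned):
    """Drop the pruned filters; the next layer's matching input channels must be dropped too."""
    pruned = set(pruned)
    return [filt for i, filt in enumerate(conv_weight) if i not in pruned]


# Toy layer: 4 filters, 1 input channel, 2x2 kernels.
weight = [
    [[[0.5, -0.5], [0.5, -0.5]]],   # L1 = 2.0
    [[[0.01, 0.0], [0.0, -0.02]]],  # L1 = 0.03
    [[[1.0, 1.0], [-1.0, 1.0]]],    # L1 = 4.0
    [[[0.1, -0.1], [0.0, 0.1]]],    # L1 = 0.3
]
print(filters_to_prune(weight, 2))  # [1, 3]
print(len(keep_filters(weight, [1, 3])))  # 2
```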

Loading a trained model for the first prune differs from loading an already pruned/finetuned model for the second and later prunes.

Please change the following code in the `prune_weights_*.py` files accordingly.

```python
import torch
from collections import OrderedDict

# build_ssd, cfg and args come from the surrounding prune_weights_*.py script.
# Use only ONE of the two sections below.

# ------------------------------------------- 1st prune: load model from state_dict
model = build_ssd('train', cfg, cfg['min_dim'], cfg['num_classes'], base='resnet').cuda()
state_dict = torch.load(args.trained_model)
new_state_dict = OrderedDict()
for k, v in state_dict.items():
    head = k[:7]  # head = k[:4]
    if head == 'module.':  # head == 'vgg.'; 'module.' is added by DataParallel
        name = k[7:]  # name = 'base.' + k[4:]
    else:
        name = k
    new_state_dict[name] = v
model.load_state_dict(new_state_dict)
# model.load_state_dict(torch.load(args.trained_model))

# ------------------------------------------- >= 2nd prune: load model from previous pruning
model = torch.load(args.trained_model).cuda()
```

Finetuning scripts: `finetune_vggresSSD.py`, `finetune_refineDet.py`

```shell
# Finetune a pruned vggSSD model (train/test on VOC)
python3 finetune_vggresSSD.py --pruned_model prunes/_your_prunned_model_ --lr x --epoch y

# Finetune a pruned resnetSSD model (train/test on VOC)
python3 finetune_vggresSSD.py --use_res --pruned_model prunes/_your_prunned_model_ --lr x --epoch y

# Finetune a pruned refineDet (vgg) model (train/test on VOC)
python3 finetune_refineDet.py --pruned_model prunes/_your_prunned_model --lr x --epoch y
```

| Original | Converted weiliu89 weights | From scratch w/o data aug | From scratch w/ data aug |
|---|---|---|---|
| 77.2 % | 77.26 % | 58.12 % | 77.43 % |

GTX 1060: ~45.45 FPS
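The numbers above are mean average precision (mAP) scores. Assuming the usual PASCAL VOC2007 protocol (this README does not state which AP variant the `eval_voc_*.py` scripts use), per-class AP is the 11-point interpolated average: the mean, over recall thresholds 0, 0.1, ..., 1.0, of the highest precision reached at any recall at or above that threshold. mAP is then the plain average over classes. A stdlib-only sketch, where `voc07_ap` and `mean_ap` are hypothetical names:

```python
def voc07_ap(recall, precision):
    """11-point interpolated AP (PASCAL VOC2007 style).

    recall/precision are cumulative values computed over detections sorted by
    descending confidence, so recall is non-decreasing.
    """
    ap = 0.0
    for step in range(11):
        threshold = step / 10.0
        # Best precision achieved at any recall >= threshold (0 if never reached).
        candidates = [p for r, p in zip(recall, precision) if r >= threshold]
        ap += (max(candidates) if candidates else 0.0) / 11.0
    return ap


def mean_ap(per_class_ap):
    """mAP is the unweighted mean of the per-class APs."""
    return sum(per_class_ap.values()) / len(per_class_ap)


# Toy class with 2 ground-truth boxes and 3 detections: hit, miss, hit.
rec = [0.5, 0.5, 1.0]
prec = [1.0, 0.5, 2.0 / 3.0]
print(round(voc07_ap(rec, prec), 4))  # 0.8485
```

Here the first six thresholds (0 to 0.5) see a best precision of 1.0 and the last five see 2/3, giving (6 + 5 * 2/3) / 11 = 28/33.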

- For other pre-trained networks with different backbones or trained on different datasets, please contact me.

- We are trying to provide PyTorch `state_dicts` (dicts of weight tensors) of the latest SSD model definitions trained on different datasets.

- Currently, we provide the following PyTorch models:

- SSD300 trained on VOC0712 (newest PyTorch weights)

- SSD300 trained on VOC0712 (original Caffe weights)

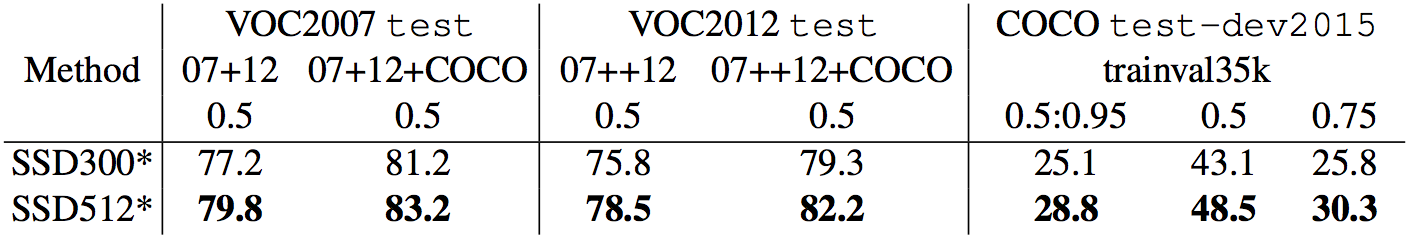

- Our goal is to reproduce this table from the original paper

- Make sure you have jupyter notebook installed.

- Two alternatives for installing jupyter notebook:

```shell
# make sure pip is upgraded
pip3 install --upgrade pip
# install jupyter notebook
pip install jupyter
# Run this inside ssd.pytorch
jupyter notebook
```

- Now navigate to `demo/demo.ipynb` at http://localhost:8888 (by default) and have at it!

- Works on CPU (you may have to tweak `cv2.waitKey` for optimal FPS) or on an NVIDIA GPU.

- This demo currently requires OpenCV 2+ with Python bindings and an onboard webcam.

- You can change the default webcam in `demo/live.py`.

- Install the imutils package to leverage multi-threading on CPU:

```shell
pip install imutils
```

- Running `python -m demo.live` opens the webcam and begins detecting!

We hope to complete the following to-do list in the near future.

- Still to come:

- Fix the mistake in the implementation of top_k/max_per_image

- Change the learning rate schedule to decay by a factor of 0.98 every epoch, and by a factor of 1/10 when mAP plateaus

- Support for the MS COCO dataset

- Support for SSD512 and RefineDet512 training and testing
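One possible reading of the planned learning-rate change, sketched in plain Python: multiply the LR by 0.98 after every epoch, and additionally by 0.1 once validation mAP stops improving. The class name `PlannedLRSchedule` and the `patience`/`min_delta` values are assumptions, since the plan does not define when mAP counts as stable. In PyTorch, a similar effect could be approximated by combining `torch.optim.lr_scheduler.ExponentialLR(optimizer, gamma=0.98)` with `torch.optim.lr_scheduler.ReduceLROnPlateau(optimizer, mode='max', factor=0.1)`.

```python
class PlannedLRSchedule:
    """Hypothetical sketch of the planned schedule (names and defaults are assumptions).

    Every epoch the LR is multiplied by epoch_decay (0.98). If validation mAP has
    not improved by more than min_delta for `patience` consecutive epochs, the LR
    is additionally multiplied by plateau_factor (1/10).
    """

    def __init__(self, base_lr, epoch_decay=0.98, plateau_factor=0.1, patience=3, min_delta=1e-3):
        self.lr = base_lr
        self.epoch_decay = epoch_decay
        self.plateau_factor = plateau_factor
        self.patience = patience
        self.min_delta = min_delta
        self.best_map = None
        self.bad_epochs = 0

    def step(self, val_map):
        """Call once at the end of each epoch with that epoch's validation mAP; returns the new LR."""
        self.lr *= self.epoch_decay
        if self.best_map is None or val_map > self.best_map + self.min_delta:
            self.best_map = val_map
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
            if self.bad_epochs >= self.patience:
                self.lr *= self.plateau_factor
                self.bad_epochs = 0  # start a fresh patience window after each drop
        return self.lr


schedule = PlannedLRSchedule(base_lr=1e-3, patience=2)
for epoch_map in [0.60, 0.65, 0.65, 0.65]:
    print(schedule.step(epoch_map))
```

Resetting the counter after each drop avoids cutting the LR by 1/10 on every subsequent epoch of the same plateau.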

- xuhuahuang, intern at Tencent YouTu Lab, 07/2018

Thanks to Max deGroot and Ellis Brown: this work is built on their original SSD implementation in PyTorch.

- Jaco. "PyTorch Implementation of [1611.06440] Pruning Convolutional Neural Networks for Resource Efficient Inference." https://github.com/jacobgil/pytorch-pruning

References

- Implementation of variants of the SSD model. https://github.com/lzx1413/PytorchSSD

- A useful explanation of MobileNet v2. http://machinethink.net/blog/mobilenet-v2/

- A useful explanation of MobileNet v1. http://machinethink.net/blog/googles-mobile-net-architecture-on-iphone/

- Wei Liu, et al. "SSD: Single Shot MultiBox Detector." ECCV2016.

- Original Implementation (CAFFE)

- A huge thank you to Alex Koltun and his team at Webyclip for their help in finishing the data augmentation portion.