Self-supervised Deep Reinforcement Learning with Generalized Computation Graphs for Robot Navigation

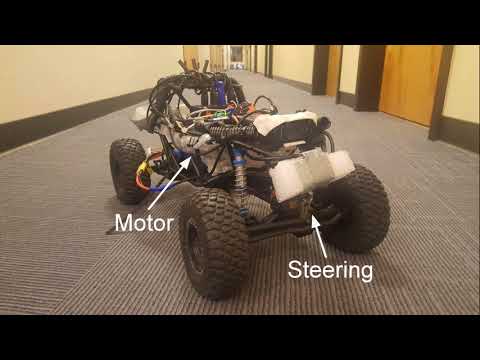

Abstract: Enabling robots to autonomously navigate complex environments is essential for real-world deployment. Prior methods approach this problem by having the robot maintain an internal map of the world, and then use a localization and planning method to navigate through the internal map. However, these approaches often include a variety of assumptions, are computationally intensive, and do not learn from failures. In contrast, learning-based methods improve as the robot acts in the environment, but are difficult to deploy in the real world due to their high sample complexity. To address the need to learn complex policies with few samples, we propose a generalized computation graph that subsumes value-based model-free methods and model-based methods, with specific instantiations interpolating between model-free and model-based. We then instantiate this graph to form a navigation model that learns from raw images and is sample efficient. Our simulated car experiments explore the design decisions of our navigation model, and show our approach outperforms single-step and N-step double Q-learning. We also evaluate our approach on a real-world RC car and show it can learn to navigate through a complex indoor environment with a few hours of fully autonomous, self-supervised training.


This repository contains the code to run the simulation experiments. The main code is in sandbox/gkahn/gcg, while the rllab code was used for infrastructure purposes (e.g., running experiments on EC2).

Clone the repository and add it to your PYTHONPATH.

Install Anaconda using the Python 2.7 installer.

We assume throughout that the current directory is sandbox/gkahn/gcg. Create a new Anaconda environment and activate it:

$ CONDA_SSL_VERIFY=false conda env create -f environment.yml

$ source activate gcg

Install Panda3D:

$ pip install --pre --extra-index-url https://archive.panda3d.org/ panda3d

Increase the simulation speed by running

$ nvidia-settings

and disabling "Sync to VBlank" under "OpenGL Settings".

To drive in the simulation environment, run

$ python envs/rccar/square_cluttered_env.py

The keyboard commands are:

- w: forward
- x: backward
- a: left
- d: right
- s: stop
- r: reset

The yamls folder contains experiment configuration files for Double Q-learning, 5-step Double Q-learning, and our approach.
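For reference, the two baselines differ mainly in how the bootstrapped Q-learning target is built. The sketch below is a generic N-step double Q-learning target: N = 1 gives single-step Double Q-learning and N = 5 the 5-step variant. It illustrates the standard technique only and is not the repository's implementation; the function name `nstep_double_q_target` and its arguments are hypothetical.

```python
def nstep_double_q_target(rewards, done, q_online_next, q_target_next, gamma=0.99):
    """Generic N-step double Q-learning target (illustrative sketch).

    rewards:       the N observed rewards r_t, ..., r_{t+N-1}
    done:          True if the episode ended within these N steps (no bootstrap)
    q_online_next: online-network Q-values for each action at s_{t+N}
    q_target_next: target-network Q-values for each action at s_{t+N}
    """
    n = len(rewards)
    # Discounted sum of the N observed rewards.
    ret = sum(gamma ** k * r for k, r in enumerate(rewards))
    if done:
        return ret
    # Double Q-learning: the online network selects the action...
    best = max(range(len(q_online_next)), key=lambda a: q_online_next[a])
    # ...and the target network evaluates it, discounted by gamma^N.
    return ret + gamma ** n * q_target_next[best]
```

Decoupling action selection (online network) from action evaluation (target network) is what distinguishes double Q-learning from standard Q-learning, which takes the max over a single network and tends to overestimate values.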

These yaml files can be adapted to form alternative instantiations of the generalized computation graph. Please see the example yaml files for detailed descriptions of the configuration options.

To run our approach, execute

$ python run_exp.py --exps ours

The results will be stored in the gcg/data folder.

To run another configuration, replace "ours" with the name of the desired yaml file (e.g., "dql" or "nstep_dql").
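To compare several configurations, one option is to invoke run_exp.py once per yaml name. This is a minimal sketch assuming it is launched from sandbox/gkahn/gcg with the gcg environment active; `run_experiments` and its `dry_run` flag are hypothetical helpers, not part of the repository.

```python
import subprocess


def run_experiments(names, dry_run=False):
    """Run run_exp.py once per yaml config name, sequentially (hypothetical helper)."""
    # One command per configuration, mirroring: python run_exp.py --exps <name>
    commands = [["python", "run_exp.py", "--exps", name] for name in names]
    if not dry_run:
        for cmd in commands:
            # Raises CalledProcessError if an experiment exits with a non-zero status.
            subprocess.check_call(cmd)
    return commands


if __name__ == "__main__":
    run_experiments(["ours", "dql", "nstep_dql"])
```

Launching each configuration as its own process avoids assuming that --exps accepts several names at once, which is not stated here.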

Gregory Kahn, Adam Villaflor, Bosen Ding, Pieter Abbeel, Sergey Levine. "Self-supervised Deep Reinforcement Learning with Generalized Computation Graphs for Robot Navigation." arXiv:1709.10489