Paper | Review | Experiment video | 5min presentation at CoRL 2020

This repository contains the code for synthetic training of the following robotic tasks from the paper:

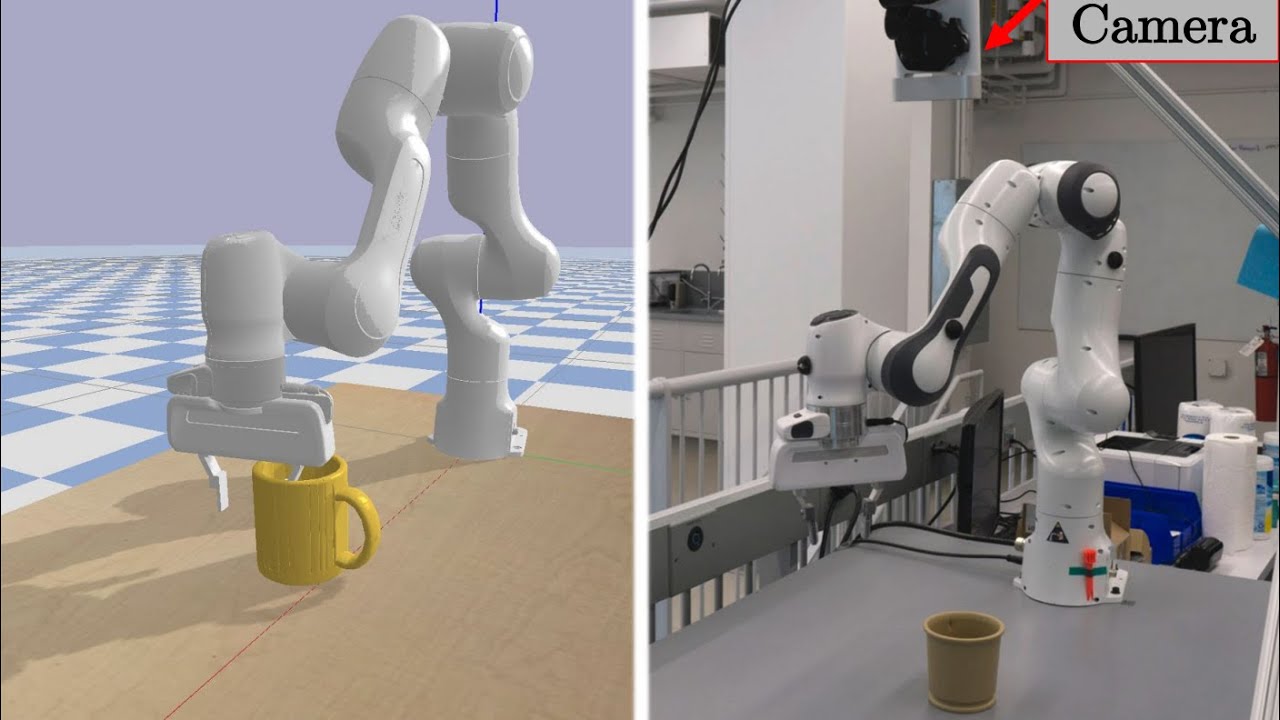

- Grasping diverse mugs

- Planar box pushing using visual feedback

- Vision-based navigation through home environments

Although the code for all examples is included here, only the pushing example can be run without any additional code or resources. The other two examples require data from online object datasets and object post-processing, which can take a significant amount of time to set up and involves licensing. In contrast, all objects (rectangular boxes) used for the pushing example can be generated as URDF files (`generateBox.py`).

Moreover, we provide the pre-trained weights for the decoder network of the cVAE for the pushing example. The posterior policy distribution can then be trained using these weights and the prior distribution (unit Gaussians).
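To make the prior/posterior relationship concrete, here is a minimal sketch of sampling a latent vector from a diagonal Gaussian and computing its KL divergence from the unit Gaussian prior. It assumes the posterior is a diagonal Gaussian parameterized by a mean and a log standard deviation per latent dimension; the function names `sample_latent` and `kl_to_unit_gaussian` are hypothetical and do not come from this repository.

```python
import math
import random


def sample_latent(mu, log_std, rng):
    """Draw z ~ N(mu, diag(exp(log_std)^2)) one dimension at a time."""
    return [m + math.exp(ls) * rng.gauss(0.0, 1.0) for m, ls in zip(mu, log_std)]


def kl_to_unit_gaussian(mu, log_std):
    """KL( N(mu, diag(sigma^2)) || N(0, I) ), summed over latent dimensions.

    Per dimension: 0.5 * (sigma^2 + mu^2 - 1) - log(sigma).
    """
    return sum(
        0.5 * (math.exp(2.0 * ls) + m * m - 1.0) - ls
        for m, ls in zip(mu, log_std)
    )


# Hypothetical 4-dimensional latent; mu = 0 and log_std = 0 recover the prior.
rng = random.Random(0)
prior_sample = sample_latent([0.0] * 4, [0.0] * 4, rng)
prior_kl = kl_to_unit_gaussian([0.0] * 4, [0.0] * 4)  # exactly 0 at the prior
```

Starting the posterior at the prior gives zero KL divergence; the divergence grows as the mean and standard deviation move away from the unit Gaussian.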

- pybullet

- torch

- ray

- cvxpy

- `..._bc.py` is for behavioral cloning training using collected demonstrations.

- `..._es.py` is for PAC-Bayes "fine-tuning" using Natural Evolution Strategies. It also computes the final bound at the end of training.

- `..._bound.py` is for computing the final bound.
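As a rough illustration of what computing a final bound can involve, the sketch below inverts the Bernoulli KL divergence by bisection and applies it twice: once for a sample convergence step over `L` sampled policies per environment, and once for a PAC-Bayes step over `N` training environments. This is one commonly used form of such a bound, not necessarily the exact computation in this repository's scripts; the function names, the constants inside the logarithms, and the default `delta` values are all assumptions.

```python
import math


def bernoulli_kl(q, p):
    """KL(Bernoulli(q) || Bernoulli(p)), treating 0 * log(0) as 0."""
    eps = 1e-12
    p = min(max(p, eps), 1.0 - eps)  # keep the logs finite
    kl = 0.0
    if q > 0.0:
        kl += q * math.log(q / p)
    if q < 1.0:
        kl += (1.0 - q) * math.log((1.0 - q) / (1.0 - p))
    return kl


def kl_inverse(q, c, tol=1e-9):
    """Largest p in [q, 1] with KL(Bernoulli(q) || Bernoulli(p)) <= c (bisection)."""
    lo, hi = q, 1.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if bernoulli_kl(q, mid) <= c:
            lo = mid
        else:
            hi = mid
    return lo


def two_step_bound(emp_cost, kl_div, N, L, delta=0.01, delta_prime=0.009):
    """Assumed two-step bound on the expected cost (costs scaled to [0, 1]).

    emp_cost: average cost over N environments with L sampled policies each.
    kl_div:   KL divergence between posterior and prior policy distributions.
    """
    # Step 1 (assumed form): account for using only L policy samples.
    sample_bound = kl_inverse(emp_cost, math.log(2.0 / delta_prime) / L)
    # Step 2 (assumed form): PAC-Bayes regularizer over N environments.
    reg = (kl_div + math.log(2.0 * math.sqrt(N) / delta)) / N
    return kl_inverse(sample_bound, reg)
```

Under these assumptions, a larger `L` shrinks the sample convergence term and tightens the result, at the cost of more rollouts per environment.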

- Generate a large number (3000) of boxes by running `python generateBox.py --obj_folder=...`, specifying the path where the generated object URDF files should be stored.

- Modify `obj_folder` in `push_pac_easy.json` and `push_pac_hard.json`.

- Train pushing tasks ("Easy" or "Hard" difficulty) by running `python trainPush_es.py push_pac_easy` (or `hard`). The final bound is also computed if `L` (the number of policies sampled for each environment when computing the sample convergence bound) is specified in the JSON file. (Note: the default number of training environments is 1000, as set in the JSON files. With `num_cpus=20` on a moderately powerful desktop, each training step takes 20 minutes. We recommend training on an Amazon AWS c5.24xlarge instance, which has 96 threads. Also, a useful final bound requires a large `L` and can take significant computation.)

- Visualize a pushing rollout by running `python testPushRollout.py --obj_folder=... --posterior_path=...`. If `posterior_path` is not provided, the prior policy distribution (unit Gaussians) is used. Otherwise, the path should be `push_result/push_pac_easy/train_details` (or `hard`).
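The `obj_folder` change described above can also be scripted instead of edited by hand. The sketch below assumes `obj_folder` is a top-level key in each JSON file; the actual layout of the config files may differ, and `set_obj_folder` is a hypothetical helper, not part of this repository.

```python
import json
from pathlib import Path


def set_obj_folder(config_path, obj_folder):
    """Rewrite the (assumed top-level) "obj_folder" entry of a JSON config."""
    path = Path(config_path)
    config = json.loads(path.read_text())
    config["obj_folder"] = obj_folder  # assumption: the key is not nested
    path.write_text(json.dumps(config, indent=4) + "\n")
    return config
```

For example, calling `set_obj_folder("push_pac_easy.json", box_dir)` and then `set_obj_folder("push_pac_hard.json", box_dir)` would point both configs at the same folder of generated boxes, where `box_dir` is whatever path was passed to `generateBox.py`.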

- Provide the mesh-processing code for mugs from ShapeNet.

- Provide the collected demonstrations for both pushing and grasping examples.

(Note: we do not plan to release instructions to replicate results of the indoor navigation example in the near future. We plan to refine the simulation in a future version of the paper.)

- We assume that the latent variables in the cVAE are independent of the states, i.e., p(z|s_{1:T}) = p(z).