If you feel this useful, please consider cite:

@inproceedings{ren-cvpr2018,

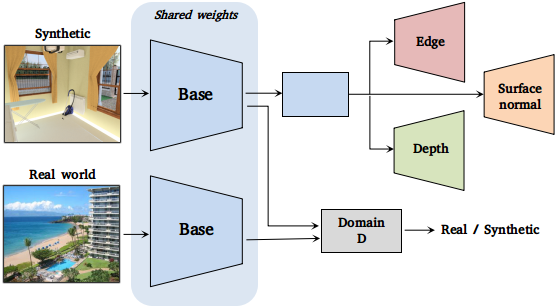

title = {Cross-Domain Self-supervised Multi-task Feature Learning using Synthetic Imagery},

author = {Ren, Zhongzheng and Lee, Yong Jae},

booktitle = {IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

year = {2018}

}Feel free to contact Jason Ren (zr5@illinois.edu) if you have any questions!

- PyTorch 0.4 (some evaluation code borrowed from other projects requires Caffe)

- Python 2 (one evaluation script requires Python 3)

- NVIDIA GPU with CUDA and cuDNN (sorry, no CPU version)

- Install PyTorch 0.4 and torchvision from http://pytorch.org

- Python packages
  - torchvision
  - numpy
  - opencv
  - tensorflow (necessary for TensorBoard; this will be changed to tensorboardX)
  - scikit-image

- Clone this repo:

git clone https://github.com/jason718/game-feature-learning

cd game-feature-learning

- Change the config files under configs.
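Before training, it can help to confirm that the packages listed above are importable. The snippet below is a minimal sketch and not part of this repo: the import names `cv2` (for opencv) and `skimage` (for scikit-image) are the usual ones for those packages, and the helper `find_missing` is a hypothetical name introduced here for illustration.

```python
import importlib

# Import names are assumptions: opencv is normally imported as "cv2"
# and scikit-image as "skimage".
REQUIRED_MODULES = ["torch", "torchvision", "numpy", "cv2", "tensorflow", "skimage"]


def find_missing(modules=REQUIRED_MODULES):
    """Return the names of modules that fail to import in this environment."""
    missing = []
    for name in modules:
        try:
            importlib.import_module(name)
        except Exception:
            # Any failure to import (not installed, broken install) counts as missing.
            missing.append(name)
    return missing


def main():
    missing = find_missing()
    if missing:
        print("Missing packages: " + ", ".join(missing))
        return
    import torch
    # The code has no CPU path, so a visible CUDA device is required.
    print("CUDA available: " + str(torch.cuda.is_available()))


if __name__ == "__main__":
    main()
```

The check only uses `importlib.import_module`, so it should run under both Python 2 and Python 3.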

- Caffe model (CaffeNet): coming in the second update

- PyTorch model: coming in the second update

Since I greatly changed the code structure, I am retraining with the new code to reproduce the paper results.

- SUNCG: Download the SUNCG images from the SUNCG website, and arrange the files in the following structure:

    suncg_image
    ├── depth
        ├── room_id1
        ├── ...
    ├── normal
        ├── room_id1
        ├── ...
    ├── edge
        ├── room_id1
        ├── ...
    └── lab
        ├── room_id1
        ├── ...

- SceneNet: Download the SceneNet images from the SceneNet website, and arrange the files in the following structure:

    scenenet_image
    └── train
        ├── 1
        ├── 2
        ├── ...

Please check scripts/surface_normals_code to generate surface normals from depth maps.
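A wrong folder layout is easy to miss until training fails, so a quick check can save time. The snippet below is a minimal sketch and not part of this repo. It takes the four folder names (`depth`, `normal`, `edge`, `lab`) from the SUNCG structure described here, and it assumes that every room id should appear under every one of them. The helper name `check_suncg_layout` is hypothetical.

```python
import os

# Folder names taken from the SUNCG layout described in this README.
SUNCG_MODALITIES = ("depth", "normal", "edge", "lab")


def check_suncg_layout(root, modalities=SUNCG_MODALITIES):
    """Return a list of problems found under root; an empty list means the layout looks fine."""
    problems = []
    rooms = {}
    for modality in modalities:
        path = os.path.join(root, modality)
        if not os.path.isdir(path):
            problems.append("missing folder: " + path)
            continue
        # Collect the room sub-folders present for this modality.
        rooms[modality] = set(
            d for d in os.listdir(path) if os.path.isdir(os.path.join(path, d))
        )
    if rooms:
        all_rooms = set.union(*rooms.values())
        # Assumption: each room id should exist under every modality folder.
        for modality, present in sorted(rooms.items()):
            for room in sorted(all_rooms - present):
                problems.append("room %s has no %s folder" % (room, modality))
    return problems
```

For example, `check_suncg_layout("/path/to/suncg_image")` returns the list of issues to fix before pointing the config files at the dataset.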

- Dataset for domain adaptation:
  - Places-365: Download the Places images from the Places website.
  - Alternatively, you can use another dataset for domain adaptation, such as ImageNet.

- Train a model:

sh ./scripts/train.sh

- Evaluate on feature learning
  - Read each README.md under the folder "eval-3rd-party"

- Evaluate on three tasks
  - Coming in the third update

There are lots of awesome papers studying self-supervision for various tasks such as image/video representation learning, reinforcement learning, and robotics. I am maintaining a paper list [awesome-self-supervised-learning] on GitHub. You are more than welcome to contribute and share :)

Supervised Learning is awesome but limited. Un-/Self-supervised learning generalizes better and sometimes also works better (which is already true in some geometry tasks)!

This work was supported in part by the National Science Foundation under Grant No. 1748387, the AWS Cloud Credits for Research Program, and GPUs donated by NVIDIA. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the National Science Foundation.