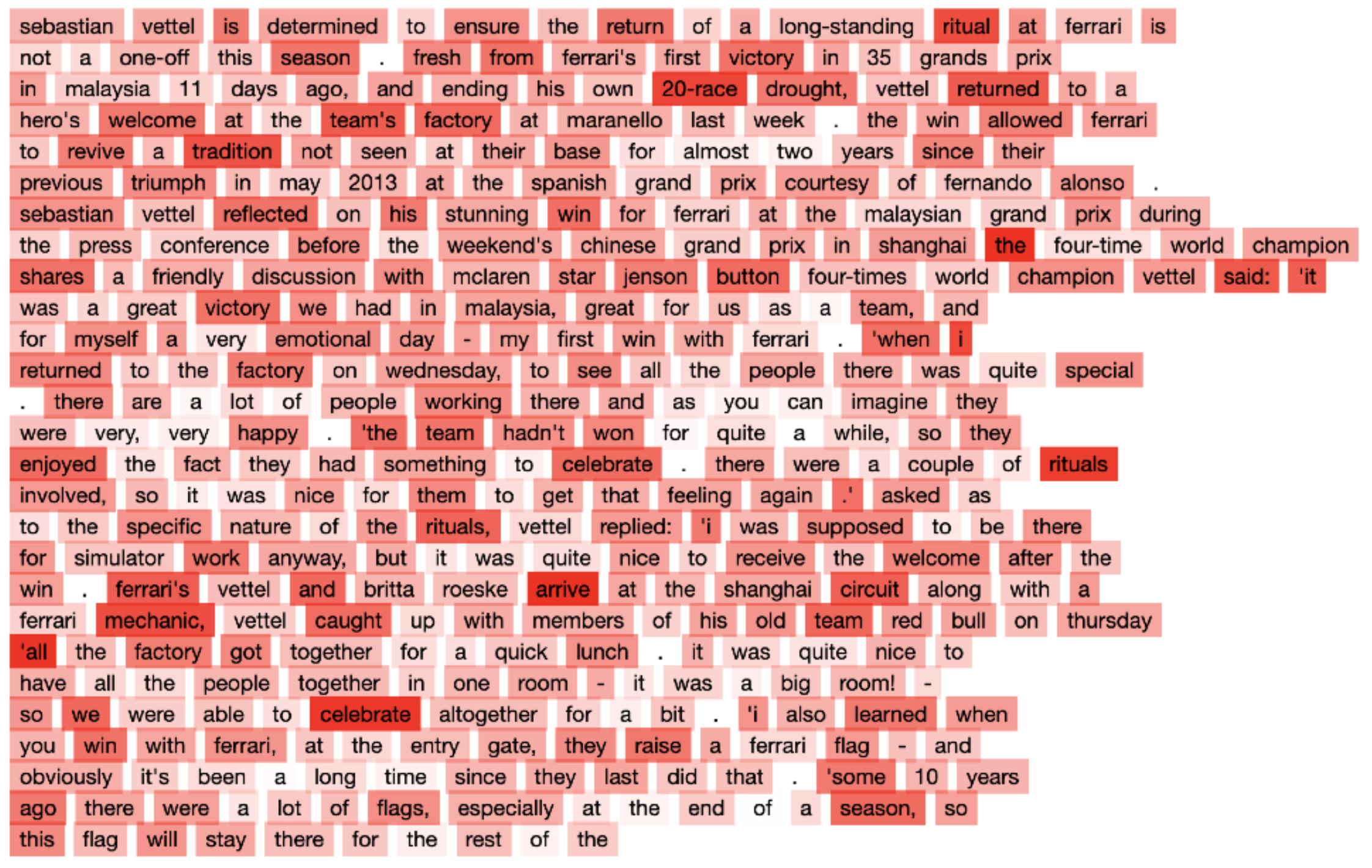

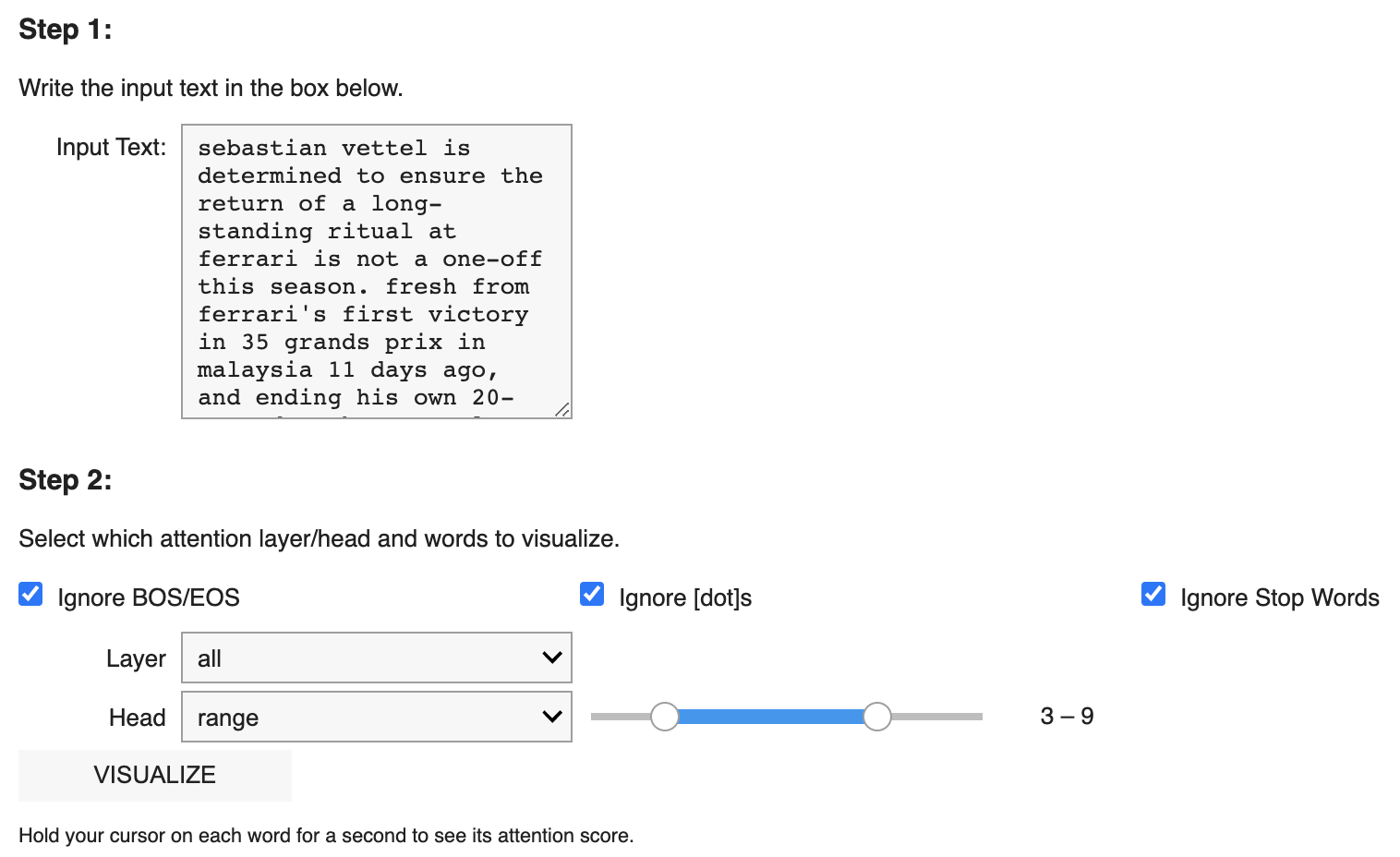

A fun project that turned into a Python package with a simple UI for visualizing self-attention scores from the RoBERTa model. It is implemented for the IPython Notebook environment, with options to ignore tokens such as "BOS", "[dot]s", or stopwords. You can also restrict the view to a range of layers/heads or to specific ones.
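To make the idea concrete, here is a minimal sketch of how per-token scores could be derived from self-attention weights while honoring a layer/head selection and an ignore list. It is an illustration only and not necessarily how the package computes its scores: the function name `token_scores`, the "average attention received, then min-max normalize" scoring rule, and the random weights standing in for real RoBERTa attentions are all assumptions.

```python
import numpy as np


def token_scores(attentions, tokens, layers=None, heads=None, ignore=()):
    """Score each token by the average attention it receives (hypothetical helper).

    attentions: array of shape (num_layers, num_heads, seq_len, seq_len), where
                attentions[l, h, i, j] is how much token i attends to token j.
    layers, heads: indices to include; None means "use all".
    ignore: token strings whose score is forced to 0.
    """
    attn = np.asarray(attentions, dtype=float)
    layers = list(range(attn.shape[0])) if layers is None else list(layers)
    heads = list(range(attn.shape[1])) if heads is None else list(heads)

    # Keep only the requested layers and heads, then average over both.
    selected = attn[layers][:, heads]        # (L', H', seq, seq)
    mean_attn = selected.mean(axis=(0, 1))   # (seq, seq)

    # Attention received by each token: average over all query positions.
    received = mean_attn.mean(axis=0)        # (seq,)

    # Min-max normalize over the tokens that are not ignored.
    keep = np.array([tok not in ignore for tok in tokens])
    scores = np.zeros(len(tokens), dtype=float)
    if keep.any():
        kept = received[keep]
        span = kept.max() - kept.min()
        scores[keep] = (kept - kept.min()) / span if span > 0 else 1.0
    return list(zip(tokens, scores.tolist()))


# Random row-normalized weights stand in for real model attentions (assumption).
tokens = ["<s>", "The", "movie", "was", "great", ".", "</s>"]
rng = np.random.default_rng(0)
raw = rng.random((12, 12, len(tokens), len(tokens)))
fake_attentions = raw / raw.sum(axis=-1, keepdims=True)

result = token_scores(
    fake_attentions,
    tokens,
    layers=range(8, 12),          # last four layers only
    heads=[0, 3],                 # two specific heads
    ignore={"<s>", "</s>", "."},  # BOS/EOS and dots
)
for token, score in result:
    print(f"{token:>6}  {score:.2f}")
```

With real attentions, the same function could be fed the per-layer attention tensors of a single sentence stacked into one array; the scores would then drive the word highlighting.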

Run the library on a Google Colab instance using the following link.

The package is currently hosted only on GitHub. You can install it with pip.

pip install git+https://github.com/AlaFalaki/AttentionVisualizer.git

Run the code below in an IPython Notebook.

import AttentionVisualizer as av

obj = av.AttentionVisualizer()

obj.show_controllers(with_sample=True)

The package automatically installs all of its requirements:

- pytorch

- transformers

- ipywidgets

- NLTK
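If the UI fails to load, a quick check like the following can confirm that the four requirements are importable in the current notebook kernel. This is a small sketch using only the standard library; the helper name `missing_requirements` is made up for illustration, and the import names are the usual ones for these packages (PyTorch is imported as `torch`, NLTK as `nltk`).

```python
from importlib.util import find_spec

# Import names of the four requirements listed above.
REQUIREMENTS = ["torch", "transformers", "ipywidgets", "nltk"]


def missing_requirements(names=REQUIREMENTS):
    """Return the names that cannot be found in the current environment."""
    return [name for name in names if find_spec(name) is None]


missing = missing_requirements()
print("Missing:", ", ".join(missing) if missing else "none")
```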

If you are interested in the project and want to know more, I wrote a blog post on Medium that explains the implementation in detail.

Attention Visualizer Package: Showcase Highest Scored Words Using RoBERTa Model

arXiv preprint: Attention Visualizer Package: Revealing Word Importance for Deeper Insight into Encoder-Only Transformer Models

@article{falaki2023attention,

title={Attention Visualizer Package: Revealing Word Importance for Deeper Insight into Encoder-Only Transformer Models},

author={Falaki, Ala Alam and Gras, Robin},

journal={arXiv preprint arXiv:2308.14850},

year={2023}

}