An example of how to train different deep neural networks on the CINIC-10 dataset based on the pytorch-cifar repository.

This example was constructed from kuangliu's excellent pytorch-cifar, the official PyTorch ImageNet example and bearpaw's pytorch-classification. Instead of the CIFAR-10 dataset, this example uses CINIC-10, a drop-in replacement for CIFAR-10 that makes the image classification task harder.

Changing the dataset increases the number of images from 60,000 to 270,000. The images have the same size as those in CIFAR-10 (32x32 pixels), and the dataset is divided into equal train, validation and test splits of 90,000 images each. The dataset is around 650 MB.

In addition to the dataset change, this example contains several other changes, including:

- Cosine annealing learning rate scheduler.

- Training time output.

- Dockerfile for simple dependency management.
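The training time output reports durations in the same "X hours Y minutes" form used in the benchmark table. The sketch below shows one way such output could be produced. The helper names `format_duration` and `timed` are hypothetical and not taken from main.py, and `time.sleep` stands in for an actual training run.

```python
import time


def format_duration(seconds: float) -> str:
    """Format elapsed seconds as 'H hours M minutes' (leftover seconds are dropped)."""
    total_minutes = int(seconds // 60)
    hours, minutes = divmod(total_minutes, 60)
    return f"{hours} hours {minutes} minutes"


def timed(train_fn, *args, **kwargs):
    """Run train_fn and return (its result, elapsed wall-clock seconds)."""
    start = time.perf_counter()
    result = train_fn(*args, **kwargs)
    elapsed = time.perf_counter() - start
    return result, elapsed


if __name__ == "__main__":
    # Hypothetical stand-in for a full training run.
    _, elapsed = timed(time.sleep, 0.1)
    print("Training time:", format_duration(elapsed))
```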

Make sure that the Nvidia drivers and CUDA runtime are installed so that training can run on the GPU, then install the PyTorch build that matches your setup.
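Before starting a long run, it can help to confirm that PyTorch is installed and can see the GPU. The sketch below uses only `torch.cuda.is_available()` and `torch.cuda.get_device_name()`. The `check_gpu` helper itself is hypothetical and not part of this repository.

```python
import importlib.util


def check_gpu() -> dict:
    """Report whether PyTorch is importable and whether it can see a CUDA GPU."""
    report = {"torch_installed": False, "cuda_available": False, "device": None}
    if importlib.util.find_spec("torch") is None:
        return report  # PyTorch is not installed in this environment
    import torch

    report["torch_installed"] = True
    report["cuda_available"] = torch.cuda.is_available()
    if report["cuda_available"]:
        # Name of the first visible GPU, i.e. the card training would use by default
        report["device"] = torch.cuda.get_device_name(0)
    return report


if __name__ == "__main__":
    print(check_gpu())
```

If `cuda_available` is `False` even though a GPU is present, the installed PyTorch build probably does not match the local driver and CUDA setup.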

Download the dataset into the ./data/cinic-10 folder.

```shell
mkdir -p data/cinic-10
curl -L \
  https://datashare.is.ed.ac.uk/bitstream/handle/10283/3192/CINIC-10.tar.gz \
  | tar xz -C data/cinic-10
```
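After extraction, you can sanity-check the download by counting images. This sketch assumes the archive unpacks to `data/cinic-10/{train,valid,test}/<class>/*.png`, which is an assumption about the layout rather than something this README states. With 270,000 images in three equal splits, each split should hold 90,000 images. The `count_images` helper is hypothetical.

```python
from pathlib import Path

# Assumed layout after extraction: data/cinic-10/{train,valid,test}/<class>/*.png
SPLITS = ("train", "valid", "test")
TOTAL_IMAGES = 270_000
EXPECTED_PER_SPLIT = TOTAL_IMAGES // len(SPLITS)  # equal splits -> 90,000 each


def count_images(root: str = "data/cinic-10") -> dict:
    """Count PNG files per class for each split; None marks a missing split folder."""
    counts = {}
    for split in SPLITS:
        split_dir = Path(root) / split
        if not split_dir.is_dir():
            counts[split] = None  # extraction incomplete or layout differs
            continue
        counts[split] = {
            class_dir.name: sum(1 for _ in class_dir.glob("*.png"))
            for class_dir in sorted(split_dir.iterdir())
            if class_dir.is_dir()
        }
    return counts


if __name__ == "__main__":
    for split, per_class in count_images().items():
        if per_class is None:
            print(f"{split}: missing")
            continue
        total = sum(per_class.values())
        status = "ok" if total == EXPECTED_PER_SPLIT else "unexpected"
        print(f"{split}: {len(per_class)} classes, {total} images ({status})")
```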

Run main.py using the Python environment in which you installed PyTorch.

```shell
python main.py
```

This creates a checkpoint folder in your working directory where the best-performing PyTorch model is saved. Note that it is overwritten on re-runs.
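Because the saved model is overwritten on re-runs, you may want to copy it aside before starting a new run. This sketch assumes the folder is named `checkpoint`, based on the wording above. The backup location `checkpoint-backups` and the helper name `backup_checkpoints` are hypothetical choices.

```python
import shutil
import time
from pathlib import Path


def backup_checkpoints(src: str = "checkpoint", dest_root: str = "checkpoint-backups"):
    """Copy the checkpoint folder to a timestamped backup before a re-run.

    Returns the backup path, or None if there is no checkpoint folder yet.
    """
    src_dir = Path(src)
    if not src_dir.is_dir():
        return None  # no previous run, so nothing would be overwritten
    stamp = time.strftime("%Y%m%d-%H%M%S")
    dest = Path(dest_root) / f"{src_dir.name}-{stamp}"
    # copytree creates missing parent folders; it raises if dest already exists
    shutil.copytree(src_dir, dest)
    return dest


if __name__ == "__main__":
    print("Backed up to:", backup_checkpoints())
```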

To run a training session with the VGG16 network using nvidia-docker, run the following:

```shell
nvidia-docker run -it --rm --name cinic-10 antonfriberg/pytorch-cinic-10:latest
```

Currently, the `-it` option is required to run the Docker benchmark.

This implementation achieves the following accuracy after training for 300 epochs on a single Nvidia GTX 1080Ti with a training batch size of 64.

| Model | Accuracy | Native Training Time | Docker Training Time |
|---|---|---|---|
| VGG16 | 84.74% | 3 hours 12 minutes | 3 hours 40 minutes |
| ResNet18 | 87.45% | 4 hours 41 minutes | 4 hours 24 minutes |
| ResNet50 | 88.40% | 15 hours 12 minutes | 15 hours 27 minutes |
| ResNet101 | 88.42% | 24 hours 57 minutes | 25 hours 19 minutes |
| MobileNetV2 | 83.99% | 6 hours 19 minutes | 6 hours 10 minutes |
| ResNeXt29(32x4d) | 88.67% | 14 hours 21 minutes | 14 hours 59 minutes |
| ResNeXt29(2x64d) | 89.09% | 14 hours 6 minutes | 14 hours 47 minutes |
| DenseNet121 | 88.58% | 16 hours 34 minutes | 17 hours 3 minutes |
| PreActResNet18 | 87.01% | 4 hours 19 minutes | 4 hours 19 minutes |
| DPN92 | 88.18% | 38 hours 33 minutes | 43 hours 19 minutes |
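To compare the two columns of the training time table, this sketch converts each duration to minutes and computes the relative change from native to Docker. The helper names are hypothetical. The native and Docker runs also used different driver, CUDA, cuDNN and Python versions, as the software versions listed in this README show, so the differences should not be attributed to Docker alone.

```python
import re

# Training times copied from the benchmark table: (native, docker)
TIMES = {
    "VGG16": ("3 hours 12 minutes", "3 hours 40 minutes"),
    "ResNet18": ("4 hours 41 minutes", "4 hours 24 minutes"),
    "ResNet50": ("15 hours 12 minutes", "15 hours 27 minutes"),
    "ResNet101": ("24 hours 57 minutes", "25 hours 19 minutes"),
    "MobileNetV2": ("6 hours 19 minutes", "6 hours 10 minutes"),
    "ResNeXt29(32x4d)": ("14 hours 21 minutes", "14 hours 59 minutes"),
    "ResNeXt29(2x64d)": ("14 hours 6 minutes", "14 hours 47 minutes"),
    "DenseNet121": ("16 hours 34 minutes", "17 hours 3 minutes"),
    "PreActResNet18": ("4 hours 19 minutes", "4 hours 19 minutes"),
    "DPN92": ("38 hours 33 minutes", "43 hours 19 minutes"),
}


def to_minutes(text: str) -> int:
    """Convert 'H hours M minutes' into total minutes."""
    match = re.fullmatch(r"(\d+) hours (\d+) minutes", text.strip())
    if match is None:
        raise ValueError(f"unrecognized duration: {text!r}")
    hours, minutes = map(int, match.groups())
    return hours * 60 + minutes


def relative_change(native: str, docker: str) -> float:
    """Fractional change of the Docker time relative to the native time."""
    base = to_minutes(native)
    return (to_minutes(docker) - base) / base


if __name__ == "__main__":
    for model, (native, docker) in TIMES.items():
        print(f"{model:18s} {relative_change(native, docker):+.1%}")
```

Run as a script, this reports, for example, +14.6% for VGG16, -6.0% for ResNet18 and +12.4% for DPN92.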

The complete software setup used for the benchmarks is listed below.

| Software | Native Version | Docker Version |
|---|---|---|
| Distro | Ubuntu 16.04 | Ubuntu 16.04 |
| Nvidia Driver | 384.130 | 410.78 |
| Nvidia CUDA | 8.0.61 | 10.0.130 |
| Nvidia cuDNN | 6.0.21 | 7.4.1 |
| Python | 3.5.2 | 3.6.7 |
| PyTorch | 1.0.0 | 1.0.0 |
| TorchVision | 0.2.1 | 0.2.1 |
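To compare your own environment with the table, this sketch collects the versions that Python, PyTorch and TorchVision report about themselves. Note that `torch.version.cuda` is the CUDA version PyTorch was built against, which may differ from the system CUDA toolkit. The driver version is not available from Python, but `nvidia-smi` reports it. The `collect_versions` helper is hypothetical.

```python
import platform


def collect_versions() -> dict:
    """Collect software versions comparable to the rows of the software table."""
    versions = {
        "Python": platform.python_version(),
        "PyTorch": None,
        "CUDA (PyTorch build)": None,
        "cuDNN": None,
        "TorchVision": None,
    }
    try:
        import torch
    except ImportError:
        return versions  # PyTorch not installed: only Python is reported
    versions["PyTorch"] = torch.__version__
    versions["CUDA (PyTorch build)"] = torch.version.cuda  # None for CPU-only builds
    # Integer such as 7401 for cuDNN 7.4.1, or None if cuDNN is unavailable
    versions["cuDNN"] = torch.backends.cudnn.version()
    try:
        import torchvision
    except ImportError:
        pass
    else:
        versions["TorchVision"] = torchvision.__version__
    return versions


if __name__ == "__main__":
    for name, version in collect_versions().items():
        print(f"{name:22s} {version}")
```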

Both the native and the Docker benchmark runs were performed on the same hardware.

| Hardware | Brand | Model | Specification |
|---|---|---|---|
| CPU | Intel | i7 8700K | 6 cores, 3.7 GHz |
| GPU | Nvidia | GTX 1080Ti | 1,531 MHz, 11 GB |
| RAM | Corsair | DDR4 | 2 x 8 GB, 2666 MHz |
| PSU | Corsair | CX750 | 750 W |
| Storage | Intel | 660p NVMe SSD | 1 TB |
| Motherboard | Gigabyte | H370 HD3 | rev. 1.0 |

Instead of adjusting the learning rate manually, a cosine annealing learning rate scheduler is used.
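Cosine annealing (without restarts) lowers the learning rate along half a cosine period:

$\eta_t = \eta_{min} + \tfrac{1}{2}(\eta_{max} - \eta_{min})\left(1 + \cos\frac{\pi t}{T_{max}}\right)$

This is the closed form documented for PyTorch's `torch.optim.lr_scheduler.CosineAnnealingLR(optimizer, T_max, eta_min=0)`. The sketch below evaluates it directly. The initial learning rate of 0.1 and `eta_min = 0` are assumptions rather than values taken from main.py. `t_max = 300` matches the 300-epoch runs described above.

```python
import math


def cosine_annealing_lr(epoch: int, base_lr: float = 0.1, t_max: int = 300,
                        eta_min: float = 0.0) -> float:
    """Learning rate at `epoch` (0 <= epoch <= t_max) under cosine annealing.

    base_lr=0.1 and eta_min=0.0 are assumed values; t_max=300 matches the
    300-epoch training runs.
    """
    return eta_min + 0.5 * (base_lr - eta_min) * (1 + math.cos(math.pi * epoch / t_max))


if __name__ == "__main__":
    for epoch in (0, 75, 150, 225, 300):
        print(epoch, round(cosine_annealing_lr(epoch), 5))
```

The rate starts at `base_lr`, reaches half of it at the midpoint of training and decays smoothly to `eta_min` in the final epoch. This avoids hand-picking the epochs at which to drop the rate.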

- https://github.com/BayesWatch/cinic-10

- https://github.com/kuangliu/pytorch-cifar/

- https://pytorch.org/tutorials/beginner/blitz/cifar10_tutorial.html

- https://pytorch.org/tutorials/beginner/data_loading_tutorial.html

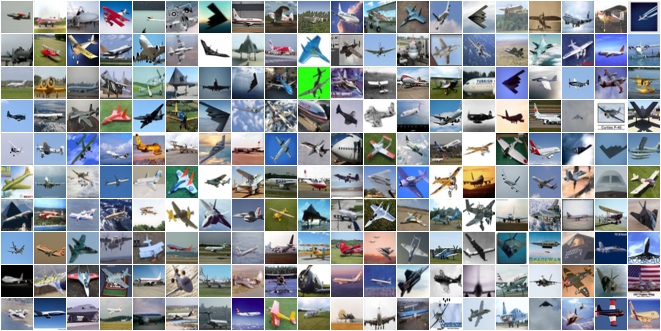

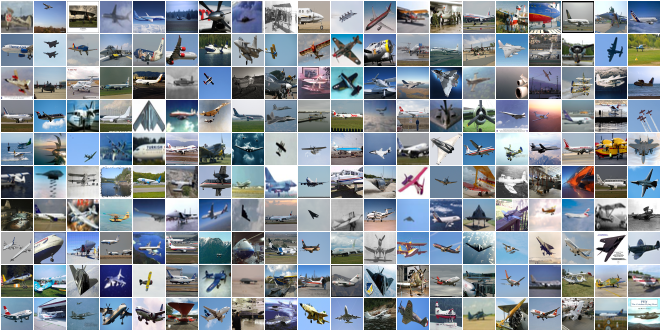

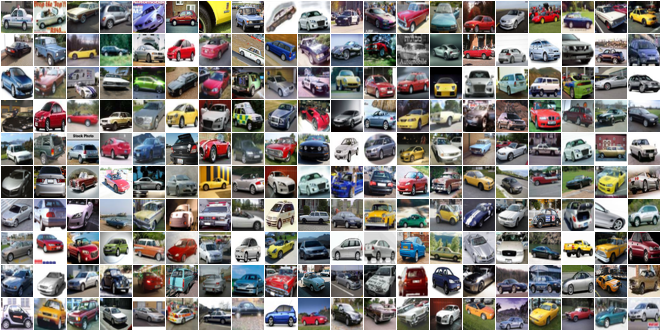

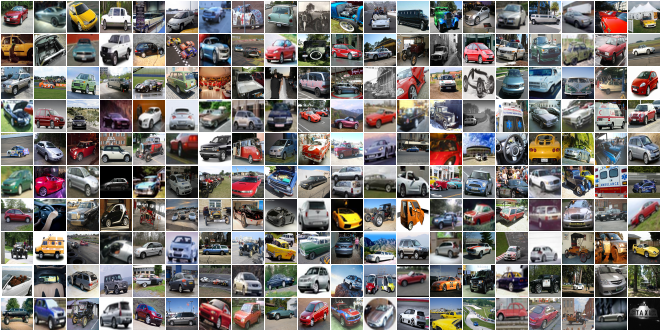

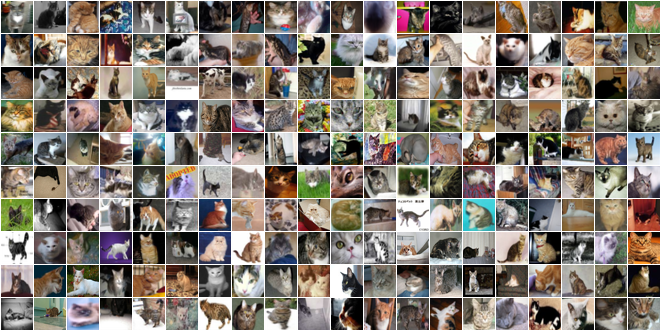

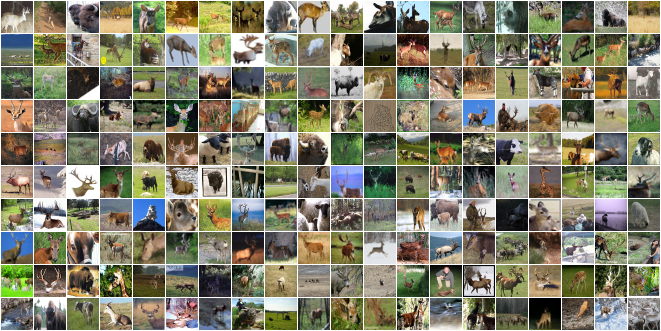

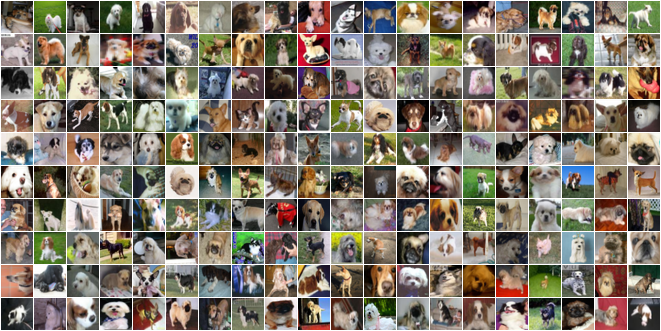

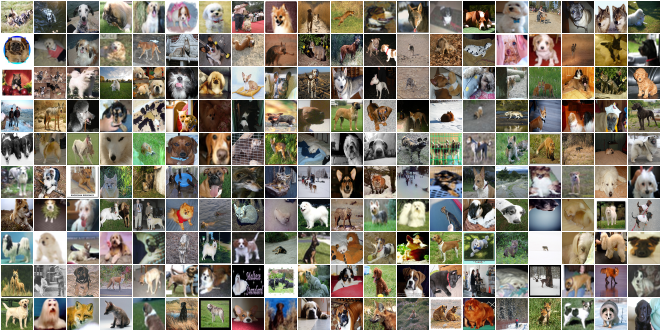

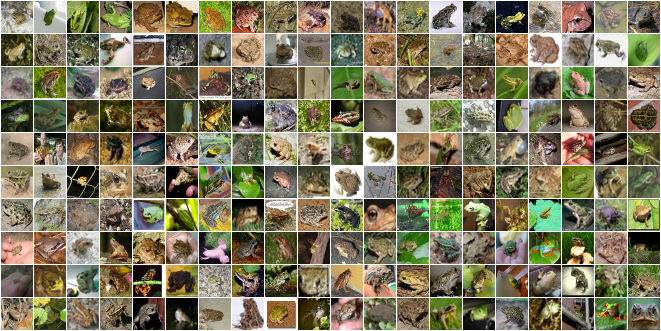

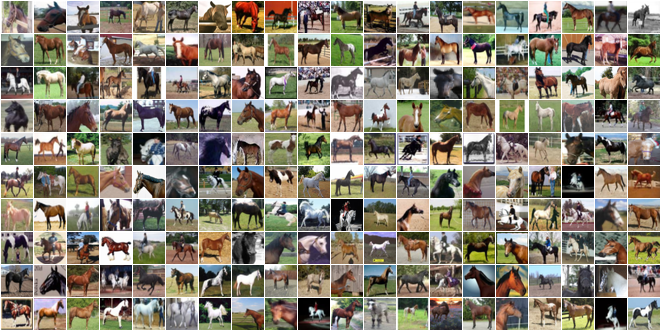

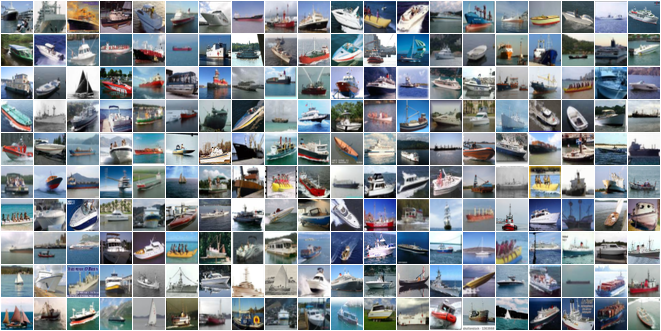

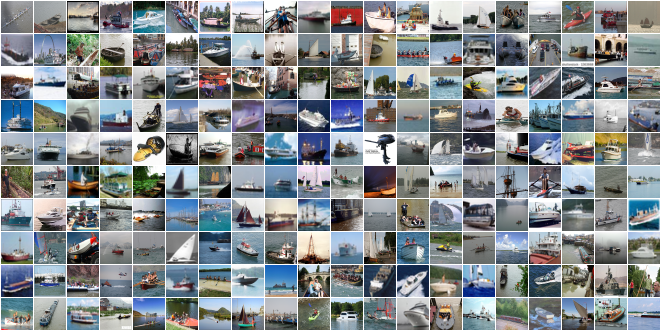

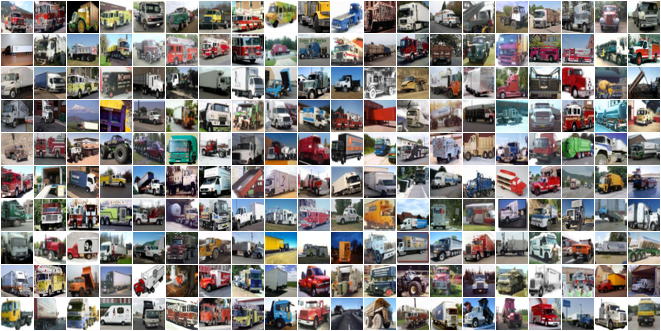

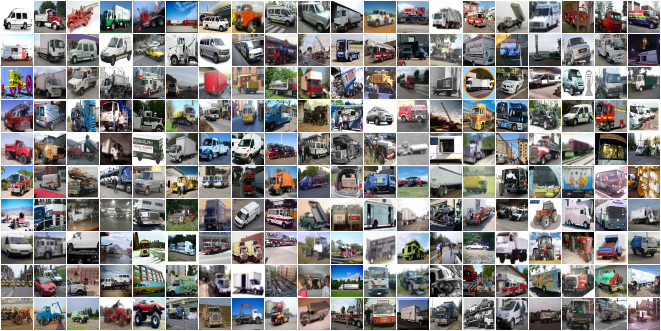

These samples were randomly selected from CINIC-10 and from CIFAR-10 for comparison. CINIC-10 is noticeably noisier than CIFAR-10 because the samples drawn from ImageNet were not vetted.

Luke N. Darlow, Elliot J. Crowley, Antreas Antoniou, and Amos J. Storkey. [CINIC-10 is not ImageNet or CIFAR-10](https://arxiv.org/abs/1810.03505). Report EDI-INF-ANC-1802, arXiv:1810.03505, 2018.

Patryk Chrabaszcz, Ilya Loshchilov, and Frank Hutter. A downsampled variant of ImageNet as an alternative to the CIFAR datasets. arXiv preprint arXiv:1707.08819, 2017.

Alex Krizhevsky. Learning multiple layers of features from tiny images. Master’s thesis, University of Toronto, 2009.

Yann LeCun, Yoshua Bengio, and Geoffrey Hinton. Deep learning. Nature, 521(7553):436–444, 2015.