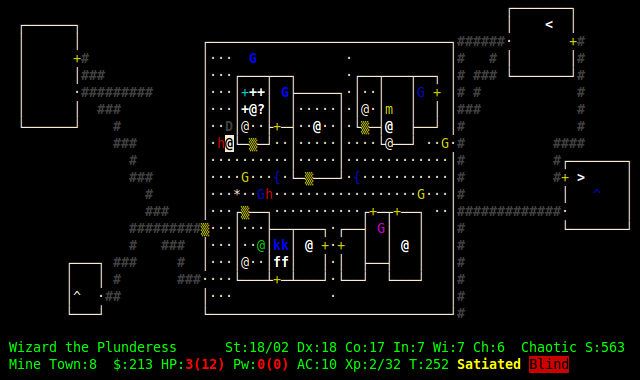

This repository contains two reinforcement learning (RL) implementations that play the game of NetHack. NetHack is a popular single-player, terminal-based, roguelike game that is procedurally generated, stochastic, and challenging. The NetHack Learning Environment (NLE) is a reinforcement learning environment based on NetHack and OpenAI's Gym framework, designed to pose a challenge to current state-of-the-art algorithms. Because its levels are procedurally generated, this testbed encourages advances in exploration, planning, skill acquisition, and other areas. The two RL algorithms in this repo are a Deep Q-Network (DQN) and a Monte-Carlo Tree Search (MCTS) algorithm. Of the two, the MCTS approach consistently achieves better results than the DQN-based approach and is therefore the recommended implementation.
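
Because NLE follows the Gym interface, an agent interacts with it through a reset/step loop. The sketch below illustrates that loop under the assumption of the classic Gym API, where `step` returns `(observation, reward, done, info)`. `StubEnv`, `run_episode`, and `random_policy` are hypothetical names used only for illustration; they are not part of this repository or of NLE.

```python
import random


class StubEnv:
    """Stand-in with the classic Gym interface; NOT the real NLE environment."""

    def __init__(self, episode_length=5, num_actions=4):
        self.episode_length = episode_length
        self.num_actions = num_actions
        self._t = 0

    def reset(self):
        self._t = 0
        return {"step": self._t}

    def step(self, action):
        # Every step yields a reward of 1.0; the episode ends after a fixed length.
        self._t += 1
        done = self._t >= self.episode_length
        return {"step": self._t}, 1.0, done, {}


def run_episode(env, policy, max_steps=1000):
    """Play one episode and return (total_reward, steps_taken)."""
    obs = env.reset()
    total_reward, steps = 0.0, 0
    for _ in range(max_steps):
        action = policy(obs)
        obs, reward, done, _info = env.step(action)
        total_reward += reward
        steps += 1
        if done:
            break
    return total_reward, steps


def random_policy(num_actions, seed=0):
    """Return a policy that ignores the observation and acts uniformly at random."""
    rng = random.Random(seed)
    return lambda obs: rng.randrange(num_actions)


if __name__ == "__main__":
    env = StubEnv()
    print(run_episode(env, random_policy(env.num_actions)))
```

With NLE installed, the stub could in principle be swapped for an environment created with `gym.make("NetHackScore-v0")` after `import gym` and `import nle`, assuming an NLE release that still exposes the classic Gym API.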

To run the agents on the NetHack Learning Environment, you need to install NLE.

NLE requires python>=3.5 and cmake>=3.14 to be installed and available both when building the package and at runtime.
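
The version requirements above can be checked before attempting the install. The following is a minimal sketch using only the standard library; the helper names (`parse_version`, `meets_minimum`, `installed_cmake_version`) are hypothetical, and it assumes that `cmake --version` prints a dotted version number on its first line.

```python
import re
import shutil
import subprocess
import sys

MIN_PYTHON = (3, 5)   # minimum Python version stated above
MIN_CMAKE = (3, 14)   # minimum cmake version stated above


def parse_version(text):
    """Extract the first dotted version number from text as a tuple of ints."""
    match = re.search(r"(\d+(?:\.\d+)+)", text)
    if match is None:
        return None
    return tuple(int(part) for part in match.group(1).split("."))


def meets_minimum(version, minimum):
    """True if version is known and is at least the required minimum."""
    return version is not None and tuple(version[:len(minimum)]) >= minimum


def installed_cmake_version():
    """Return the version of the cmake found on PATH, or None if unavailable."""
    path = shutil.which("cmake")
    if path is None:
        return None
    result = subprocess.run(
        [path, "--version"],
        stdout=subprocess.PIPE,
        stderr=subprocess.DEVNULL,
        universal_newlines=True,
    )
    return parse_version(result.stdout)


if __name__ == "__main__":
    print("python ok:", meets_minimum(tuple(sys.version_info[:3]), MIN_PYTHON))
    print("cmake ok:", meets_minimum(installed_cmake_version(), MIN_CMAKE))
```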

On macOS, one can use Homebrew as follows:

    $ brew install cmake

On a plain Ubuntu 18.04 distribution, cmake and other dependencies can be installed by doing:

    # Python and most build deps
    $ sudo apt-get install -y build-essential autoconf libtool pkg-config \
        python3-dev python3-pip python3-numpy git flex bison libbz2-dev

    # recent cmake version
    $ wget -O - https://apt.kitware.com/keys/kitware-archive-latest.asc 2>/dev/null | sudo apt-key add -
    $ sudo apt-add-repository 'deb https://apt.kitware.com/ubuntu/ bionic main'
    $ sudo apt-get update && sudo apt-get --allow-unauthenticated install -y \
        cmake \
        kitware-archive-keyring

Afterwards, it's a matter of setting up your environment. We advise using a conda environment for this:

    $ conda create -n nle python=3.8
    $ conda activate nle
    $ pip install nle

To generate a ttyrec and stats.csv:

    $ python3 src/agent1/save_run.py

To evaluate a random seed:

    $ python3 src/agent1/evaluation.py

To run tests on the five seeds in the paper:

    $ python3 src/agent1/RunTests.py

To use the agent, import MyAgent.py and Node.py, then create an agent by:

    agent = MyAgent(env.observation_space, env.action_space, seeds=env.get_seeds())

Results from the runs in the report (for the MCTS implementation)

You may download the pre-trained weights here.
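
The DQN agent described further down reads six entries from a hyper-parameter dictionary, and the key names mix hyphens with one underscore (`prior_eps`). A small check like the one below can catch a missing or misspelled key before training starts. It is only a sketch: `REQUIRED_KEYS` and `missing_keys` are hypothetical names, not part of this repository, and the key list is taken from the constructor call shown in this README.

```python
# Keys read by the MyAgent constructor call shown in this README.
REQUIRED_KEYS = (
    "use-double-dqn",
    "learning-rate",
    "batch-size",
    "discount-factor",
    "beta",
    "prior_eps",
)


def missing_keys(hyper_params, required=REQUIRED_KEYS):
    """Return the required keys that are absent, in declaration order."""
    return [key for key in required if key not in hyper_params]


if __name__ == "__main__":
    # Placeholder values only; they are NOT the settings used by the authors.
    example = {key: None for key in REQUIRED_KEYS}
    del example["prior_eps"]
    example["prior-eps"] = None  # hyphenated by mistake
    print(missing_keys(example))
```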

To train the DQN:

    $ python3 src/agent2/train.py

To evaluate the model, please specify the seeds you would like to evaluate in evaluation.py and execute:

    $ python3 src/agent2/evaluation.py

To use the agent, import MyAgent.py, configure the hyper-parameter dictionary and create an agent by:

    hyper_params = {...}

    agent = MyAgent(
        env.observation_space,  # assuming that we are taking the world as input
        env.action_space,
        train=True,
        replay_buffer=replay_buffer,
        use_double_dqn=hyper_params['use-double-dqn'],
        lr=hyper_params['learning-rate'],
        batch_size=hyper_params['batch-size'],
        discount_factor=hyper_params['discount-factor'],
        beta=hyper_params['beta'],
        prior_eps=hyper_params['prior_eps']
    )

- Mayur Ranchod

- Wesley Earl Stander

- Joshua Greyling

- Agang Lebethe