New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

[FR] VMC protocol support #193

Comments

|

I don't know what is VMC developed for ? But it seems interesting. What do we need to achieve this? |

|

@Arthur151 Sorry for my late answer.

To keep it short:

In short: Not much. Let's do it this way: |

|

It seems interesting. Looking forward to your detailed introduction. |

|

@Arthur151 Hallo my friend 🙂

We have two possibilities to implement the VMC protocol. a) Sending all skeleton bones with it's Valid bones

Pros Cons b) Sending only some keypoints as virtual trackers with it's Valid trackers

Pros Cons I have taken an quick look into the ROMP FBX export script. So what do you think? The communication protocol itself is easy. |

|

Hi, Vivian, Glad to hear from you! Yes, you can get all keypoints' 3D location and their quaternion from this function ROMP/simple_romp/tools/convert2fbx.py Line 155 in c8c8334

I think the challenge is about getting all joints we need from estimated SMPL mesh. |

How to use it?

i think the same way.

Unfortunately i am absolutely not familiar with rigging, SMPL or it's mathematics. |

You mean VRM. |

|

For the first step, I think you can just take the video predictions from simple-romp for animation. |

Thank you but i don't understand how your code for SMPL works. |

Unfortunately the VMC protocol specification is fairly unclear about this and calibration. So for me there is no information at all about it. |

|

Then we can start with solution 2 then.

|

Okay.

Using the realtime webcam tracking would be nice 🙂

I need the bone name, position (x, y, z) and the quaternion (x, y, z, w). |

|

Sure, you can firstly using video API for testing. Bone name position is the key The vector-angle 3D rotation of 24 SMPL joints are ROMP/simple_romp/romp/utils.py Line 682 in 60782b2

That's it, all the things you need. My job is done. Let's see your part. |

|

@Arthur151 After taking a look at this line: ROMP/simple_romp/tools/convert2fbx.py Line 353 in c8c8334

I had an spontaneous idea (not tested): The VMC protocol is mainly used with *.fbx and *.vrm (binary glTF) models.For example by using the following snippet, it's easy to extract bones and it's positions of the user's VRM model: #!/usr/bin/env python3

from gltflib import GLTF

gltf = GLTF.load_glb(

"test.vrm",

load_file_resources = False

)

print(gltf.model.nodes[101]) # leftUpperLeg(more details: vrm-c/vrm-specification#371) So how about to:

I will test it soon, just wanted to share my thoughts. 🙂 |

|

Oh important: Line 27 in e937a81

|

|

Hi @vivi90 , |

|

@Arthur151 I have taken an closer look at the rigs (from left: VRM, SMPL v1.0.0, Mixamo): ROMP/simple_romp/tools/convert2fbx.py Line 67 in 1458ba0

ROMP/simple_romp/tools/convert2fbx.py Line 172 in 1458ba0

ROMP/simple_romp/tools/convert2fbx.py Line 304 in 1458ba0

And then looking for it by the wrong name: ROMP/simple_romp/tools/convert2fbx.py Line 308 in 1458ba0

Is this a bug? 🙂 |

|

@Arthur151 It seems, we did not require Blender or Note: {

'smpl_thetas': array(

[

[

2.99169707e+00, -1.14872180e-01, 2.52191164e-02,

-5.85280538e-01, -2.17623878e-02, 1.04836568e-01,

-5.94840944e-01, 2.05031112e-02, -4.04515043e-02,

4.90758419e-01, -1.56615092e-03, -1.22337053e-02,

1.18982077e+00, 6.25830796e-03, -1.33860081e-01,

1.03421855e+00, -4.33490239e-02, 9.75086764e-02,

8.08686297e-03, -1.64354779e-02, -1.56924091e-02,

-5.29559441e-02, 1.50208578e-01, -8.31045508e-02,

-6.23615980e-02, -1.55124173e-01, 8.85685012e-02,

3.80534641e-02, -1.49868811e-02, -1.34220216e-02,

-2.74092108e-01, 9.23865065e-02, 1.74044952e-01,

-2.23217666e-01, -2.57145371e-02, -1.95539817e-01,

-1.50648043e-01, 2.01505367e-02, -2.51643322e-02,

3.70577164e-02, -2.34452099e-01, -3.42204958e-01,

2.55778432e-02, 2.65333027e-01, 2.82382011e-01,

8.90259165e-03, 5.71487285e-03, 1.26959458e-02,

9.14356261e-02, -3.04939717e-01, -9.25578892e-01,

1.75735921e-01, 3.51879328e-01, 9.20472264e-01,

6.75733909e-02, -5.05667686e-01, 6.31040707e-02,

2.48549446e-01, 8.01332653e-01, -9.67753083e-02,

7.34215826e-02, -6.02314919e-02, 1.28485382e-01,

5.53916991e-02, 8.01604688e-02, -1.09875299e-01,

0.00000000e+00, 0.00000000e+00, 0.00000000e+00,

0.00000000e+00, 0.00000000e+00, 0.00000000e+00

]

],

dtype = float32

)

}It's not Quaternions or Euler degrees. ROMP/simple_romp/tools/convert2fbx.py Line 157 in 1458ba0

What means 72? ROMP/simple_romp/tools/convert2fbx.py Line 162 in 1458ba0

Okay, after that it's an rotation matrix. And here: ROMP/simple_romp/tools/convert2fbx.py Line 183 in 1458ba0

It becomes finally an Quaternion. |

|

@Arthur151 I need a little bit help. romp -t -sc=3 --onnx --show_largest --mode=video -i "E:\Projekte\romp-test\test.mp4" --calc_smpl --render_mesh --save_video -o "E:\Projekte\romp-test\test_result.mp4"According to: ROMP/simple_romp/tools/convert2fbx.py Line 211 in 1458ba0

and: ROMP/simple_romp/tools/convert2fbx.py Line 212 in 1458ba0

I get in the variable But if i am testing it with the final What's my mistake? 🙂 |

|

@Arthur151 Sorry, my mistake. # -*- coding: utf-8 -*-

# Max-Planck-Gesellschaft zur Förderung der Wissenschaften e.V. (MPG) is

# holder of all proprietary rights on this computer program.

# You can only use this computer program if you have closed

# a license agreement with MPG or you get the right to use the computer

# program from someone who is authorized to grant you that right.

# Any use of the computer program without a valid license is prohibited and

# liable to prosecution.

#

# Copyright©2019 Max-Planck-Gesellschaft zur Förderung

# der Wissenschaften e.V. (MPG). acting on behalf of its Max Planck Institute

# for Intelligent Systems. All rights reserved.

#

# Contact: ps-license@tuebingen.mpg.de

#

# Author: Joachim Tesch, Max Planck Institute for Intelligent Systems, Perceiving Systems

#

# Create keyframed animated skinned SMPL mesh from .pkl pose description

#

# Generated mesh will be exported in FBX or glTF format

#

# Notes:

# + Male and female gender models only

# + Script can be run from command line or in Blender Editor (Text Editor>Run Script)

# + Command line: Install mathutils module in your bpy virtualenv with 'pip install mathutils==2.81.2'

import os

import sys

import time

import argparse

import numpy as np

from math import radians

try:

import bpy

except:

print('Missing bpy, install via pip, please install bpy by yourself if failed.')

os.system('pip install future-fstrings')

os.system('pip install tools/bpy-2.82.1 && post_install')

import bpy

try:

from mathutils import Matrix, Vector, Quaternion, Euler

except:

os.system('pip install mathutils==2.81.2')

from mathutils import Matrix, Vector, Quaternion, Euler

# Globals

# Add your UNIX paths here!

male_model_path = '/home/yusun/ROMP/model_data/SMPL_unity_v.1.0.0/smpl/Models/SMPL_m_unityDoubleBlends_lbs_10_scale5_207_v1.0.0.fbx'

female_model_path = 'E:/Projekte/romp-test3/SMPL_f_unityDoubleBlends_lbs_10_scale5_207_v1.0.0.fbx'

character_model_path = None

'''

python tools/convert2fbx.py --input=/home/yusun/BEV_results/video_results.npz --output=/home/yusun/BEV_results/dance.fbx --gender=female

'''

fps_source = 24

fps_target = 24

gender = 'female' #female

support_formats = ['.fbx', '.glb', '.bvh']

vrm_bone_names = (

0 : "Hips",

1 : "LeftUpperLeg",

2 : "RightUpperLeg",

3 : "Spine",

4 : "LeftLowerLeg",

5 : "RightLowerLeg",

6 : "Chest",

7 : "LeftFoot",

8 : "RightFoot",

9 : "UpperChest"

10 : "LeftToes",

11 : "RightToes",

12 : "Neck",

13 : "LeftIndexProximal",

14 : "RightIndexProximal",

15 : "Head",

16 : "LeftShoulder",

17 : "RightShoulder",

18 : "LeftUpperArm",

19 : "RightUpperArm",

20 : "LeftLowerArm",

21 : "RightLowerArm",

22 : "LeftHand",

23 : "RightHand"

)

bone_name_from_index = {

0 : 'Pelvis',

1 : 'L_Hip',

2 : 'R_Hip',

3 : 'Spine1',

4 : 'L_Knee',

5 : 'R_Knee',

6 : 'Spine2',

7 : 'L_Ankle',

8: 'R_Ankle',

9: 'Spine3',

10: 'L_Foot',

11: 'R_Foot',

12: 'Neck',

13: 'L_Collar',

14: 'R_Collar',

15: 'Head',

16: 'L_Shoulder',

17: 'R_Shoulder',

18: 'L_Elbow',

19: 'R_Elbow',

20: 'L_Wrist',

21: 'R_Wrist',

22: 'L_Hand',

23: 'R_Hand'

}

# To use other avatar for animation, please define the corresponding 3D skeleton like this.

bone_name_from_index_character = {

0 : 'Hips',

1 : 'RightUpLeg',

2 : 'LeftUpLeg',

3 : 'Spine',

4 : 'RightLeg',

5 : 'LeftLeg',

6 : 'Spine1',

7 : 'RightFoot',

8: 'LeftFoot',

9: 'Spine2',

10: 'LeftToeBase',

11: 'RightToeBase',

12: 'Neck',

13: 'LeftHandIndex1',

14: 'RightHandIndex1',

15: 'Head',

16: 'LeftShoulder',

17: 'RightShoulder',

18: 'LeftArm',

19: 'RightArm',

20: 'LeftForeArm',

21: 'RightForeArm',

22: 'LeftHand',

23: 'RightHand'

}

# Helper functions

# Computes rotation matrix through Rodrigues formula as in cv2.Rodrigues

# Source: smpl/plugins/blender/corrective_bpy_sh.py

def Rodrigues(rotvec):

theta = np.linalg.norm(rotvec)

r = (rotvec/theta).reshape(3, 1) if theta > 0. else rotvec

cost = np.cos(theta)

mat = np.asarray([[0, -r[2], r[1]],

[r[2], 0, -r[0]],

[-r[1], r[0], 0]])

return(cost*np.eye(3) + (1-cost)*r.dot(r.T) + np.sin(theta)*mat)

# Setup scene

def setup_scene(model_path, fps_target):

scene = bpy.data.scenes['Scene']

###########################

# Engine independent setup

###########################

scene.render.fps = fps_target

# Remove default cube

if 'Cube' in bpy.data.objects:

bpy.data.objects['Cube'].select_set(True)

bpy.ops.object.delete()

# Import gender specific .fbx template file

bpy.ops.import_scene.fbx(filepath=model_path)

# Process single pose into keyframed bone orientations

def process_pose(current_frame, pose, trans, pelvis_position):

if pose.shape[0] == 72:

rod_rots = pose.reshape(24, 3)

else:

rod_rots = pose.reshape(26, 3)

mat_rots = [Rodrigues(rod_rot) for rod_rot in rod_rots]

# Set the location of the Pelvis bone to the translation parameter

armature = bpy.data.objects['Armature']

bones = armature.pose.bones

# Pelvis: X-Right, Y-Up, Z-Forward (Blender -Y)

root_location = Vector(

(100*trans[1], 100*trans[2], 100*trans[0])) - pelvis_position

# Set absolute pelvis location relative to Pelvis bone head

bones[bone_name_from_index[0]].location = root_location

# bones['Root'].location = Vector(trans)

bones[bone_name_from_index[0]].keyframe_insert('location', frame=current_frame)

for index, mat_rot in enumerate(mat_rots, 0):

if index >= 24:

continue

bone = bones[bone_name_from_index[index]]

bone_rotation = Matrix(mat_rot).to_quaternion()

quat_x_90_cw = Quaternion((1.0, 0.0, 0.0), radians(-90))

#quat_x_n135_cw = Quaternion((1.0, 0.0, 0.0), radians(-135))

#quat_x_p45_cw = Quaternion((1.0, 0.0, 0.0), radians(45))

#quat_y_90_cw = Quaternion((0.0, 1.0, 0.0), radians(-90))

quat_z_90_cw = Quaternion((0.0, 0.0, 1.0), radians(-90))

if index == 0:

# Rotate pelvis so that avatar stands upright and looks along negative Y avis

bone.rotation_quaternion = (quat_x_90_cw @ quat_z_90_cw) @ bone_rotation

else:

bone.rotation_quaternion = bone_rotation

bone.keyframe_insert('rotation_quaternion', frame=current_frame)

return

# Process all the poses from the pose file

def process_poses(

input_path,

gender,

fps_source,

fps_target,

subject_id=-1):

print('Processing: ' + input_path)

frame_results = np.load(input_path, allow_pickle=True)['results'][()]

sequence_results = np.load(input_path, allow_pickle=True)['sequence_results'][()]

poses, trans = [], []

if len(sequence_results)>0:

subject_ids = list(sequence_results.keys())

if subject_id == -1 or subject_id not in subject_ids:

print('Get motion sequence with subject IDs:', subject_ids)

subject_id = int(input('Please select one subject ID (int):'))

poses = np.array(sequence_results[subject_id]['smpl_thetas'])

trans = np.array(sequence_results[subject_id]['cam_trans'])

else:

print('Missing tracking IDs in results. Using the first pose results for animation.')

print('To get the tracking IDs, please use temporal optimization during inference.')

frame_names = sorted(list(frame_results.keys()))

poses, trans = np.zeros((len(frame_names), 72)), np.zeros((len(frame_names), 3))

for inds, frame_name in enumerate(frame_names):

poses[inds] = frame_results[frame_name]['smpl_thetas'][0]

trans[inds] = frame_results[frame_name]['cam_trans'][0]

if gender == 'female':

model_path = female_model_path

for k,v in bone_name_from_index.items():

bone_name_from_index[k] = 'f_avg_' + v

elif gender == 'male':

model_path = male_model_path

for k,v in bone_name_from_index.items():

bone_name_from_index[k] = 'm_avg_' + v

elif gender == 'character':

model_path = character_model_path

for k, v in bone_name_from_index_character.items():

bone_name_from_index[k] = 'mixamorig1:' + v

else:

print('ERROR: Unsupported gender: ' + gender)

sys.exit(1)

# Limit target fps to source fps

if fps_target > fps_source:

fps_target = fps_source

print('Gender:',gender)

print('Number of source poses: ',poses.shape[0])

print('Source frames-per-second: ', fps_source)

print('Target frames-per-second: ', fps_target)

print('--------------------------------------------------')

setup_scene(model_path, fps_target)

scene = bpy.data.scenes['Scene']

sample_rate = int(fps_source/fps_target)

scene.frame_end = (int)(poses.shape[0]/sample_rate)

# Retrieve pelvis world position.

# Unit is [cm] due to Armature scaling.

# Need to make copy since reference will change when bone location is modified.

armaturee = bpy.data.armatures[0]

ob = bpy.data.objects['Armature']

armature = ob.data

bpy.ops.object.mode_set(mode='EDIT')

# get specific bone name 'Bone'

pelvis_bone = armature.edit_bones[bone_name_from_index[0]]

# pelvis_bone = armature.edit_bones['f_avg_Pelvis']

pelvis_position = Vector(pelvis_bone.head)

bpy.ops.object.mode_set(mode='OBJECT')

source_index = 0

frame = 1

offset = np.array([0.0, 0.0, 0.0])

while source_index < poses.shape[0]:

print('Adding pose: ' + str(source_index))

# Go to new frame

scene.frame_set(frame)

process_pose(frame, poses[source_index], (trans[source_index] - offset), pelvis_position)

source_index += sample_rate

frame += 1

return frame

def rotate_armature(use):

if use == True:

# Switch to Pose Mode

bpy.ops.object.posemode_toggle()

# Find the Armature & Bones

ob = bpy.data.objects['Armature']

armature = ob.data

bones = armature.bones

rootbone = bones[0]

# Find the Root bone

for bone in bones:

if "f_avg_root" in bone.name:

rootbone = bone

rootbone.select = True

# Rotate the Root bone by 90 euler degrees on the Y axis. Set --rotate_Y=False if the rotation is not needed.

bpy.ops.transform.rotate(value=1.5708, orient_axis='Y', orient_type='GLOBAL', orient_matrix=((1, 0, 0), (0, 1, 0), (0, 0, 1)), orient_matrix_type='GLOBAL', constraint_axis=(False, True, False), mirror=True, use_proportional_edit=False, proportional_edit_falloff='SMOOTH', proportional_size=1, use_proportional_connected=False, use_proportional_projected=False, release_confirm=True)

# Revert back to Object Mode

bpy.ops.object.posemode_toggle()

def export_animated_mesh(output_path, rotate_y):

# Create output directory if needed

output_dir = os.path.dirname(output_path)

if not os.path.isdir(output_dir):

os.makedirs(output_dir, exist_ok=True)

# Fix Rotation

rotate_armature(rotate_y)

# Select only skinned mesh and rig

bpy.ops.object.select_all(action='DESELECT')

bpy.data.objects['Armature'].select_set(True)

bpy.data.objects['Armature'].children[0].select_set(True)

if output_path.endswith('.glb'):

print('Exporting to glTF binary (.glb)')

# Currently exporting without shape/pose shapes for smaller file sizes

bpy.ops.export_scene.gltf(filepath=output_path, export_format='GLB', export_selected=True, export_morph=False)

elif output_path.endswith('.fbx'):

print('Exporting to FBX binary (.fbx)')

bpy.ops.export_scene.fbx(filepath=output_path, use_selection=True, add_leaf_bones=False)

elif output_path.endswith('.bvh'):

bpy.ops.export_anim.bvh(filepath=output_path, root_transform_only=False)

else:

print('ERROR: Unsupported export format: ' + output_path)

sys.exit(1)

return

input_path = 'E:/Projekte/romp-test3/video_results.npz'

output_path = 'E:/Projekte/romp-test3/result.fbx'

subject_id = 0 # -1

rotate_y = True

print('Input path: ' + input_path)

print('Output path: ' + output_path)

startTime = time.perf_counter()

# Process pose file

poses_processed = process_poses(

input_path=input_path,

gender=gender,

fps_source=fps_source,

fps_target=fps_target,

subject_id=subject_id

)

export_animated_mesh(output_path, rotate_y)

print('--------------------------------------------------')

print('Animation export finished, save to ', output_path)

print('Poses processed: ', poses_processed)

print('Processing time : ', time.perf_counter() - startTime)

print('--------------------------------------------------')

```

Btw, now i can proof, that this line is indeed a bug:

https://github.com/Arthur151/ROMP/blob/1458ba0b05a63d64b7fff7898cd917d9fda378b5/simple_romp/tools/convert2fbx.py#L308 |

|

@Arthur151 Okay i have run some tests with the latest full ROMP version (not simple-romp) and the Blender Addon. In ROMP the Line 130 in 1458ba0

ROMP/romp/lib/visualization/socket_utils.py Line 213 in 1458ba0

In Blender the plugin does all necessary calculations for the rig: https://github.com/yanch2116/CharacterDriven-BlenderAddon/blob/6c288acf8540f900202de10adc2463ab2ca75f04/src/characterDriven.py#L51 It's no problem to use an VRM rig instead the mixamo rig in Blender for example. But how about moving the most of these calculations from Blender back to ROMP? |

|

Happy to see thing going so well with your efforts! Excellent work! |

great! 🙂

Currently there is no VMC protocol package for python.

#!/usr/bin/env python3

from socket import gaierror

from osc4py3.as_eventloop import *

from osc4py3 import oscmethod as oscm

from osc4py3 import oscbuildparse as oscbp

import time

class Client:

"""Based on: https://github.com/barlaensdoonn/osc_basics/blob/master/osc_basics.py"""

def __init__(self, host: str, port: int, name: str) -> None:

self.host = host

self.port = port

self.name = name

self.start()

def __enter__(self) -> 'Client':

return self

def start(self):

osc_startup()

try:

osc_udp_client(self.host, self.port, self.name)

print("OSC client ready.")

except gaierror:

print(

"Host '{}' unavailable, failed to create client '{}'.".format(

self.host,

self.name

)

)

raise

def send(self, address: str, data_types: str, data: str) -> None:

message = oscbp.OSCMessage(

address,

data_types,

data

)

osc_send(

message,

self.name

)

osc_process()

print(str(message))

def send_bundle(self, address: str, data_types: str, data: str) -> None:

message_bundle = []

for data_tuple in data:

message_bundle.append(

oscbp.OSCMessage(

address,

data_types,

[

str(data_tuple[0]),

data_tuple[1].x,

data_tuple[1].y,

data_tuple[1].z,

data_tuple[2].x,

data_tuple[2].y,

data_tuple[2].z,

data_tuple[2].w

]

)

)

message_bundle = oscbp.OSCBundle(

oscbp.unixtime2timetag(time.time()),

message_bundle

)

osc_send(

message_bundle,

self.name

)

osc_process()

print(str(message_bundle))

def __exit__(self, type, value, traceback) -> None:

self.__del__()

def __del__(self) -> None:

osc_terminate()

print("OSC client down.")

#!/usr/bin/env python3

from osc import Client as OSCClient

import time

from math import radians, degrees, cos, sin, atan2, asin, pow, floor

class Bone:

valid_names = (

"Hips",

"LeftUpperLeg",

"RightUpperLeg",

"LeftLowerLeg",

"RightLowerLeg",

"LeftFoot",

"RightFoot",

"Spine",

"Chest",

"Neck",

"Head",

"LeftShoulder",

"RightShoulder",

"LeftUpperArm",

"RightUpperArm",

"LeftLowerArm",

"RightLowerArm",

"LeftHand",

"RightHand",

"LeftToes",

"RightToes",

"LeftEye",

"RightEye",

"Jaw",

"LeftThumbProximal",

"LeftThumbIntermediate",

"LeftThumbDistal",

"LeftIndexProximal",

"LeftIndexIntermediate",

"LeftIndexDistal",

"LeftMiddleProximal",

"LeftMiddleIntermediate",

"LeftMiddleDistal",

"LeftRingProximal",

"LeftRingIntermediate",

"LeftRingDistal",

"LeftLittleProximal",

"LeftLittleIntermediate",

"LeftLittleDistal",

"RightThumbProximal",

"RightThumbIntermediate",

"RightThumbDistal",

"RightIndexProximal",

"RightIndexIntermediate",

"RightIndexDistal",

"RightMiddleProximal",

"RightMiddleIntermediate",

"RightMiddleDistal",

"RightRingProximal",

"RightRingIntermediate",

"RightRingDistal",

"RightLittleProximal",

"RightLittleIntermediate",

"RightLittleDistal",

"UpperChest"

)

def __init__(self, name: str) -> None:

if name in self.valid_names:

self.name = name

else:

raise ValueError("Invalid bone name.")

def __str__(self) -> str:

return self.name

class Position:

def __init__(self, x: float, y: float, z: float) -> None:

self.x = x

self.y = y

self.z = z

def __str__(self) -> str:

return ", ".join(

(

str(self.x),

str(self.y),

str(self.z)

)

)

class Quaternion:

def __init__(self, x: float, y: float, z: float, w: float) -> None:

if round(pow(x, 2) + pow(y, 2) + pow(z, 2) + pow(w, 2), 1) == 1:

self.x = x

self.y = y

self.z = z

self.w = w

else:

raise ValueError(

"Invalid quaternion values: x={}, y={}, z={}, w={}".format(

x,

y,

z,

w

)

)

@classmethod

def from_euler(cls,

phi: float, theta: float, psi: float,

precision: int) -> 'Quaternion':

"""Creates an quaternion by euler angles and returns it

Args:

phi (float): Rotation angle around the X axis

theta (float): Rotation angle around the Y axis

psi (float): Rotation angle around the Y axis

precision (int): Round the results to 'precision' digits after the decimal point

Returns:

Quaternion: Created quaternion (x, y, z, w)

>>> str(Quaternion.from_euler(-90, -180, 90, 12))

'(0.5, -0.5, -0.5, 0.5)'

.. _Source:

https://www.meccanismocomplesso.org/en/hamiltons-quaternions-and-3d-rotation-with-python

"""

# x axis rotation angle

cos_phi_half = cos(radians(phi) / 2)

sin_phi_half = sin(radians(phi) / 2)

# y axis rotation angle

cos_theta_half = cos(radians(theta) / 2)

sin_theta_half = sin(radians(theta) / 2)

# z axis rotation angle

cos_psi_half = cos(radians(psi) / 2)

sin_psi_half = sin(radians(psi) / 2)

# Calculation

return cls(

x = float(round(sin_phi_half * cos_theta_half * cos_psi_half - cos_phi_half * sin_theta_half * sin_psi_half, precision)),

y = float(round(cos_phi_half * sin_theta_half * cos_psi_half + sin_phi_half * cos_theta_half * sin_psi_half, precision)),

z = float(round(cos_phi_half * cos_theta_half * sin_psi_half - sin_phi_half * sin_theta_half * cos_psi_half, precision)),

w = float(round(cos_phi_half * cos_theta_half * cos_psi_half + sin_phi_half * sin_theta_half * sin_psi_half, precision))

)

def to_euler(self) -> tuple[float, float, float]:

"""Exports an quaternion as an euler angle tuple

Returns:

tuple[float, float, float]: (x, y, z)

>>> str(Quaternion.from_euler(-90, -180, 90, 12).to_euler())

(90.0, 0.0, -90.0)

.. _Source:

https://www.meccanismocomplesso.org/en/hamiltons-quaternions-and-3d-rotation-with-python

"""

# x axis rotation angle

t0 = 2 * (self.w * self.x + self.y * self.z)

t1 = 1 - 2 * (self.x * self.x + self.y * self.y)

x = atan2(t0, t1)

# y axis rotation angle

t2 = 2 * (self.w * self.y - self.z * self.x)

t2 = 1 if t2 > 1 else t2

t2 = -1 if t2 < -1 else t2

y = asin(t2)

# y axis rotation angle

t3 = 2 * (self.w * self.z + self.x * self.y)

t4 = 1 - 2 * (self.y * self.y + self.z * self.z)

z = atan2(t3, t4)

return degrees(x), degrees(y), degrees(z)

def __str__(self) -> str:

return "(" + ", ".join(

(

str(self.x),

str(self.y),

str(self.z),

str(self.w)

)

) + ")"

class Assistant(OSCClient):

def __init__(self, host: str, port: int, name: str) -> None:

self.started_at = time.time()

super().__init__(host, port, name)

def send_root_transform(self, position: Position,

quaternion: Quaternion) -> None:

# since VMC v2.0.0

self.send(

"/VMC/Ext/Root/Pos",

None,

[

"root",

position.x,

position.y,

position.z,

quaternion.x,

quaternion.y,

quaternion.z,

quaternion.w

]

)

def send_bones_transform(self,

transform: list[list[Bone, Position, Quaternion]]

)-> None:

self.send_bundle(

"/VMC/Ext/Bone/Pos",

None,

transform

)

def send_available_states(self, loaded: int,

calibration_state: int = None,

calibration_mode: int = None,

tracking_status: int = None) -> None:

if tracking_status == None:

if calibration_state == None or calibration_mode == None:

self.send(

"/VMC/Ext/OK",

None,

[

loaded

]

)

else:

# since VMC v2.5

self.send(

"/VMC/Ext/OK",

None,

[

loaded,

calibration_state,

calibration_mode

]

)

else:

# since VMC v2.7

self.send(

"/VMC/Ext/OK",

None,

[

loaded,

calibration_state,

calibration_mode,

tracking_status

]

)

def send_relative_time(self) -> None:

self.send(

"/VMC/Ext/T",

None,

[

float(time.time() - self.started_at)

]

)vrm.py: #!/usr/bin/env python3

from gltflib import GLTF

class VRM(GLTF):

def __init__(self, filename: str) -> None:

gltf = super().load_glb(filename)

super().__init__(gltf.model, gltf.resources)

def export_to_glb(self, filename: str) -> None:

super().export(filename)

def export_to_gltf(self, filename: str) -> None:

super().convert_to_file_resource(

super().get_glb_resource(),

filename + ".resources.bin"

)

super().export(filename)

def get_bones(self) -> list:

return self.model.nodes

#!/usr/bin/env python3

from log import Log

import sys

from vrm import VRM as Model

from vmc import Assistant as VMCAssistant, Quaternion

from gui import Window

import numpy as np

import romp, cv2

# Configuration

connection = {

"host" : "localhost",

"port" : 39539,

"name" : "example"

}

# Logging

sys.stdout = Log(filename = "vmc.log", is_error = False)

sys.stderr = Log(filename = "vmc.log", is_error = True)

# ROMP

settings = romp.main.default_settings

# settings.mode='video'

#print(settings)

romp_model = romp.ROMP(settings)

outputs = romp_model(cv2.imread('test.jpg'))

print(outputs["smpl_thetas"][0].reshape(24, 3))

"""

# NPZ data

input_path = "E:/Projekte/romp-test/00000215.npz"

input_path = "video_results.npz"

frame_results = np.load(input_path, allow_pickle=True)['results'][()]

sequence_results = np.load(input_path, allow_pickle=True)['sequence_results'][()]

print(frame_results)

print(sequence_results)

# Avatar model

model = Model("test.vrm")

print(model.get_bones()[101]) # leftUpperLeg (see: https://github.com/vrm-c/vrm-specification/issues/371)

model_root = []

model_t_pose = []

# Quaternions example

q = Quaternion.from_euler(-90, -180, 90, precision = 12)

print(q)

e = q.to_euler()

print(e)

# GUI

Window(

VMCAssistant(

connection['host'],

connection['port'],

connection['name']

),

model_root,

model_t_pose

).MainLoop()

"""

#!/usr/bin/env python3

import wx

from vmc import Assistant as VMCAssistant, Bone, Position, Quaternion

class Window(wx.App):

def __init__(self, vmc: VMCAssistant,

model_root: list[Position, Quaternion],

model_t_pose: list[Bone, Position, Quaternion]) -> None:

self.vmc = vmc

self.model_root = model_root

self.model_t_pose = model_t_pose

super().__init__()

def OnInit(self) -> bool:

self.frame = self.Frame(

vmc = self.vmc,

parent = None,

title = 'VMC Assistant Demo',

size = wx.Size(400, 300)

)

self.frame.Show(True)

return True

class Frame(wx.Frame):

def __init__(self, vmc: VMCAssistant,

parent: wx.App, title: str, size: wx.Size) -> None:

super().__init__(

parent,

title = title,

size = size

)

self.panel = self.Panel(self, vmc)

class Panel(wx.Panel):

def __init__(self, parent: wx.Frame, vmc: VMCAssistant) -> None:

self.vmc = vmc

super().__init__(parent)

# Controls

self.test_caption = wx.StaticText(

self, label = 'Test',

style = wx.ALIGN_LEFT

)

self.test_slider = wx.Slider(

self,

value = 0,

minValue = -180,

maxValue = 180,

style = wx.SL_HORIZONTAL | wx.SL_LABELS

)

self.Bind(wx.EVT_SLIDER, self.change_test)

# Log

self.log = wx.TextCtrl(

self,

style = wx.TE_MULTILINE | wx.TE_READONLY

)

# Layout

self.layout = wx.BoxSizer(wx.VERTICAL)

self.layout.AddMany([

(self.test_caption, 0, wx.EXPAND | wx.ALIGN_LEFT | wx.ALL, 5),

(self.test_slider, 0, wx.EXPAND | wx.ALL, 5),

(self.log, 1, wx.EXPAND | wx.ALIGN_LEFT | wx.ALL, 5)

])

self.SetSizer(self.layout)

self.Center()

def change_test(self, event: wx.Event) -> None:

value = float(self.test_slider.GetValue())

quat = Quaternion.from_euler(0.0, 0.0, value, 12)

self.vmc.send_bones_transform(

[

[

Bone("LeftUpperLeg"),

Position(0.0, 0.0, 0.0),

quat

]

]

)

self.vmc.send_available_states(1)

self.vmc.send_relative_time()

self.log.AppendText(

str(quat) + "\r\n"

) |

|

Great job. It seems interesting~ |

|

Please let me know if you want me to add / check BEV/ROMP features to it. |

Already invited you: https://github.com/vivi90/python-vmc/invitations

What do you mean by features? Currently i can only find the output for the 24 SMPL joints. Would be great to have also these joints available. |

|

Hi, all 54(24+30) joints are saved as |

I am not familiar with MediaPipe,just want to have a try.like this bl_info = { class CharacterDriven(bpy.types.Operator): class SMPL_Importer: addon_keymaps = [] def register(): def unregister(): if name == "main": |

What exactly do you mean by 'not well'? |

|

okay may you please provide:

Btw, how about moving this topic to another issue in your own repo. |

|

|

@Arthur151 Sorry, for asking again in this already long issue.. 🙂 |

|

@vivi90 . |

|

@Arthur151 My VMC protocol python library is now published at PyPi and should work as expected 🙂 My new script for ROMP follows later (using it for my bachelor thesis and so i need to keep it unpublished until it's finished). |

|

Congrats! Vivian, Happy to hear from you. Looking forward to seeing your further research. You are so great.Best, Yu

发件人: ***@***.*** ***@***.***> 代表 Vivien Richter ***@***.***>发送时间: 星期五, 八月 12, 2022 11:18 下午收件人: Arthur151/ROMP ***@***.***>抄送: Yu Sun ***@***.***>; Mention ***@***.***>主题: Re: [Arthur151/ROMP] [FR] VMC protocol support (Issue #193)

@Arthur151 My VMC protocol python library is now published at PyPi and should work as expected 🙂

My new script for ROMP follows later (using it for my bachelor thesis and so i need to keep it unpublished until it's finished).

But with the provided examples in combination with the old code it might be kinda obvious, how to implement it 😉

—Reply to this email directly, view it on GitHub, or unsubscribe.You are receiving this because you were mentioned.Message ID: ***@***.***>

|

|

@Arthur151 Is //EDIT: Ah i see, |

|

Technically, it is the position of the pelvis

发件人: ***@***.*** ***@***.***> 代表 Vivien Richter ***@***.***>发送时间: 星期日, 八月 14, 2022 10:42 下午收件人: Arthur151/ROMP ***@***.***>抄送: Yu Sun ***@***.***>; Mention ***@***.***>主题: Re: [Arthur151/ROMP] [FR] VMC protocol support (Issue #193)

@Arthur151 Is cam_trans really the root position of the person or is it the position of pelvis?

—Reply to this email directly, view it on GitHub, or unsubscribe.You are receiving this because you were mentioned.Message ID: ***@***.***>

|

I see, thank you 🙂 |

|

I think you can use the mean of the two ankles. |

Great idea 👍 |

Sorry, does not work in certain situations. |

|

@Arthur151 Sorry, for making this issue longer and longer 😄 But i have still an related issue.. Using

|

|

Hi, Vivian, @vivi90 |

Changed, but no difference. Still shaking a lot. |

|

ONNX seems at least working, because if i comment the line |

|

@Arthur151 I have taken a look at: ROMP/simple_romp/vis_human/main.py Line 23 in ff5ea02

But i am not sure, if i properly understand, how --show renders the images.I mean it seems you are rendering multiple perspectives and then merging it together? In my script i am just using the output of ROMP as it is in an 3D environment. |

|

@vivi90 Hi, am also interesting at this. Did u managed converting SMPL output to VMC format? |

|

@jinfagang

Yes, i have 🙂 |

|

@vivi90 thank u. I have followed u, also, my ask a very Tortured soul question? if we can convert smpl to vmc protocal, how can we using it to driven metahuman? Through vmc4ue? How to streaming data |

|

@jinfagang

Seems like you have an very similiar question to this one: |

|

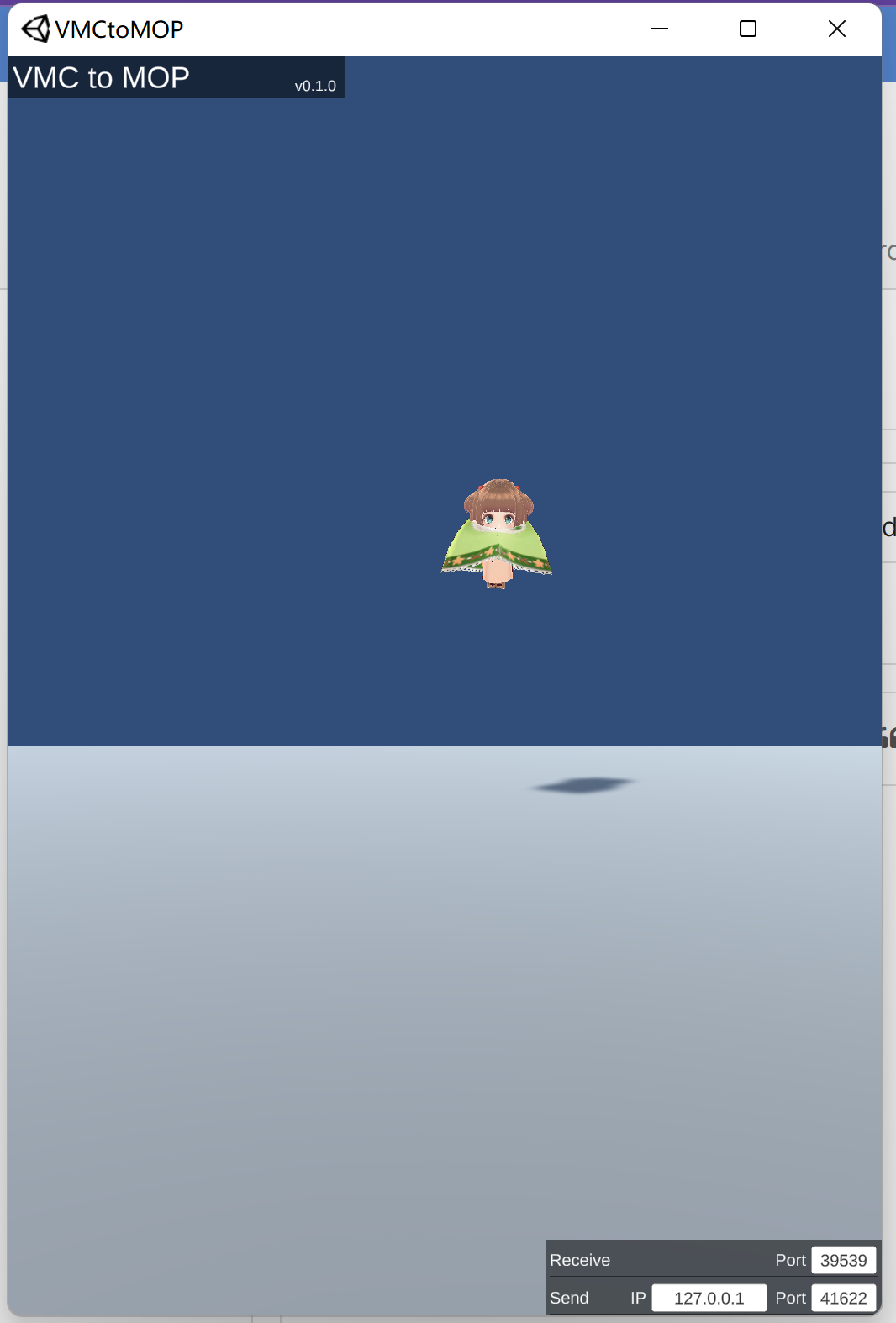

@vivi90 Hi, i found a workable solution is sending VMC, then using MopUE4 to driven. But now the question is, I can not get your code right to driven in standard VMC protocol: as you can see, normally, the data from ROMP convert to VMC with yourcode should drive VMCtoMOP, but the model is collapsed. |

|

@jinfagang

See: https://codeberg.org/vivi90/python-vmcp/issues/3#issuecomment-664578 |

|

@vivi90 hello, could be provide a basic workable script to convert SMPL data to VMC format? you said will opensouce at 11.7, will it possiable to opensource earlier? |

|

@Arthur151 @jinfagang @Altai01 @gngdb My VMC protocol implementation draft for ROMP is now public available: My next goals will be:

|

Feature request: VMC protocol export support

https://protocol.vmc.info/english

Ready to help, implementing it.

The text was updated successfully, but these errors were encountered: