Today's Progress: Today I worked on DCGAN (Deep Convolutional Generative Adversarial Network), implementing it on the MNIST dataset to generate handwritten digits.
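The adversarial objective behind DCGAN can be sketched in a few lines of NumPy. This is a minimal sketch, not the code from the linked work. It assumes the discriminator outputs raw logits and uses the standard binary cross-entropy GAN losses. The helper `transposed_conv_size` is a hypothetical name. It applies the PyTorch `ConvTranspose2d` output-size formula (dilation 1) to show how a kernel-4, stride-2, padding-1 transposed convolution doubles a 7x7 feature map to 14x14 and then to 28x28, the MNIST image size.

```python
import numpy as np

def bce_with_logits(logits, targets):
    """Numerically stable mean binary cross-entropy computed from raw logits."""
    logits = np.asarray(logits, dtype=float)
    targets = np.asarray(targets, dtype=float)
    # max(z, 0) - z*t + log(1 + exp(-|z|)) == -[t*log(sigmoid(z)) + (1-t)*log(1-sigmoid(z))]
    loss = np.maximum(logits, 0) - logits * targets + np.log1p(np.exp(-np.abs(logits)))
    return float(loss.mean())

def discriminator_loss(real_logits, fake_logits):
    # The discriminator should label real images 1 and generated images 0.
    real_loss = bce_with_logits(real_logits, np.ones_like(real_logits))
    fake_loss = bce_with_logits(fake_logits, np.zeros_like(fake_logits))
    return real_loss + fake_loss

def generator_loss(fake_logits):
    # Non-saturating generator loss: the generator wants its fakes labelled 1.
    return bce_with_logits(fake_logits, np.ones_like(fake_logits))

def transposed_conv_size(size, kernel, stride, padding, output_padding=0):
    """Spatial output size of a transposed convolution (PyTorch formula, dilation 1)."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding
```

For example, `discriminator_loss(np.array([0.0]), np.array([0.0]))` is 2 ln 2 ≈ 1.386. That is the loss when the discriminator outputs 0.5 for everything and cannot tell real from fake.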

Thoughts: I will try DCGAN on slightly more complex datasets such as Fashion MNIST and CIFAR-10.

Link of Work:

References

- DCGANs (Deep Convolutional Generative Adversarial Networks)

- Deep Convolutional Generative Adversarial Network - TensorFlow Core

Today's Progress: Built a network for the CIFAR-10 dataset comprising Convolution, Max Pooling, Batch Normalization and Dropout layers. Studied dropout and batch normalization in detail, along with various ways to avoid overfitting in a network.
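A minimal NumPy sketch of what the two regularizing layers compute in the forward pass. It assumes (batch, features) inputs and is not the Keras implementation used for the network above.

```python
import numpy as np

def dropout(x, rate, training, rng):
    """Inverted dropout: zero each unit with probability `rate` and rescale the rest."""
    if not training or rate == 0.0:
        return x  # dropout is the identity at inference time
    keep_mask = rng.random(x.shape) >= rate
    # Dividing by (1 - rate) keeps the expected activation the same as at inference.
    return x * keep_mask / (1.0 - rate)

def batch_norm_train(x, gamma, beta, eps=1e-5):
    """Training-mode batch normalization of a (batch, features) array."""
    mean = x.mean(axis=0)
    var = x.var(axis=0)
    x_hat = (x - mean) / np.sqrt(var + eps)  # per-feature zero mean, unit variance
    return gamma * x_hat + beta              # learnable scale (gamma) and shift (beta)
```

At inference time, batch normalization uses running averages of the mean and variance collected during training instead of the current batch statistics. This sketch covers only the training-mode pass.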

Thoughts: Looking forward to tweaking the model to obtain better accuracy with fewer parameters.

Link of Work:

References

- Achieving 90% accuracy in Object Recognition Task on CIFAR-10 Dataset with Keras: Convolutional Neural Networks - Machine Learning in Action

- CIFAR-10 Image Classification in TensorFlow - Towards Data Science

Today's Progress: Today my goal was to get started with transfer learning and understand the architecture. I chose InceptionV3 to begin with, using its ImageNet-pretrained weights as the base model, and added a couple of dense layers with dropout on top to complete the model. The model was trained on the 'Cats vs Dogs' dataset for 10 epochs and reached a training accuracy of 98.69%.
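A toy NumPy sketch shows the core idea of reusing a frozen pretrained base and training only a new head. It is only an illustration, under loud assumptions. A fixed random projection (`BASE_W`, hypothetical) stands in for InceptionV3 with ImageNet weights. A logistic-regression head stands in for the added dense layers. In Keras, the corresponding step is setting the base model's `trainable` attribute to `False` before training.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for a pretrained base such as InceptionV3: a fixed
# feature extractor whose weights are never updated ("frozen").
BASE_W = rng.normal(size=(20, 8)) / np.sqrt(20)

def frozen_base(x):
    """Extract features with the frozen base (ReLU of a fixed projection)."""
    return np.maximum(x @ BASE_W, 0.0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_head(x, y, epochs=1000, lr=0.5):
    """Train only a new logistic-regression head on top of the frozen features."""
    feats = frozen_base(x)  # computed once, because the base never changes
    w, b = np.zeros(feats.shape[1]), 0.0
    for _ in range(epochs):
        err = sigmoid(feats @ w + b) - y  # gradient of the log loss w.r.t. the logits
        w -= lr * feats.T @ err / len(y)
        b -= lr * err.mean()
    return w, b

def predict(x, w, b):
    return (sigmoid(frozen_base(x) @ w + b) >= 0.5).astype(int)
```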

Thoughts: I am looking forward to applying transfer learning to a more complex project and improving accuracy. I am also excited to try out other architectures such as ResNet, VGG and AlexNet.

Link of Work:

References

- Master Transfer learning by using Pre-trained Models in Deep Learning

- Using Inception-v3 from TensorFlow Hub for transfer learning

Today's Progress: Today I tried to understand the idea behind the Mask Region-based Convolutional Neural Network, better known as Mask R-CNN. While going through the references, I also learned about the following:

- Object Localization

- Instance Segmentation

- Semantic Segmentation

- ROI Pooling
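RoI Pooling turns a region of interest of any size into a fixed-size grid by max-pooling over sub-windows. Below is a minimal single-channel NumPy sketch. It assumes integer RoI coordinates already projected onto the feature map, and bin edges rounded outward so no bin is empty. Mask R-CNN itself replaces RoI Pooling with RoIAlign, which avoids this rounding by using bilinear interpolation.

```python
import numpy as np

def roi_max_pool(feature_map, roi, output_size=(2, 2)):
    """Max-pool one region of interest of a 2-D feature map into a fixed grid.

    roi is (x1, y1, x2, y2) in integer feature-map cells, inclusive.
    """
    x1, y1, x2, y2 = roi
    region = feature_map[y1:y2 + 1, x1:x2 + 1]
    h, w = region.shape
    out_h, out_w = output_size
    pooled = np.empty(output_size, dtype=feature_map.dtype)
    for i in range(out_h):
        # Bin rows: floor the start and ceil the end so every bin has >= 1 cell.
        r0 = int(np.floor(i * h / out_h))
        r1 = int(np.ceil((i + 1) * h / out_h))
        for j in range(out_w):
            c0 = int(np.floor(j * w / out_w))
            c1 = int(np.ceil((j + 1) * w / out_w))
            pooled[i, j] = region[r0:r1, c0:c1].max()
    return pooled
```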

References

Today's Progress: Today I implemented Mask R-CNN on images using OpenCV. The model I used for this task has an InceptionV2 backbone and was trained on the COCO dataset.

Thoughts: Next, I plan to implement Mask R-CNN on videos. I want to work through the challenges specific to video and learn about video processing along the way.

Link of Work:

References

Today's Progress: Continuing yesterday's work, I implemented Mask R-CNN on a video feed, using the same architecture and dataset as before and tweaking the script to work on videos.

Thoughts: Today's implementation was quite computationally expensive: a 120-frame, 4-second video took around 10 minutes to process. The network is not fast, but it detects and masks objects quite accurately, so I want to try other computer vision techniques that can do the same or a similar job.
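The timing above can be turned into a rough throughput estimate. This assumes the 10 minutes were spread evenly over all 120 frames:

```latex
\begin{aligned}
\text{source frame rate} &= \frac{120\ \text{frames}}{4\ \text{s}} = 30\ \text{frames/s} \\
\text{processing time per frame} &\approx \frac{10 \times 60\ \text{s}}{120\ \text{frames}} = 5\ \text{s/frame} \;(\approx 0.2\ \text{frames/s}) \\
\text{slowdown vs. real time} &\approx \frac{600\ \text{s}}{4\ \text{s}} = 150\times
\end{aligned}
```

On this setup, real-time use would need roughly a 150-fold speedup.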

Link of Work:

References

Today's Progress: Today I dove deep into one of the most in-demand applications of deep learning, object detection, and started reading about the existing architectures:

- HOG (Histogram of Oriented Gradients) features

- R-CNN

- Spatial Pyramid Pooling (SPP-net)

- Fast R-CNN

- Faster R-CNN

- YOLO (You Only Look Once)

- Single Shot Detector (SSD)

I studied how these architectures work and compared their results.
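Detectors such as YOLO and SSD predict many overlapping candidate boxes. These are usually filtered with Intersection over Union (IoU) and greedy non-maximum suppression (NMS). A minimal NumPy sketch, assuming boxes in (x1, y1, x2, y2) corner format:

```python
import numpy as np

def iou(box, boxes):
    """IoU between one box and an array of boxes, all as (x1, y1, x2, y2)."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)

def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the best-scoring box, drop boxes overlapping it, repeat."""
    boxes = np.asarray(boxes, dtype=float)
    order = np.argsort(scores)[::-1]  # indices sorted by descending score
    keep = []
    while order.size > 0:
        best = order[0]
        keep.append(int(best))
        overlaps = iou(boxes[best], boxes[order[1:]])
        order = order[1:][overlaps <= iou_threshold]
    return keep
```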

Thoughts: Of these networks, YOLO and SSD intrigued me the most, so I am looking forward to implementing them in a project on images and videos.

References

- Zero to Hero: Guide to Object Detection using Deep Learning: Faster R-CNN, YOLO, SSD

- A 2019 Guide to Object Detection - Heartbeat

Today's Progress: Today I started learning about deep generative modeling as part of MIT's Introduction to Deep Learning course.

References

- Deep Generative Models - Towards Data Science

- Deep Generative Modeling - MIT 6.S191 - YouTube

- Deep Generative Modeling Slides

Today's Progress: Today I read about linear regression in detail and implemented it in NumPy. I used the Normal Equation to calculate the weights of the model from randomly generated data: (x, y) pairs following the linear relation y = 4 + 3x.
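The Normal Equation gives the weights in closed form, `theta = inv(X_b.T @ X_b) @ X_b.T @ y`. Below is a minimal NumPy sketch of the setup described above. The Gaussian noise, sample size and seed are assumptions, not details from the log.

```python
import numpy as np

rng = np.random.default_rng(42)
m = 100
X = 2 * rng.random((m, 1))                 # assumed: 100 random x values in [0, 2)
y = 4 + 3 * X + rng.normal(size=(m, 1))    # y = 4 + 3x plus assumed Gaussian noise

X_b = np.c_[np.ones((m, 1)), X]            # add x0 = 1 so theta[0] is the bias
theta_best = np.linalg.inv(X_b.T @ X_b) @ X_b.T @ y  # Normal Equation
```

`theta_best` should come out close to [[4], [3]], with the gap caused by the noise. `np.linalg.lstsq` or `np.linalg.pinv` give the same result more robustly when `X_b.T @ X_b` is close to singular.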

Thoughts: Understanding algorithms at a fundamental level is a prerequisite for anyone who practices machine learning. I am looking forward to understanding the basic methods at that level.

Link of Work:

References

Today's Progress: I learned about the main variants of gradient descent, namely Batch Gradient Descent, Stochastic Gradient Descent and Mini-batch Gradient Descent, and implemented them in Python with the scikit-learn library. I also learned about learning rate schedules and built a learning rate scheduler. Finally, I compared the speed, structure and typical uses of the different gradient descent techniques.
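The three variants differ only in how many training instances feed each gradient step. The NumPy sketch below replaces the scikit-learn code from the log and uses assumed data following y = 4 + 3x plus noise. `learning_schedule` is one simple decaying schedule, eta(t) = t0 / (t + t1), with illustrative values for t0 and t1.

```python
import numpy as np

rng = np.random.default_rng(0)
m = 100
X = 2 * rng.random((m, 1))                  # assumed: 100 random x values in [0, 2)
y = 4 + 3 * X + rng.normal(size=(m, 1))     # y = 4 + 3x plus assumed Gaussian noise
X_b = np.c_[np.ones((m, 1)), X]             # add a bias column of ones

def learning_schedule(t, t0=5, t1=50):
    """Learning rate that decays with the step count t: eta(t) = t0 / (t + t1)."""
    return t0 / (t + t1)

def batch_gd(X_b, y, eta=0.1, n_iterations=1000):
    """Batch GD: every step uses the MSE gradient over the whole training set."""
    theta = np.zeros((X_b.shape[1], 1))
    for _ in range(n_iterations):
        gradients = 2 / len(X_b) * X_b.T @ (X_b @ theta - y)
        theta -= eta * gradients
    return theta

def stochastic_gd(X_b, y, n_epochs=50, rng=rng):
    """SGD: every step uses the gradient at one randomly picked instance."""
    m = len(X_b)
    theta = np.zeros((X_b.shape[1], 1))
    for epoch in range(n_epochs):
        for i in range(m):
            idx = rng.integers(m)
            xi, yi = X_b[idx:idx + 1], y[idx:idx + 1]
            gradients = 2 * xi.T @ (xi @ theta - yi)
            theta -= learning_schedule(epoch * m + i) * gradients
    return theta

def minibatch_gd(X_b, y, batch_size=20, n_epochs=200, rng=rng):
    """Mini-batch GD: every step uses a random subset of batch_size instances."""
    m = len(X_b)
    theta = np.zeros((X_b.shape[1], 1))
    t = 0
    for _ in range(n_epochs):
        perm = rng.permutation(m)  # reshuffle once per epoch
        for start in range(0, m, batch_size):
            batch = perm[start:start + batch_size]
            xb, yb = X_b[batch], y[batch]
            gradients = 2 / len(batch) * xb.T @ (xb @ theta - yb)
            theta -= learning_schedule(t) * gradients
            t += 1
    return theta
```

All three should land near the Normal Equation solution. Batch GD computes the exact gradient but touches every instance on each step. SGD takes cheap, noisy steps, and the decaying schedule lets it settle. In scikit-learn, `SGDRegressor` provides SGD for linear models, and its schedule is chosen with the `learning_rate` parameter.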

Thoughts: It is good to know what goes on underneath every process in machine learning.

Link of Work:

References

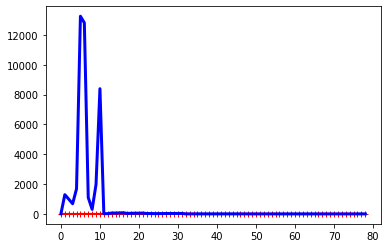

Today's Progress: Worked on polynomial regression to fit higher-degree curves. I made a simple dataset (a collection of random points that fall near a chosen curve) and analysed it with polynomial regression.
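Polynomial regression is linear regression on the expanded features [1, x, x², ..., x^d]. The NumPy sketch below assumes the quadratic curve y = 0.5x² + x + 2 plus Gaussian noise as the "chosen curve", since the log does not say which equation was used.

```python
import numpy as np

rng = np.random.default_rng(1)
m = 100
x = 6 * rng.random(m) - 3                        # assumed: x values in [-3, 3)
y = 0.5 * x**2 + x + 2 + rng.normal(size=m)      # assumed quadratic curve plus noise

def poly_features(x, degree):
    """Columns [1, x, x^2, ..., x^degree]; the model stays linear in its weights."""
    return np.vander(x, degree + 1, increasing=True)

def fit_poly(x, y, degree):
    """Least-squares fit of a polynomial of the given degree."""
    coef, *_ = np.linalg.lstsq(poly_features(x, degree), y, rcond=None)
    return coef

def mse(x, y, coef):
    """Mean squared error of the polynomial with coefficients coef (lowest power first)."""
    return float(np.mean((poly_features(x, len(coef) - 1) @ coef - y) ** 2))
```

Raising the degree to 10 never increases the training error. However, the curve starts chasing the noise between points, which is a classic sign of overfitting.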

Link of Work:

- [code]

- 10-degree polynomial

References

Today's Progress: I worked on YOLO and implemented it in OpenCV. I tried two different sets of Darknet weights: yolov3-tiny.weights and yolov3.weights.
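After a forward pass, OpenCV's DNN module returns YOLOv3 detections as rows of numbers that still need decoding. Below is a minimal NumPy sketch with a hypothetical function name. It assumes the usual YOLOv3 row layout: normalized centre x, centre y, width and height, then an objectness score, then one score per class. It uses the highest class score as the detection confidence.

```python
import numpy as np

def decode_yolo_rows(rows, frame_w, frame_h, conf_threshold=0.5):
    """Turn YOLOv3 output rows into pixel boxes [left, top, width, height].

    Each row is assumed to be [cx, cy, w, h, objectness, class_score_0, ...],
    with the box values normalized to [0, 1].
    """
    boxes, confidences, class_ids = [], [], []
    for row in np.asarray(rows, dtype=float):
        scores = row[5:]
        class_id = int(np.argmax(scores))
        confidence = float(scores[class_id])
        if confidence < conf_threshold:
            continue  # discard weak detections
        cx, cy = row[0] * frame_w, row[1] * frame_h
        w, h = row[2] * frame_w, row[3] * frame_h
        # Convert the centre/size box to a top-left corner plus width and height.
        boxes.append([int(round(cx - w / 2)), int(round(cy - h / 2)),
                      int(round(w)), int(round(h))])
        confidences.append(confidence)
        class_ids.append(class_id)
    return boxes, confidences, class_ids
```

The surviving boxes usually still overlap. The next step is non-maximum suppression, for example with `cv2.dnn.NMSBoxes(boxes, confidences, conf_threshold, nms_threshold)`.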

Thoughts: YOLO is fast compared to other detection algorithms and can run in hardware-constrained environments without breaking a sweat.

Link of Work:

References

Today's Progress: Learned about different ways to reduce overfitting in a model and studied three regularization methods in depth, including the mathematics behind how they work:

- Ridge Regression

- Lasso Regression

- Elastic Net
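All three methods add a penalty on the weights to the training cost. Below is a minimal NumPy sketch, assuming the bias term is left unpenalized. Ridge is solved in closed form. The Lasso (L1) and Elastic Net (L1/L2 mix with ratio `r`) penalties are shown only as functions, because the L1 term generally has no closed-form minimizer.

```python
import numpy as np

def ridge_closed_form(X, y, alpha):
    """Ridge Regression: minimizes ||X_b theta - y||^2 + alpha * ||w||^2.

    Solution theta = (X_b^T X_b + alpha * A)^-1 X_b^T y, where A is the identity
    with a 0 in the top-left cell so the bias theta[0] is not penalized.
    """
    X_b = np.c_[np.ones((len(X), 1)), X]
    A = np.eye(X_b.shape[1])
    A[0, 0] = 0.0
    return np.linalg.solve(X_b.T @ X_b + alpha * A, X_b.T @ y)

def lasso_penalty(theta, alpha):
    # L1 norm of the weights (bias excluded); tends to drive weights exactly to zero.
    return alpha * np.sum(np.abs(theta[1:]))

def elastic_net_penalty(theta, alpha, r):
    # Mix of the L1 penalty (weight r) and the L2 penalty (weight 1 - r).
    w = theta[1:]
    return r * alpha * np.sum(np.abs(w)) + (1 - r) / 2 * alpha * np.sum(w ** 2)
```

With `alpha = 0`, Ridge reduces to ordinary least squares. Increasing `alpha` shrinks the weights toward zero, and Elastic Net with `r = 1` is just the Lasso penalty.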

Thoughts: Regularized models help avoid overfitting, and a good understanding of how they work is the icing on the cake.

References

Today's Progress: Read about Logistic Regression. Logistic Regression (also called Logit Regression) is commonly used to estimate the probability that an instance belongs to a particular class.

- Estimating Probabilities

- Training and Cost Function

- Decision Boundaries
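The three topics above map onto a few lines of NumPy. Below is a minimal sketch assuming a single feature plus a bias column and plain batch gradient descent; the learning rate and iteration count are illustrative.

```python
import numpy as np

def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))

def predict_proba(X_b, theta):
    """Estimating probabilities: p = sigmoid(x . theta) for each row of X_b."""
    return sigmoid(X_b @ theta)

def log_loss(X_b, y, theta):
    """Cost function: average log loss (cross-entropy) over the training set."""
    p = np.clip(predict_proba(X_b, theta), 1e-12, 1 - 1e-12)  # avoid log(0)
    return float(-np.mean(y * np.log(p) + (1 - y) * np.log(1 - p)))

def fit_logistic(X_b, y, eta=0.5, n_iterations=5000):
    """Training: batch gradient descent on the convex log loss."""
    theta = np.zeros(X_b.shape[1])
    for _ in range(n_iterations):
        gradient = X_b.T @ (predict_proba(X_b, theta) - y) / len(y)
        theta -= eta * gradient
    return theta

def decision_boundary(theta):
    """Decision boundary: with one feature, p = 0.5 where theta0 + theta1 * x = 0."""
    return -theta[0] / theta[1]
```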

References

Today's Progress: Made a binary classifier on a custom dataset. Did a little web scraping to collect anime faces and human faces.

Thoughts: Looking forward to working on GANs with the same dataset.

Today's Progress: Started reading about SVMs (Support Vector Machines) and built an intuition for what an SVM is and how it is used to solve classification problems.

References

Today's Progress: Implemented soft margin classification on the Iris dataset with the scikit-learn library.
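Soft margin classification balances a wide margin against margin violations, controlled by the hyperparameter C. In scikit-learn this is the `C` parameter of `LinearSVC` or `SVC(kernel="linear")`. The NumPy sketch below is a substitute for that code, not a copy of it. It minimizes the soft margin (hinge loss) objective directly with subgradient descent, assuming labels in {-1, +1}. A smaller C tolerates more violations in exchange for a wider margin.

```python
import numpy as np

def fit_soft_margin_svm(X, y, C=1.0, eta=0.001, n_epochs=1000):
    """Linear soft margin SVM trained with full-batch subgradient descent.

    Minimizes 0.5 * ||w||^2 + C * sum_i max(0, 1 - y_i * (w . x_i + b)),
    with labels y_i in {-1, +1}.
    """
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(n_epochs):
        margins = y * (X @ w + b)
        violators = margins < 1  # points inside the margin or misclassified
        grad_w = w - C * (y[violators][:, None] * X[violators]).sum(axis=0)
        grad_b = -C * y[violators].sum()
        w -= eta * grad_w
        b -= eta * grad_b
    return w, b

def predict(X, w, b):
    return np.where(X @ w + b >= 0, 1, -1)
```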

Link of Work: [python code]

Today's Progress: I dug deeper into the mathematics behind the backpropagation algorithm, learning about the calculus, vectorization and cost function behind every neuron in a network.
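The chain-rule bookkeeping of backpropagation is easiest to see in a small vectorized network. Below is a minimal NumPy sketch, assuming a 2-layer network with a tanh hidden layer, a sigmoid output and a cross-entropy cost. `numerical_grad` is a central-difference gradient check, and its estimates should agree with the backpropagated gradients.

```python
import numpy as np

rng = np.random.default_rng(0)

def forward_backward(X, Y, params):
    """Forward and backward pass of a 2-layer network (tanh hidden, sigmoid output).

    X has shape (n_features, m) and Y has shape (1, m): one column per example.
    Returns the mean cross-entropy cost and the gradient of every parameter.
    """
    W1, b1, W2, b2 = params["W1"], params["b1"], params["W2"], params["b2"]
    m = X.shape[1]
    # Forward pass, vectorized over all m examples at once.
    A1 = np.tanh(W1 @ X + b1)
    A2 = 1.0 / (1.0 + np.exp(-(W2 @ A1 + b2)))
    cost = float(-np.mean(Y * np.log(A2) + (1 - Y) * np.log(1 - A2)))
    # Backward pass: the chain rule applied layer by layer, output to input.
    dZ2 = A2 - Y                           # m * dCost/dZ2 for sigmoid + cross-entropy
    grads = {"W2": dZ2 @ A1.T / m, "b2": dZ2.sum(axis=1, keepdims=True) / m}
    dZ1 = (W2.T @ dZ2) * (1.0 - A1 ** 2)   # tanh'(z) = 1 - tanh(z)^2
    grads["W1"] = dZ1 @ X.T / m
    grads["b1"] = dZ1.sum(axis=1, keepdims=True) / m
    return cost, grads

def numerical_grad(X, Y, params, name, eps=1e-6):
    """Central-difference estimate of dCost/d(params[name]), used to check backprop."""
    num = np.zeros_like(params[name])
    for idx in np.ndindex(params[name].shape):
        original = params[name][idx]
        params[name][idx] = original + eps
        cost_plus, _ = forward_backward(X, Y, params)
        params[name][idx] = original - eps
        cost_minus, _ = forward_backward(X, Y, params)
        params[name][idx] = original  # restore the parameter
        num[idx] = (cost_plus - cost_minus) / (2 * eps)
    return num
```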

References