
Where is the script for converting Tencent-format weights to HF format? #44

Comments


Same question.


TencentPretrain

I can't seem to find a script in this repository that converts LLaMA weights from TencentPretrain format to Hugging Face format.

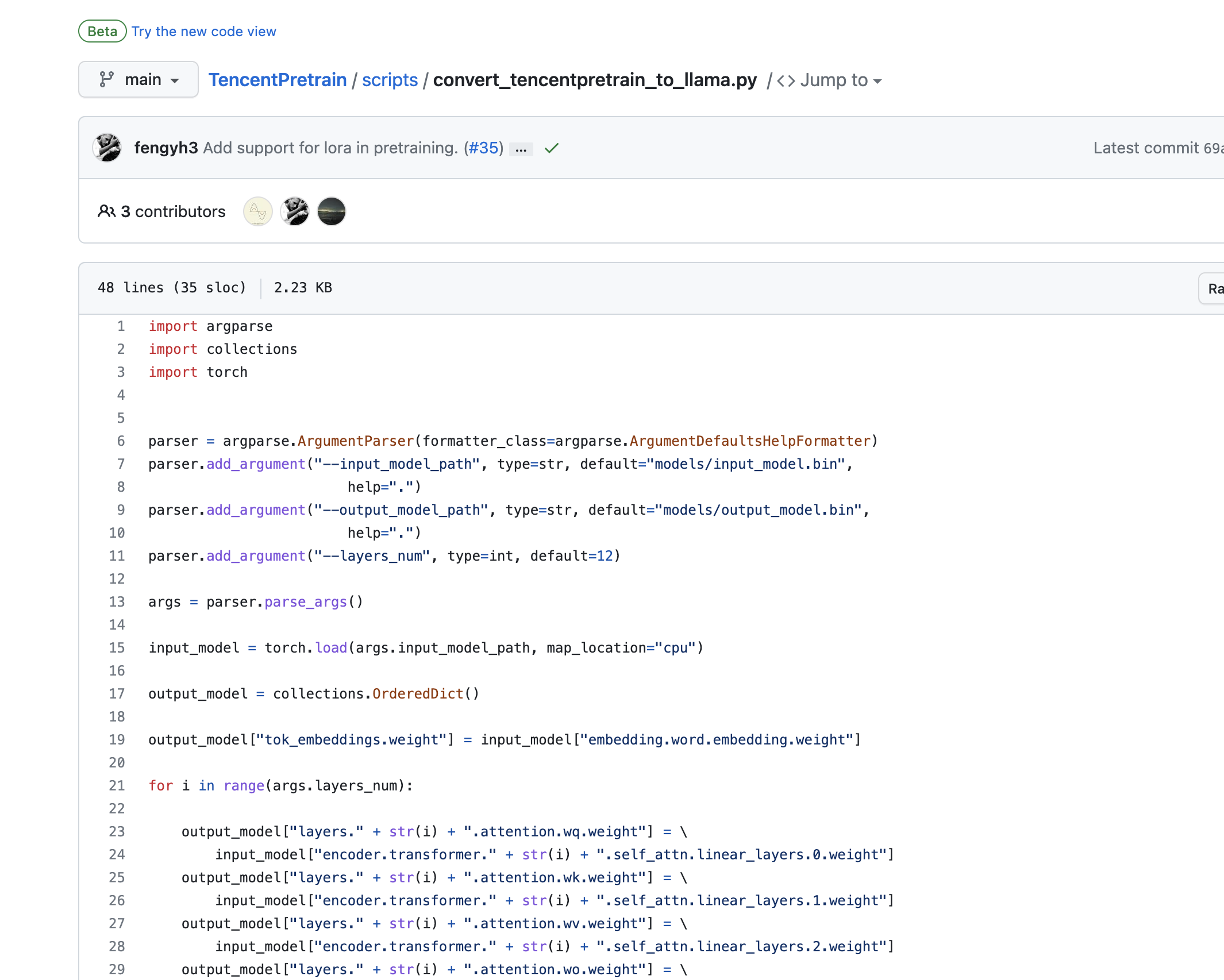

convert_tencentpretrain_to_llama.py

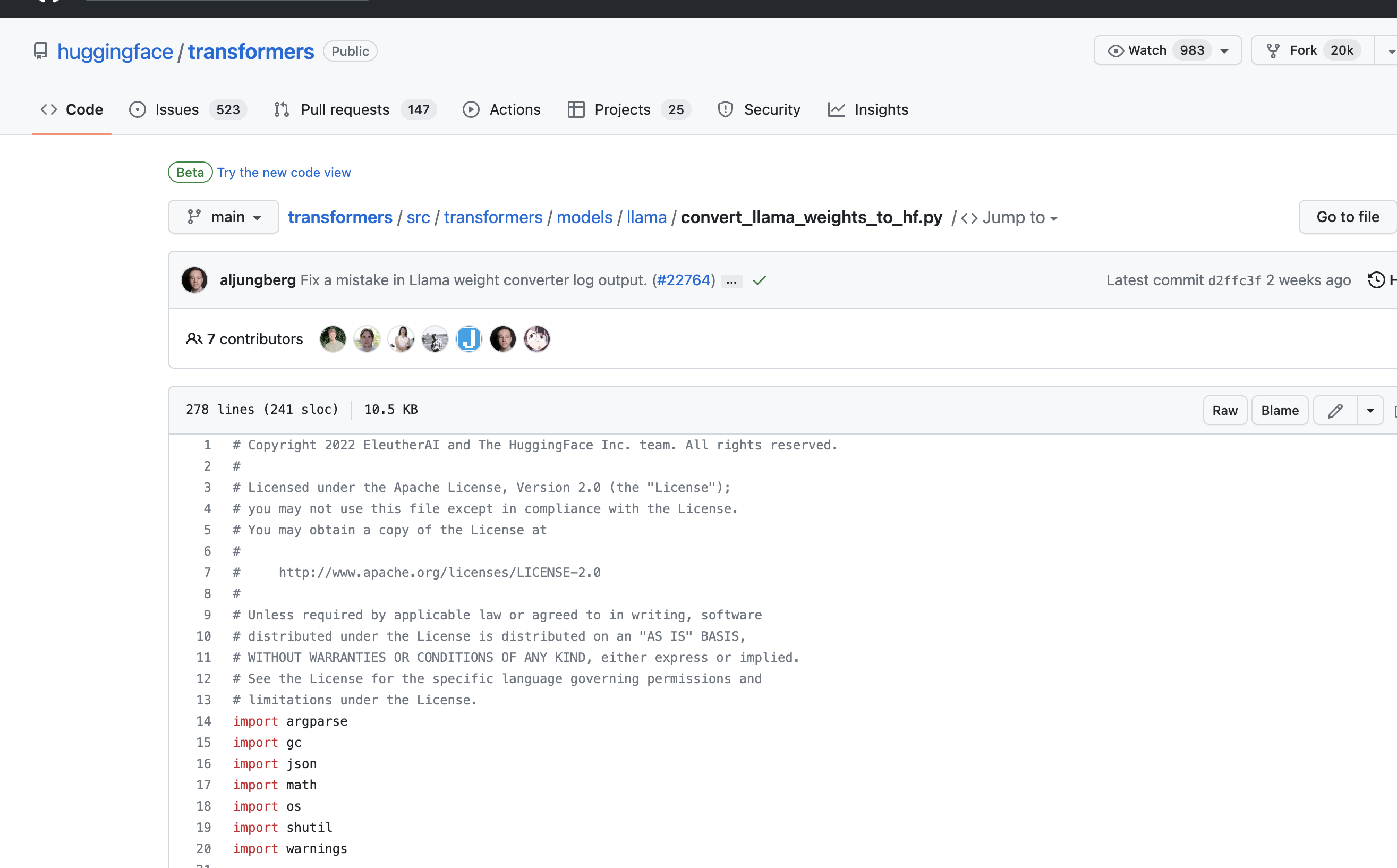

convert_llama_weights_to_hf.py
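The two-step path (TencentPretrain -> LLaMA -> Hugging Face) can be sketched as a small Python helper that only assembles the shell commands and leaves running them to you. Assumptions: both repositories are cloned to the hypothetical local paths `TencentPretrain/` and `transformers/`; the `--input_model_path`, `--output_model_path` and `--layers_num` flags follow the pattern of TencentPretrain's other conversion scripts and should be checked against the script's `--help`; `--input_dir`, `--model_size` and `--output_dir` are the Hugging Face script's arguments. The function name `build_conversion_commands` is hypothetical.

```python
import shlex


def build_conversion_commands(tencent_ckpt, llama_dir, hf_dir, model_size, layers_num,
                              tencentpretrain_root="TencentPretrain",
                              transformers_root="transformers"):
    """Return shell commands for TencentPretrain -> LLaMA -> Hugging Face (a sketch)."""
    # Step 1: TencentPretrain checkpoint -> original (Meta) LLaMA format.
    # The output path is chosen so step 2 finds it under <llama_dir>/<model_size>/;
    # that directory must already exist (this helper does not create it).
    step1 = [
        "python",
        f"{tencentpretrain_root}/scripts/convert_tencentpretrain_to_llama.py",
        "--input_model_path", tencent_ckpt,
        "--output_model_path", f"{llama_dir}/{model_size}/consolidated.00.pth",
        "--layers_num", str(layers_num),  # flag name assumed; verify with --help
    ]
    # Step 2: original LLaMA format -> Hugging Face format.
    step2 = [
        "python",
        f"{transformers_root}/src/transformers/models/llama/convert_llama_weights_to_hf.py",
        "--input_dir", llama_dir,
        "--model_size", model_size,
        "--output_dir", hf_dir,
    ]
    # shlex.join quotes any argument that contains shell-special characters.
    return [shlex.join(step1), shlex.join(step2)]
```

For example, `build_conversion_commands("chatllama-zh-7b.bin", "llama_7b", "chatllama-zh-7b-hf", "7B", 32)` (file and directory names hypothetical) yields one command per step, which you can inspect before running.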


When converting to LLaMA, how should the layer_num parameter be set? Should I use the default (12 layers)?


The script that converts directly to HF is still being tested and will be uploaded soon.


From: 张夜白 ***@***.***>

Sent: Tuesday, April 25, 2023 2:35:01 PM

To: ydli-ai/Chinese-ChatLLaMA ***@***.***>

Cc: Subscribed ***@***.***>

Subject: Re: [ydli-ai/Chinese-ChatLLaMA] Where is the script for converting Tencent-format weights to HF format? (Issue #44)

1. Tencent -> Llama

[image]<https://user-images.githubusercontent.com/24763457/234192949-8b9ee692-7206-4dfc-ab8e-43a77f48d2e1.png>

[convert_tencentpretrain_to_llama.py](https://github.com/Tencent/TencentPretrain/blob/main/scripts/convert_tencentpretrain_to_llama.py)

2. Llama -> Huggingface

[image]<https://user-images.githubusercontent.com/24763457/234193393-638769c1-2059-4c51-9f6d-cad04e8ab33e.png>

[convert_llama_weights_to_hf.py](https://github.com/huggingface/transformers/blob/main/src/transformers/models/llama/convert_llama_weights_to_hf.py) As I understand it, this should be the path.


Looking forward to the author releasing a script that converts ChatLLaMA-zh-7B to ChatLLaMA-zh-7B-hf. Waiting eagerly!

Actually, converting directly to LLaMA format suits me fine, since I use llama.cpp.

Answering my own question: the 7B model has 32 layers and the 13B model has 40 layers.
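Because the default of 12 layers matches neither model, a small lookup with the 32/40 values from this thread, plus a sanity check against the checkpoint's own key names, can catch a wrong `layer_num` before converting. This is a sketch: the key prefix `encoder.transformer.<i>.` is an assumption about how TencentPretrain names LLaMA layers, and the helper names `layers_num_for` and `infer_layers_num` are hypothetical. In practice the keys would come from something like `torch.load(path, map_location="cpu").keys()`.

```python
import re

# Layer counts reported in this thread.
LAYERS_BY_SIZE = {"7B": 32, "13B": 40}

# Assumed TencentPretrain key pattern, e.g. "encoder.transformer.0.self_attn...".
LAYER_KEY = re.compile(r"^encoder\.transformer\.(\d+)\.")


def layers_num_for(model_size):
    """Look up the layer count for a model size such as "7B" or "13b"."""
    try:
        return LAYERS_BY_SIZE[model_size.upper()]
    except KeyError:
        raise ValueError(
            f"unknown model size {model_size!r}; expected one of {sorted(LAYERS_BY_SIZE)}"
        ) from None


def infer_layers_num(keys):
    """Count distinct transformer-layer indices among state-dict key names."""
    indices = {int(m.group(1)) for k in keys if (m := LAYER_KEY.match(k))}
    if not indices:
        raise ValueError("no keys matched the assumed layer-name pattern")
    # Layers should be numbered 0..N-1 with no gaps.
    if indices != set(range(len(indices))):
        raise ValueError("layer indices are not contiguous from 0")
    return len(indices)
```

Comparing `infer_layers_num(keys)` with `layers_num_for("7B")` before running the conversion makes a mismatch fail loudly instead of producing a partially converted model.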


How well does it work after converting to Hugging Face format? Is there any loss?


@riverzhou

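First, use the conversion script in the TencentPretrain project to convert the author's Tencent-format weights to the original LLaMA format (layer_num: 32 layers for the 7B model, 40 layers for the 13B model).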
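Before running `convert_llama_weights_to_hf.py`, the output of that first step has to sit in the directory layout the Hugging Face script reads: as far as I know, `tokenizer.model` at the top of `--input_dir`, plus `params.json` and `consolidated.00.pth` in a subdirectory named after `--model_size`. The sketch below, limited to 7B, writes the original LLaMA-7B `params.json` if it is missing and reports which required files are still absent. Assumptions: the fine-tuned model keeps the original LLaMA-7B hyperparameters and vocabulary, and the directory and function names are hypothetical. The script of that period also assumed a fixed shard count per size (two files for 13B), so a single converted 13B file may need extra handling.

```python
import json
from pathlib import Path

# Hyperparameters from the original LLaMA-7B params.json
# (assumed unchanged by the Chinese fine-tuning).
PARAMS_7B = {
    "dim": 4096,
    "multiple_of": 256,
    "n_heads": 32,
    "n_layers": 32,
    "norm_eps": 1e-06,
    "vocab_size": -1,
}


def prepare_llama_7b_dir(llama_dir):
    """Create <llama_dir>/7B/params.json if absent; return required files still missing."""
    root = Path(llama_dir)
    size_dir = root / "7B"
    size_dir.mkdir(parents=True, exist_ok=True)

    params_path = size_dir / "params.json"
    if not params_path.exists():
        params_path.write_text(json.dumps(PARAMS_7B, indent=2))

    # Files the Hugging Face script is expected to read for a single-shard 7B model.
    required = [
        root / "tokenizer.model",
        size_dir / "consolidated.00.pth",
        params_path,
    ]
    return [str(p) for p in required if not p.exists()]
```

Calling `prepare_llama_7b_dir("llama_7b")` after step 1 and getting an empty list back suggests the layout is complete; any returned paths (typically `tokenizer.model`, copied from the original LLaMA release) still need to be put in place.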
