A tensorlayer implementation of YOLOv2

- python 3.6 or 3.5

- Anaconda 4.2.0

- tensorlayer 1.7.4 or 1.8.0

- tensorflow 1.4.1 or 1.4.0 or 1.8.0

- opencv-python 3.4.*

- (for GUI, tested on Win10) PyQt5

-

Clone the repo and cd into it:

git clone https://github.com/dtennant/tl-YOLOv2.git && cd tl-YOLOv2/ -

Download the weights pretrained on COCO dataset from BaiduYun and unzip it into

pretrained/ -

Run the following commend to detect objects in an image, you can change the input image if you want to:

python main.py --run_mode image --input_img data/before.jpg --output_img data/after.jpg --model_path pretrained/tl-yolov2.ckptFor Video detection, run the following commend:

python main.py --run_mode video --input_video data/source.avi --output_video data/target.avi --model_path pretrained/tl-yolov2.ckptYou can checkout the detailed commend-line options by type in python3 main.py -h, the output:

usage: main.py [-h] [--run_mode {video,image}] [--input_video INPUT_VIDEO]

[--output_video OUTPUT_VIDEO] [--input_img INPUT_IMG]

[--output_img OUTPUT_IMG] [--model_path MODEL_PATH]

optional arguments:

-h, --help show this help message and exit

--run_mode {video,image}

the mode will be video or image

--input_video INPUT_VIDEO

the video to be processed, default: capture from the

Camera(if available)

--output_video OUTPUT_VIDEO

the path to save the video, default: play on the

screen(not saving)

--input_img INPUT_IMG

the image to be processed

--output_img OUTPUT_IMG

the path to save the output img

--model_path MODEL_PATH

the path to the model ckpt

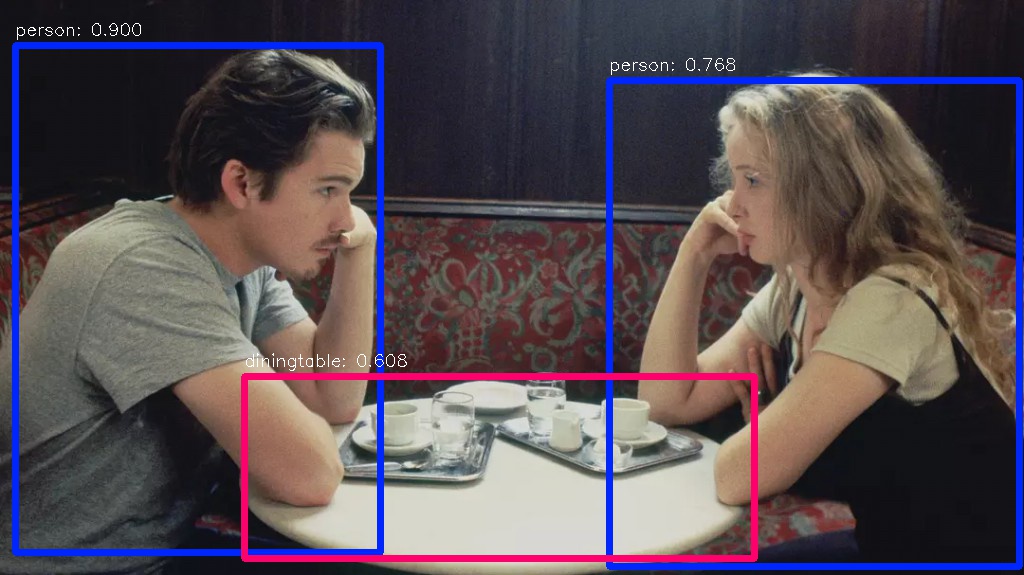

- Result:

Image Processing Result

Video Processing Result (if you are in China, maybe you can't see it):

-

Clone the repo and cd into it:

git clone https://github.com/dtennant/tl-YOLOv2.git && cd tl-YOLOv2/ -

Download the weights pretrained on COCO dataset from BaiduYun and unzip it into

pretrained/ -

Run

python gui.py, select the file you want to process, and start processing (support both video and image files)

- Add MobileNet to achieve real-time Detection on CPUs (My Machine is i5-7200u, which takes 1 second to process 1 frame)

- Add Training phase, See this issue

- Change PassThroughLayer and MyConcatLayer into proper tensorlayer layers

- Refactoring

gui.py(I am not very familiar with PyQt5)