-

Notifications

You must be signed in to change notification settings - Fork 395

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Add distributed tracing for Celery #1194

Add distributed tracing for Celery #1194

Conversation

|

I'd also like to find a way to test this against an actual running Celery deployment, is there an established way of doing that? Or would I need to package up my fork and install it in another application somewhere? |

|

I'm skeptical the segfault in SQLAlchemy tests have anything to do with this PR but I'm not sure how to re-run CI 🤔 |

Don't worry, this happens all the time, not related to your PR. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

in general this looks good, not sure I have any immediate feedback on the tests, I might need to sit with this for a minute to provide some feedback

have you tested this on a celery app? curious how it is working!

ddtrace/contrib/celery/signals.py

Outdated

| # Note: adding tags from `traceback` or `state` calls will make an | ||

| # API call to the backend for the properties so we should rely | ||

| # only on the given `Context` | ||

| attach_span(task, task_id, span, is_publish=True) | ||

|

|

||

| headers = kwargs.get('headers') | ||

| if config.celery['distributed_tracing'] and headers is not None: |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

should we add headers to kwargs if it doesn't exist? what are the cases when headers won't be present?

can headers be set in args?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I believe headers should always exist and be a dictionary in the current version of Celery, this is being cautious for previous versions. I'll attempt to verify that further when I get this installed in a running Celery app for testing.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Should we inject header if it is None?

e.g.

if config.celery['distributed_tracing']:

kwargs.get('headers')

if kwargs.get('headers'):

kwargs['headers'] = dict()

# inject into `headers` ....If there ever was a case when headers is not provided, do we still want to attempt to distribute?

(also, totally down for being cautious! in general the approach is good)

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I'm trying to dig in more in Celery to understand exactly how all this works, but I'm not having much luck either with the documentation or the code itself.

I did find this note in the Task docs:

headers: | Mapping of message headers sent with this task message (may be None).

I'm not sure that the headers being None has any special meaning, though, as you're definitely allowed to write to them during these signals. I think we should still be fine to distribute in that case.

|

Alright, I was able to bundle up my fork as a wheel and test this against a distributed Celery install. Good thing, too, because I found yet another bug in Celery (celery/celery#4875) causing differences between how things work in our tests compared to in a real distributed environment. With this last commit, though, I think we should be good to go. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Just two small things and I think we're good to go! Thanks for the contribution @thieman! 😄

|

|

||

|

|

||

| class CeleryDistributedTracingIntegrationTask(CeleryBaseTestCase): | ||

| """Distributed tracing is tricky to test for two reasons: |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

👍 thanks for the test and great accompanying documentation too! Much appreciated 😄

1ffaeb4

to

0dde980

Compare

|

@Kyle-Verhoog comments addressed and rebased onto master, thanks! edit: oh I see there's a black check now, I'll try to get that to pass. I'll squash these once I'm passing |

57bc0e3

to

69d6cfe

Compare

|

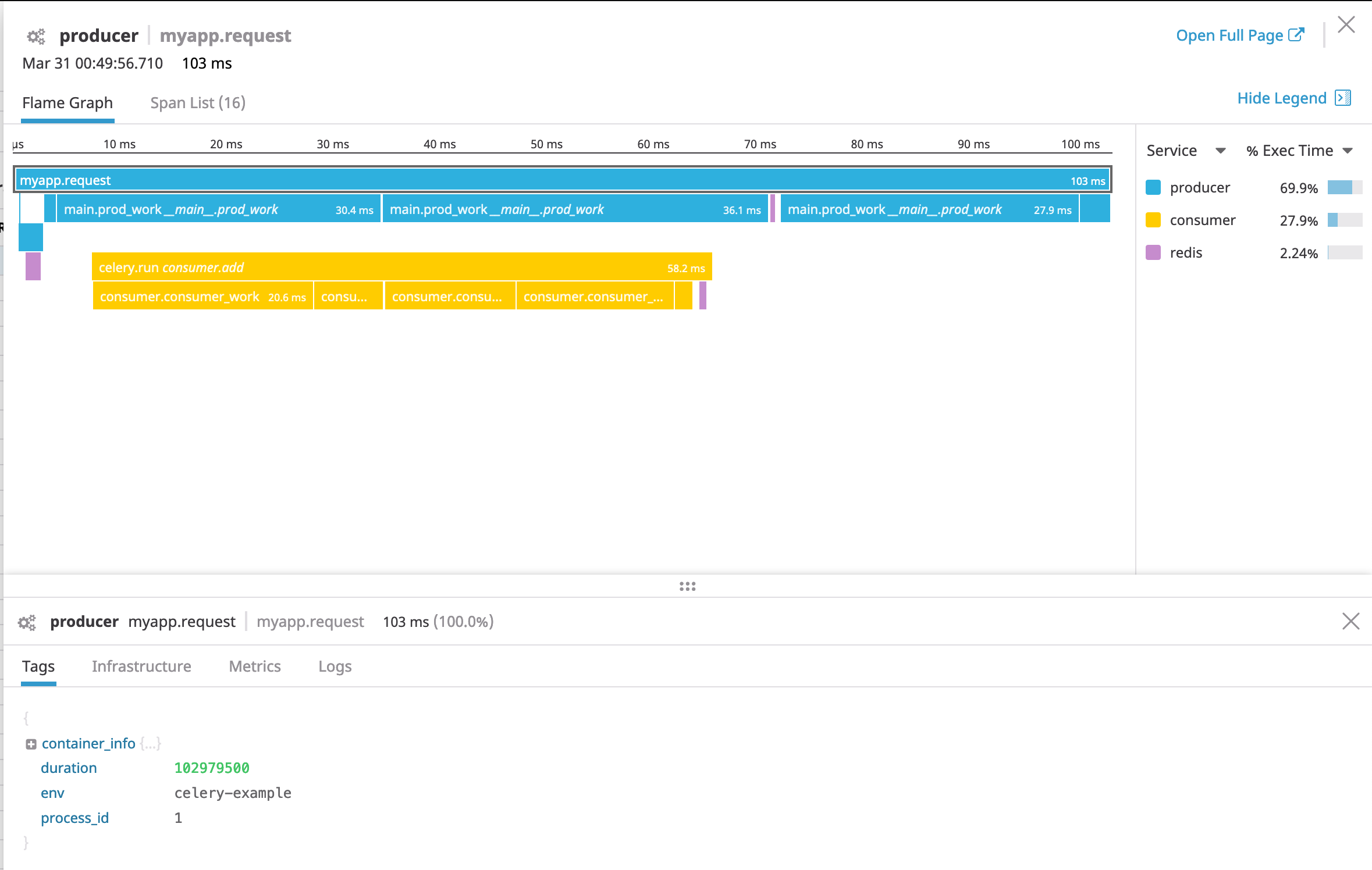

@thieman thanks for the quick response! I'm going to try to find some time today to set up a sample app to see what the traces look like. Also need to think about whether we want the distributed tracing enabled by default or not 🤔. |

Out of curiosity, what do you see as the downsides of enabling it? |

|

One reason is for tasks/jobs that may run minutes/hours/days later. The resulting trace UI isn't great for that at the moment. |

|

@thieman I created a small demo app in our trace-examples repo to play around with the distributed tracing and it looks great, good work! I do think we want to change the integration over to be disabled by default because of the above reason. But then this is good to merge 😄 |

69d6cfe

to

d570279

Compare

|

Switched to default off. I didn't add any explanation of why to the docstring in init, wasn't sure if that was the right place for it. |

|

I've been stalking this PR since february. What's left to do to get this merged? |

|

I didn't even realize the tests actually failed, I thought it was another flake like from before. Will take a look. |

|

@Kyle-Verhoog apologies for the delay but this PR is hopefully good to go now |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@thieman no worries! Thanks for seeing it through! 😄

|

Thanks everyone! |

This is an attempt to implement distributed tracing in the Celery contrib per #1179.

I ran into one major snag, which is that we rely on a signal that Celery simply does not execute when running synchronously, which is how our Celery integration tests work: celery/celery#3864

Due to this bug, there's absolutely nothing in our test suite actually executing our pre-publish signals from what I can tell. I attempted to test as much of the actual integration as I could, but I'm all ears if anybody sees a way to get around this restriction.