-

-

Notifications

You must be signed in to change notification settings - Fork 554

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Plot just one ROC curve in the binary case (#1040) #1041

Conversation

Remove per-class ROC curves and micro- and macro- averaging in the case of binary classification for estimators with predict_proba.

|

@VladSkripniuk The ROC Curve Visualizer was built with multi-class cases in mind. If you don't want the micro and macro curves... you can turn them off. |

|

@lwgray my concern is that

So I have to change

|

| y_scores = method(X) | ||

| if y_scores.ndim == 2 and y_scores.shape[1] == 2: | ||

| y_scores = y_scores[:,1] | ||

| return y_scores |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Would prefer to use sklearn.utils.multiclass.type_of_target here just as a side note, but we'd also like the user to be able to specify if this is a true binary case or if per_class is actually valid.

|

@VladSkripniuk I've got to say, I was using ROCAUC with the binary case this weekend, and I was also finding the params thing annoying as well. @lwgray is right that we built this with the multiclass case in mind primarily, and we also have to be aware of the true binary vs (a v not a/b v not b) 2 class case. It would, however, be nice to make the logic a bit more robust. I just took a look over the PR (not a full review, just a glance) - and was wondering why so many tests were removed? It looks like there is an interesting start to making the visualizer more robust though. @lwgray are you reviewing this PR? |

|

@bbengfort I did start to review this PR and as we discussed a couple weeks back, I too was concerned about the removed tests and the overall robustness of this solution. I definitely could use your assistance if we are going to work this into a YB quality PR . We could table it for now and continue the conversation back on the original issue page #1040 and work on the other PRs @VladSkripniuk has posted |

|

Hi @VladSkripniuk and thanks for your PR! @bbengfort and I took a look this morning and we're going to go ahead and close this PR in favor of your newer solution in #1056. Stay tuned for a review on that PR in the next few days and thank you for your patience! |

Remove per-class ROC curves and micro- and macro- averaging in the case

of binary classification for estimators with predict_proba.

This PR fixes #1040 which reported that

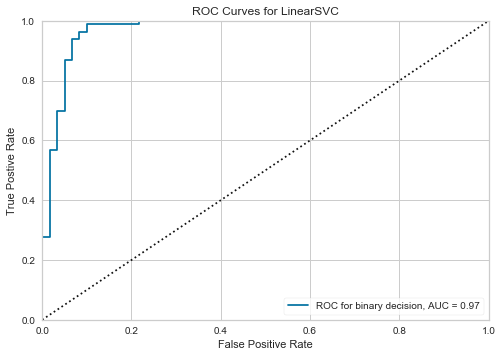

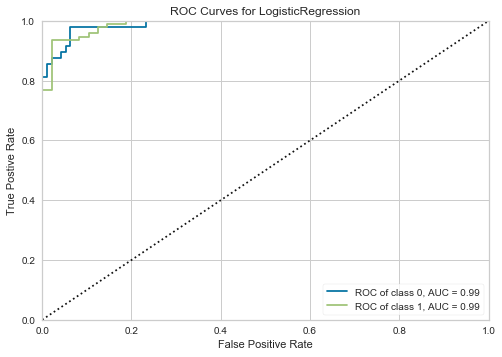

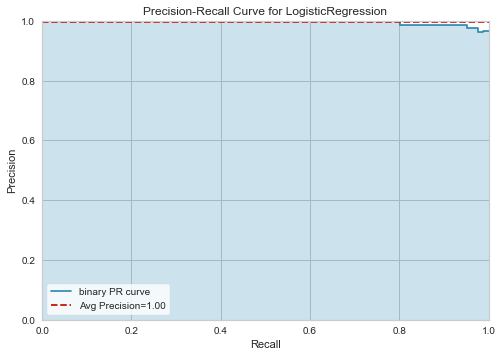

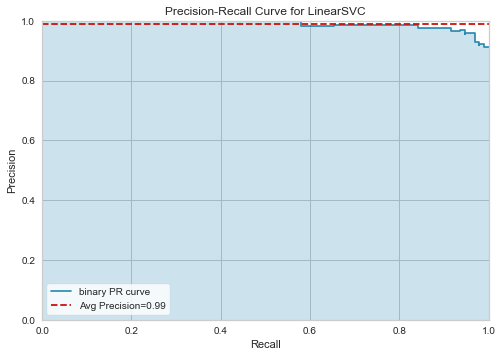

ROCAUCdraws per-class curves in case if estimator haspredict_proba. This is not consistent with behavior ofROCAUCwhen estimator only hasdecision_functionand is not consistent withPrecisionRecallCurvewhich draws single curve in the case of binary classification.I have made the following changes:

ROCAUCto treat binary classifiers withpredict_probaas binary problem, not multiclass.Sample Code and Plot

CHECKLIST

pytest?make html?