
Vuforia for Blocks

FTC robots have many ways to autonomously navigate the game field. This tutorial introduces Vuforia, a software tool that can use camera images to determine the robot’s location on the field. A separate tutorial describes TensorFlow Lite, a tool that supplements Vuforia to identify and track certain game elements as 3D objects.

These two tools combine several high-impact technologies: vision processing, machine learning, and autonomous navigation. Awareness of this software can spark further interest and exploration by FTC students as they prepare for future studies and careers in high technology.

Vuforia is PTC's augmented reality (AR) technology that includes image detection and image tracking capabilities. Vuforia has been available to FTC students since 2016. Each season, the FTC software is pre-loaded with 2D image targets that are placed in well-defined locations on the game field. Using Vuforia, if a robot "sees" one of these image targets, the robot can determine its location on the game field in an absolute or global frame of reference.

Image credit: Phil Malone

For the 2019-2020 SKYSTONE season, the 13 pre-loaded image targets are:

- 8 pictures (8.5 x 11 inches) on the field perimeter, 2 per wall

- 4 pictures (10 x 2 inches) on the narrow vertical pillars of the Skybridge

- 1 picture (4 x 7 inches) on one side of each Skystone (2 per Alliance)

Most of these pictures remain in a fixed location, allowing a team’s Op Mode to read and process data on the robot’s global field location and orientation. This allows autonomous navigation, an essential element of modern robotics.

For the image on the Skystone, which can move on the field, Vuforia reports the robot’s location and orientation relative to the Skystone. This allows the robot to approach and retrieve the Skystone. Note that Google's TensorFlow technology can also be used to locate and navigate to Skystone game elements, as described in a separate tutorial called TensorFlow for Blocks.

This tutorial describes the Op Modes provided in the FTC Blocks sample menu:

ConceptVuforiaNavSkystone.blk

and

ConceptVuforiaNavSkystoneWebcam.blk

Java samples are also available in OnBot Java and Android Studio:

ConceptVuforiaSkyStoneNavigation.java

and

ConceptVuforiaSkyStoneNavigationWebcam.java

The Java samples are much more detailed, revealing the underlying programming to manage the Vuforia software and to transform the picture location to a global field location. Before working with that code, Java programmers may benefit from reading this Blocks tutorial and testing the Blocks Op Mode on a game field. The Java sample programs are well documented with clear, detailed comments.

The Blocks and the Java sample Op Modes share the same programming logic. Before looking at the details of the sample Op Modes, it is helpful to step back and get a "big picture" view of what the Vuforia Op Modes do:

- Initialize the Vuforia software (including specifying the location and orientation of the phone on the robot).

- Activate the Vuforia software (i.e., begin "looking" for known 2D image targets).

- Loop while the Op Mode is still active:

  - Check to see if any image targets have been detected in the camera's current field of view.

  - If so, extract and display location (X, Y, and Z coordinates) and orientation (rotation about the Z axis) information for the robot.
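The flow above can be sketched in plain Java. This is a minimal, hypothetical illustration: `VisionSystem` and `FakeVision` are invented stand-ins for the Vuforia Blocks, not FTC SDK classes, and a fixed number of loop cycles stands in for "while the Op Mode is active".

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Iterator;
import java.util.List;

// Sketch of the Vuforia Op Mode flow: initialize, activate, loop, deactivate.
// VisionSystem and FakeVision are hypothetical stand-ins, not FTC SDK classes.
class OpModeFlowSketch {

    /** Hypothetical stand-in for the Vuforia Blocks used by the sample. */
    interface VisionSystem {
        void initialize();      // like initVuforia: apply camera and display settings
        void activate();        // start "looking" for known image targets
        String visibleTarget(); // name of a target in view, or null if none
        void deactivate();      // turn Vuforia off to free resources
    }

    /** Replays scripted per-cycle results and logs lifecycle calls (for testing). */
    static class FakeVision implements VisionSystem {
        final List<String> log = new ArrayList<>();
        private final Iterator<String> script;

        FakeVision(String... perCycleResults) {
            script = Arrays.asList(perCycleResults).iterator();
        }

        public void initialize() { log.add("init"); }
        public void activate() { log.add("activate"); }
        public String visibleTarget() { return script.hasNext() ? script.next() : null; }
        public void deactivate() { log.add("deactivate"); }
    }

    /** Runs the flow for a fixed number of cycles, standing in for opModeIsActive(). */
    static List<String> run(VisionSystem vision, int loopCycles) {
        List<String> telemetry = new ArrayList<>();
        vision.initialize();
        vision.activate();
        // On a robot, waitForStart() would be here, ending the initialization section.
        for (int cycle = 0; cycle < loopCycles; cycle++) {
            String target = vision.visibleTarget();
            telemetry.add(target != null ? "Visible: " + target : "No targets detected");
        }
        vision.deactivate();
        return telemetry;
    }

    public static void main(String[] args) {
        FakeVision fake = new FakeVision(null, "Stone Target", null);
        System.out.println(run(fake, 3));
        System.out.println(fake.log);
    }
}
```

Running `main` prints `[No targets detected, Visible: Stone Target, No targets detected]` followed by `[init, activate, deactivate]`: Vuforia is initialized and activated once, checked on every loop cycle, and deactivated at the end.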

On a laptop connected via Wi-Fi to the RC phone or REV Control Hub, go to the Blocks screen and click Create New Op Mode. Type a name for your example program, and choose the appropriate Vuforia sample. If using the RC phone’s camera, choose the first sample, ConceptVuforiaNavSkystone.

If using a webcam with a REV Expansion Hub or REV Control Hub, choose the second sample, ConceptVuforiaNavSkystoneWebcam. Then click OK to enter the programming screen.

This Op Mode may appear long, but has a simple structure. The first section of Blocks is the main program, followed by four shorter functions. A function is a named collection of Blocks to do a certain task. This organizes and reduces the size of the main program, making it easier to read and edit. In Java these functions are called methods.

This program shows various Blocks for basic use of Vuforia. Other related Blocks can be found in the Vuforia folder, under the Utilities menu at the left side. ‘Optimized for SKYSTONE’ contains many of the game-specific Blocks in this sample. The other headings have a full suite of Vuforia commands for broader, advanced use.

The first section of an Op Mode is called initialization. These commands are run one time only, when the INIT button is pressed on the Driver Station (DS) phone. This section ends with the command waitForStart.

Besides the Telemetry messages, this section has only two actions, circled above: initialize Vuforia (specify your settings), and activate Vuforia (turn it on). The first action’s Block is long enough to justify its own Function called ‘initVuforia’, shown below the main program.

When using the RC phone camera, the FTC Vuforia software has 11 available settings to indicate your preferred usage and the camera’s location. This example uses the phone’s BACK camera, requiring the phone to be rotated -90 degrees about its long Y axis. For this sample Op Mode, the RC phone is used in Portrait mode.

When using a webcam, Vuforia has 12 available settings. The RC cameraDirection setting is replaced with two others related to the webcam: its configured name, and an optional calibration filename.

Other settings include ‘Monitoring’, which creates a live video feed on the RC phone and enables a preview image on the DS phone. This is useful for initial camera pointing, and to verify Vuforia’s recognition.

‘Feedback’ can display AXES, showing Vuforia’s X, Y, Z axes of the reference frame local to the image. The 3 colored lines indicate Vuforia has recognized and is analyzing the image. This local data is transformed by the FTC software to report the robot’s location in a global (field) reference frame.

‘Translation’ allows you to specify the phone’s location on the robot. Using these offsets, the FTC software can report the field position of a specific place on the robot itself (not the camera). Likewise, ‘Rotation’ takes into account the phone’s angled or tilted orientation.
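The FTC software applies these offsets for you, but the idea behind ‘Translation’ can be seen in a simplified 2D sketch. It assumes the camera's field position and the robot's heading are already known, and it uses a forward/left offset convention chosen only for this illustration: the robot's center is the camera position minus the offset rotated into field coordinates. The FTC software works in 3D with its own conventions; this is not its implementation.

```java
import java.util.Locale;

// 2D sketch: converting the camera's field position into the field position of
// the robot's center. Simplified illustration only; the FTC software does its own
// 3D version of this using the Translation and Rotation settings.
class CameraOffsetSketch {

    /** A field position in inches. */
    static final class Position {
        final double x;
        final double y;

        Position(double x, double y) {
            this.x = x;
            this.y = y;
        }

        @Override
        public String toString() {
            return String.format(Locale.US, "(%.2f, %.2f)", x, y);
        }
    }

    /**
     * camera: the camera's position on the field.
     * headingDeg: robot heading, counterclockwise from the field +X axis.
     * offsetForward, offsetLeft: where the camera sits relative to the robot's
     * center, measured in the robot's own frame (assumed convention).
     */
    static Position robotCenterFromCamera(Position camera, double headingDeg,
                                          double offsetForward, double offsetLeft) {
        double h = Math.toRadians(headingDeg);
        // Rotate the robot-frame offset into field coordinates.
        double offsetX = offsetForward * Math.cos(h) - offsetLeft * Math.sin(h);
        double offsetY = offsetForward * Math.sin(h) + offsetLeft * Math.cos(h);
        // The robot's center is the camera position minus the rotated offset.
        return new Position(camera.x - offsetX, camera.y - offsetY);
    }

    public static void main(String[] args) {
        // Camera mounted 6 inches ahead of center; robot facing +Y (90 degrees).
        Position center = robotCenterFromCamera(new Position(10, 20), 90, 6, 0);
        System.out.println(center); // prints (10.00, 14.00)
    }
}
```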

Lastly, in the main program’s initialization section, there remains only the command to activate Vuforia.

This starts the Vuforia software and opens or enables the Monitoring images, if specified. The REV Control Hub offers a video feed only through its HDMI port, which is not allowed during competition.

At this point in the Op Mode, it is not required for Vuforia to recognize a stored image. This can happen in the next section.

The ‘run’ section begins when the Start button (large arrow icon) is pressed on the DS phone.

The first IF structure encompasses the entire program, to ensure the Op Mode was properly activated. This is an optional safety measure.

Next the Op Mode enters a Repeat loop that includes all the Vuforia-related commands. In this Sample, the loop continues while the Op Mode is active, namely until the Stop button is pressed.

In a competition Op Mode, you will add other conditions to end the loop. Examples might be: loop until Vuforia finds what you seek, loop until a time limit is reached, loop until the robot reaches a location on the field. After the loop, your main program will continue with other tasks.
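A loop with several exit conditions might look like the following sketch. The suppliers `opModeActive`, `targetVisible` and `clockMillis` are hypothetical hooks (not FTC SDK calls), which lets the loop be tested off the robot with fake values. Returning an `ExitReason` tells the rest of the program why the loop ended.

```java
import java.util.function.BooleanSupplier;
import java.util.function.LongSupplier;

// Sketch of a search loop with extra exit conditions. The three suppliers are
// hypothetical hooks standing in for opModeIsActive(), a Vuforia visibility
// check, and a timer; they are not FTC SDK calls.
class LoopExitSketch {

    enum ExitReason { TARGET_FOUND, TIME_LIMIT, STOPPED }

    static ExitReason searchLoop(BooleanSupplier opModeActive,
                                 BooleanSupplier targetVisible,
                                 LongSupplier clockMillis,
                                 long timeLimitMillis) {
        long start = clockMillis.getAsLong();
        while (opModeActive.getAsBoolean()) {
            if (targetVisible.getAsBoolean()) {
                return ExitReason.TARGET_FOUND;   // found what we were looking for
            }
            if (clockMillis.getAsLong() - start >= timeLimitMillis) {
                return ExitReason.TIME_LIMIT;     // give up after the time limit
            }
            // On a robot: update Telemetry, or turn slowly to scan for targets.
        }
        return ExitReason.STOPPED;                // Stop button pressed
    }

    public static void main(String[] args) {
        long[] fakeTime = {0};
        int[] checks = {0};
        ExitReason reason = searchLoop(
                () -> true,                       // Op Mode stays active
                () -> ++checks[0] >= 3,           // target appears on the 3rd check
                () -> fakeTime[0] += 100,         // each clock read advances 100 ms
                5000);
        System.out.println(reason);               // prints TARGET_FOUND
    }
}
```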

Inside the loop, the program checks whether Vuforia has found specific pre-loaded images. This example Op Mode checks one at a time, in the order listed, re-using a Function called ‘isTargetVisible’. If a match is found, this program performs an action with another Function called ‘processTarget’. The first two searches are shown here:

The first search is for an image called Stone Target, the dark picture applied to a Skystone. The FTC Vuforia software calls this label trackableName. The name is given as a parameter to the function called ‘isTargetVisible’.

Programming tip: when building a new Function in Blocks, click the blue gear icon to add one or more ‘input names’ or parameters.

The Op Mode’s second function, ‘isTargetVisible’, returns a Boolean value (True or False) to this IF block. If the reply is True, the DO branch is performed; in this case, the DO command runs the function called ‘processTarget’.

If the reply is False, the camera’s view did not include the Stone Target. The program moves to the ELSE IF branch, now seeking an image called Blue Front Bridge (picture on the front side of the Blue Alliance Skybridge support). If the function isTargetVisible returns a True value, the processTarget function will be run. Otherwise the Op Mode will seek the next image, and so on.

If no matches were found after checking all the desired images, the Op Mode reaches the final ELSE branch.

This builds a Telemetry message (a line of text for the DS phone) stating that none of the desired images were found in the current cycle of the Repeat loop.
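The IF / ELSE IF chain can also be written as a loop over an ordered list of target names. In this hypothetical sketch, the `isTargetVisible` predicate stands in for the Blocks function of the same name. The loop stops at the first match, just as the chain skips the remaining ELSE IF branches, and a `null` result plays the role of the final ELSE.

```java
import java.util.Arrays;
import java.util.List;
import java.util.function.Predicate;

// Sketch of the IF / ELSE IF chain as a loop over target names. The predicate is
// a hypothetical stand-in for the 'isTargetVisible' function.
class TargetSearchSketch {

    /** Returns the first visible target in list order, or null (the final ELSE). */
    static String firstVisibleTarget(List<String> targetNames,
                                     Predicate<String> isTargetVisible) {
        for (String name : targetNames) {
            if (isTargetVisible.test(name)) {
                return name;   // like a DO branch: 'processTarget' would run here
            }
        }
        return null;           // like the final ELSE branch
    }

    public static void main(String[] args) {
        // Only the first two names from the sample are listed; add the others in order.
        List<String> names = Arrays.asList("Stone Target", "Blue Front Bridge");
        String found = firstVisibleTarget(names, name -> name.equals("Blue Front Bridge"));
        System.out.println(found != null ? "Visible: " + found : "No Targets Detected");
    }
}
```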

The last action of the loop is to provide, or update, the DS phone with all Telemetry messages built during this loop cycle. Those messages might have been created in a Function that was used, or called, during the loop.

During this Repeat loop, two Functions were called; their descriptions follow below.

This example Op Mode ends by turning off the Vuforia software. When your competition Op Mode is finished using Vuforia, deactivation will free up computing resources for the remainder of your program.

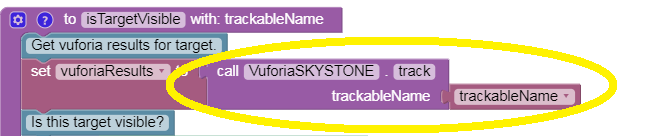

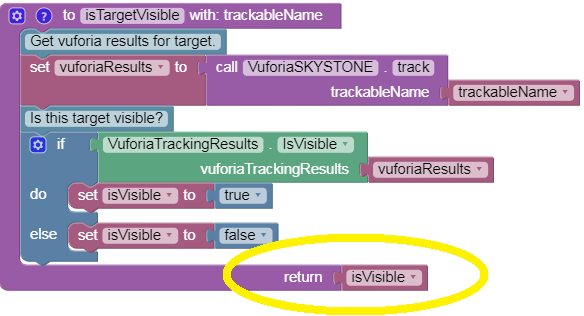

Recall that this second function was given, or passed, a parameter called ‘trackableName’. This contains the name of the pre-loaded image currently being sought by the main program.

Programming tip: By passing the name as a parameter, this same function can be reused 13 times, to look for each of the images. This is more efficient than 13 similar functions, each seeking 1 particular image.

Programming tip: in Blocks, a Function’s parameters are automatically created as Variables. This makes their contents available inside the function and elsewhere.

To understand this function’s first command, read it from right to left.

The FTC Vuforia software contains a method called ‘track’. This is the central task; it looks for a specific image named as an input parameter. The yellow-circled command calls that method, passing it the same parameter from the main program: trackableName.

The ‘track’ method examines the current camera image, to see if it contains any form of the named pre-stored picture. The recognized image, if any, might be distant (small), at an angle (distorted), in poor lighting (darker), etc. In any case, the ‘track’ method returns various pieces of information about its analysis.

All of that returned information is passed to the left side of the command line.

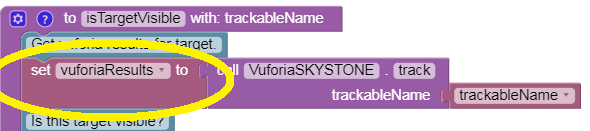

This Blocks Op Mode uses a Variable called vuforiaResults. This is a special variable holding multiple pieces of information, not just one number, or one text string, or one Boolean value. The yellow-circled command sets that variable to store the entire group of results passed from the Vuforia ‘track’ method.

This function is interested in only one thing: was there a match?

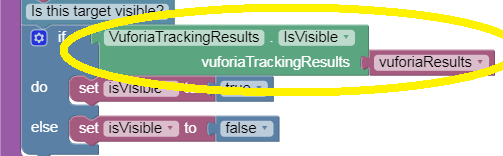

The yellow-circled command calls a different Vuforia method called ‘IsVisible’. This method examines the full set of tracking results, now stored in the variable ‘vuforiaResults’, and determines whether a target was recognized in the camera image. It returns a Boolean value of True or False.

Finally, the Op Mode can set a simple Boolean variable with a similar name, called ‘isVisible’.

This true/false answer is sent, or returned, to the main program.

Programming tip: when creating a new Function in Blocks, look for the separate menu choice with a return feature, as shown above.
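Put together, the ‘isTargetVisible’ function might read as follows in Java. `TrackingResults` and `Tracker` are hypothetical stand-ins for Vuforia's tracking results and its ‘track’ method; the real Java sample uses different FTC classes. The static `vuforiaResults` field mimics the Blocks Variable, which stays available to other functions such as ‘processTarget’.

```java
// Sketch of the 'isTargetVisible' function. TrackingResults and Tracker are
// hypothetical stand-ins for the Vuforia tracking results and the 'track'
// method; they are not the FTC SDK API.
class IsTargetVisibleSketch {

    /** Hypothetical group of results, like the vuforiaResults variable. */
    static final class TrackingResults {
        final String name;
        final boolean isVisible;

        TrackingResults(String name, boolean isVisible) {
            this.name = name;
            this.isVisible = isVisible;
        }
    }

    /** Hypothetical stand-in for the Vuforia 'track' method. */
    interface Tracker {
        TrackingResults track(String trackableName);
    }

    // Shared variable, like a Blocks Variable: processTarget could read it later.
    static TrackingResults vuforiaResults;

    static boolean isTargetVisible(Tracker tracker, String trackableName) {
        // Right to left: call 'track', then store the whole group of results.
        vuforiaResults = tracker.track(trackableName);
        // Keep only the one answer this function needs: was there a match?
        boolean isVisible = vuforiaResults.isVisible;
        return isVisible;
    }

    public static void main(String[] args) {
        // Fake tracker that only "sees" the Stone Target.
        Tracker fake = name -> new TrackingResults(name, name.equals("Stone Target"));
        System.out.println(isTargetVisible(fake, "Stone Target"));      // prints true
        System.out.println(isTargetVisible(fake, "Blue Front Bridge")); // prints false
    }
}
```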

This third function is called by the main program only if the named target was recognized. Now the Op Mode can take action based on a recognized image.

This example Op Mode does not take physical action, such as operating motors or servos. In a competition Op Mode, you will program the robot to react to a successful recognition, probably to continue navigating on the field.

Instead, this program simply displays Telemetry, showing some contents of the tracking results. Those results are still stored in the special variable called vuforiaResults, and can be extracted using other Vuforia methods besides ‘IsVisible’. This example Op Mode calls these 5 basic methods:

- Name: returns the name of the recognized target

- X: returns the X coordinate of the robot’s estimated location on the field

- Y: returns the Y coordinate of the robot’s estimated location on the field

- Z: returns the Z coordinate of the robot’s estimated location on the field

- ZAngle: returns the estimated orientation of the robot on the field (rotation about the Z axis)
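A ‘processTarget’-style function could assemble these five values into Telemetry lines, as in this sketch. `TrackingResults` is a hypothetical stand-in; X, Y and Z are assumed to be in millimeters and ZAngle in degrees, and the values are shown without unit conversion. A `LinkedHashMap` keeps the lines in display order.

```java
import java.util.LinkedHashMap;
import java.util.Locale;
import java.util.Map;

// Sketch of 'processTarget': turning one set of tracking results into Telemetry
// lines. TrackingResults is a hypothetical stand-in; X, Y, Z are assumed to be in
// millimeters (as Vuforia reports distances) and zAngle in degrees.
class ProcessTargetSketch {

    static final class TrackingResults {
        final String name;
        final double x, y, z, zAngle;

        TrackingResults(String name, double x, double y, double z, double zAngle) {
            this.name = name;
            this.x = x;
            this.y = y;
            this.z = z;
            this.zAngle = zAngle;
        }
    }

    /** Builds caption/value pairs in display order, one per Telemetry line. */
    static Map<String, String> processTarget(TrackingResults r) {
        Map<String, String> telemetry = new LinkedHashMap<>();
        telemetry.put("Name", r.name);
        telemetry.put("X (mm)", String.format(Locale.US, "%.1f", r.x));
        telemetry.put("Y (mm)", String.format(Locale.US, "%.1f", r.y));
        telemetry.put("Z (mm)", String.format(Locale.US, "%.1f", r.z));
        telemetry.put("Rotation about Z (deg)", String.format(Locale.US, "%.1f", r.zAngle));
        return telemetry;
    }

    public static void main(String[] args) {
        TrackingResults r = new TrackingResults("Blue Front Bridge", 150, -300, 146, 90);
        processTarget(r).forEach((caption, value) -> System.out.println(caption + ": " + value));
    }
}
```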

The Testing and Navigation sections below will discuss how to use this data.

The fourth and last function receives a Vuforia distance value (in millimeters) as a parameter and converts it to another unit, specified as a second parameter.

The converted value, in the requested units, is returned to the calling program or function.
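The conversion itself is simple arithmetic: 25.4 millimeters per inch and 10 millimeters per centimeter. This sketch assumes the unit codes `"IN"`, `"CM"` and `"MM"`; the name `convertDistance` and those codes are illustrative and may differ from the Blocks sample.

```java
// Sketch of the fourth function: converting a Vuforia distance (millimeters)
// into other units. The function name and the unit codes "IN", "CM" and "MM"
// are assumptions for this illustration.
class DistanceConversionSketch {

    static final double MM_PER_INCH = 25.4;   // exact by definition
    static final double MM_PER_CM = 10.0;

    static double convertDistance(double millimeters, String units) {
        switch (units) {
            case "IN": return millimeters / MM_PER_INCH;
            case "CM": return millimeters / MM_PER_CM;
            case "MM": return millimeters;
            default: throw new IllegalArgumentException("Unknown units: " + units);
        }
    }

    public static void main(String[] args) {
        System.out.println(convertDistance(254.0, "IN"));  // prints 10.0
        System.out.println(convertDistance(254.0, "CM"));  // prints 25.4
    }
}
```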

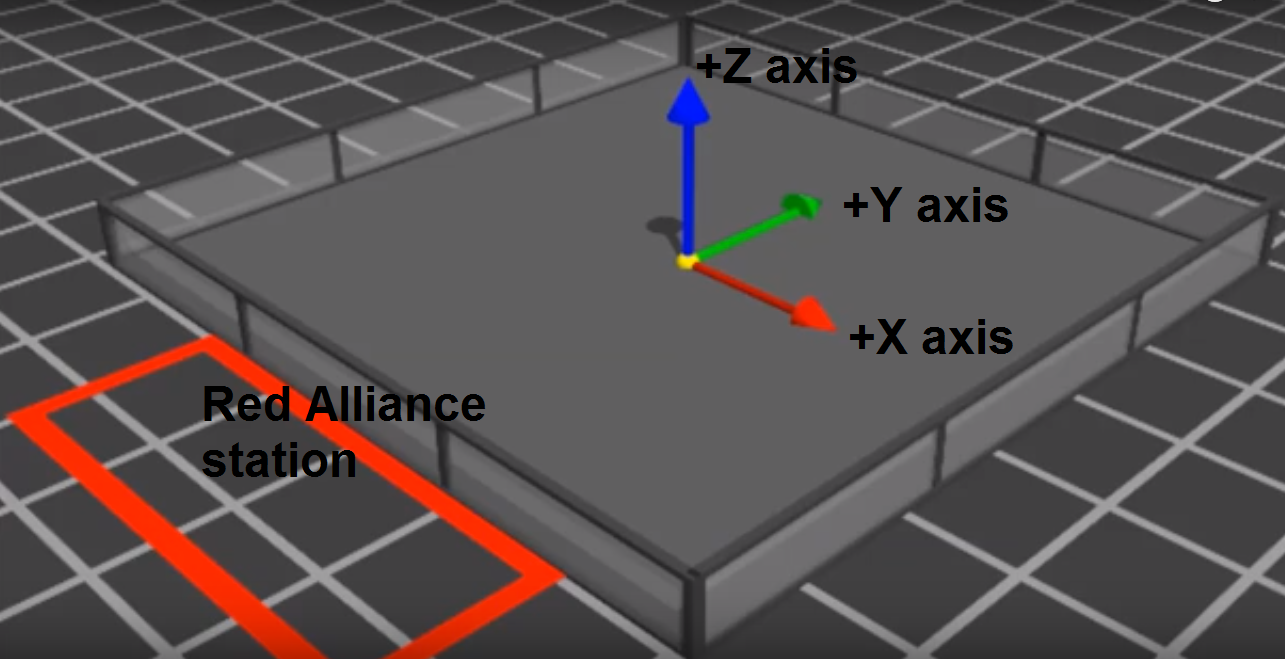

The FTC software’s global frame of reference is defined from the viewpoint of the Red Alliance station. The center of the game field is the origin: X=0, Y=0, Z=0. Positive X extends to the right, and positive Y extends forward, or away. Thus on the SKYSTONE game field, positive X extends to the rear, away from the Audience side, while positive Y extends toward the Blue Alliance station.

Image credit: Phil Malone

Positive Z extends upward from the foam tile surface. Rotation about each axis follows the right-hand rule, namely clockwise when looking in the positive direction. For Z-Angle, imagine floating above the field in the image shown above, looking straight down in a "bird's-eye view" (in the negative Z direction): the positive Z-Angle direction is counterclockwise, with 0 degrees coinciding with the +X axis and 90 degrees coinciding with the +Y axis.
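This convention matches the standard `atan2` function: a robot heading along a field direction (dx, dy) has Z-Angle `atan2(dy, dx)`, which is 0 degrees on +X, 90 degrees on +Y, and grows counterclockwise (turning left, as viewed from above). The sketch also includes a `normalizeDegrees` helper, a common addition that is not part of the sample, for wrapping angles into the range (-180, 180] before comparing headings.

```java
// Sketch of the Z-Angle convention: 0 degrees on +X, 90 degrees on +Y,
// counterclockwise positive when viewed from above. The normalize helper is a
// common extra (not part of the sample) for comparing headings.
class ZAngleSketch {

    /** Z-Angle in degrees of a heading that points along (dx, dy) on the field. */
    static double zAngleDegrees(double dx, double dy) {
        return Math.toDegrees(Math.atan2(dy, dx));   // atan2 result is in [-180, 180]
    }

    /** Wraps any angle into the range (-180, 180]. */
    static double normalizeDegrees(double angle) {
        double a = angle % 360.0;                    // now strictly between -360 and 360
        if (a > 180.0) a -= 360.0;
        if (a <= -180.0) a += 360.0;
        return a;
    }

    public static void main(String[] args) {
        System.out.println(zAngleDegrees(1, 0));     // facing +X
        System.out.println(zAngleDegrees(0, 1));     // facing +Y
        System.out.println(zAngleDegrees(-1, 0));    // facing -X
        System.out.println(normalizeDegrees(270));   // a quarter turn clockwise
    }
}
```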

Try this on an FTC SKYSTONE game field. An RC phone in Portrait mode can be tested loose or mounted on a robot. From a low place inside the perimeter, point the phone’s camera or webcam at a fixed target image (perimeter or Skybridge). Initialize the sample Op Mode, and use the RC video feed to confirm Vuforia’s recognition (3 colored local axes on the picture).

A preview is also available on the DS screen, with the REV Expansion Hub and the REV Control Hub. To see it, choose the main menu’s Camera Stream when the Op Mode has initialized (but not started). Touch to refresh the image as needed, and select Camera Stream again to close the preview and continue the Op Mode. This is described further in the tutorial Using an External Webcam with Control Hub.

Close the DS preview (if used), and press the Start button. The DS phone should show Telemetry with the name of that image, and the estimated location and orientation of the camera.

Carefully move the camera around the field, trying to keep a navigation image in the field of view. Verify whether the X, Y and Z-Angle values respond and seem reasonable. Swiveling the phone from right to left should increase Z-Angle (rotation about Z). Move the camera from one image to another, and observe the results.

Learn and assess the characteristics of this navigation tool. You will notice that after initially recognizing a picture, Vuforia can easily track the full or partial image as the camera moves. You may find that certain zones give better results.

You may also notice that some readings are slightly erratic or ‘jumpy’, like the raw data from many advanced sensors. An FTC programming challenge is to smooth out such data to make it more useful. Available methods include ignoring rogue values, simple averages, moving averages, weighted averages, PID control, and many others. It’s also possible to combine Vuforia results with other sensor data, for a blended source of navigation input.
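As one example of smoothing, a moving average keeps only the most recent readings and reports their mean. This is a minimal sketch; the window size of 3 in `main` is arbitrary, and a larger window gives smoother but slower-responding values.

```java
import java.util.ArrayDeque;
import java.util.Deque;

// Sketch of one smoothing method: a moving average over the last few readings.
// The window size is a tuning choice, not a recommended value.
class MovingAverage {

    private final int windowSize;
    private final Deque<Double> window = new ArrayDeque<>();
    private double sum = 0.0;

    MovingAverage(int windowSize) {
        if (windowSize < 1) throw new IllegalArgumentException("windowSize must be >= 1");
        this.windowSize = windowSize;
    }

    /** Adds a new reading and returns the average of the most recent readings. */
    double add(double reading) {
        window.addLast(reading);
        sum += reading;
        if (window.size() > windowSize) {
            sum -= window.removeFirst();   // drop the oldest reading
        }
        return sum / window.size();
    }

    public static void main(String[] args) {
        MovingAverage smoothX = new MovingAverage(3);
        double[] rawX = {10, 20, 30, 40};  // e.g. jumpy X readings
        for (double x : rawX) {
            System.out.println(smoothX.add(x));
        }
    }
}
```

This prints 10.0, 15.0, 20.0 and 30.0; by the fourth reading, the oldest value (10) has dropped out of the window.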

This is the end of reviewing the sample Op Mode. With the data provided by Vuforia, your competition Op Mode can autonomously navigate on the field. The next section gives a simple example.

For illustration only, consider an extremely simple SKYSTONE navigation task. With the robot near the Blue midline (approximately X = 0) and its front camera directly facing the rear left target image, drive 6 inches towards the image.

The sample Op Mode looped until the DS Stop button was pressed. Now the navigation loop will contain driving, and the loop ends when X reaches 6 inches.

Programming tip: Plan your programs first using plain language, also called pseudocode. This can be done with Blocks, by creating empty Functions. The name of each Function describes the action.

Programming tip: Later, each team member can work on the inner details of a Function. When ready, Functions can be combined using a laptop’s Copy and Paste functions (e.g. Control-C and Control-V).

A simple pseudocode solution might look like this:

To stay very close to the Sample Op Mode described above, the pseudocode might be altered as follows:

This version has the added safety feature of driving forward only if the target is in view.

Lastly, to use exactly the same Functions from the Sample Op Mode:

This code could directly replace the Repeat loop in the Sample Op Mode. This version has the added safety feature of looping only while the Op Mode is active.

Programming tip: action loops should also have safety time-outs. Add another loop condition, while ElapsedTime.Time is less than your time limit. Otherwise your robot could sit idle, or keep driving and crash.

Programming tip: optionally, set a Boolean variable indicating that the loop ended from a time-out, then take action accordingly.
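The navigation task, with the "only if the target is in view" check, the Op Mode active condition, and a time-out flag, might be sketched as below. `Robot` and `SimRobot` are hypothetical: the interface methods stand in for your own drive code, a timer, and Vuforia's visibility and X values (converted to inches), and `SimRobot` is a crude simulation for testing off the field.

```java
// Sketch of the simple navigation task: drive toward the target until Vuforia's
// X reaches the goal, only while the target is visible, with a safety time-out.
// Robot and SimRobot are hypothetical; on a real robot these calls would map to
// your own drive code and Vuforia Blocks or methods.
class DriveToXSketch {

    interface Robot {
        boolean opModeIsActive();
        boolean targetVisible();
        double xInches();          // robot X from Vuforia, converted to inches
        void drive(double power);  // positive power drives toward the target
        long millis();             // elapsed time in milliseconds
    }

    /** Loop outcome, including the optional time-out flag. */
    static final class Outcome {
        final boolean reachedGoal;
        final boolean timedOut;

        Outcome(boolean reachedGoal, boolean timedOut) {
            this.reachedGoal = reachedGoal;
            this.timedOut = timedOut;
        }
    }

    static Outcome driveToX(Robot robot, double goalX, double power, long timeLimitMillis) {
        long start = robot.millis();
        boolean reachedGoal = false;
        boolean timedOut = false;
        while (robot.opModeIsActive()) {
            if (robot.millis() - start >= timeLimitMillis) {
                timedOut = true;           // safety time-out
                break;
            }
            if (!robot.targetVisible()) {
                robot.drive(0);            // drive forward only if the target is in view
                continue;
            }
            if (robot.xInches() >= goalX) {
                reachedGoal = true;        // X has reached the goal
                break;
            }
            robot.drive(power);
        }
        robot.drive(0);                    // always stop after the loop
        return new Outcome(reachedGoal, timedOut);
    }

    /** Crude simulation: each drive call moves the robot power * 0.5 inches. */
    static final class SimRobot implements Robot {
        double x = 0.0;
        long clock = 0;
        final boolean visible;

        SimRobot(boolean visible) { this.visible = visible; }

        public boolean opModeIsActive() { return true; }
        public boolean targetVisible() { return visible; }
        public double xInches() { return x; }
        public void drive(double power) { x += power * 0.5; }
        public long millis() { clock += 20; return clock; }   // 20 ms per reading
    }

    public static void main(String[] args) {
        Outcome seen = driveToX(new SimRobot(true), 6.0, 0.5, 30_000);
        Outcome lost = driveToX(new SimRobot(false), 6.0, 0.5, 1_000);
        System.out.println(seen.reachedGoal + " " + seen.timedOut);   // prints true false
        System.out.println(lost.reachedGoal + " " + lost.timedOut);   // prints false true
    }
}
```

With the target visible, the simulated robot reaches X = 6 inches well before the time limit. With the target never visible, it sits idle until the time-out ends the loop, which is exactly the situation the time-out flag is meant to report.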

This example showed how to extract and use a single number generated by Vuforia, in this case the current X position. It could be used in proportional control, where a larger deviation, or error, is corrected faster. X and Y error could control steering, or forward motion combined with sideways motion (crab drive or strafing). Z-Angle could be used for spins and angled drives.
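A minimal sketch of proportional control on X: the drive power is the error (goal minus current X) times a gain `kP`, clipped to a maximum. The gain 0.1 and limit 0.5 used in `main` are hypothetical tuning values; as the error shrinks near the goal, so does the power, and the robot slows as it approaches.

```java
// Sketch of proportional control on Vuforia's X value: power is proportional to
// the remaining error and clipped to a maximum. The gain kP and the limit are
// hypothetical tuning values, not recommendations.
class ProportionalSketch {

    static double proportionalPower(double goal, double current, double kP, double maxPower) {
        double error = goal - current;                            // larger error -> stronger correction
        double power = kP * error;
        return Math.max(-maxPower, Math.min(maxPower, power));    // clip to +/- maxPower
    }

    public static void main(String[] args) {
        double goalX = 6.0;                                       // inches, as in the example task
        for (double x : new double[] {0.0, 3.0, 5.5, 6.0}) {
            System.out.println("X = " + x + " -> power " + proportionalPower(goalX, x, 0.1, 0.5));
        }
    }
}
```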

Overall, Vuforia allows autonomous navigation to global field locations and orientations, not relying only on the robot’s frame of reference and on-board sensor data. It also allows a robot to recognize and approach a movable image, like a Skystone, with or without TensorFlow Lite.

Questions, comments and corrections to: westsiderobotics@verizon.net

A special Thank You! is in order for Chris Johannesen of Westside Robotics (Los Angeles) for putting together this tutorial. Thanks Chris!