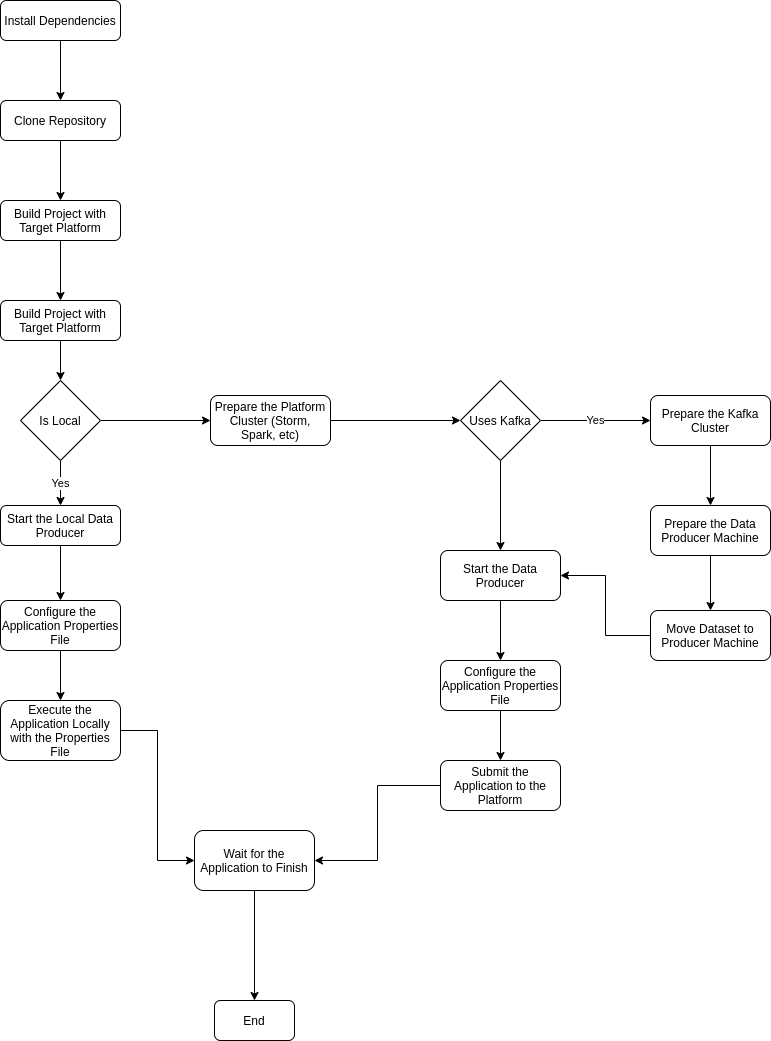

Deployment Flow

To read the dataset from a local directory `<data-dir>` into a Kafka topic, with every file in the directory read line by line, run the following command:

```bash
dspbench-producer/bin/producer.sh start <properties-file> <topic> <partitions> <replicas> <data-dir>
```

`<topic>` is the name of the target topic, which must already exist. `<partitions>` and `<replicas>` must match the number of partitions and replicas defined when the topic was created.
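The producer reads every file in `<data-dir>` line by line. The following is a minimal Python sketch of that iteration, not the producer's actual implementation. It assumes that files are visited in sorted name order, that subdirectories are skipped, and that each line (without its trailing newline) becomes one message; the function name `iter_dataset_lines` is hypothetical.

```python
import os
from typing import Iterator


def iter_dataset_lines(data_dir: str) -> Iterator[str]:
    """Yield every line of every regular file in data_dir (hypothetical helper).

    Assumptions: files are visited in sorted name order, subdirectories are
    skipped, and the trailing newline is stripped from each line.
    """
    for name in sorted(os.listdir(data_dir)):
        path = os.path.join(data_dir, name)
        if not os.path.isfile(path):
            continue  # skip subdirectories and other non-regular entries
        with open(path, encoding="utf-8") as f:
            for line in f:
                # In this sketch, each line would be sent as one Kafka message.
                yield line.rstrip("\n")
```

For example, `for record in iter_dataset_lines("/path/to/data"): print(record)` prints the records in the order this sketch would send them.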

The `<properties-file>` should contain the following properties:

```properties
zk.connect=localhost:2181
serializer.class=kafka.serializer.StringEncoder
producer.type=async
request.required.acks=1
statsd.prefix=streamer.producer
statsd.host=localhost
statsd.port=8125
```
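Before starting the producer, it can help to check that the properties file defines every key listed above. The following Python sketch does that with a deliberately simplified `key=value` parser: it skips blank lines and `#`/`!` comments but, unlike `java.util.Properties`, does not handle escapes, line continuations, or `:` separators. The names `parse_properties`, `missing_keys`, and `KAFKA_REQUIRED_KEYS` are hypothetical.

```python
from typing import Dict, Iterable, List

# Keys listed above for running the producer against Kafka.
KAFKA_REQUIRED_KEYS = [
    "zk.connect",
    "serializer.class",
    "producer.type",
    "request.required.acks",
    "statsd.prefix",
    "statsd.host",
    "statsd.port",
]


def parse_properties(lines: Iterable[str]) -> Dict[str, str]:
    """Parse simple key=value lines (simplified: no escapes or continuations)."""
    props: Dict[str, str] = {}
    for raw in lines:
        line = raw.strip()
        if not line or line.startswith(("#", "!")):
            continue  # skip blank lines and comments
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def missing_keys(props: Dict[str, str], required: List[str]) -> List[str]:
    """Return the required keys that are absent from props, in listed order."""
    return [k for k in required if k not in props]
```

For example, `missing_keys(parse_properties(open(path)), KAFKA_REQUIRED_KEYS)` returns an empty list when every key above is set.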

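StatsD is a lightweight daemon for aggregating metrics; it can be installed locally to receive metrics from the producer. The `statsd.*` properties set the prefix of the reported metric names and the host and port of the StatsD daemon.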
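StatsD clients send metrics as plain-text UDP datagrams, for example a counter in the form `<metric>:<value>|c`. The following Python sketch shows what a counter carrying the configured prefix could look like on the wire; the metric name `messages` is hypothetical, since the producer's actual metric names are not listed here, and the helper names are illustrative.

```python
import socket


def statsd_counter(prefix: str, name: str, value: int = 1) -> bytes:
    """Encode a StatsD counter in the plain-text line protocol: <metric>:<value>|c."""
    return f"{prefix}.{name}:{value}|c".encode("ascii")


def send_counter(host: str, port: int, prefix: str, name: str, value: int = 1) -> None:
    """Send one counter datagram over UDP (fire-and-forget, no reply expected)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(statsd_counter(prefix, name, value), (host, port))
```

With the values above, `send_counter("localhost", 8125, "streamer.producer", "messages")` sends the datagram `streamer.producer.messages:1|c`. Because UDP is connectionless, the call succeeds even if no StatsD daemon is running, which is why a missing daemon does not block a sender.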

The producer can be stopped at any time with the following command:

```bash
dspbench-producer/bin/producer.sh stop <topic>
```

To read the dataset from a local directory `<data-dir>` into a local socket instead, run the following command:

```bash
dspbench-producer/bin/producer.sh start <properties-file> <data-dir>
```

The `<properties-file>` should contain the following properties:

```properties
producer.port=8080
statsd.prefix=streamer.producer
statsd.host=localhost
statsd.port=8125
```

`producer.port` is the port on which the producer creates the socket.
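A consumer then connects to that port to receive the dataset. The following Python sketch assumes that the producer accepts TCP connections on `localhost` at `producer.port` and sends UTF-8 text with one record per line; neither detail is confirmed above, and the function name `read_stream` is hypothetical.

```python
import socket
from typing import Iterator


def read_stream(host: str = "localhost", port: int = 8080) -> Iterator[str]:
    """Connect to the producer's socket and yield one record per line.

    Assumes a TCP socket sending UTF-8 text, newline-terminated, until the
    producer closes the connection.
    """
    with socket.create_connection((host, port)) as conn:
        # makefile() wraps the socket in a buffered text stream for line iteration.
        with conn.makefile("r", encoding="utf-8") as stream:
            for line in stream:
                yield line.rstrip("\n")
```

For example, `for record in read_stream(port=8080): print(record)` prints each record as it arrives and returns once the producer closes the connection.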