RFC: use pairwise summation for sum, cumsum, and cumprod #4039

(We could use the old definitions for |

|

If you do image processing with single precision, this is an enormously big deal. E.g.

    julia> mean(fill(1.5f0, 3000, 4000))
    1.766983f0

(Example chosen for its striking effect. Unfortunately the mean implementation is disjoint from the sum implementation that this patch modifies.)
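The effect in the example can be sketched with a hand-written left-to-right loop, compared against a pairwise split of the same data. This is only an illustration: `naive_sum` and `pairwise_sum` are hypothetical helpers, not the functions touched by the patch, and the block size of 128 is an arbitrary assumption.

```julia
# Hypothetical helper: plain left-to-right accumulation in the element type.
function naive_sum(a)
    s = zero(eltype(a))
    for x in a
        s += x
    end
    return s
end

# Hypothetical helper: pairwise (cascade) summation. Short ranges are summed
# with a plain loop; longer ranges are split in half and the halves are added.
function pairwise_sum(a, lo=firstindex(a), hi=lastindex(a), blocksize=128)
    if hi - lo < blocksize
        s = zero(eltype(a))
        for i in lo:hi
            s += a[i]
        end
        return s
    end
    mid = (lo + hi) >>> 1
    return pairwise_sum(a, lo, mid, blocksize) + pairwise_sum(a, mid + 1, hi, blocksize)
end

a = fill(1.5f0, 3000 * 4000)          # same values as the example, as a flat vector
naive_mean = naive_sum(a) / length(a)
pairwise_mean = pairwise_sum(a) / length(a)
println("naive:    ", naive_mean)
println("pairwise: ", pairwise_mean)
```

In the naive loop, once the running total grows large enough, each added `1.5f0` is rounded up in single precision, so the mean drifts above 1.5. For this particular input the pairwise partial sums all stay exactly representable.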

|

@GunnarFarneback, yes, it is a pain to modify all of the different |

|

Of course, in single precision, the right thing is to just do summation and other accumulation in double precision, rounding back to single precision at the end. |
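A minimal sketch of that approach, assuming a hypothetical helper named `sum_in_double` (not part of the patch): widen each element to `Float64`, accumulate, and round to `Float32` once at the end.

```julia
# Hypothetical helper: accumulate Float32 data in Float64, round once at the end.
function sum_in_double(a::AbstractArray{Float32})
    s = 0.0                      # Float64 accumulator
    for x in a
        s += Float64(x)          # widen before adding
    end
    return Float32(s)            # single rounding back to Float32
end

total = sum_in_double(fill(1.5f0, 3000, 4000))
println(total / (3000 * 4000))
```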

|

To clarify, my example was meant to show the insufficiency of the current state of summing. I considered it obvious that pairwise summation is an effective solution, so I'm all in favor of this patch. That mean and some other functions fail to take advantage of it is a separate issue.

|

The right thing with respect to precision, not necessarily with respect to speed. |

|

I'm good with this as a first step and think we should, as @GunnarFarneback points out, integrate even further so that mean and other stats functions also use pairwise summation. |

…omplex arrays (should use absolute value and return a real number)

|

Updated to use pairwise summation for The variance computation is also more efficient now because (at least when operating on the whole array) it no longer constructs a temporary array of the same size. (Would be even better if Also, I noticed that |

…rted) mapreduce_associative

|

Also added an associative variant of _Question:_ Although |

|

Another approach would be to have a |

That would be a good idea--for instance, https://github.com/pao/Monads.jl/blob/master/src/Monads.jl#L54 relies on the fold direction. |

|

The only sensible associativities to have in Base are left, right, and unspecified. A My suggestion would be that |
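For illustration, current Julia exposes these three choices in Base as `foldl` (left), `foldr` (right), and `reduce` (grouping unspecified). Whether those are the names under discussion here cannot be recovered from the truncated comments, so treat the mapping as an assumption. A non-associative operator makes the difference visible:

```julia
xs = [1, 2, 3, 4]

left  = foldl(-, xs)   # ((1 - 2) - 3) - 4
right = foldr(-, xs)   # 1 - (2 - (3 - 4))

# `reduce` leaves the grouping unspecified, so it is only appropriate for
# associative operations such as integer `+`.
total = reduce(+, xs)

println((left, right, total))
```

Code that depends on a particular fold direction should ask for it explicitly rather than rely on how an unspecified reduction happens to group its operands.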

|

@StefanKarpinski, should I go ahead and merge this? |

|

This is great, and I would love to see this merged. Also, we should probably not export |

|

I'm good with merging this. @JeffBezanson? |

RFC: use pairwise summation for sum, cumsum, and cumprod

|

This is great. I had played with my own variant of this idea, breaking it up into blocks of size |

Stdlib: Pkg
URL: https://github.com/JuliaLang/Pkg.jl.git
Stdlib branch: master
Julia branch: master
Old commit: 7b759d7f0
New commit: d84a1a38b
Julia version: 1.12.0-DEV
Pkg version: 1.12.0
Bump invoked by: @KristofferC
Powered by: [BumpStdlibs.jl](https://github.com/JuliaLang/BumpStdlibs.jl)
Diff: JuliaLang/Pkg.jl@7b759d7...d84a1a3

```
$ git log --oneline 7b759d7f0..d84a1a38b
d84a1a38b Allow use of a url and subdir in [sources] (#4039)
cd75456a8 Fix heading (#4102)
b61066120 rename FORMER_STDLIBS -> UPGRADABLE_STDLIBS (#4070)
814949ed2 Increase version of `StaticArrays` in `why` tests (#4077)
83e13631e Run CI on backport branch too (#4094)
```

Co-authored-by: Dilum Aluthge <dilum@aluthge.com>

* Make a failed extension load throw an error during pre-compilation (#56668)

Co-authored-by: Ian Butterworth <i.r.butterworth@gmail.com>

* Remove arraylist_t from external native code APIs. (#56693)

This makes them usable for external consumers like GPUCompiler.jl.

* fix world_age_at_entry in codegen (#56700)

* Fix typo in `@cmd` docstring (#56664)

I'm not sure what `` `cmd` `` could refer to, but it would make sense to

refer to `` `str` `` in this case. I'm assuming it's a typo.

* deps/pcre: Update to version 10.44 (#56704)

* compile: make more efficient by discarding internal names (#56702)

These are not user-visible, so this makes the compiler faster and more

efficient with no effort on our part, and avoids duplicating the

debug_level parameter.

* Automatically enable JITPROFILING with ITTAPI (#55598)

This helps when profiling remotely since VTune doesn't support

setting environment variables on remote systems.

Will still respect `ENABLE_JITPROFILING=0`.

* Fix string handling in jlchecksum (#56720)

A `TAGGED_RELEASE_BANNER` with spaces such as `Official

https://julialang.org release` produces the error

`/cache/build/builder-amdci4-5/julialang/julia-master/deps/tools/jlchecksum:

66: [: Official: unexpected operator`.

* Clarifying ispunct behavior difference between Julia and C in documentation (#56727)

Fixes #56680. This PR updates the documentation for the ispunct function

in Julia to explicitly note its differing behavior from the similarly

named function in C.

---------

Co-authored-by: Lilith Orion Hafner <lilithhafner@gmail.com>

* [NEWS.md] Add PR numbers and remove some 1.11 changes that accidentally came back. (#56722)

* ircode: cleanup code crud

- support gc running

- don't duplicate field 4

- remove some unused code only previously needed for handling cycles

(which are not valid in IR)

* ircode: small optimization for nearby ssavalue

Since most ssavalue are used just after their def, this gives a small

memory savings on compressed IR (a fraction of a percent).

* Fix scope of hoisted signature-local variables (#56712)

When we declare inner methods, e.g. the `f` in

```
function fs()
    f(lhs::Integer) = 1
    f(lhs::Integer, rhs::(local x=Integer; x)) = 2
    return f
end
```

we must hoist the definition of the (appropriately mangled) generic

function `f` to top-level, including all variables that were used in the

signature definition of `f`. This situation is a bit unique in the

language because it uses inner function scope, but gets executed in

toplevel scope. For example, you're not allowed to use a local of the

inner function in the signature definition:

```
julia> function fs()
           local x=Integer
           f(lhs::Integer, rhs::x) = 2
           return f
       end
ERROR: syntax: local variable x cannot be used in closure declaration
Stacktrace:
 [1] top-level scope
   @ REPL[3]:1
```

In particular, the restriction is signature-local:

```
julia> function fs()
           f(rhs::(local x=Integer; x)) = 1
           f(lhs::Integer, rhs::x) = 2
           return f
       end
ERROR: syntax: local variable x cannot be used in closure declaration
Stacktrace:
 [1] top-level scope
   @ REPL[4]:1
```

There's a special intermediate form `moved-local` that gets generated

for this definition. In c6c3d72d1cbddb3d27e0df0e739bb27dd709a413, this

form stopped getting generated for certain inner methods. I suspect this

happened because of the incorrect assumption that the set of moved

locals is being computed over all signatures, rather than being a

per-signature property.

The result of all of this was that this is one of the few places where

lowering still generated a symbol as the lhs of an assignment for a

global (instead of globalref), because the code that generates the

assignment assumes it's a local, but the later pass doesn't know this.

Because we still retain the code for this from before we started using

globalref consistently, this wasn't generally causing problems, except

possibly leaking a global (or potentially assigning to a global when

this wasn't intended). However, in follow on work, I want to make use of

knowing whether the LHS is a global or local in lowering, so this was

causing me trouble.

Fix all of this by putting back the `moved-local` where it was dropped.

Fixes #56711

* ircode: avoid serializing ssaflags in the common case when they are all zero

When not all-zero, run-length encoding would also probably be great

here for lowered code (before inference).

* Extend `invoke` to accept CodeInstance (#56660)

This is an alternative mechanism to #56650 that largely achieves the

same result, but by hooking into `invoke` rather than a generated

function. They are orthogonal mechanisms, and it's possible we want both.

However, in #56650, both Jameson and Valentin were skeptical of the

generated function signature bottleneck. This PR is sort of a hybrid of

the mechanism in #52964 and what I proposed in

https://github.com/JuliaLang/julia/pull/56650#issuecomment-2493800877.

In particular, this PR:

1. Extends `invoke` to support a CodeInstance in place of its usual

`types` argument.

2. Adds a new `typeinf` optimized generic. The semantics of this

optimized generic allow the compiler to instead call a companion

`typeinf_edge` function, allowing a mid-inference interpreter switch

(like #52964), without being forced through a concrete signature

bottleneck. However, if calling `typeinf_edge` does not work (e.g.

because the compiler version is mismatched), this still has well defined

semantics, you just don't get inference support.

The additional benefit of the `typeinf` optimized generic is that it

lets custom cache owners tell the runtime how to "cure" code instances

that have lost their native code. Currently the runtime only knows how

to do that for `owner == nothing` CodeInstances (by re-running

inference). This extension is not implemented, but the idea is that the

runtime would be permitted to call the `typeinf` optimized generic on

the dead CodeInstance's `owner` and `def` fields to obtain a cured

CodeInstance (or a user-actionable error from the plugin).

This PR includes an implementation of `with_new_compiler` from #56650.

This PR includes just enough compiler support to make the compiler

optimize this to the same code that #56650 produced:

```
julia> @code_typed with_new_compiler(sin, 1.0)
CodeInfo(
1 ─      $(Expr(:foreigncall, :(:jl_get_tls_world_age), UInt64, svec(), 0, :(:ccall)))::UInt64
│   %2 = builtin Core.getfield(args, 1)::Float64
│   %3 = invoke sin(%2::Float64)::Float64
└──      return %3
) => Float64
```

However, the implementation here is extremely incomplete. I'm putting it

up only as a directional sketch to see if people prefer it over #56650.

If so, I would prepare a cleaned up version of this PR that has the

optimized generics as well as the curing support, but not the full

inference integration (which needs a fair bit more work).

* Update references to LTS from v1.6 to v1.10 (#56729)

* lowering: Canonicalize to builtins for global assignment (#56713)

This adjusts lowering to emit `setglobal!` for assignment to globals,

thus making the `=` expr head used only for slots in `CodeInfo` and

entirely absent in `IRCode`. The primary reason for this is just to

reduce the number of special cases that compiler passes have to reason

about. In IRCode, `=` was already essentially equivalent to

`setglobal!`, so there's no good reason not to canonicalize.

Finally, the `=` syntax form for globals already gets recognized

specially to insert `convert` calls to their declared binding type, so

this doesn't impose any additional requirements on lowering to

distinguish local from global assignments. In general, I'd also like to

separate syntax and intermediate forms as much as possible where their

semantics differ, which this accomplishes by just using the builtin.

This change is mostly semantically invisible, except that spliced-in

GlobalRefs now declare their binding because they are indistinguishable

from ordinary assignments at the stage where I inserted the lowering. If

we want to, we can preserve the difference, but it'd be a bit more

annoying for not much benefit, because:

1. The spliced in version was only recently made to work anyway, and

2. The semantics of when exactly bindings are declared is still messy on

master anyway and will get tweaked shortly in further binding partitions

work.

* Actually show glyphs for latex or emoji shortcodes being suggested in the REPL (#54800)

When a user requests a completion for a backslash shortcode, this PR

adds the glyphs for all the suggestions to the output. This makes it

much easier to find the result one is looking for, especially if the

user doesn't know all latex and emoji specifiers by heart.

Before:

<img width="813" alt="image"

src="https://github.com/JuliaLang/julia/assets/22495855/bf651399-85a6-4677-abdc-c66a104e3b89">

After:

<img width="977" alt="image"

src="https://github.com/JuliaLang/julia/assets/22495855/04c53ea2-318f-4888-96eb-0215b49c10f3">

---------

Co-authored-by: Dilum Aluthge <dilum@aluthge.com>

* Update annotated.jl docstrings according to #55741 (#56736)

Annotations now use a NamedTuple

* effects: pack bits better (#56737)

There is no reason to preserve duplicates of the bits for the value

before and after inference, and many of the numbers in the comments had

gotten incorrect. Now we are able to pack all 16 bits of currently

defined bitflags into 20 bits, instead of 25 bits (although either case

still rounds up to 32).

There was also no reason for InferenceState to be mutating of CodeInfo

during execution, so remove that mutation.

* 🤖 [master] Bump the Pkg stdlib from 7b759d7f0 to d84a1a38b (#56743)

Stdlib: Pkg

URL: https://github.com/JuliaLang/Pkg.jl.git

Stdlib branch: master

Julia branch: master

Old commit: 7b759d7f0

New commit: d84a1a38b

Julia version: 1.12.0-DEV

Pkg version: 1.12.0

Bump invoked by: @KristofferC

Powered by:

[BumpStdlibs.jl](https://github.com/JuliaLang/BumpStdlibs.jl)

Diff:

https://github.com/JuliaLang/Pkg.jl/compare/7b759d7f0af56c5ad01f2289bbad71284a556970...d84a1a38b6466fa7400e9ad2874a0ef963a10456

```

$ git log --oneline 7b759d7f0..d84a1a38b

d84a1a38b Allow use of a url and subdir in [sources] (#4039)

cd75456a8 Fix heading (#4102)

b61066120 rename FORMER_STDLIBS -> UPGRADABLE_STDLIBS (#4070)

814949ed2 Increase version of `StaticArrays` in `why` tests (#4077)

83e13631e Run CI on backport branch too (#4094)

```

Co-authored-by: Dilum Aluthge <dilum@aluthge.com>

* Hide IRShow include from Revise (#56756)

Revise in theory wants to re-evaluate this include, but it fails at

doing so, because the include call no longer works after bootstrap. It

happens to work right now on master, because the lowering of

`Compiler.include` happens to hide the include call from Revise, but

that's a Revise bug I'm about to fix. Address this by moving the include

call into the package and using an absolute include if necessary.

* fixup!: JuliaLang/julia#56756 (#56758)

We need to quote it, otherwise it would result in `UndefVarError`.

* Accept more general Integer sizes in reshape (#55521)

This PR generalizes the `reshape` methods to accept `Integer`s instead

of `Int`s, and adds a `_reshape_uncolon` method for `Integer` arguments.

The current `_reshape_uncolon` method that accepts `Int`s is left

unchanged to ensure that the inferred types are not impacted. I've also

tried to ensure that most `Integer` subtypes in `Base` that may be

safely converted to `Int`s pass through that method.

The call sequence would now go like this:

```julia
reshape(A, ::Tuple{Vararg{Union{Integer, Colon}}}) -> reshape(A, ::Tuple{Vararg{Integer}}) -> reshape(A, ::Tuple{Vararg{Int}}) (fallback)
```

This lets packages define `reshape(A::CustomArray, ::Tuple{Integer,

Vararg{Integer}})` without having to implement `_reshape_uncolon` by

themselves (or having to call internal `Base` functions, as in

https://github.com/JuliaArrays/FillArrays.jl/issues/373). `reshape`

calls involving a `Colon` would convert this to an `Integer` in `Base`,

and then pass the `Integer` sizes to the custom method defined in the

package.

This PR does not resolve issues like

https://github.com/JuliaLang/julia/issues/40076 because this still

converts `Integer`s to `Int`s in the actual reshaping step. However,

`BigInt` sizes that may be converted to `Int`s will work now:

```julia
julia> reshape(1:4, big(2), big(2))
2×2 reshape(::UnitRange{Int64}, 2, 2) with eltype Int64:
 1  3
 2  4

julia> reshape(1:4, big(1), :)
1×4 reshape(::UnitRange{Int64}, 1, 4) with eltype Int64:
 1  2  3  4
```

Note that the reshape method with `Integer` sizes explicitly converts

these to `Int`s to avoid self-recursion (as opposed to calling

`to_shape` to carry out the conversion implicitly). In the future, we

may want to decide what to do with types or values that can't be

converted to an `Int`.

---------

Co-authored-by: Neven Sajko <s@purelymail.com>

* drop llvm 16 support (#56751)

Co-authored-by: Valentin Churavy <v.churavy@gmail.com>

* Address some post-commit review from #56660 (#56747)

Some more questions still to be answered, but this should address the

immediate actionable review items.

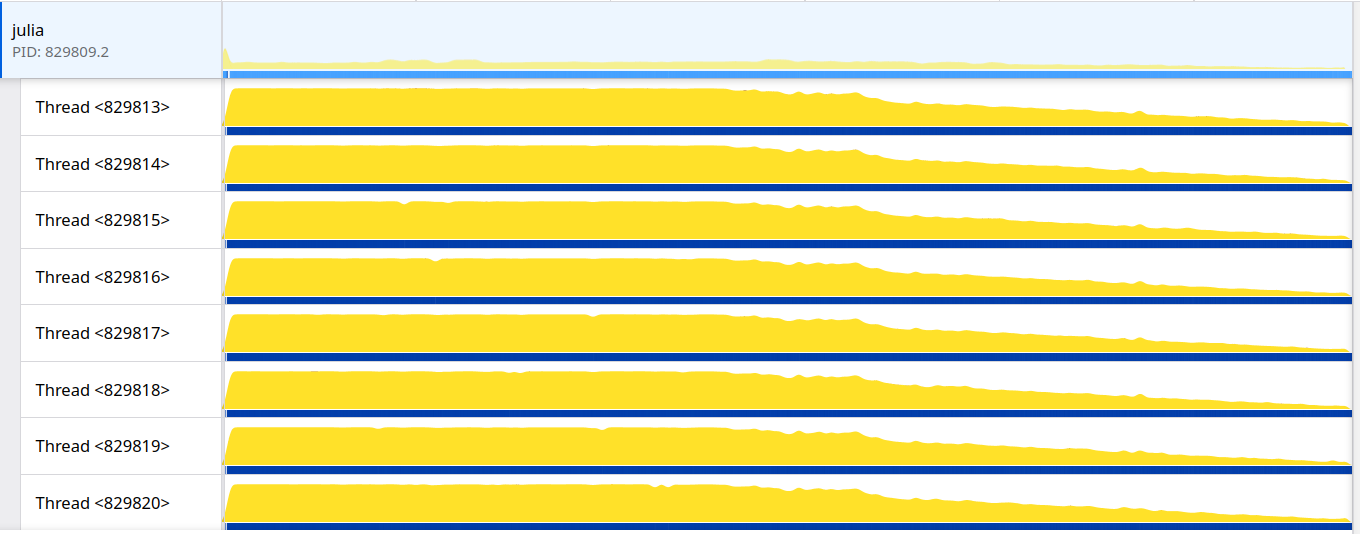

* Add per-task metrics (#56320)

Close https://github.com/JuliaLang/julia/issues/47351 (builds on top of

https://github.com/JuliaLang/julia/pull/48416)

Adds two per-task metrics:

- running time = amount of time the task was actually running (according

to our scheduler). Note: currently inclusive of GC time, but would be

good to be able to separate that out (in a future PR)

- wall time = amount of time between the scheduler becoming aware of

this task and the task entering a terminal state (i.e. done or failed).

We record running time in `wait()`, where the scheduler stops running

the task as well as in `yield(t)`, `yieldto(t)` and `throwto(t)`, which

bypass the scheduler. Other places where a task stops running (for

`Channel`, `ReentrantLock`, `Event`, `Timer` and `Semaphore`) are all

implemented in terms of `wait(Condition)`, which in turn calls `wait()`.

`LibuvStream` similarly calls `wait()`.

This should capture everything (albeit, slightly over-counting task CPU

time by including any enqueuing work done before we hit `wait()`).

The various metrics counters could be a separate inlined struct if we

think that's a useful abstraction, but for now I've just put them

directly in `jl_task_t`. They are all atomic, except the

`metrics_enabled` flag itself (which we now have to check on task

start/switch/done even if metrics are not enabled) which is set on task

construction and marked `const` on the julia side.

In future PRs we could add more per-task metrics, e.g. compilation time,

GC time, allocations, potentially a wait-time breakdown (time waiting on

locks, channels, in the scheduler run queue, etc.), potentially the

number of yields.

Perhaps in future there could be ways to enable this on a per-thread and

per-task basis. And potentially in future these same timings could be

used by `@time` (e.g. writing this same timing data to a ScopedValue

like in https://github.com/JuliaLang/julia/pull/55103 but only for tasks

lexically scoped to inside the `@time` block).

Timings are off by default but can be turned on globally via starting

Julia with `--task-metrics=yes` or calling

`Base.Experimental.task_metrics(true)`. Metrics are collected for all

tasks created when metrics are enabled. In other words,

enabling/disabling timings via `Base.Experimental.task_metrics` does not

affect existing `Task`s, only new `Task`s.

The other new APIs are `Base.Experimental.task_running_time_ns(::Task)`

and `Base.Experimental.task_wall_time_ns(::Task)` for retrieving the new

metrics. These are safe to call on any task (including the current task,

or a task running on another thread). All these are in

`Base.Experimental` to give us room to change up the APIs as we add more

metrics in future PRs (without worrying about release timelines).
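The APIs named above can be exercised roughly as follows. This is a sketch that assumes a Julia build containing this change (1.12.0-DEV or later); the `isdefined` guard is an addition for illustration so that the snippet does nothing on older builds, and the workload is arbitrary.

```julia
# Assumes a Julia build that includes this change; on older builds the
# guard below skips everything.
if isdefined(Base.Experimental, :task_metrics)
    # Only tasks created after this call collect metrics.
    Base.Experimental.task_metrics(true)

    t = Threads.@spawn sum(sqrt(i) for i in 1:10^6)
    wait(t)

    running_ns = Base.Experimental.task_running_time_ns(t)
    wall_ns = Base.Experimental.task_wall_time_ns(t)
    println("running: ", running_ns, " ns, wall: ", wall_ns, " ns")

    # Turn collection back off for tasks created later.
    Base.Experimental.task_metrics(false)
end
```

Because enabling metrics only affects new tasks, the task has to be created after the call to `task_metrics(true)`, as in the sketch.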

cc @NHDaly @kpamnany @d-netto

---------

Co-authored-by: Pete Vilter <pete.vilter@gmail.com>

Co-authored-by: K Pamnany <kpamnany@users.noreply.github.com>

Co-authored-by: Nathan Daly <nathan.daly@relational.ai>

Co-authored-by: Valentin Churavy <vchuravy@users.noreply.github.com>

* Fully outline all GlobalRefs (#56746)

This is an alternative to #56714 that goes in the opposite direction -

just outline all GlobalRefs during lowering. It is a lot simpler than

#56714 at the cost of some size increase. Numbers:

sys.so .ldata size:

This PR: 159.8 MB

Master: 158.9 MB

I don't have numbers for #56714, because it's not fully complete.

Additionally, it's possible that restricting GlobalRefs from arguments

position would let us use a more efficient encoding in the future.

* Add #54800 to NEWS (#56774)

Show glyphs for latex or emoji shortcodes being suggested in the REPL

* Add the actual datatype to the heapsnapshot. This groups objects of the same type together (#56596)

* Fix typos in docstring, comments, and news (#56778)

* Add sort for NTuples (#54494)

This is partially a reland of #46104, but without the controversial `sort(x) = sort!(copymutable(x))` and with some extensibility improvements. Implements #54489.

* precompile: don't waste memory on useless inferred code (#56749)

We never have a reason to reference this data again since we already

have native code generated for it, so it is simply wasting memory and

download space.

$ du -sh {old,new}/usr/share/julia/compiled

256M old

227M new

* various globals cleanups (#56750)

While doing some work on analyzing what code runs at toplevel, I found a

few things to simplify or fix:

- simplify float.jl loop not to call functions just defined to get back

the value just stored there

- add method to the correct checkbounds function (instead of a local)

- missing world push/pop around jl_interpret_toplevel_thunk after #56509

- jl_lineno use maybe missed in #53799

- add some debugging dump for scm_to_julia_ mistakes

* Fix test report alignment (#56789)

* 🤖 [master] Bump the Pkg stdlib from d84a1a38b to e7c37f342 (#56786)

* Bump JuliaSyntax to 0.4.10 (#56110)

* libgit2: update enums from v1.8.0 (#56764)

* fix `exp(weirdNaN)` (#56784)

Fixes https://github.com/JuliaLang/julia/issues/56782

Fix `exp(reinterpret(Float64, 0x7ffbb14880000000))` returning non-NaN

value. This should have minimal performance impact since it's already in

the fallback branch.
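As a brief illustration of what makes this input unusual: the bit pattern is a NaN whose fraction bits differ from Julia's default `NaN`. The sketch below only inspects the value; the result of `exp` depends on whether the build includes this fix, so no output is claimed for it.

```julia
x = reinterpret(Float64, 0x7ffbb14880000000)

# All exponent bits are set and the fraction is nonzero, so this is a NaN.
println(isnan(x))

# `===` compares bits for floats, so a NaN with a different payload is not
# identical to the default `NaN`.
println(x === NaN)
println(string(reinterpret(UInt64, NaN), base=16))   # bits of the default NaN

# On a build that includes this fix, `y` is expected to be NaN.
y = exp(x)
```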

* Add note to Vararg/UnionAll warning about making it an error (#56662)

This warning message

```

WARNING: Wrapping `Vararg` directly in UnionAll is deprecated (wrap the tuple instead).

You may need to write `f(x::Vararg{T})` rather than `f(x::Vararg{<:T})` or `f(x::Vararg{T}) where T` instead of `f(x::Vararg{T} where T)`.

```

(last extended in #49558) seems clear enough if you wrote the code. But

if it's coming from 10 packages deep, there's no information to track

down the origin.

Turns out you can do this with `--depwarn=error`. But the message

doesn't tell you that, and doesn't call itself a deprecation warning at

all.

* Add a note clearifying option parsing (#56285)

To help with #56274

* Fix undefined symbol error in version script (#55363)

lld 17 and above by default error if symbols listed in the version

script are undefined. Julia has a few of these, as some symbols are

defined conditionally in Julia (e.g. based on OS), others perhaps have

been removed from Julia and others seem to be defined in other libraries.

Further the version script is used in linking three times, each time

linking together different objects and so having different symbols

defined.

Adding `-Wl,--undefined-version` is not a great solution as passing that

to ld < 2.40 errors and there doesn't seem to be a great way to check if

a linker supports this flag.

I don't know how to get around these errors for symbols like

`_IO_stdin_used` which Julia doesn't define and it seems to matter

whether or not they are exported, see

https://libc-alpha.sourceware.narkive.com/SevIQmU3/io-stdin-used-stripped-by-version-scripts.

So I've converted all undefined symbols into wildcards to work around

the error.

Fixes #50414, fixes #54533 and replaces #55319.

---------

Co-authored-by: Zentrik <Zentrik@users.noreply.github.com>

* Update repr docstring to hint what it stands for. (#56761)

- Change `repr` docstring from "Create a string from any

value ..." to "Create a string representation of any value ...".

- Document that it typically emits parseable Julia code

---------

Co-authored-by: Ian Butterworth <i.r.butterworth@gmail.com>

* Fix generate_precompile statement grouping & avoid defining new func (#56317)

* remove LinearAlgebra specific bitarray tests since they have moved to the external LinearAlgebra.jl repo (#56800)

Moved in https://github.com/JuliaLang/LinearAlgebra.jl/pull/1148.

* Fix partially_inline for unreachable (#56787)

* codegen: reduce recursion in cfunction generation (#56806)

The regular code-path for this was only missing the age_ok handling, so

add in that handling so we can delete the custom path here for the test

and some of the brokenness that implied.

* gc: simplify sweep_weak_refs logic (#56816)

[NFCI]

* gc: improve mallocarrays locality (#56801)

* Fix codegen not handling invoke exprs with Codeinstances iwith jl_fptr_sparam_addr invoke types. (#56817)

fixes https://github.com/JuliaLang/julia/issues/56739

I didn't succeed in making a test for this. The sole trigger seems to be

```julia
using HMMGradients
T = Float32
A = T[0.0 1.0 0.0; 0.0 0.5 0.5; 1.0 0.0 0.0]
t2tr = Dict{Int,Vector{Int}}[Dict(1 => [2]),]
t2IJ= HMMGradients.t2tr2t2IJ(t2tr)
Nt = length(t2tr)+1
Ns = size(A,1)
y = rand(T,Nt,Ns)
c = rand(Float32, Nt)
beta = backward(Nt,A,c,t2IJ,y)
gamma = posterior(Nt,t2IJ,A,y)
```

in @oscardssmith's memorynew PR.

One other option is to have the builtin handle receiving a CI. That

might make the code cleaner and does handle the case where we receive a

dynamic CI (is that even a thing?).

* 🤖 [master] Bump the SparseArrays stdlib from 1b4933c to 4fd3aad (#56830)

Stdlib: SparseArrays

URL: https://github.com/JuliaSparse/SparseArrays.jl.git

Stdlib branch: main

Julia branch: master

Old commit: 1b4933c

New commit: 4fd3aad

Julia version: 1.12.0-DEV

SparseArrays version: 1.12.0

Bump invoked by: @DilumAluthge

Powered by:

[BumpStdlibs.jl](https://github.com/JuliaLang/BumpStdlibs.jl)

Diff:

https://github.com/JuliaSparse/SparseArrays.jl/compare/1b4933ccc7b1f97427ff88bd7ba58950021f2c60...4fd3aad5735e3b80eefe7b068f3407d7dd0c0924

```

$ git log --oneline 1b4933c..4fd3aad

4fd3aad Generalize `istriu`/`istril` to accept a band index (#590)

780c4de Bump codecov/codecov-action from 4 to 5 (#589)

1beb0e4 Update LICENSE.md (#587)

268d390 QR: handle xtype/dtype returned from LibSuiteSparse that don't match matrix element type (#586)

9731aef get rid of UUID changing stuff (#582)

```

Co-authored-by: Dilum Aluthge <dilum@aluthge.com>

* Missing tests for IdSet (#56258)

Co-authored-by: CY Han <git@wo-class.cn>

* Fix eltype of flatten of tuple with non-2 length (#56802)

In 4c076c80af, eltype of flatten of tuple was improved by computing a

refined eltype at compile time. However, this implementation only worked

for length-2 tuples, and errored for all others.

Generalize this to all tuples.

Closes #56783

---------

Co-authored-by: Neven Sajko <s@purelymail.com>

* Skip or loosen two `errorshow` tests on 32-bit Windows (#56837)

Ref #55900.

* Adding tests for AbstractArrayMath.jl (#56773)

added tests for lines 7, 137-154 (insertdims function) from

base/abstractarraymath.jl

---------

Co-authored-by: Lilith Orion Hafner <lilithhafner@gmail.com>

Co-authored-by: Dilum Aluthge <dilum@aluthge.com>

* 🤖 [master] Bump the Downloads stdlib from 89d3c7d to afd04be (#56826)

Stdlib: Downloads

URL: https://github.com/JuliaLang/Downloads.jl.git

Stdlib branch: master

Julia branch: master

Old commit: 89d3c7d

New commit: afd04be

Julia version: 1.12.0-DEV

Downloads version: 1.6.0 (It's okay that it doesn't match)

Bump invoked by: @DilumAluthge

Powered by:

[BumpStdlibs.jl](https://github.com/JuliaLang/BumpStdlibs.jl)

Diff:

https://github.com/JuliaLang/Downloads.jl/compare/89d3c7dded535a77551e763a437a6d31e4d9bf84...afd04be8aa94204c075c8aec83fca040ebb4ff98

```

$ git log --oneline 89d3c7d..afd04be

afd04be Bump codecov/codecov-action from 4 to 5 (#264)

39036e1 CI: Use Dependabot to automatically update external GitHub Actions (#263)

78e7c7c Bump CI actions versions (#252)

```

Co-authored-by: Dilum Aluthge <dilum@aluthge.com>

* 🤖 [master] Bump the SHA stdlib from aaf2df6 to 8fa221d (#56834)

Stdlib: SHA

URL: https://github.com/JuliaCrypto/SHA.jl.git

Stdlib branch: master

Julia branch: master

Old commit: aaf2df6

New commit: 8fa221d

Julia version: 1.12.0-DEV

SHA version: 0.7.0 (Does not match)

Bump invoked by: @inkydragon

Powered by:

[BumpStdlibs.jl](https://github.com/JuliaLang/BumpStdlibs.jl)

Diff:

https://github.com/JuliaCrypto/SHA.jl/compare/aaf2df61ff8c3898196587a375d3cf213bd40b41...8fa221ddc8f3b418d9929084f1644f4c32c9a27e

```

$ git log --oneline aaf2df6..8fa221d

8fa221d ci: update doctest config (#120)

346b359 ci: Update ci config (#115)

aba9014 Fix type mismatch for `shake/digest!` and setup x86 ci (#117)

0b76d04 Merge pull request #114 from JuliaCrypto/dependabot/github_actions/codecov/codecov-action-5

5094d9d Update .github/workflows/CI.yml

45596b1 Bump codecov/codecov-action from 4 to 5

230ab51 test: remove outdate tests (#113)

7f25aa8 rm: Duplicated const alias (#111)

aa72f73 [SHA3] Fix padding special-case (#108)

3a01401 Delete Manifest.toml (#109)

da351bb Remvoe all getproperty funcs (#99)

4eee84f Bump codecov/codecov-action from 3 to 4 (#104)

15f7dbc Bump codecov/codecov-action from 1 to 3 (#102)

860e6b9 Bump actions/checkout from 2 to 4 (#103)

8e5f0ea Add dependabot to auto update github actions (#100)

4ab324c Merge pull request #98 from fork4jl/sha512-t

a658829 SHA-512: add ref to NIST standard

11a4c73 Apply suggestions from code review

969f867 Merge pull request #97 from fingolfin/mh/Vector

b1401fb SHA-512: add NIST test

4d7091b SHA-512: add to docs

09fef9a SHA-512: test SHA-512/224, SHA-512/256

7201b74 SHA-512: impl SHA-512/224, SHA-512/256

4ab85ad Array -> Vector

8ef91b6 fixed bug in padding for shake, addes testcases for full code coverage (#95)

88e1c83 Remove non-existent property (#75)

068f85d shake128,shake256: fixed typo in export declarations (#93)

176baaa SHA3 xof shake128 and shake256 (#92)

e1af7dd Hardcode doc edit backlink

```

Co-authored-by: Dilum Aluthge <dilum@aluthge.com>

* 🤖 [master] Bump the StyledStrings stdlib from 056e843 to 8985a37 (#56832)

Stdlib: StyledStrings

URL: https://github.com/JuliaLang/StyledStrings.jl.git

Stdlib branch: main

Julia branch: master

Old commit: 056e843

New commit: 8985a37

Julia version: 1.12.0-DEV

StyledStrings version: 1.11.0 (Does not match)

Bump invoked by: @DilumAluthge

Powered by:

[BumpStdlibs.jl](https://github.com/JuliaLang/BumpStdlibs.jl)

Diff:

https://github.com/JuliaLang/StyledStrings.jl/compare/056e843b2d428bb9735b03af0cff97e738ac7e14...8985a37ac054c37d084a03ad2837208244824877

```

$ git log --oneline 056e843..8985a37

8985a37 Fix interpolation edge case dropping annotations

729f56c Add typeasserts to `convert(::Type{Face}, ::Dict)`

```

Co-authored-by: Dilum Aluthge <dilum@aluthge.com>

* xref `UnionAll` in the doc string of `where` (#56411)

* 🤖 [master] Bump the ArgTools stdlib from 997089b to 1314758 (#56839)

Stdlib: ArgTools

URL: https://github.com/JuliaIO/ArgTools.jl.git

Stdlib branch: master

Julia branch: master

Old commit: 997089b

New commit: 1314758

Julia version: 1.12.0-DEV

ArgTools version: 1.1.2(Does not match)

Bump invoked by: @DilumAluthge

Powered by:

[BumpStdlibs.jl](https://github.com/JuliaLang/BumpStdlibs.jl)

Diff:

https://github.com/JuliaIO/ArgTools.jl/compare/997089b9cd56404b40ff766759662e16dc1aab4b...1314758ad02ff5e9e5ca718920c6c633b467a84a

```

$ git log --oneline 997089b..1314758

1314758 build(deps): bump codecov/codecov-action from 4 to 5; also, use CODECOV_TOKEN (#40)

68bb888 Fix typo in `arg_write` docstring (#39)

5d56027 build(deps): bump julia-actions/setup-julia from 1 to 2 (#38)

b6189c7 build(deps): bump codecov/codecov-action from 3 to 4 (#37)

```

Co-authored-by: Dilum Aluthge <dilum@aluthge.com>

* 🤖 [master] Bump the Statistics stdlib from 68869af to d49c2bf (#56831)

Stdlib: Statistics

URL: https://github.com/JuliaStats/Statistics.jl.git

Stdlib branch: master

Julia branch: master

Old commit: 68869af

New commit: d49c2bf

Julia version: 1.12.0-DEV

Statistics version: 1.11.2(Does not match)

Bump invoked by: @DilumAluthge

Powered by:

[BumpStdlibs.jl](https://github.com/JuliaLang/BumpStdlibs.jl)

Diff:

https://github.com/JuliaStats/Statistics.jl/compare/68869af06e8cdeb7aba1d5259de602da7328057f...d49c2bf4f81e1efb4980a35fe39c815ef8396297

```

$ git log --oneline 68869af..d49c2bf

d49c2bf Merge pull request #178 from JuliaStats/dw/ci

d10d6a3 Update Project.toml

1b67c17 Merge pull request #168 from JuliaStats/andreasnoack-patch-2

c3721ed Add a coverage badge

8086523 Test earliest supported Julia version and prereleases

12a1976 Update codecov in ci.yml

2caf0eb Merge pull request #177 from JuliaStats/ViralBShah-patch-1

33e6e8b Update ci.yml to use julia-actions/cache

a399c19 Merge pull request #176 from JuliaStats/dependabot/github_actions/julia-actions/setup-julia-2

6b8d58a Merge branch 'master' into dependabot/github_actions/julia-actions/setup-julia-2

c2fb201 Merge pull request #175 from JuliaStats/dependabot/github_actions/actions/cache-4

8f808e4 Merge pull request #174 from JuliaStats/dependabot/github_actions/codecov/codecov-action-4

7f82133 Merge pull request #173 from JuliaStats/dependabot/github_actions/actions/checkout-4

046fb6f Update ci.yml

c0fc336 Bump julia-actions/setup-julia from 1 to 2

a95a57a Bump actions/cache from 1 to 4

b675501 Bump codecov/codecov-action from 1 to 4

0088c49 Bump actions/checkout from 2 to 4

ad95c08 Create dependabot.yml

40275e2 Merge pull request #167 from JuliaStats/andreasnoack-patch-1

fa5592a Merge pull request #170 from mbauman/patch-1

cf57562 Add more tests of mean and median of ranges

128dc11 Merge pull request #169 from stevengj/patch-1

48d7a02 docfix: abs2, not ^2

2ac5bec correct std docs: sqrt is elementwise

39f6332 Merge pull request #96 from josemanuel22/mean_may_return_incorrect_results

db3682b Merge branch 'master' into mean_may_return_incorrect_results

9e96507 Update src/Statistics.jl

58e5986 Test prereleases

6e76739 Implement one-argument cov2cor!

b8fee00 Stop testing on nightly

9addbb8 Merge pull request #162 from caleb-allen/patch-1

6e3d223 Merge pull request #164 from aplavin/patch-1

71ebe28 Merge pull request #166 from JuliaStats/dw/cov_cor_optimization

517afa6 add tests

aa0f549 Optimize `cov` and `cor` with identical arguments

cc11ea9 propagate NaN value in median

cf7040f Use non-mobile Wikipedia urls

547bf4d adding docu to mean! explain target should not alias with the source

296650a adding docu to mean! explain target should not alias with the source

```

Co-authored-by: Dilum Aluthge <dilum@aluthge.com>

* inference: don't allocate `TryCatchFrame` for `compute_trycatch(::IRCode)` (#56835)

`TryCatchFrame` is only required for the abstract interpretation and is

not necessary in `compute_trycatch` within slot2ssa.jl.

@nanosoldier `runbenchmarks("inference", vs=":master")`

* 🤖 [master] Bump the LazyArtifacts stdlib from e9a3633 to a719c0e (#56827)

Stdlib: LazyArtifacts

URL: https://github.com/JuliaPackaging/LazyArtifacts.jl.git

Stdlib branch: main

Julia branch: master

Old commit: e9a3633

New commit: a719c0e

Julia version: 1.12.0-DEV

LazyArtifacts version: 1.11.0(Does not match)

Bump invoked by: @DilumAluthge

Powered by:

[BumpStdlibs.jl](https://github.com/JuliaLang/BumpStdlibs.jl)

Diff:

https://github.com/JuliaPackaging/LazyArtifacts.jl/compare/e9a36338d5d0dfa4b222f4e11b446cbb7ea5836c...a719c0e3d68a95c6f3dc9571459428ca8761fa2c

```

$ git log --oneline e9a3633..a719c0e

a719c0e Add compat for LazyArtifacts

```

Co-authored-by: Dilum Aluthge <dilum@aluthge.com>

* make `memorynew` intrinsic (#56803)

Attempt to split up https://github.com/JuliaLang/julia/pull/55913 into 2

pieces. This piece now only adds the `memorynew` intrinsic without any

of the optimizations enabled by

https://github.com/JuliaLang/julia/pull/55913. As such, this PR should

be ready to merge now. (and will make

https://github.com/JuliaLang/julia/pull/55913 smaller and simpler)

---------

Co-authored-by: gbaraldi <baraldigabriel@gmail.com>

* fix `compute_trycatch` for `IRCode` /w `EnterNode` with `catch_dest==0` (#56846)

* EA: general cleanup (#56848)

The handling of `Array` in EA was implemented before `Memory` was

introduced and has now become stale. Analysis for `Array` should be

reintroduced after the upcoming overhaul is completed. For now, I will

remove the existing stale code.

* 🤖 [master] Bump the Distributed stdlib from 6c7cdb5 to c613685 (#56825)

Stdlib: Distributed

URL: https://github.com/JuliaLang/Distributed.jl

Stdlib branch: master

Julia branch: master

Old commit: 6c7cdb5

New commit: c613685

Julia version: 1.12.0-DEV

Distributed version: 1.11.0(Does not match)

Bump invoked by: @DilumAluthge

Powered by:

[BumpStdlibs.jl](https://github.com/JuliaLang/BumpStdlibs.jl)

Diff:

https://github.com/JuliaLang/Distributed.jl/compare/6c7cdb5860fa5cb9ca191ce9c52a3d25a9ab3781...c6136853451677f1957bec20ecce13419cde3a12

```

$ git log --oneline 6c7cdb5..c613685

c613685 Merge pull request #116 from JuliaLang/ci-caching

20e2ce7 Use julia-actions/cache in CI

9c5d73a Merge pull request #112 from JuliaLang/dependabot/github_actions/codecov/codecov-action-5

ed12496 Merge pull request #107 from JamesWrigley/remotechannel-empty

010828a Update .github/workflows/ci.yml

11451a8 Bump codecov/codecov-action from 4 to 5

8b5983b Merge branch 'master' into remotechannel-empty

729ba6a Fix docstring of `@everywhere` (#110)

af89e6c Adding better docs to exeflags kwarg (#108)

8537424 Implement Base.isempty(::RemoteChannel)

6a0383b Add a wait(::[Abstract]WorkerPool) (#106)

1cd2677 Bump codecov/codecov-action from 1 to 4 (#96)

cde4078 Bump actions/cache from 1 to 4 (#98)

6c8245a Bump julia-actions/setup-julia from 1 to 2 (#97)

1ffaac8 Bump actions/checkout from 2 to 4 (#99)

8e3f849 Fix RemoteChannel iterator interface (#100)

f4aaf1b Fix markdown errors in README.md (#95)

2017da9 Merge pull request #103 from JuliaLang/sf/sigquit_instead

07389dd Use `SIGQUIT` instead of `SIGTERM`

```

Co-authored-by: Dilum Aluthge <dilum@aluthge.com>

* inference: handle cases where `:the_exception` is used independently (#56836)

`Expr(:the_exception)` is handled by the interpreter in all cases,

however, inference assumes it is only used within frames containing

`try/catch`. This commit corrects that assumption.

* precompilepkgs: release parallel limiter when waiting for another process (#56844)

* Tests for two AbstractString methods (#56247)

Co-authored-by: Chengyu Han <cyhan.dev@outlook.com>

* Tests for annotated string/char c-tors (#56513)

Co-authored-by: Chengyu Han <cyhan.dev@outlook.com>

* docs: fix example for `sort` and add new doctest (#56843)

* jitlayers: re-delete deregisterEHFrames impl (#56854)

Fixes https://github.com/maleadt/LLVM.jl/issues/496

* docs: fix edge case in rational number conversion `float(a//b)` (#56772)

Fixes #56726. Added the changes that were suggested, fixing the mistake.

---------

Co-authored-by: Max Horn <max@quendi.de>

Co-authored-by: Chengyu Han <git@wo-class.cn>

* docs: fix scope type of a `struct` to hard (#56755)

Is struct not a hard scope?

```jl

julia> b = 1
       struct Tester
           tester
           Tester(tester) = new(tester)
           b = 2
           Tester() = new(b)
       end
       b
1

```

* dict docs: document that ordering of keys/values/pairs match iterate (#56842)

Fix #56841.

Currently the documentation states that keys(dict) and values(dict)

iterate in the same order. But it is not stated whether this is the same

order as that used by pairs(dict), or when looping, for (k,v) in dict.

This PR makes this guarantee explicit.
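A minimal sketch of what the documented guarantee means in practice. The dictionary contents are made up for illustration; the checks rely only on `keys`, `values`, `pairs`, and plain iteration.

```julia
d = Dict("a" => 1, "b" => 2, "c" => 3)

# Order seen by a plain `for (k, v) in d` loop.
iterated = [(k, v) for (k, v) in d]

# keys, values, and pairs are expected to follow that same order.
@assert collect(keys(d)) == first.(iterated)
@assert collect(values(d)) == last.(iterated)
@assert collect(pairs(d)) == [k => v for (k, v) in iterated]
```

The order itself is still unspecified for `Dict`; the guarantee is only that the four views agree with each other.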

* fix abstract_eval_cfunction mistakes (#56856)

Noticed while reading the code that 35e4a1f9689f4b98f301884e0683e4f07db7514b

simplified this incorrectly resulting in all arguments being assigned to

the function type, and then 7f8635f11cae5f3f592afcc7b55c8e0e23589c3d

further broke the return type expected for the failure case.

Co-authored-by: Shuhei Kadowaki <40514306+aviatesk@users.noreply.github.com>

* inference: use `ssa_def_slot` for `typeassert` refinement (#56859)

Allows type refinement in the following kind of case:

```julia

julia> @test Base.infer_return_type((Vector{Any},)) do args
           codeinst = first(args)
           if codeinst isa Core.MethodInstance
               mi = codeinst
           else
               codeinst::Core.CodeInstance
               mi = codeinst.def
           end
           return mi
       end == Core.MethodInstance
Test Passed

```

* EA: use embedded `CodeInstance` directly for escape cache lookup (#56860)

* Compiler.jl: use `Base.[all|any]` instead of `Compiler`'s own versions (#56851)

The current `Compiler` defines its own versions of `all` and `any`,

which are separate generic functions from `Base.[all|any]`:

https://github.com/JuliaLang/julia/blob/2ed1a411e0a080f3107e75bb65105a15a0533a90/Compiler/src/utilities.jl#L15-L32

On the other hand, at the point where `Base.Compiler` is bootstrapped,

only a subset of `Base.[all|any]` are defined, specifically those

related to `Tuple`:

https://github.com/JuliaLang/julia/blob/2ed1a411e0a080f3107e75bb65105a15a0533a90/base/tuple.jl#L657-L668.

Consequently, in the type inference world, functions like

`Base.all(::Generator)` are unavailable. If `Base.Compiler` attempts to

perform operations such as `::BitSet ⊆ ::BitSet` (which internally uses

`Base.[all|any]`), a world age error occurs (while `Compiler.[all|any]`

can handle these operations, `::BitSet ⊆ ::BitSet` uses

`Base.[all|any]`, leading to this issue).

To resolve this problem, this commit removes the custom `Compiler`

versions of `[all|any]` and switches to using the Base versions.

One concern is that the previous `Compiler` versions of `[all|any]`

utilized `@nospecialize`. That annotation was introduced a long time ago

to prevent over-specialization, but it is questionable whether it is

still effective with the current compiler implementation. The results of

the nanosoldier benchmarks conducted below also seem to confirm that

the `@nospecialize`s are no longer necessary for those functions.

* Extend `Base.rationalize` instead of defining new function (#56793)

#55886 accidentally created a new function

`Base.MathConstants.rationalize` instead of extending

`Base.rationalize`, which is the reason why `Base.rationalize(Int, π)`

isn’t constant-folded in Julia 1.10 and 1.11:

```

julia> @btime rationalize(Int,π);

1.837 ns (0 allocations: 0 bytes) # v1.9: constant-folded

88.416 μs (412 allocations: 15.00 KiB) # v1.10: not constant-folded

```

This PR fixes that. It should probably be backported to 1.10 and 1.11.
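The difference between creating a new function and extending an existing one can be sketched as follows. The modules `Shadowing` and `Extending` and the type `Half` are hypothetical and exist only for this illustration; they are not the code touched by the PR.

```julia
module Shadowing
    struct Half end
    # No `Base.` prefix and no `import`: this creates a new function
    # `Shadowing.rationalize` that is unrelated to `Base.rationalize`.
    rationalize(::Half) = 1 // 2
end

module Extending
    struct Half end
    # Qualified name: this adds a method to the existing `Base.rationalize`.
    Base.rationalize(::Half) = 1 // 2
end

@assert Shadowing.rationalize !== Base.rationalize
@assert rationalize(Extending.Half()) == 1 // 2
@assert !hasmethod(rationalize, Tuple{Shadowing.Half})
```

Callers of `Base.rationalize` never see the method defined in `Shadowing`, which is the kind of mismatch the PR describes.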

* 🤖 [master] Bump the LinearAlgebra stdlib from 56d561c to 1137b4c (#56828)

Stdlib: LinearAlgebra

URL: https://github.com/JuliaLang/LinearAlgebra.jl.git

Stdlib branch: master

Julia branch: master

Old commit: 56d561c

New commit: 1137b4c

Julia version: 1.12.0-DEV

LinearAlgebra version: 1.11.0(Does not match)

Bump invoked by: @DilumAluthge

Powered by:

[BumpStdlibs.jl](https://github.com/JuliaLang/BumpStdlibs.jl)

Diff:

https://github.com/JuliaLang/LinearAlgebra.jl/compare/56d561c22e1ab8e0421160edbdd42f3f194ecfa8...1137b4c7fa8297cef17c4ae0982d7d89d4ab7dd8

```

$ git log --oneline 56d561c..1137b4c

1137b4c Port structured opnorm changes from main julia PR (#1138)

ade3654 port bitarray tests to LinearAlgebra.jl (#1148)

130b94a Fix documentation bug in QR docstring (#1145)

128518e setup coverage (#1144)

15f7e32 ci: add linux-i686 (#1142)

b6f87af Fallback `newindex` method with a `BandIndex` (#1143)

d1e267f bring back [l/r]mul! shortcuts for (kn)own triangular types (#1137)

5cdeb46 don't refer to internal variable names in gemv exceptions (#1141)

4a3dbf8 un-revert "Simplify some views of Adjoint matrices" (#1122)

7b34d81 [CI] Install DependaBot (#1115)

f567112 Port structured opnorm changes from main julia PR Originally written by mcognetta

d406524 implements a `rank(::SVD)` method and adds unit tests. fixes #1126 (#1127)

f13f940 faster implementation of rank(::QRPivoted) fixes #1128 (#1129)

8ab7e09 Merge pull request #1132 from JuliaLang/jishnub/qr_ldiv_R_cache

85919e6 Merge pull request #1108 from JuliaLang/jishnub/tri_muldiv_stride

195e678 Cache and reuse `R` in adjoint `QR` `ldiv!`

cd0da66 Update comments LAPACK -> BLAS

b3ec55f Fix argument name in stride

d3a9a3e Non-contiguous matrices in triangular mul and div

aecb714 Reduce number of test combinations in test/triangular.jl (#1123)

62e45d1 Update .github/dependabot.yml

ff78c38 use an explicit file extension when creating sysimage (#1119)

43b541e try use updated windows compilers in PackageCompiler (#1120)

c00cb77 [CI] Install DependaBot

b285b1c Update LICENSE (#1113)

7efc3ba remove REPL from custom sysimage (#1112)

b7f82ec add a README

```

Co-authored-by: Dilum Aluthge <dilum@aluthge.com>

* Add ABIOverride type for def field (#56555)

Together with #54899, this PR is intending to replicate the

functionality of #54373, which allowed particular specializations to

have a different ABI signature than what would be suggested by the

MethodInstance's `specTypes` field. This PR handles that by adding a

special `ABIOverride` type, which, when placed in the `owner` field of

a `CodeInstance` instructs the system to use the given signature

instead.

* EAUtils: perform `code_escapes` with a global cache by default (#56868)

In JuliaLang/julia#56860, `EAUtils.EscapeAnalyzer` was updated to create

a new cache for each invocation of `code_escapes`, similar to how

Cthulhu.jl behaves. However, `code_escapes` is often used for

performance analysis like `@benchmark code_escapes(...)`, and in such

cases, a large number of `CodeInstance`s can be generated for each

invocation. This could potentially impact native code execution.

So this commit changes the default behavior so that `code_escapes` uses

the same pre-existing cache by default. We can still opt-in to perform a

fresh analysis by specifying

`cache_token=EAUtils.EscapeAnalyzerCacheToken()`.

* Small ABIOverride follow up and add basic test (#56877)

Just a basic `invoke` test for now. There's various other code paths

that should also be tested with ABI overrides, but this gives us the

basic framework and more tests can be added as needed.

* Package docstring: more peaceful README introduction (#56798)

Hello! 👋

#39093 is great! This PR styles the banner ("Displaying contents of

readme found at ...") differently:

Before:

```

help?> DifferentialEquations

search: DifferentialEquations

No docstring found for module DifferentialEquations.

Exported names

≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡

@derivatives, @ode_def, @ode_def_all, @ode_def_bare,

... (manually truncated)

Displaying contents of readme found at /Users/ian/.julia/packages/DifferentialEquations/HSWeG/README.md

≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡

DifferentialEquations.jl

≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡

(Image: Join the chat at https://gitter.im/JuliaDiffEq/Lobby) (https://gitter.im/JuliaDiffEq/Lobby?utm_source=badge&utm_medium=badge&utm_campaign=pr-badge&utm_content=badge) (Image:

Build Status) (https://github.com/SciML/DifferentialEquations.jl/actions?query=workflow%3ACI) (Image: Stable) (http://diffeq.sciml.ai/stable/) (Image: Dev) (http://diffeq.sciml.ai/dev/)

(Image: DOI) (https://zenodo.org/badge/latestdoi/58516043)

This is a suite for numerically solving differential equations written in Julia and available for use in Julia, Python, and R. The purpose of this package is to supply efficient Julia

implementations of solvers for various differential equations. Equations within the realm of this package include:

• Discrete equations (function maps, discrete stochastic (Gillespie/Markov) simulations)

• Ordinary differential equations (ODEs)

```

After:

```

help?> DifferentialEquations

search: DifferentialEquations

No docstring found for module DifferentialEquations.

Exported names

≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡

@derivatives, @ode_def, @ode_def_all, @ode_def_bare,

... (manually truncated)

────────────────────────────────────────────────────────────────────────────

Package description from README.md:

DifferentialEquations.jl

≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡≡

(Image: Join the chat at https://gitter.im/JuliaDiffEq/Lobby) (https://gitter.im/JuliaDiffEq/Lobby?utm_source=badge&utm_medium=badge&utm_campaign=pr-badge&utm_content=badge) (Image:

Build Status) (https://github.com/SciML/DifferentialEquations.jl/actions?query=workflow%3ACI) (Image: Stable) (http://diffeq.sciml.ai/stable/) (Image: Dev) (http://diffeq.sciml.ai/dev/)

(Image: DOI) (https://zenodo.org/badge/latestdoi/58516043)

This is a suite for numerically solving differential equations written in Julia and available for use in Julia, Python, and R. The purpose of this package is to supply efficient Julia

implementations of solvers for various differential equations. Equations within the realm of this package include:

• Discrete equations (function maps, discrete stochastic (Gillespie/Markov) simulations)

• Ordinary differential equations (ODEs)

```

I think this makes it a bit less technical (the word "Displaying" and

the long filename), and it improves the reading flow.

* Support indexing `Broadcasted` objects using `Integer`s (#56470)

This adds support for `IndexLinear` `eachindex`, as well as

bounds-checking and `getindex` for a `Broadcasted` with an `Integer`

index.

Instead of using the number of dimensions in `eachindex` to determine

whether to use `CartesianIndices`, we may use the `IndexStyle`. This

should not change anything currently, but this adds the possibility of

`nD` broadcasting using linear indexing if the `IndexStyle` of the

`Broadcasted` object is `IndexLinear`.

After this,

```julia

julia> bc = Broadcast.broadcasted(+, reshape(1:4, 2, 2), 1:2)

Base.Broadcast.Broadcasted(+, ([1 3; 2 4], 1:2))

julia> eachindex(bc)

CartesianIndices((2, 2))

julia> eachindex(IndexLinear(), bc)

Base.OneTo(4)

julia> [bc[I] for I in eachindex(IndexLinear(), bc)]

4-element Vector{Int64}:

2

4

4

6

julia> vec(collect(bc))

4-element Vector{Int64}:

2

4

4

6

```

This PR doesn't add true linear indexing support for `IndexCartesian`

`Broadcasted` objects. In such cases, an `Integer` index is converted to

a `CartesianIndex` before it is used in indexing.
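As a rough illustration of that conversion, using the 2×2 example above: `CartesianIndices` maps a linear index to its Cartesian counterpart in column-major order. This is a sketch of the idea and not the internal code path of the PR.

```julia
bc = Broadcast.broadcasted(+, reshape(1:4, 2, 2), 1:2)

# Cartesian view of the 2×2 index space.
ci = CartesianIndices((2, 2))

# Column-major order: linear index 3 is row 1, column 2.
@assert ci[3] == CartesianIndex(1, 2)

# Indexing with the converted index gives 3 + 1.
@assert bc[ci[3]] == 4
```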

* Limit the scope of `@inbounds` in `searchsorted*` (#56882)

This removes bounds-checking only in the indexing operation, instead of

for the entire block.
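A sketch of the pattern, assuming a simplified binary search. The function `first_not_less` is hypothetical and is not the `Base` implementation; it only shows `@inbounds` wrapped around the single indexing expression, so the comparison and the rest of the loop keep their normal checks.

```julia
# Returns the first index whose element is not less than `x`
# (or `lastindex(v) + 1` if there is none). `v` must be sorted.
function first_not_less(v::AbstractVector, x)
    lo, hi = firstindex(v) - 1, lastindex(v) + 1
    while lo < hi - 1
        m = lo + ((hi - lo) >>> 1)
        # Only the indexing skips bounds checks; `m` is always strictly
        # between `lo` and `hi`, so it is a valid index.
        if (@inbounds v[m]) < x
            lo = m
        else
            hi = m
        end
    end
    return hi
end
```

Keeping the annotation this narrow means a user-defined `<` or element type called inside the loop does not silently inherit the bounds-check elision.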

* Remove trailing comma in 0-dim `reshape` summary (#56853)

Currently, there is an extra comma in displaying the summary for a 0-dim

`ReshapedArray`:

```julia

julia> reshape(1:1)

0-dimensional reshape(::UnitRange{Int64}, ) with eltype Int64:

1

```

This PR only prints the comma if `dims` isn't empty, so that we now

obtain

```julia

julia> reshape(1:1)

0-dimensional reshape(::UnitRange{Int64}) with eltype Int64:

1

```

* Switch debug registry mapping to `CodeInstance` (#56878)

Currently our debugging code maps from pointers to `MethodInstance`.

However, I think it makes more sense to map to `CodeInstance` instead,

since that's what we're actually compiling and as we're starting to make

more use of external owners and custom specializations, we'll want to be

able to annotate that in backtraces. This only adjusts the internal data

structures - any actual printing changes for those sorts of use cases

will have to come separately.

* binaryplatforms.jl: Remove duplicated riscv64 entry (#56888)

* Minor documentation updates (#56883)

- Array/Vector/Matrix output showing

- Syntax highlighting for fenced code block examples

---------

Co-authored-by: Chengyu Han <cyhan.dev@outlook.com>

Co-authored-by: Lilith Orion Hafner <lilithhafner@gmail.com>

* Remove `similar` specialization with `Integer`s (#56881)

This method seems unnecessary, as the method on line 830 does the same

thing. Having this method in `Base` requires packages to disambiguate

against this by adding redundant methods.

* simplify and slightly improve memorynew inference (#56857)

While investigating some missed optimizations in

https://github.com/JuliaLang/julia/pull/56847, @gbaraldi and I realized

that `copy(::Array)` was using `jl_genericmemory_copy_slice` rather than

the `memmove`/`jl_genericmemory_copyto` that `copyto!` lowers to. This

version lets us use the faster LLVM based Memory initialization, and the

memmove can theoretically be further optimized by LLVM (e.g. not copying

elements that get over-written without ever being read).

```

julia> @btime copy($[1,2,3])

15.521 ns (2 allocations: 80 bytes) # before

12.116 ns (2 allocations: 80 bytes) #after

julia> m = Memory{Int}(undef, 3);

julia> m.=[1,2,3];

julia> @btime copy($m)

11.013 ns (1 allocation: 48 bytes) #before

9.042 ns (1 allocation: 48 bytes) #after

```

We also optimize the `memorynew` type inference to make it so that

getting the length of a memory with known length will propagate that

length information (which is important for cases like `similar`/`copy`

etc).

* Update the OffsetArrays test helper (#56892)

This updates the test helper to match v1.15.0 of `OffsetArrays.jl`. The

current version was copied over from v1.11.2, which was released on May

20, 2022, so this bump fetches the intermediate updates to the package.

The main changes are updates to `unsafe_wrap` and `reshape`. In the

latter, several redundant methods are now removed, whereas, in the

former, methods are added to wrap a `Ptr` in an `OffsetArray`

(https://github.com/JuliaArrays/OffsetArrays.jl/issues/275#issue-1222585268).

A third major change is that an `OffsetArray` now shares its parent's

`eltype` and `ndims` in the struct definition, whereas previously this

was ensured through the constructor. Other miscellaneous changes are

included, such as performance-related ones.

* fix: Base.GMP.MPZ.invert yielding return code instead of return value (#56894)

`Base.GMP.MPZ.invert` had a bug: it returned the GMP return code

instead of the actual value. This commit fixes it.

Before:

```

julia> Base.GMP.MPZ.invert(big"3", big"7")

1

```

After:

```

julia> Base.GMP.MPZ.invert(big"3", big"7")

5

```
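The expected value can be cross-checked with the public `invmod` function, which computes a modular inverse without going through the internal `MPZ` wrapper. A small sketch:

```julia
a, m = big"3", big"7"

# Modular inverse of 3 modulo 7 via the public API.
x = invmod(a, m)

@assert x == 5
@assert mod(a * x, m) == 1   # 3 * 5 = 15, and 15 mod 7 = 1
```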

* fix precompilation error printing if `CI` is set (#56905)

Co-authored-by: Ian Butterworth <i.r.butterworth@gmail.com>

* Add #53664, public&export error to news (#56911)

Suggested by @simeonschaub

[here](https://github.com/JuliaLang/julia/pull/53664#issuecomment-2562408676)

* restrict `optimize_until` argument type correctly (#56912)

The object types that `optimize_until` can accept are restricted by

`matchpass`, so this restriction should also be reflected in functions

like `typeinf_ircode` too.

* doc: manual: give the Performance Tips a table of contents (#56917)

The page is quite long and now has a more intricate structure

than before, so IMO it deserves a TOC.

* ReentrantLock: wakeup a single task on unlock and add a short spin (#56814)

I propose a change in the implementation of the `ReentrantLock` to

improve its overall throughput for short critical sections and fix the

quadratic wake-up behavior where each unlock schedules **all** waiting

tasks on the lock's wait queue.

This implementation follows the same principles of the `Mutex` in the

[parking_lot](https://github.com/Amanieu/parking_lot/tree/master) Rust

crate which is based on the Webkit

[WTF::ParkingLot](https://webkit.org/blog/6161/locking-in-webkit/)

class. Only the basic working principle is implemented here, further

improvements such as eventual fairness will be proposed separately.

The gist of the change is that we add one extra state to the lock,

essentially going from:

```

0x0 => The lock is not locked

0x1 => The lock is locked by exactly one task. No other task is waiting for it.

0x2 => The lock is locked and some other task tried to lock but failed (conflict)

```

To:

```

# PARKED_BIT | LOCKED_BIT | Description
#     0      |     0      | The lock is not locked, nor is anyone waiting for it.
# -----------+------------+------------------------------------------------------------------
#     0      |     1      | The lock is locked by exactly one task. No other task is
#            |            | waiting for it.
# -----------+------------+------------------------------------------------------------------
#     1      |     0      | The lock is not locked. One or more tasks are parked.
# -----------+------------+------------------------------------------------------------------
#     1      |     1      | The lock is locked by exactly one task. One or more tasks are
#            |            | parked waiting for the lock to become available.
#            |            | In this state, PARKED_BIT is only ever cleared when the cond_wait lock
#            |            | is held (i.e. on unlock). This ensures that
#            |            | we never end up in a situation where there are parked tasks but
#            |            | PARKED_BIT is not set (which would result in those tasks
#            |            | potentially never getting woken up).

```

In the current implementation we must schedule all tasks to cause a

conflict (state 0x2) because on unlock we only notify any task if the

lock is in the conflict state. This behavior means that with high

contention and a short critical section the tasks will be effectively

spinning in the scheduler queue.

With the extra state the proposed implementation has enough information

to know if there are other tasks to be notified or not, which means we

can always notify one task at a time while preserving the optimized path

of not notifying if there are no tasks waiting. To improve throughput

for short critical sections we also introduce a bounded amount of

spinning before attempting to park.
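The two-bit state can be sketched as below. The bit values and helper names are assumptions chosen for illustration; only the names `LOCKED_BIT` and `PARKED_BIT` come from the table above, and none of this is the actual `ReentrantLock` code.

```julia
# Assumed bit assignments, for illustration only.
const LOCKED_BIT = 0x01
const PARKED_BIT = 0x02

is_locked(state::UInt8) = (state & LOCKED_BIT) != 0
has_parked(state::UInt8) = (state & PARKED_BIT) != 0

# On unlock, a wake-up is only needed when some task is parked,
# and then a single task is notified instead of all waiters.
needs_wakeup_on_unlock(state::UInt8) = has_parked(state)

@assert !needs_wakeup_on_unlock(LOCKED_BIT)               # fast path: nobody waiting
@assert needs_wakeup_on_unlock(LOCKED_BIT | PARKED_BIT)   # notify one parked task
```

With the old three-value encoding the unlocking task could not distinguish "one waiter" from "many waiters", which is why every waiter had to be rescheduled.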

### Results

Not spinning on the scheduler queue greatly reduces the CPU utilization

of the following example:

```julia

function example()
    lock = ReentrantLock()
    @sync begin
        for i in 1:10000
            Threads.@spawn begin
                @lock lock begin
                    sleep(0.001)
                end
            end
        end
    end
end

@time example()

```

Current:

```

28.890623 seconds (101.65 k allocations: 7.646 MiB, 0.25% compilation time)

```

Proposed:

```

22.806669 seconds (101.65 k allocations: 7.814 MiB, 0.35% compilation time)

```

In a micro-benchmark where 8 threads contend for a single lock with a

very short critical section we see a ~2x improvement.

Current:

```

8-element Vector{Int64}:

6258688

5373952

6651904

6389760

6586368

3899392

5177344

5505024

Total iterations: 45842432

```

Proposed:

```

8-element Vector{Int64}:

12320768

12976128

10354688

12845056

7503872

13598720

13860864

11993088

Total iterations: 95453184

```

~~In the uncontended scenario the extra bookkeeping causes a 10%

throughput reduction:~~

EDIT: I reverted _trylock to the simple case to recover the uncontended

throughput, and now both implementations are in the same ballpark

(without hurting the above numbers).

In the uncontended scenario:

Current:

```

Total iterations: 236748800

```

Proposed:

```

Total iterations: 237699072

```

Closes #56182

* 🤖 [master] Bump the Pkg stdlib from e7c37f342 to c7e611bc8 (#56918)

* Don't report only-inferred methods as recompiles (#56914)

* precompilepkgs: respect loaded dependencies when precompiling for load (#56901)

* Add julia-repl in destructuring docs (#56866)

* doc: manual: Command-line Interface: tiny Markdown code formatting fix (#56919)

* Make threadpoolsize(), threadpooltids(), and ngcthreads() public (#55701)

* [Test] Print RNG of a failed testset and add option to set it (#56260)

Also, add a keyword option to `@testset` to let users override the seed

used there, to make testsets more replicable.

To give you a taster of what this PR

enables:

```

julia> using Random, Test

julia> @testset begin
           @test rand() == 0
       end;

test set: Test Failed at REPL[2]:2

Expression: rand() == 0

Evaluated: 0.559472630416976 == 0

Stacktrace:

[1] top-level scope

@ REPL[2]:2

[2] macro expansion

@ ~/repo/julia/usr/share/julia/stdlib/v1.12/Test/src/Test.jl:1713 [inlined]

[3] macro expansion

@ REPL[2]:2 [inlined]

[4] macro expansion

@ ~/repo/julia/usr/share/julia/stdlib/v1.12/Test/src/Test.jl:679 [inlined]

Test Summary: | Fail Total Time

test set | 1 1 0.9s

ERROR: Some tests did not pass: 0 passed, 1 failed, 0 errored, 0 broken.

Random seed for this testset: Xoshiro(0x2e026445595ed28e, 0x07bb81ac4c54926d, 0x83d7d70843e8bad6, 0xdbef927d150af80b, 0xdbf91ddf2534f850)

julia> @testset rng=Xoshiro(0x2e026445595ed28e, 0x07bb81ac4c54926d, 0x83d7d70843e8bad6, 0xdbef927d150af80b, 0xdbf91ddf2534f850) begin
           @test rand() == 0.559472630416976
       end;

Test Summary: | Pass Total Time

test set | 1 1 0.0s

```

This also works with nested testsets, and testsets on for loops:

```

julia> @testset rng=Xoshiro(0xc380f460355639ee, 0xb39bc754b7d63bbf, 0x1551dbcfb5ed5668, 0x71ab5a18fec21a25, 0x649d0c1be1ca5436) "Outer" begin
           @test rand() == 0.0004120194925605336
           @testset rng=Xoshiro(0xee97f5b53f7cdc49, 0x480ac387b0527d3d, 0x614b416502a9e0f5, 0x5250cb36e4a4ceb1, 0xed6615c59e475fa0) "Inner: $(i)" for i in 1:10
               @test rand() == 0.39321938407066637
           end
       end;

Test Summary: | Pass Total Time

Outer | 11 11 0.0s

```

Being able to see the seed used inside a testset and to set it

afterwards should make test failures that depend only on the state of

the RNG much easier to replicate and debug.
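The underlying property is that a `Xoshiro` generator restored to the same state produces the same stream. A minimal sketch outside of `@testset`, with an arbitrary seed chosen for illustration:

```julia
using Random

rng = Xoshiro(1234)   # arbitrary seed, for illustration only
saved = copy(rng)     # snapshot of the state before drawing

x = rand(rng)

# Replaying from the saved state reproduces the same draw.
@assert rand(saved) == x

# Two generators built from the same seed also agree.
@assert rand(Xoshiro(1234)) == rand(Xoshiro(1234))
```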

* 🤖 [master] Bump the Pkg stdlib from c7e611bc8 to 739a64a0b (#56927)

* 🤖 [master] Bump the Pkg stdlib from 739a64a0b to 8d3cf02e5 (#56930)

* doc: summary of output functions: cross-ref println and printstyled (#55860)

Slight tweak to #54547 to add cross-references to `println` and

`printstyled`.

Co-authored-by: Dilum Aluthge <dilum@aluthge.com>

* Make `Markdown.parse` public and add a docstring (#56818)

* precompilepkgs: color the "is currently loaded" text to make meaning clearer (#56926)

* Remove unused variable from `endswith` (#56934)

This is a trivial code cleanup suggestion. The `cub` variable was unused

in `endswith(::Union{String, SubString{String}}, ::Union{String,

SubString{String}})`.

I ran the tests with `make test`; there were some failures, but they did

not appear to be related to the change.

* deps: support Unicode 16 via utf8proc 2.10.0 (#56925)

Similar to #51799, support [Unicode

16](https://www.unicode.org/versions/Unicode16.0.0/) by bumping utf8proc

to 2.10.0 (thanks to https://github.com/JuliaStrings/utf8proc/pull/277

by @eschnett).

This allows us to use [7 exciting new emoji

characters](https://www.unicode.org/emoji/charts-16.0/emoji-released.html)

as identifiers, including "face with bags under eyes"

`"\U1fae9"` (but still no superscript "q").

Closes #56035.

* teach jitlayers to use equivalent edges

Sometimes an edge (especially from precompile file, but sometimes from

inference) will specify a CodeInstance that does not need to be compiled

for its ABI and simply needs to be cloned to point to the existing copy

of it.

* opaque_closure: fix data-race mistakes with reading fields by using standard helper function

* opaque_closure: fix world-age mistake in fallback path

This was failing the h_world_age test sometimes.

* inference,codegen: connect source directly to jit

This avoids unnecessary compression when running (not generating code).

While generating code, we continue the legacy behavior of storing

compressed code, since restarting from a ji without that is quite slow.

Eventually, we should remove that code as well, once we have generated

the object file from it.

This replaces the defective SOURCE_MODE_FORCE_SOURCE option with a new

`typeinf_ext_toplevel` batch-mode interface for compilation which

returns all required source code. Only two options remain now:

SOURCE_MODE_NOT_REQUIRED :

Require only that the IPO information (e.g. rettype and friends) is

present.

SOURCE_MODE_FORCE_ABI :

Require that the IPO information is present (for ABI computation)

and that the returned CodeInstance can be invoked on the host target

(preferably after inference, called directly, but perfectly

acceptable for Base.Compiler to instead force the runtime to use a

stub there or call into it with the interpreter instead by having

failed to provide any code).

This replaces the awkward `jl_create_native` interface (which is now

just a shim for calling the new batch-mode `typeinf_ext_toplevel`) with

a simpler `jl_emit_native` API, which does not do any inference or other

callbacks, but simply is a batch-mode call to `jl_emit_codeinfo` and

the work to build the external wrapper around them for linkage.

* delete unused code, so the jit no longer uses the inferred field at all

* Make sure we don't promise alignments that are larger than the heap alignment to LLVM (#56938)

Fixes https://github.com/JuliaLang/julia/issues/56937

---------

Co-authored-by: Oscar Smith <oscardssmith@gmail.com>

* 🤖 [master] Bump the NetworkOptions stdlib from 8eec5cb to c090626 (#56949)

Stdlib: NetworkOptions

URL: https://github.com/JuliaLang/NetworkOptions.jl.git

Stdlib branch: master

Julia branch: master

Old commit: 8eec5cb

New commit: c090626

Julia version: 1.12.0-DEV

NetworkOptions version: 1.3.0 (Does not match)

Bump invoked by: @giordano

Powered by:

[BumpStdlibs.jl](https://github.com/JuliaLang/BumpStdlibs.jl)

Diff:

https://github.com/JuliaLang/NetworkOptions.jl/compare/8eec5cb0acec4591e6db3c017f7499426cd8e352...c090626d3feee6d6a5c476346d22d6147c9c6d2d

```

$ git log --oneline 8eec5cb..c090626

c090626 Enable OpenSSL certificates (#36)

```

* [build] Fix value of `CMAKE_C{,XX}_ARG` when `CC_ARG` doesn't have args (#56920)

The problem was that `cut` by default prints the entire line if the

delimiter doesn't appear, unless the option `-s` is used, which means

that if `CC_ARG` contains only `gcc` then `CMAKE_CC_ARG` also contains

`gcc` instead of being empty.

Before the PR:

```console

$ make -C deps/ print-CC_ARG print-CMAKE_CC_ARG print-CMAKE_COMMON USECCACHE=1

make: Entering directory '/home/mose/repo/julia/deps'

CC_ARG=gcc

CMAKE_CC_ARG=gcc

CMAKE_COMMON=-DCMAKE_INSTALL_PREFIX:PATH=/home/mose/repo/julia/usr -DCMAKE_PREFIX_PATH=/hom…

```

This patch modifies the `sum` and `cumsum` functions (and `cumprod`) to use pairwise summation for summing `AbstractArray`s, in order to achieve much better accuracy at a negligible computational cost.

Pairwise summation recursively divides the array in half, sums the halves recursively, and then adds the two sums. As long as the base case is large enough (here, n=128 seemed to suffice), the overhead of the recursion is negligible compared to naive summation (a simple loop). The advantage of this algorithm is that it achieves O(sqrt(log n)) mean error growth, which is almost indistinguishable from the O(1) error growth of Kahan compensated summation, versus O(sqrt(n)) for naive summation.

For example:

where

`oldsum` and `newsum` are the old and new implementations, respectively, gives `(-1.2233732622777777e-13,0.0)` on my machine in a typical run: the old `sum` loses three significant digits, whereas the new `sum` loses none. On the other hand, their execution time is nearly identical:

gives

(The difference is within the noise.)
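For readers who want to see the recursion spelled out, here is a minimal sketch of pairwise summation as described above. It is not the code from this patch: the names `naive_sum` and `pairwise_sum` and the `base` keyword are hypothetical, the default of 128 is taken from the description, and the sketch assumes a vector with a concrete numeric element type.

```julia
# Hypothetical helper names for illustration; not the functions from the patch.

# Naive left-to-right summation over the index range lo:hi (a simple loop).
function naive_sum(A::AbstractVector{T}, lo::Int, hi::Int) where {T}
    s = zero(T)
    for i in lo:hi
        s += A[i]
    end
    return s
end

# Pairwise summation: use the simple loop for ranges of at most `base`
# elements, otherwise split the range in half, sum each half recursively,
# and add the two partial sums.
function pairwise_sum(A::AbstractVector{T}, lo::Int=firstindex(A),
                      hi::Int=lastindex(A); base::Int=128) where {T}
    n = hi - lo + 1
    if n <= max(base, 1)          # guard: the base case must cover n == 1
        return naive_sum(A, lo, hi)
    end
    mid = lo + (n >> 1) - 1       # last index of the left half
    return pairwise_sum(A, lo, mid; base=base) +
           pairwise_sum(A, mid + 1, hi; base=base)
end

# Compare both approaches on single-precision data against a
# double-precision reference sum.
A = fill(0.1f0, 10^6)
exact = sum(Float64.(A))
err_naive    = abs(naive_sum(A, 1, length(A)) - exact)
err_pairwise = abs(pairwise_sum(A) - exact)
println((err_naive, err_pairwise))
```

On this input the pairwise error should come out far smaller than the naive one, because rounding errors accumulate along a recursion tree of depth about log2(n/base) rather than along a chain of n additions.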

@JeffBezanson and @StefanKarpinski, I think I mentioned this possibility to you at Berkeley.