Do you know why nodes change completely when I reran the same setup with varying number of iterations? #146

Comments

|

The weights are randomly initialized, so it's normal to get different results on each run. You can set the random state to a fixed value if you want your experiment to be reproducible.
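To illustrate the point about the random state, here is a minimal sketch in plain Python. It is a toy 1-D SOM written only for this example, not MiniSom's implementation; `train_toy_som` and its parameters are hypothetical names. With MiniSom itself, the corresponding knob is the `random_seed` argument of the `MiniSom` constructor.

```python
import math
import random


def train_toy_som(data, n_nodes, n_iter, seed, lr=0.5, sigma=1.0):
    """Train a tiny 1-D SOM; all randomness comes from one seeded generator."""
    rng = random.Random(seed)
    dim = len(data[0])
    # Random initial weights drawn from the seeded generator.
    weights = [[rng.random() for _ in range(dim)] for _ in range(n_nodes)]
    for t in range(n_iter):
        x = rng.choice(data)  # the sample order also depends on the seed
        # Best matching unit: the node whose weights are closest to x.
        bmu = min(range(n_nodes), key=lambda j: math.dist(weights[j], x))
        eta = lr / (1 + t / (n_iter / 2))  # decreasing learning rate (assumed form)
        for j in range(n_nodes):
            # Gaussian neighborhood around the best matching unit.
            h = math.exp(-((j - bmu) ** 2) / (2 * sigma ** 2))
            weights[j] = [w + eta * h * (xi - w) for w, xi in zip(weights[j], x)]
    return weights


data = [[0.0, 0.1], [0.9, 1.0], [0.5, 0.4], [0.2, 0.8]]
run_a = train_toy_som(data, n_nodes=3, n_iter=200, seed=42)
run_b = train_toy_som(data, n_nodes=3, n_iter=200, seed=42)
run_c = train_toy_som(data, n_nodes=3, n_iter=200, seed=7)

print(run_a == run_b)  # same seed: identical weights
print(run_a == run_c)  # different seed: weights generally differ
```

Fixing the seed only makes a given configuration repeatable. It does not by itself say whether two different configurations (for example, different iteration counts) should agree.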

…On Fri, Jul 8, 2022, 15:06 michel039 ***@***.***> wrote:

Hey :-)

First of all thank you for providing this tool, it seems very handy! I am using SOM with geopotential height anomalies over a given region as input variables to cluster meteorological circulation patterns (ca. 2000 observations). What is really strange is that the SOM nodes differ completely when I rerun the same setup. I tried different map sizes, learning rates, sigmas, and numbers of iterations (going up to 1 million) as well. But even just rerunning the same setup produces nodes not only in a different order, but also ones that have no analogue in the new SOM... Is there anything I am doing wrong?

Thank you very much - below some details about the setup

The example I am using most often is sigma=1 (Gaussian), lr=0.5, SOM sizes between 2x4 and 4x5. The problem occurs no matter the initialization (pca or random) and no matter the training (single, batch, random). My code is basically only:

    # SOM
    som = MiniSom(som_m, som_n, ndims, sigma=sigma, learning_rate=lr, neighborhood_function='gaussian')
    som.pca_weights_init(somarr)
    som.train_batch(somarr, 10000, verbose=True)
    ...
    # plot
    for m in range(som_m):
        for n in range(som_n):
            ax...
            pltarr = som.get_weights()[m, n, :].reshape((nsomlat, nsomlon))
            p = ax.contourf(somlons, somlats, pltarr, cmap='seismic', transform=ccrs.PlateCarree())


|

|

Thanks. Is this the case even though I initialize with pca weights? And is it also normal that it does not reproduce at least the same patterns? |

|

PCA weights should always be the same. You can check the weights like this |

|

Ok, so would changing results be an indication of one of the rare cases where more iterations lead to different results, i.e., there is no convergence? |

|

To be precise, PCA weights are always the same when initialized with the same data. Training is supposed to converge as long as the learning rate decreases. |

|

Yes, after initialization the weights are the same for both setups. So could the results change due to the increase in iterations and the thereby altered decay function? Cause they are quite substantially different |
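On the question of the altered decay function: a decay schedule that depends on the total number of iterations gives a different learning rate at the same step when that total changes. The sketch below assumes a schedule of the form `lr / (1 + t / (max_iter / 2))`; this is an assumption about the default in use here, so check the decay function of your installed MiniSom version. `decayed_rate` is a hypothetical name.

```python
def decayed_rate(learning_rate, t, max_iter):
    """Assumed decay schedule: the rate falls to one third of its start by t == max_iter."""
    return learning_rate / (1 + t / (max_iter / 2))


lr = 0.5
for max_iter in (10_000, 20_000):
    at_step_5000 = decayed_rate(lr, 5_000, max_iter)
    at_last_step = decayed_rate(lr, max_iter, max_iter)
    print(max_iter, round(at_step_5000, 4), round(at_last_step, 4))
# 10000 0.25 0.1667
# 20000 0.3333 0.1667
```

Under this assumed schedule the final learning rate is the same for both runs (a third of the initial value), but the path there differs, and the rate never gets close to zero. That could leave the map sensitive to the most recently presented samples, which is one possible reason for two runs ending in different places.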

|

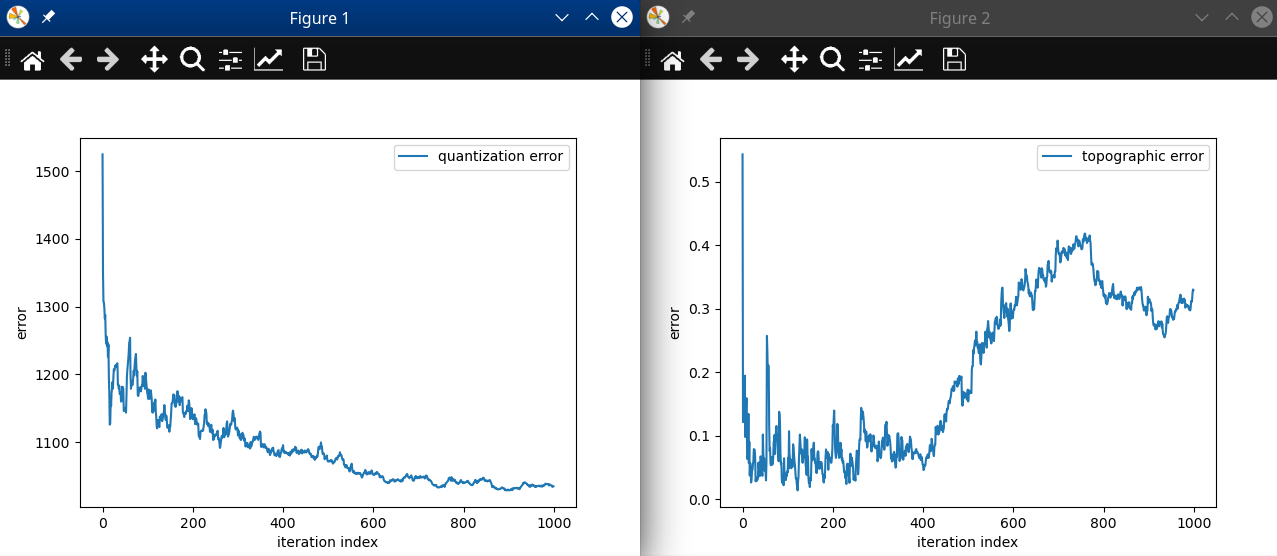

It should not happen in theory. Have you tried checking the quantization error throughout the iterations? The example at the bottom of this notebook shows how to do it: https://github.com/JustGlowing/minisom/blob/master/examples/BasicUsage.ipynb |
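For readers unfamiliar with the metric, the quantization error is the mean distance between each sample and its closest node. The following is a minimal plain-Python sketch of that definition, not the notebook's code; here `weights` is a flat list of node vectors, whereas MiniSom's `get_weights()` returns a grid that would need flattening first. MiniSom also provides `som.quantization_error(data)` directly.

```python
import math


def quantization_error(data, weights):
    """Mean Euclidean distance between each sample and its closest node."""
    return sum(min(math.dist(x, w) for w in weights) for x in data) / len(data)


data = [[0.0, 0.0], [1.0, 1.0], [0.0, 1.0]]
weights = [[0.0, 0.0], [1.0, 1.0]]  # two nodes, flattened from the map grid

qe = quantization_error(data, weights)
print(qe)  # two samples sit on a node, the third is at distance 1 from both
```

Recording this value every few hundred iterations and plotting it shows whether training has flattened out or is still moving when it stops.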

|

Try scaling the data as shown here https://scikit-learn.org/stable/modules/generated/sklearn.preprocessing.StandardScaler.html#sklearn.preprocessing.StandardScaler |
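As a rough illustration of what that scaling does, here is a plain-Python sketch that standardizes each column to zero mean and unit variance, using the population standard deviation as `StandardScaler` does. `standardize` is a hypothetical helper for this example; in practice `StandardScaler().fit_transform(somarr)` would be the usual route.

```python
import statistics


def standardize(rows):
    """Scale each column to zero mean and unit (population) standard deviation."""
    cols = list(zip(*rows))
    means = [statistics.fmean(c) for c in cols]
    # Constant columns have zero spread; divide by 1.0 instead to avoid errors.
    stds = [statistics.pstdev(c) or 1.0 for c in cols]
    return [[(v - m) / s for v, m, s in zip(row, means, stds)] for row in rows]


rows = [[1.0, 100.0], [2.0, 300.0], [3.0, 500.0]]
scaled = standardize(rows)
for row in scaled:
    print([round(v, 4) for v in row])
```

After scaling, both columns have the same spread, so no single input variable dominates the distance computation just because of its units.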

|

I now use the som toolbox on Matlab (I know...) which performs well, i.e., produces similar results for increasing number of iterations. Let me know if you want to know more about my problem if that's of any relevance to your python module |

|

If you set the learning rate and decay function properly, you will get consistent results after a certain number of iterations. I can't give advice regarding the MATLAB toolbox. |

Hey :-)

First of all thank you for providing this tool, it seems very handy! I am using SOM with geopotential height anomalies over a given region as input variables to cluster meteorological circulation patterns (ca. 2000 observations). What is really strange is that the SOM nodes differ completely when I rerun the same setup with more iterations (e.g. doubling from 10000 to 20000). It produces nodes not only in a different order, but also ones that have no analogue in the new SOM... Is there anything I am doing wrong?

Thank you very much - below some details about the setup

The example I am using most often is sigma=1 (Gaussian), lr=0.5, SOM sizes between 2x4 and 4x5. The problem occurs no matter the initialization (pca or random) and no matter the training (single, batch, random). My code is basically only:

    # SOM
    som = MiniSom(som_m, som_n, ndims, sigma=sigma, learning_rate=lr, neighborhood_function='gaussian')
    som.pca_weights_init(somarr)
    som.train_batch(somarr, 10000, verbose=True)
    ...
    # plot
    for m in range(som_m):
        for n in range(som_n):
            ax...
            pltarr = som.get_weights()[m, n, :].reshape((nsomlat, nsomlon))
            p = ax.contourf(somlons, somlats, pltarr, cmap='seismic', transform=ccrs.PlateCarree())
