Formalizing Attacker Scenarios for Adversarial Transferability


Marco Alecci · Mauro Conti · Francesco Marchiori · Luca Martinelli · Luca Pajola


Evasion attacks are a threat to machine learning models, where adversaries attempt to affect classifiers by injecting malicious samples. An alarming side-effect of evasion attacks is their ability to transfer among different models: this property is called transferability. Therefore, an attacker can produce adversarial samples on a custom model to conduct the attack on a victim's organization later. Although literature widely discusses how adversaries can transfer their attacks, their experimental settings are limited and far from reality. For instance, many experiments consider both attacker and defender sharing the same dataset, balance level (i.e., how the ground truth is distributed), and model architecture. In this work, we propose the DUMB attacker model. This framework allows analyzing if evasion attacks fail to transfer when the training conditions of surrogate and victim models differ. DUMB considers the following conditions: Dataset soUrces, Model architecture, and the Balance of the ground truth. We then propose a novel testbed to evaluate many state-of-the-art evasion attacks with DUMB; the testbed consists of three computer vision tasks with two distinct datasets each, four types of balance levels, and three model architectures. Our analysis, which generated 13K tests over 14 distinct attacks, led to numerous novel findings in the scope of transferable attacks with surrogate models. In particular, mismatches between attackers and victims in terms of dataset source, balance levels, and model architecture lead to non-negligible loss of attack performance.

Please cite this work when referring to the DUMB attacker model:

@inproceedings{10.1145/3607199.3607227,

author = {Alecci, Marco and Conti, Mauro and Marchiori, Francesco and Martinelli, Luca and Pajola, Luca},

title = {Your Attack Is Too DUMB: Formalizing Attacker Scenarios for Adversarial Transferability},

year = {2023},

isbn = {9798400707650},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

url = {https://doi.org/10.1145/3607199.3607227},

doi = {10.1145/3607199.3607227},

booktitle = {Proceedings of the 26th International Symposium on Research in Attacks, Intrusions and Defenses},

pages = {315–329},

numpages = {15},

keywords = {Adversarial Machine Learning, Evasion Attacks, Adversarial Attacks, Surrogate Model, Transferability},

location = {Hong Kong, China},

series = {RAID '23}

}

First, start by cloning the repository.

git clone https://github.com/Mhackiori/DUMB.git

cd DUMB

Then, install the required Python packages by running:

pip install -r requirements.txt

You now need to add the datasets to the repository. You can do this by downloading the zip file here and extracting it in this repository.

To replicate the results in our paper, you need to execute the scripts in a specific order (modelTrainer.py, attackGeneration.py and evaluation.py), or you can execute them one after another by running the dedicated shell script.

chmod +x ./run.sh && ./run.sh

If instead you want to run each script one by one, you will need to specify the task through an environment variable.

- TASK=0: [bike, motorbike]

- TASK=1: [cat, dog]

- TASK=2: [man, woman]

export TASK=0 && python3 modelTrainer.py

With modelTrainer.py we train three different model architectures on one of the tasks, which can be selected by changing the value of currentTask in tasks.py. The three model architectures that we consider are the following.

| Name | Paper |
|---|---|
| AlexNet | One weird trick for parallelizing convolutional neural networks (Krizhevsky et al., 2014) |
| ResNet | Deep residual learning for image recognition (He et al., 2016) |
| VGG | Very deep convolutional networks for large-scale image recognition (Simonyan et al., 2015) |

The script will automatically handle the different balancing scenarios of the dataset and train 4 models for each architecture. The four different balancings affect only the training set and are described as follows:

- [50/50]: 3500 images for class_0, 3500 images for class_1

- [40/60]: 2334 images for class_0, 3500 images for class_1

- [30/70]: 1500 images for class_0, 3500 images for class_1

- [20/80]: 875 images for class_0, 3500 images for class_1
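The class_0 counts above follow from keeping class_1 fixed at 3500 images and shrinking class_0 until it reaches the target share. The sketch below reproduces that arithmetic; the function name and the round-up rule are assumptions inferred from the 2334 figure, not code taken from the repository.

```python
def minority_count(majority: int, minority_pct: int) -> int:
    """Images needed in class_0 so that it makes up minority_pct% of the training set."""
    majority_pct = 100 - minority_pct
    # Integer ceiling division, which avoids floating-point rounding.
    return -(-majority * minority_pct // majority_pct)


MAJORITY = 3500  # class_1 size, fixed in every balancing scenario

counts = {f"{p}/{100 - p}": minority_count(MAJORITY, p) for p in (50, 40, 30, 20)}
print(counts)
```

Running it prints the four class_0 sizes listed above (3500, 2334, 1500, 875).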

Once the 24 models are trained (2 datasets * 3 architectures * 4 dataset balancings), the same script evaluates all of them on the same test set. First, we save the predictions for each image. This data is used to create adversarial samples only from images that the model classifies correctly. Then, we save the evaluation of the models on the test set, which gives us a baseline for the difficulty of the task.
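As a rough illustration of the two steps above, the following sketch enumerates the 24 training configurations and selects the test images that a model classifies correctly. The dataset labels and the helper name `correctly_classified` are hypothetical and do not come from the repository scripts.

```python
from itertools import product

DATASETS = ("bing", "google")  # hypothetical labels for the two dataset sources
ARCHITECTURES = ("alexnet", "resnet", "vgg")
BALANCES = ("50/50", "40/60", "30/70", "20/80")

# One model per (dataset, architecture, balance) combination.
configs = list(product(DATASETS, ARCHITECTURES, BALANCES))
print(len(configs))  # 24


def correctly_classified(predictions, labels):
    """Indices of test images whose predicted label matches the ground truth."""
    return [i for i, (p, y) in enumerate(zip(predictions, labels)) if p == y]
```

Only the indices returned by `correctly_classified` would then be passed on to attack generation, so that a misclassification after the attack can be attributed to the perturbation.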

With attackGeneration.py we generate the attacks through the Torchattacks library and the Pillow library. We divide the attacks into two main categories: mathematical attacks and non-mathematical attacks.

Mathematical attacks are the adversarial attacks that can be found in the literature. In particular, we use BIM, DeepFool, FGSM, PGD, RFGSM, Square, and TIFGSM. The next table lists the corresponding papers and the parameter that we tune for each attack. We also show the range in which the parameter has been tested and the step for the search (see Parameter Tuning).

| Name | Paper | Parameter |
|---|---|---|
| BIM (Linf) | Adversarial Examples in the Physical World (Kurakin et al., 2016) | @ 0.01 step |
| DeepFool (L2) | DeepFool: A Simple and Accurate Method to Fool Deep Neural Networks (Moosavi-Dezfooli et al., 2016) | Overshoot @ 1 step |
| FGSM (Linf) | Explaining and harnessing adversarial examples (Goodfellow et al., 2014) | @ 0.01 step |
| PGD (Linf) | Towards Deep Learning Models Resistant to Adversarial Attacks (Madry et al., 2017) | @ 0.01 step |
| RFGSM (Linf) | Ensemble Adversarial Training: Attacks and Defenses (Tramèr et al., 2017) | @ 0.01 step |
| Square (Linf, L2) | Square Attack: a query-efficient black-box adversarial attack via random search (Andriushchenko et al., 2019) | @ 0.05 step |
| TIFGSM (Linf) | Evading Defenses to Transferable Adversarial Examples by Translation-Invariant Attacks (Dong et al., 2019) | @ 0.01 step |

NOTE: if you're accessing this data from the anonymized repository, the above formulae might not be displayed correctly. This issue might be present in other places in this repository, but can be solved by rendering the Markdown code locally after downloading the repository.

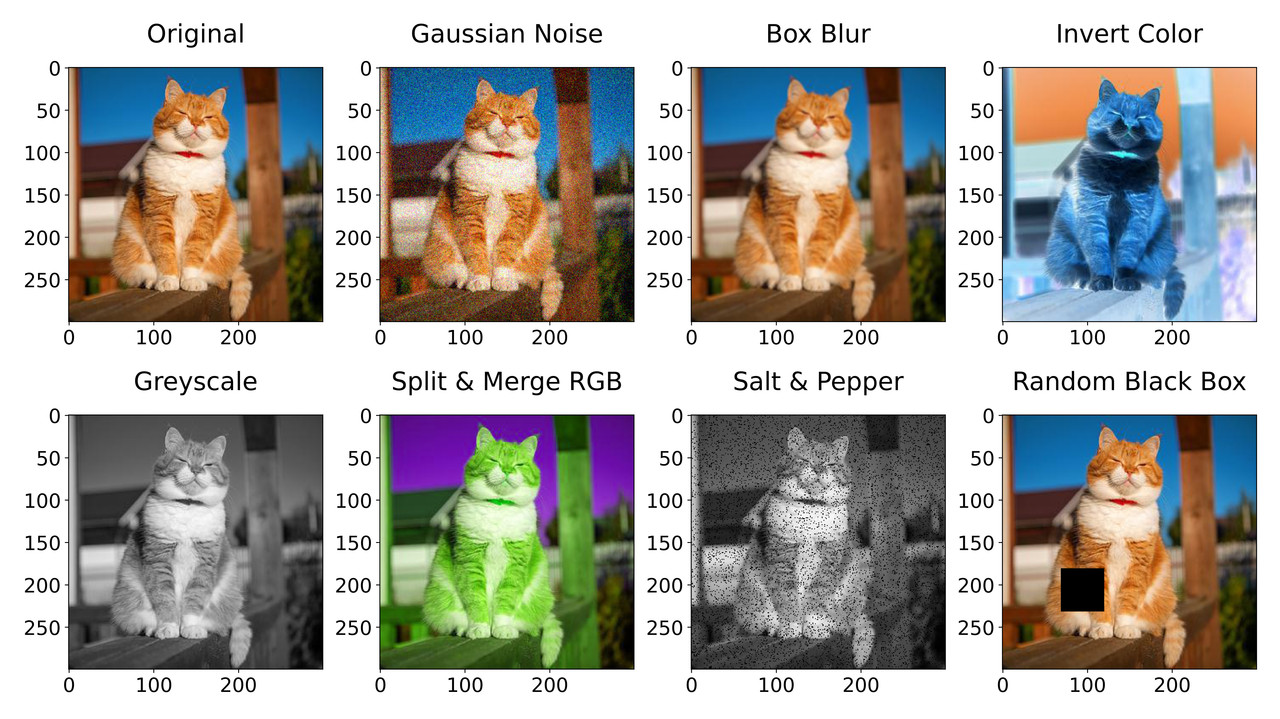

Non-mathematical attacks are visual modifications of the image obtained through filters or other means. A description of the individual attacks is provided in the next table. Where applicable, we also specify which parameter defines the level of perturbation added to the image. We also show the range in which the parameter has been tested and the step for the search (see Parameter Tuning).

| Name | Description | Parameter |
|---|---|---|
| Box Blur | Blurs the image by setting each pixel to the average value of the pixels in a square box extending radius pixels in each direction | @ 0.5 step |
| Gaussian Noise | Statistical noise whose probability density function is the normal distribution | @ 0.005 step |
| Grayscale Filter | The color information from each RGB channel is removed, leaving only the luminance values. Grayscale images contain only shades of gray and no color: maximum luminance is white, zero luminance is black, and everything in between is a shade of gray | N.A. |
| Invert Color | An image negative is produced by subtracting each pixel from the maximum intensity value, so for color images, colors are replaced by their complementary colors | N.A. |
| Random Black Box | We draw a black square in a random position inside the central portion of the image in order to cover some crucial information | Square size @ 10 step |
| Salt & Pepper | A certain amount of the pixels in the image is set to either black or white. The effect is similar to sprinkling white and black dots (salt and pepper) on the image | Amount @ 0.005 step |
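To make three of these descriptions concrete, here is a minimal pure-Python sketch that operates on an image stored as a nested list of RGB tuples. It is not the repository's Pillow-based implementation: the function names, the luminance weights (ITU-R BT.601), and the seeded random generator are illustrative assumptions.

```python
import random


def invert(image):
    """Invert Color: subtract each channel value from the maximum intensity (255)."""
    return [[tuple(255 - c for c in px) for px in row] for row in image]


def grayscale(image):
    """Grayscale Filter: replace each pixel with its luminance (BT.601 weights, assumed)."""
    out = []
    for row in image:
        new_row = []
        for r, g, b in row:
            y = round(0.299 * r + 0.587 * g + 0.114 * b)
            new_row.append((y, y, y))
        out.append(new_row)
    return out


def salt_and_pepper(image, amount, seed=0):
    """Salt & Pepper: set a fraction `amount` of the pixels to pure black or white."""
    rng = random.Random(seed)
    height, width = len(image), len(image[0])
    out = [list(row) for row in image]  # copy rows so the input stays untouched
    positions = [(y, x) for y in range(height) for x in range(width)]
    for y, x in rng.sample(positions, round(amount * height * width)):
        out[y][x] = rng.choice([(0, 0, 0), (255, 255, 255)])
    return out
```

For example, `salt_and_pepper(image, 0.1)` on a 10x10 image alters exactly 10 pixels, which is how the Amount parameter controls the perturbation level in this sketch.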

In the next Figure we show an example for each of the non-mathematical attacks.

All mathematical attacks and most of non mathematical attacks include some kind of parameter

After generating attacks at different

In the notation,

With evaluation.py we are evaluating all the adversarial samples that we generated on all the different models that we trained.

We created our own datasets by downloading images of cats and dogs from different sources (Bing and Google). All images are then processed in order to be 300x300 in RGB using PIL. Images for each class are then sorted in the following format:

- Training Set: 3500 images

- Validation Set: 500 images

- Test Set: 1000 images

In this work, we enhance the transferability landscape by proposing the DUMB attacker model, a list of 8 scenarios that might occur during an attack by including the three variables of the dataset, class balance, and model architecture.

| Case | Condition | Attack Scenario |
|---|---|---|
| C1 | Same dataset, same balance, same architecture | The ideal case for an attacker. We identified two potential attack scenarios. (i) Attackers legally or illegally gain information about the victims' system. (ii) Attackers and victims use the state-of-the-art. |
| C2 | Same dataset, different balance, same architecture | Attackers and victims use state-of-the-art datasets and model architecture. However, victims modify the class balance to boost the model's performance. This scenario can occur especially with imbalanced datasets. |
| C3 | Same dataset, same balance, different architecture | Attackers and victims use standard datasets to train their models. However, there is a mismatch in the model architecture. This scenario might occur when the state-of-the-art offers many comparable models, or when the victims choose a specific model based on computational constraints. |
| C4 | Same dataset, different balance, different architecture | Attackers and victims use standard datasets to train their models, while the model architectures differ. Furthermore, victims adopt data augmentation or preprocessing techniques that alter the ground truth distribution (balancing). This scenario can occur especially with imbalanced datasets. |
| C5 | Different dataset, same balance, same architecture | Attackers and victims use different datasets to accomplish the same classification task. The ground truth distribution can be equal, especially in tasks that are inherently balanced. Similarly, models can be equal if they both adopt the state-of-the-art. |
| C6 | Different dataset, different balance, same architecture | Attackers and victims use different datasets to accomplish the same classification task. Datasets have different balancing because they are inherently generated in different ways (e.g., see hate speech datasets example) or because the attackers or victims augmented them. Attackers and victims use the same state-of-the-art architecture. |
| C7 | Different dataset, same balance, different architecture | Attackers and victims use different datasets to accomplish the same classification task. The ground truth distributions of the datasets match. Attackers and victims use different model architectures. |
| C8 | Different dataset, different balance, different architecture | The worst-case scenario for an attacker. Attackers do not match the victims' dataset, balancing, and model architecture. |
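The eight cases correspond to the eight combinations of three yes/no questions: does the attacker's setup match the victim's dataset, class balance, and model architecture? The sketch below maps a surrogate/victim pair to its case label. The `Setup` type and the `dumb_case` function are hypothetical names, and the numbering is read off the scenario descriptions in the table rather than taken from the repository.

```python
from typing import NamedTuple


class Setup(NamedTuple):
    dataset: str
    balance: str
    architecture: str


def dumb_case(attacker: Setup, victim: Setup) -> str:
    """Return the case label, from C1 (everything matches) to C8 (nothing matches)."""
    dataset_differs = attacker.dataset != victim.dataset
    architecture_differs = attacker.architecture != victim.architecture
    balance_differs = attacker.balance != victim.balance
    # Dataset is the most significant bit, balance the least significant one.
    index = 4 * dataset_differs + 2 * architecture_differs + balance_differs
    return f"C{index + 1}"


victim = Setup("bing", "50/50", "resnet")
print(dumb_case(Setup("bing", "50/50", "resnet"), victim))   # C1
print(dumb_case(Setup("google", "20/80", "vgg"), victim))    # C8
```

Grouping transferability results by this label makes it possible to compare attack performance across the matched case (C1) and each kind of mismatch.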