
Add bfloat16 data type #25402


Thanks for your contribution!

Branch updated from cb12a84 to eeae10e.


LGTM


I've posted one question. Other than that, LGTM.

@luotao1 @wzzju Could I ask you to review and approve the framework.proto change? PR-CI-CPU-Py2 reports that it needs your approval.

It is a bug in the code, not a lack of approval.

@luotao1 You're right, but I still have a problem with PR-CI-Coverage: BF16 is not visible as a proto VarType even though it is defined in framework.proto. The problem appeared when PR-CI-Coverage started being built with -DWITH_LITE=ON. Could you ask someone working on Lite to help me with this problem?

Adam Osewski is looking at this WITH_LITE=ON issue. @arogowie-intel

@luotao1 We found out that the problem with -DWITH_LITE=ON is caused by https://github.com/PaddlePaddle/Paddle-Lite/blob/develop/lite/core/framework.proto, a file that exists under the same name in both Paddle and Paddle-Lite. That is why BF16 wasn't visible: Lite was using its own framework.proto, in which BF16 is not defined.

@wozna The update of

If both experiment results are OK, you can first add BF16 to framework.proto in Paddle-Lite and then in Paddle.

Thank you @luotao1. I will run these experiments.

@luotao1 or @Superjomn, do you have a particular model in mind for training?

@wozna You can choose any model.

Hi @luotao1 (please correct me if I get something fundamentally wrong; I'm a newbie here :) ). Let me note that Paddle-Lite already contains 3 copies of

I'm not sure that keeping all those files in sync with Paddle's

Regarding the experiments with Paddle-Lite that you asked for, I also wonder whether they're necessary, since:

@luotao1

test=develop


```
@@ -38,3 +38,25 @@ TEST(DataType, float16) {
  std::string type = "::paddle::platform::float16";
  EXPECT_STREQ(f::DataTypeToString(dtype).c_str(), type.c_str());
}

TEST(DataType, bfloat16) {
```


The first test could be parameterized and reused here, to avoid duplicating code.

This can be done later as a refactoring.


You are right. I will refactor it later.
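For illustration only, the refactoring suggested above could move the checks shared by the float16 and bfloat16 tests into one helper that takes the type and its expected values as parameters. This is a framework-free sketch: `DType`, `DTypeName`, `DTypeSize` and `CheckDataType` are hypothetical stand-ins, not Paddle's `DataTypeToString` or `SizeOfType`, and the bfloat16 type string is an assumption.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Hypothetical stand-ins for the framework's dtype enum and its name/size
// lookups; these are NOT Paddle's API, just enough to run the helper below.
enum class DType { FP16, BF16 };

inline std::string DTypeName(DType t) {
  switch (t) {
    case DType::FP16: return "::paddle::platform::float16";
    case DType::BF16: return "::paddle::platform::bfloat16";  // assumed name
  }
  return "";
}

inline std::size_t DTypeSize(DType t) {
  switch (t) {
    case DType::FP16:
    case DType::BF16:
      return 2;  // both are 16-bit types
  }
  return 0;
}

// Shared body of the float16 and bfloat16 tests: only the type and the
// expected values differ, so they become parameters.
// Usage inside a test body: assert(CheckDataType(DType::BF16, name, 2));
inline bool CheckDataType(DType t, const std::string& expected_name,
                          std::size_t expected_size) {
  return DTypeName(t) == expected_name && DTypeSize(t) == expected_size;
}
```

With gtest, each `TEST` body would shrink to one call of such a helper; a value-parameterized test (`TEST_P`) is another option when the list of types grows.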

```
@@ -189,4 +194,120 @@ TEST(DataTypeTransform, CPUTransform) {
                static_cast<paddle::platform::float16>(in_data_bool[i]).x);
    }
  }

  // data type transform from/to bfloat16
  {
```


The previous block could be parameterized as a function and reused here, to avoid duplication of code.

This can be done later as a refactoring.


You are right. I will refactor it later.
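As a sketch of the suggested parameterization, the repeated "transform, then compare each element with a reference cast" block could become one function template. The names `CheckTransform` and `CastAll` are hypothetical; `transform` stands in for the real data type transform (this is not Paddle's API), and `eq` lets a caller compare 16-bit types by their raw bits, as the existing tests do with `.x`.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical, framework-free sketch of the shared check. `transform` must
// return std::vector<Dst>; `eq(a, b)` decides whether two Dst values match.
// Usage: assert(CheckTransform<int>(in, CastAll<int, float>, eq));
template <typename Dst, typename Src, typename TransformFn, typename Eq>
bool CheckTransform(const std::vector<Src>& in, TransformFn transform, Eq eq) {
  const std::vector<Dst> out = transform(in);
  if (out.size() != in.size()) return false;
  for (std::size_t i = 0; i < in.size(); ++i) {
    // Reference value: the plain element-wise cast the test expects.
    if (!eq(out[i], static_cast<Dst>(in[i]))) return false;
  }
  return true;
}

// Example transform to plug into the helper: element-wise static_cast.
template <typename Dst, typename Src>
std::vector<Dst> CastAll(const std::vector<Src>& in) {
  std::vector<Dst> out;
  out.reserve(in.size());
  for (const Src& v : in) out.push_back(static_cast<Dst>(v));
  return out;
}
```

Each "from/to" block in the test would then be one call per (source, destination) pair, with only the input data and the comparison differing.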

@luotao1 For now, my last proposal, which definitely resolves this error, is to add BF16 to

Do I need to do anything more?

@arogowie-intel Doesn't the earlier #25402 (comment) solve the problem? What was missed in the earlier investigation?

Hi @luotao1

I'm truly sorry, but it looks like that was my fault. Just before proposing the solution in that comment, I had been experimenting with Paddle-Lite and adding to its

After all, it still seems somewhat unexpected that the Paddle-Lite proto files take priority over the Paddle ones, or is that correct? I have been trying to find out why this happens, but searching through the CMake files in Paddle and Paddle-Lite I couldn't find anything suspicious. The update of

Got it. I will discuss it with @Superjomn.

@wozna @arogowie-intel You can create a PR in the Paddle-Lite repo to update framework.proto.

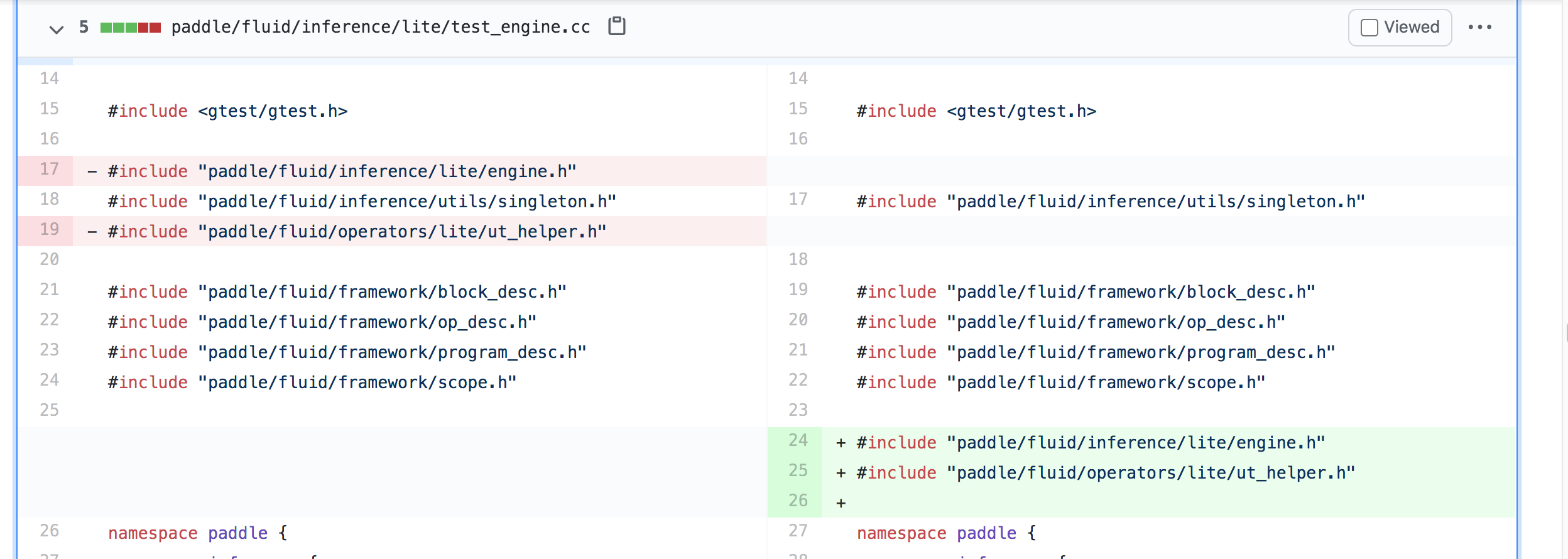

@luotao1, @Superjomn We looked into the problem of this PR not building with the -DWITH_LITE=ON option, and actually

LGTM


LGTM

PR types

Others

PR changes

Others

Describe

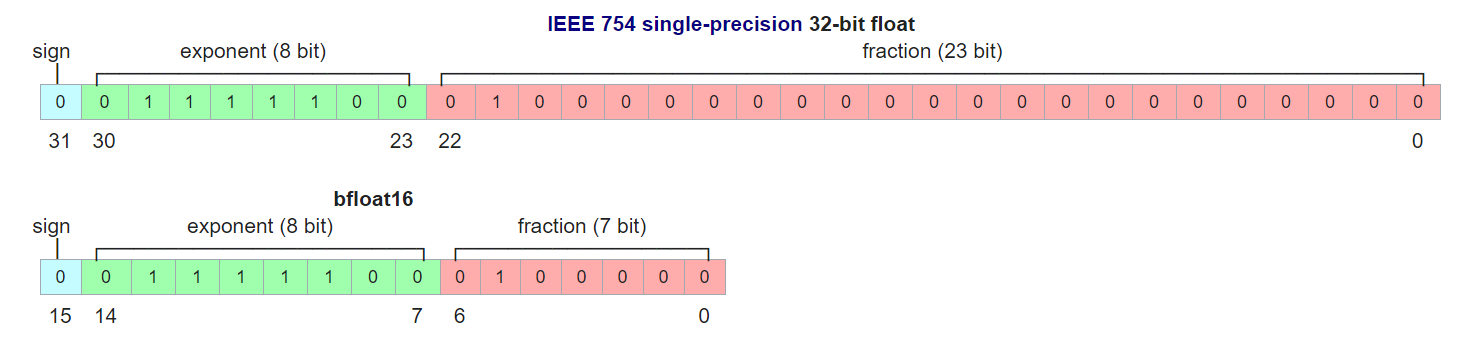

PR adds bfloat16 data type implementation with a test for this type.

Formats of float32 and bfloat16 are presented in the picture below.
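The two layouts in the picture can also be read off in code. The sketch below, with the hypothetical names `Bf16Fields` and `DecodeBf16` (not part of this PR), splits a raw 16-bit bfloat16 pattern into sign, exponent and mantissa using the standard field widths.

```cpp
#include <cstdint>

// Field layout, as bit widths:
//   float32:  1 sign | 8 exponent | 23 mantissa
//   bfloat16: 1 sign | 8 exponent |  7 mantissa
// bfloat16 keeps float32's 8-bit exponent (same bias, 127), so it has the
// same exponent range with far fewer mantissa bits.
struct Bf16Fields {
  std::uint16_t sign;      // bit 15
  std::uint16_t exponent;  // bits 14..7
  std::uint16_t mantissa;  // bits 6..0
};

// Splits a raw 16-bit bfloat16 pattern into its three fields.
constexpr Bf16Fields DecodeBf16(std::uint16_t bits) {
  return Bf16Fields{static_cast<std::uint16_t>(bits >> 15),
                    static_cast<std::uint16_t>((bits >> 7) & 0xFF),
                    static_cast<std::uint16_t>(bits & 0x7F)};
}
```

For example, `0x3F80` (1.0) decodes to sign 0, exponent 127 and mantissa 0, which are exactly the upper 16 bits of the float32 pattern `0x3F800000`.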

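The implementation of bfloat16 focuses on the conversion from float to bfloat16, which copies the upper (most significant) 2 bytes of the float, the ones holding the sign bit, the 8 exponent bits and the top 7 mantissa bits, and stores them as bfloat16. The remaining 16 mantissa bits are truncated rather than rounded. Conversions from other types go through float: the value is first converted to float using the default conversion and then to bfloat16.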
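A minimal sketch of the conversion described above, assuming a type that stores only the 16-bit pattern. `Bf16` and `ToBf16` are hypothetical names chosen for illustration; this is not the `paddle::platform::bfloat16` API introduced by the PR.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>

// Hypothetical bfloat16 sketch; stores only the raw 16-bit pattern.
// Usage: assert(ToBf16(3).x == 0x4040);
struct Bf16 {
  std::uint16_t x = 0;  // raw bit pattern

  Bf16() = default;

  // float -> bfloat16: keep the upper 16 bits of the float (sign, 8 exponent
  // bits, top 7 mantissa bits); the lower 16 mantissa bits are dropped.
  explicit Bf16(float val) {
    std::uint32_t bits;
    std::memcpy(&bits, &val, sizeof(bits));
    x = static_cast<std::uint16_t>(bits >> 16);
  }

  // bfloat16 -> float: put the 16 bits back on top and zero the lower half.
  explicit operator float() const {
    std::uint32_t bits = static_cast<std::uint32_t>(x) << 16;
    float val;
    std::memcpy(&val, &bits, sizeof(val));
    return val;
  }
};

// Any other arithmetic type: convert to float first, then to bfloat16.
template <typename T>
Bf16 ToBf16(T val) {
  return Bf16(static_cast<float>(val));
}
```

Shifting the 32-bit integer pattern, rather than copying bytes at a fixed offset, selects the upper half regardless of byte order; on a little-endian machine those are bytes 2 and 3 of the float in memory. Plain truncation has two side effects worth knowing: 1.005859375f becomes 1.0 instead of the nearer 1.0078125, and a NaN whose payload sits only in the lower 16 bits turns into infinity.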

This is the first step toward bfloat16 model inference with the oneDNN library on devices that support it, such as Cooper Lake.

This PR does not need verification on Cooper Lake, because it is a generic, hardware-independent implementation of the bfloat16 data type.