[hybrid performance] Optimize pipeline send wait #34086

Thanks for your contribution!

LGTM

LGTM for the op DataType registration.

LGTM for the pipeline case. However, in the recompute scenario the main training bottleneck is GPU memory, not the concurrency of computation (backward and recompute). Allowing recompute to run concurrently might reduce the maximum batch size that recompute can reach.

```python
assert dev_type == "gpu" or dev_type == 'npu', (
    "Now only gpu and npu devices are supported "
    "for pipeline parallelism.")
if not device in device_list:
```

Suggested change:

```python
if device not in device_list:
```

What's the reason for this?

PR types

Performance optimization

PR changes

Others

Describe

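1. Add a `nop` op that performs no operation. Its main uses are as follows (useful in static-graph mode; dynamic-graph mode does not need it):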

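2. Optimize the `wait_comm` of the forward `send` in the pipeline: where the `send` is guaranteed to have completed, replace `sync_comm` with `nop` to remove unnecessary synchronization.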
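The substitution in item 2 can be sketched as a pass over a flat op list. This is a minimal illustration, not Paddle's implementation: the `Op` dataclass, the op type strings `"send"`, `"sync_comm"` and `"nop"`, the `phase` field and `replace_forward_send_syncs` are all hypothetical, and for simplicity the `nop` is left at the position of the `sync_comm` it replaces.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Op:
    # Hypothetical, simplified stand-in for a static-graph op;
    # NOT Paddle's real Operator class.
    type: str
    var_names: List[str] = field(default_factory=list)
    phase: str = "forward"  # "forward" or "backward"


def replace_forward_send_syncs(ops: List[Op]) -> List[Op]:
    """Return a new op list in which every forward-phase 'sync_comm' that only
    waits on variables sent by a forward 'send' is replaced by a 'nop'."""
    forward_sent = {
        name
        for op in ops
        if op.type == "send" and op.phase == "forward"
        for name in op.var_names
    }
    result: List[Op] = []
    for op in ops:
        is_redundant_sync = (
            op.type == "sync_comm"
            and op.phase == "forward"
            and bool(op.var_names)
            and set(op.var_names) <= forward_sent
        )
        if is_redundant_sync:
            # Same variables, so they still have a reader,
            # but no stream synchronization is performed.
            result.append(Op("nop", list(op.var_names), op.phase))
        else:
            result.append(op)
    return result


program = [
    Op("matmul", ["x", "act_0"]),
    Op("send", ["act_0"]),
    Op("sync_comm", ["act_0"]),
    Op("recv", ["grad_0"], phase="backward"),
    Op("send", ["grad_x"], phase="backward"),
    Op("sync_comm", ["grad_x"], phase="backward"),
]
print([op.type for op in replace_forward_send_syncs(program)])
# ['matmul', 'send', 'nop', 'recv', 'send', 'sync_comm']
```

Only the forward `sync_comm` is rewritten; the backward one is kept because nothing in this sketch proves that the backward `send` has finished.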

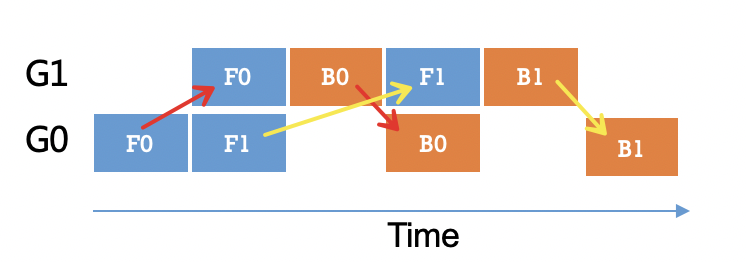

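As the figure shows, the execution order implies that once the current stage's backward `recv` has completed, its forward `send` must also have completed. In this case, the `send` needs no synchronization.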
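The ordering argument can be checked on a toy dependency model. This sketch assumes a simplified pipeline in which a `recv` completes only after its matching `send`, and each stage's backward waits on the gradient from the next stage; the event encoding, `build_deps` and `happens_before` are hypothetical names used only for illustration.

```python
from collections import defaultdict
from typing import DefaultDict, List, Tuple

# Hypothetical event encoding: (stage, action, micro_batch).
Event = Tuple[int, str, int]


def build_deps(num_stages: int, num_micro: int) -> DefaultDict[Event, List[Event]]:
    """Map each event to the events it directly waits on, in a simplified
    pipeline model where a recv completes only after the matching send."""
    deps: DefaultDict[Event, List[Event]] = defaultdict(list)
    last = num_stages - 1
    for s in range(num_stages):
        for m in range(num_micro):
            # Forward pass.
            if s > 0:
                deps[(s, "fwd_recv", m)].append((s - 1, "fwd_send", m))
                deps[(s, "fwd", m)].append((s, "fwd_recv", m))
            deps[(s, "fwd_send", m)].append((s, "fwd", m))
            # Backward pass: the last stage starts backward from its own forward;
            # every other stage waits for the gradient from stage s + 1.
            if s == last:
                deps[(s, "bwd", m)].append((s, "fwd", m))
            else:
                deps[(s, "bwd_recv", m)].append((s + 1, "bwd_send", m))
                deps[(s, "bwd", m)].append((s, "bwd_recv", m))
            deps[(s, "bwd_send", m)].append((s, "bwd", m))
    return deps


def happens_before(deps, a: Event, b: Event) -> bool:
    """True if event a is a transitive prerequisite of event b."""
    stack, seen = [b], {b}
    while stack:
        for dep in deps.get(stack.pop(), []):
            if dep == a:
                return True
            if dep not in seen:
                seen.add(dep)
                stack.append(dep)
    return False


deps = build_deps(num_stages=4, num_micro=8)
# For every non-last stage and micro-batch, the backward recv can only
# complete after that stage's forward send of the same micro-batch.
print(all(
    happens_before(deps, (s, "fwd_send", m), (s, "bwd_recv", m))
    for s in range(3) for m in range(8)
))  # True
```

The chain runs from the backward `recv` through the downstream stages' backward and forward back to this stage's forward `send`. In this model the guarantee holds only per micro-batch: a backward `recv` says nothing about a later micro-batch's `send`.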

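However, if no later op uses the variable consumed by `send`, the garbage collector frees it, which causes an error when `send` runs on the communication stream. To solve this, a `nop` op is added after the backward `recv`, so that the corresponding variable is freed only after the `recv` has completed.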
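The effect of the extra `nop` on garbage collection can be illustrated with a last-use model. This is a sketch under the assumption that the collector frees a variable right after the last op in the program that references it; the `Program` alias, `free_points`, `add_nop_after` and the op names are hypothetical, and the example uses a single micro-batch.

```python
from typing import Dict, List, Tuple

# Hypothetical op list: (op_type, names of variables the op reads or writes).
Program = List[Tuple[str, List[str]]]


def free_points(program: Program) -> Dict[str, int]:
    """Index of the op after which an eager, last-use garbage collector
    would free each variable."""
    last_use: Dict[str, int] = {}
    for i, (_, var_names) in enumerate(program):
        for name in var_names:
            last_use[name] = i
    return last_use


def add_nop_after(program: Program, anchor_type: str, var_name: str) -> Program:
    """Return a copy with a 'nop' reading var_name right after the first
    op whose type is anchor_type (e.g. the backward recv)."""
    out: Program = []
    inserted = False
    for op_type, var_names in program:
        out.append((op_type, var_names))
        if op_type == anchor_type and not inserted:
            out.append(("nop", [var_name]))
            inserted = True
    return out


program: Program = [
    ("fwd_compute", ["x", "act"]),
    ("send_fwd", ["act"]),  # issued on a communication stream, no sync afterwards
    ("recv_bwd", ["grad"]),  # completes only after the send above has finished
    ("bwd_compute", ["grad", "x"]),
]
print(free_points(program)["act"])  # 1: 'act' freed right after the send is issued
patched = add_nop_after(program, "recv_bwd", "act")
print(free_points(patched)["act"])  # 3: 'act' freed only after the nop following recv_bwd
```

Without the `nop`, `act` could be freed while the asynchronous `send` is still reading it; with the `nop`, its last use moves past `recv_bwd`, which in the ordering argument above already implies the `send` has finished.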

Tested with the gpt2-en model on V100 32G GPUs.