An issue on implementation of SemanticConnectivityloss #1798

Comments

Hi, logits is the logit map, without softmax applied. Regarding the issue above, there does appear to be a problem: we need to slice pred_conn.
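One possible reading of "slice pred_conn" is to one-hot encode with the real number of component labels and then keep only the first pred_num_conn channels. Below is a minimal NumPy sketch of that idea. The helper name one_hot_then_slice and the example label map are hypothetical, and this is not necessarily the fix that went into PaddleSeg.

```python
import numpy as np

def one_hot_then_slice(pred_conn, pred_num_conn):
    """One-hot encode with the true label count, then keep the first pred_num_conn channels."""
    pred_conn = np.asarray(pred_conn, dtype=np.int64)
    true_num = int(pred_conn.max()) + 1                    # labels run 0..max
    full = np.eye(true_num, dtype=np.float32)[pred_conn]   # shape (H, W, true_num)
    return full[..., :pred_num_conn]                       # drop channels beyond the cap

# Hypothetical component map with labels 0..4, capped to 3 channels.
pred_conn = np.array([[0, 1, 4],
                      [2, 3, 1]])
sliced = one_hot_then_slice(pred_conn, pred_num_conn=3)
print(sliced.shape)  # (2, 3, 3)
```

With this approach, pixels whose label is at or above pred_num_conn end up with an all-zero code, so they contribute nothing to the per-component terms.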

I tested this with Paddle. Even if values in pred_conn exceed pred_num_conn, F.one_hot can still be computed.

In fact, I am using this loss in PyTorch.

When using Paddle, how are values in pred_conn that exceed pred_num_conn handled?

Values that exceed it are ignored: the corresponding one-hot codes returned are all 0.
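To make the described behaviour concrete, here is a small NumPy sketch that mimics it. It is not Paddle's implementation; one_hot_ignore_overflow is a hypothetical helper that returns an all-zero code for any label outside the class range.

```python
import numpy as np

def one_hot_ignore_overflow(labels, num_classes):
    """One-hot encode integer labels; labels outside [0, num_classes) get an all-zero code."""
    labels = np.asarray(labels, dtype=np.int64)
    # Comparing each label against the class indices 0..num_classes-1 gives
    # exactly one match for in-range labels and no match for out-of-range ones.
    classes = np.arange(num_classes, dtype=np.int64)
    return (labels[..., None] == classes).astype(np.float32)

codes = one_hot_ignore_overflow([[0, 2], [5, 1]], num_classes=3)
print(codes.shape)   # (2, 2, 3)
print(codes[1, 0])   # label 5 is out of range, so its code is all zeros
```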

What is your paddlepaddle version? Mine is paddlepaddle 2.2.2.

Mine is 2.2.1.

I have no problem here.

This is the script: `import paddle; a = paddle.randint(0, 10, (4, 4))`

The only difference is that I ran it on CPU, without a GPU.

I see the same problem on CPU. This is an OP (operator) bug in Paddle. You can submit an issue here: https://github.com/PaddlePaddle/Paddle

Could you provide the PyTorch implementation of this loss?

In fact, I think that supporting input elements greater than class_num is a feature, not a bug. I will try the PyTorch loss implementation.

Hi @LutaoChu

Hi, I fixed this bug on CPU in Paddle. You can use the fix once the PR is merged.

Hi @LutaoChu and @dreamer121121

In the forward function of the SemanticConnectivityLoss implementation (https://github.com/PaddlePaddle/PaddleSeg/blob/release/2.4/paddleseg/models/losses/semantic_connectivity_loss.py), is the logits parameter a probability map, or a logit map without softmax applied?

BTW:

There is an issue with the above function implementation.

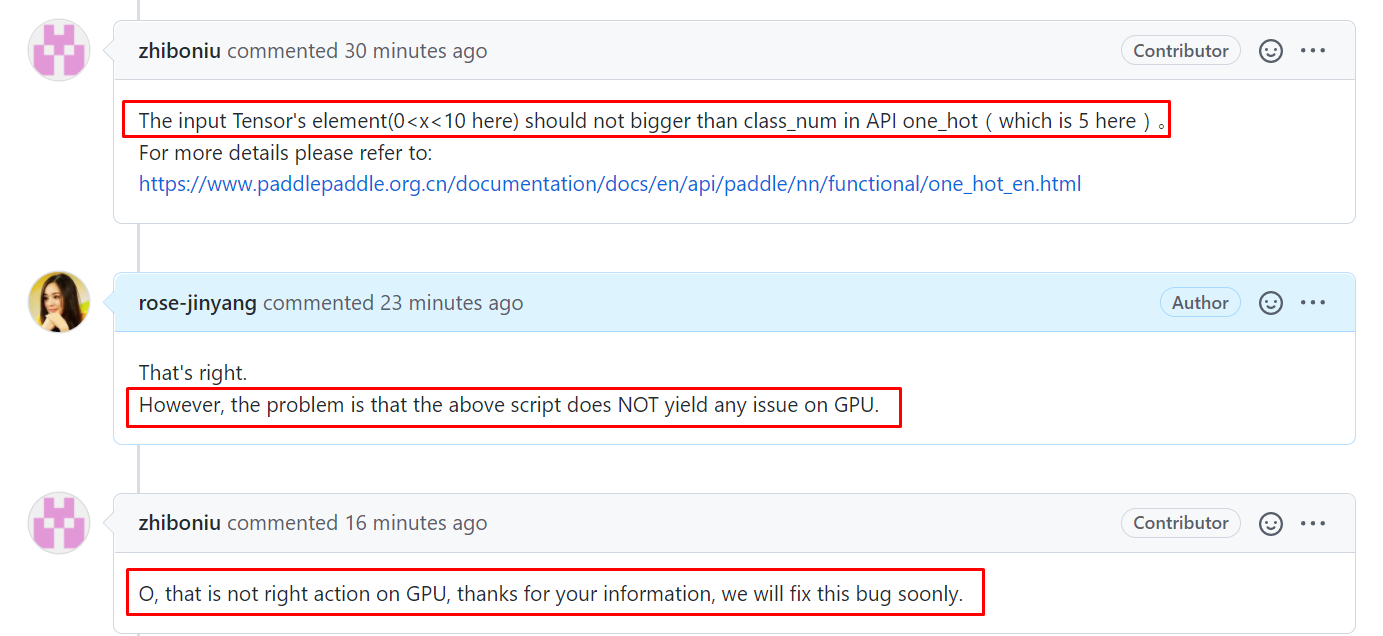

pred_conn contains labels greater than the value of pred_num_conn.

That is because pred_num_conn is limited to be smaller than 10, while the labels in pred_conn are not limited in the same way.

This causes a problem in the line below.

pred_conn = F.one_hot(pred_conn.long(), pred_num_conn)
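The mismatch can be reproduced without any deep learning framework. The NumPy sketch below uses a strict one-hot that rejects out-of-range labels, which, as far as I know, is also how PyTorch's F.one_hot behaves. The helper strict_one_hot, the 12-component map, and the cap of 10 are all hypothetical values chosen for illustration.

```python
import numpy as np

def strict_one_hot(labels, num_classes):
    """One-hot encode; raise if any label falls outside [0, num_classes)."""
    labels = np.asarray(labels, dtype=np.int64)
    lo, hi = int(labels.min()), int(labels.max())
    if lo < 0 or hi >= num_classes:
        raise ValueError(
            f"labels span [{lo}, {hi}] but only {num_classes} classes are available"
        )
    return np.eye(num_classes, dtype=np.float32)[labels]

pred_conn = np.arange(12).reshape(3, 4)   # pretend 12 connected components were found
pred_num_conn = min(12, 10)               # hypothetical cap on the component count

try:
    strict_one_hot(pred_conn, pred_num_conn)
    failed = False
except ValueError as err:
    failed = True
    print(err)
```

The labels in pred_conn still run up to 11 after the count is capped, so the encoding step has to either reject them, ignore them, or be given remapped labels.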

How can we fix this issue?
