
Commit


Showing 4 changed files with 371 additions and 0 deletions.


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,192 @@ | ||

| ## Batch Jobs and Job Scripting | ||

|

|

||

| Batch jobs are by far the most common type of job on Summit. A batch job is a resource allocation that runs applications on compute nodes without the user being present. Batch jobs are commonly used for applications that run for a long time or that require little to no user input. | ||

|

|

||

| Batch jobs are created from a job script, which provides the resource requirements and commands for the job. | ||

|

|

||

| ### Job Scripts | ||

|

|

||

| Even though it is possible to submit jobs entirely from the command line, doing so is often tedious and disorganized. Instead, Research Computing recommends constructing a job script for your batch jobs. A **job script** is a set of Linux commands paired with a set of resource requirements that can be submitted to the Slurm job scheduler. Slurm then generates a job according to the parameters set in the job script. Any commands included in the job script are run within the job. | ||

|

|

||

| <iframe width="560" height="315" src="https://www.youtube.com/embed/sStJQKTa9zY" frameborder="0" allow="autoplay; encrypted-media" allowfullscreen></iframe> | ||

|

|

||

| --- | ||

|

|

||

|

|

||

|

|

||

| ### Submitting a Job Script | ||

|

|

||

| Submitting a job script can be done with the `sbatch` command: | ||

|

|

||

| ```bash | ||

| sbatch <your-job-script> | ||

| ``` | ||

|

|

||

| Because a job script specifies the desired resources for your job, you won't need to specify any resources on the command line. You can, however, override or add any job parameter by providing the specific resource as a flag to the `sbatch` command: | ||

|

|

||

| ```bash | ||

| sbatch --partition=sgpu <your-job-script> | ||

| ``` | ||

|

|

||

| Running this command would force your job to run on the sgpu partition *no matter what your job script specified*. | ||
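When experimenting with overrides, it can help to preview the exact command line before submitting anything. The sketch below is a hypothetical helper: the function name `preview_sbatch` and the file name `my_job.sh` are illustrative and are not part of Slurm or of Research Computing's tooling. It only prints the command it would run.

```bash
#!/bin/bash
# Hypothetical helper: print the sbatch command that would be run.
# Usage: preview_sbatch <job-script> [sbatch flags...]
preview_sbatch() {
    local script="$1"
    shift
    # Flags must come before the script name; anything after the script
    # name is passed to the script itself rather than to sbatch.
    echo "sbatch${*:+ $*} $script"
}

preview_sbatch my_job.sh --partition=sgpu --time=00:05:00
```

Keeping overrides on the command line leaves the job script itself unchanged, which is convenient for one-off test runs.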

|

|

||

| ### Making a Job Script | ||

|

|

||

| Although Research Computing provides a variety of different sample scripts users can utilize when running their own jobs, knowing how to draft a job script can be quite handy if you need to debug any errors in your jobs or you need to make substantial changes to a script. | ||

|

|

||

| A job script looks something like this: | ||

|

|

||

| ```bash | ||

| #!/bin/bash | ||

|

|

||

| #SBATCH --nodes=1 | ||

| #SBATCH --ntasks=1 | ||

| #SBATCH --time=00:10:00 | ||

| #SBATCH --partition=shas-testing | ||

| #SBATCH --qos=testing | ||

| #SBATCH --output=sample-%j.out | ||

|

|

||

| module purge | ||

|

|

||

| module load intel | ||

| module load mkl | ||

|

|

||

| echo "== This is the scripting step! ==" | ||

| sleep 30 | ||

| ./executable.exe | ||

| echo "== End of Job ==" | ||

| ``` | ||

|

|

||

| Job scripts are normally divided into three primary parts: directives, loading software, and user scripting. Directives give the shell and the Slurm daemon instructions on setting up the job. Loading software involves cleaning out the environment and loading the specific pieces of software you need for your job. User scripting is simply the set of commands you wish to be executed in your job. | ||

|

|

||

| #### 1. Directives | ||

|

|

||

| A directive is a specially formatted comment at the top of a job script that passes information about the script to the shell or to Slurm. | ||

|

|

||

| The first directive, the shebang directive, is always on the first line of any script. The directive indicates which shell you want running commands in your job. Most users will likely utilize bash as their shell, so we will specify bash by typing: | ||

|

|

||

| ```bash | ||

| #!/bin/bash | ||

| ``` | ||

|

|

||

| The next directives that must be included in your job script are *sbatch* directives. These directives specify the resource requirements for a batch job. They must come after the shebang directive and before any commands are issued in the job script. Each directive contains a flag that requests a resource the job needs to complete execution. An sbatch directive is written as such: | ||

|

|

||

| ```bash | ||

| #SBATCH --<resource>=<amount> | ||

| ``` | ||

|

|

||

| For example, if you wanted to request 2 nodes with an sbatch directive, you would write: | ||

|

|

||

| ```bash | ||

| #SBATCH --nodes=2 | ||

| ``` | ||

|

|

||

| A list of some useful sbatch directives [can be found here.]() A full list of commands [can be found on Slurm's documentation on sbatch.]() | ||
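Because Slurm stops reading `#SBATCH` lines once it reaches the first command in a script, a directive placed too late is silently ignored. The following is a minimal sketch of a check for that mistake. The function name `find_late_directives` is hypothetical, and the check is a simple text scan, not Slurm's own parser.

```bash
#!/bin/bash
# Hypothetical helper: report #SBATCH lines that appear after the first
# command in a job script (Slurm would ignore such lines).
# Usage: find_late_directives <job-script>
find_late_directives() {
    awk '
        # A directive after a command has been seen is reported.
        /^#SBATCH/ { if (seen_command) print FILENAME ":" NR ": " $0; next }
        # Other comments (including the shebang) and blank lines are skipped.
        /^[ \t]*(#|$)/ { next }
        # Anything else counts as the first command.
        { seen_command = 1 }
    ' "$1"
}
```

If the function prints nothing, every `#SBATCH` line in the file sits above the first command.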

|

|

||

| #### 2. Software | ||

|

|

||

| Because jobs run on a different node from the one where you submit them, any shared software that is needed must be loaded within the job script. Software can be loaded in a job script just as you would load it on the command line. First, we purge all software that may be left behind from a previous job by running the command: | ||

|

|

||

| ```bash | ||

| module purge | ||

| ``` | ||

|

|

||

| After this you can load whatever piece of software you may need by running the following command: | ||

|

|

||

| ```bash | ||

| module load <software> | ||

| ``` | ||

|

|

||

| #### 3. User Scripting | ||

|

|

||

| The last part of a job script is the actual user scripting that executes when the job runs. This part of the job script includes all user commands needed for the job's completion. Any Linux command can be used in this step. Scripting can range from highly complex loops iterating over thousands of files to a simple call to an executable. Below is a simple example of some user scripting: | ||

|

|

||

| ```bash | ||

| echo "== This is the scripting step! ==" | ||

|

|

||

| touch tempFile1.in | ||

| touch tempFile2.in | ||

|

|

||

| sleep 30 | ||

| ./executable.exe tempFile1.in tempFile2.in | ||

|

|

||

| echo "== End of Job ==" | ||

| ``` | ||
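For the loop-over-many-files case mentioned above, a minimal sketch might look like the following. The names `process_one` and `run_all_inputs` are hypothetical; `process_one` stands in for whatever executable you would actually call on each file.

```bash
#!/bin/bash
# Hypothetical stand-in for your own executable.
process_one() {
    echo "processing $1"
}

# Run process_one once for every .in file in the given directory.
# Usage: run_all_inputs <directory>
run_all_inputs() {
    local dir="$1"
    local f
    for f in "$dir"/*.in; do
        # If no .in files exist the pattern stays literal; skip it.
        [ -e "$f" ] || continue
        process_one "$f"
    done
}
```

Inside a job script you would call `run_all_inputs` with the directory that holds your input files, after the directives and `module` commands.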

|

|

||

| ### Examples | ||

|

|

||

| Job script to run a 5-minute, 1-node, 1-core C++ job: | ||

|

|

||

| ```bash | ||

| #!/bin/bash | ||

|

|

||

| #SBATCH --nodes=1 | ||

| #SBATCH --time=00:05:00 | ||

| #SBATCH --qos=testing | ||

| #SBATCH --partition=shas-testing | ||

| #SBATCH --ntasks=1 | ||

| #SBATCH --job-name=cpp-job | ||

| #SBATCH --output=cpp-job.%j.out | ||

|

|

||

| module purge | ||

|

|

||

| ./example_cpp.exe | ||

| ``` | ||

|

|

||

| Job script to run a 7-minute, 1-node, 4-core C++ OpenMP job: | ||

|

|

||

| ```bash | ||

| #!/bin/bash | ||

|

|

||

| #SBATCH --nodes=1 | ||

| #SBATCH --time=00:07:00 | ||

| #SBATCH --qos=testing | ||

| #SBATCH --partition=shas-testing | ||

| #SBATCH --ntasks=4 | ||

| #SBATCH --job-name=omp-cpp-job | ||

| #SBATCH --output=omp-cpp-job.%j.out | ||

|

|

||

| module purge | ||

|

|

||

| export OMP_NUM_THREADS=4 | ||

|

|

||

| ./example_omp.exe | ||

| ``` | ||

|

|

||

| Job script to run a 10-minute, 2-node, 24-core C++ MPI job: | ||

|

|

||

| ```bash | ||

| #!/bin/bash | ||

|

|

||

| #SBATCH --nodes=2 | ||

| #SBATCH --time=00:10:00 | ||

| #SBATCH --qos=testing | ||

| #SBATCH --partition=shas-testing | ||

| #SBATCH --ntasks=24 | ||

| #SBATCH --job-name=mpi-cpp-job | ||

| #SBATCH --output=mpi-cpp-job.%j.out | ||

|

|

||

| module purge | ||

| module load intel | ||

| module load impi | ||

|

|

||

| mpirun -np 24 ./example_mpi.exe | ||

| ``` | ||
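The examples above repeat the task count in both the `--ntasks` directive and the launch line. Slurm exports the `SLURM_NTASKS` environment variable inside a job when `--ntasks` is given, so one possible variation reads the count from there instead. This is a sketch, not the form Research Computing prescribes, and the function name `task_count` is hypothetical.

```bash
#!/bin/bash
# Hypothetical helper: report the task count of the current job.
# Falls back to 1 when run outside Slurm (SLURM_NTASKS is unset).
task_count() {
    echo "${SLURM_NTASKS:-1}"
}

echo "Launching with $(task_count) tasks"
# In a job script, the launch line could then be written as, for example:
#   mpirun -np "$(task_count)" ./example_mpi.exe
```

With this pattern, changing `--ntasks` in the directives is enough; the launch line follows automatically.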

|

|

||

| ### Job Flags | ||

|

|

||

| The `sbatch` command supports many optional flags. To review all the options, please visit the Slurm [sbatch page](http://slurm.schedmd.com/sbatch.html). Below, we have listed a few you may want to consider when submitting your job via `sbatch`. | ||

|

|

||

| | Type | Description | Flag | | ||

| | :--------------------- | :-------------------------------------------------- | :------------------------- | | ||

| | [Allocations]() | Specify an allocation account if you have multiple | --account=account_no | | ||

| | [Partitions]() | Specify a partition | --partition=partition_name | | ||

| | Sending email | Receive email at beginning or end of job completion | --mail-type=type | | ||

| | Email address | Email address to receive the email | --mail-user=user | | ||

| | Number of nodes | The number of nodes needed to run the job | --nodes=nodes | | ||

| | Number of tasks | The total number of cores needed to run the job | --ntasks=processes | | ||

| | [Quality of service]() | Specify a QOS | --qos=qos | | ||

| | Wall time | The max. amount of time your job will run for | --time=wall time | | ||

| | Job Name               | Name your job so you can identify it in the queue   | --job-name=jobname         | | ||
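As an illustration of how several of these flags combine, here is a sketch of a job script header that also requests an email when the job ends. The job name `flag-demo`, the address `user@example.com`, and the function `job_banner` are placeholders chosen for this example; substitute your own values.

```bash
#!/bin/bash
#SBATCH --job-name=flag-demo
#SBATCH --nodes=1
#SBATCH --ntasks=1
#SBATCH --time=00:05:00
#SBATCH --partition=shas-testing
#SBATCH --qos=testing
#SBATCH --mail-type=END
#SBATCH --mail-user=user@example.com
#SBATCH --output=flag-demo.%j.out

# Slurm sets SLURM_JOB_NAME and SLURM_JOB_ID inside a job; the fallbacks
# only matter if the script is run by hand outside Slurm.
job_banner() {
    echo "job=${SLURM_JOB_NAME:-unset} id=${SLURM_JOB_ID:-unset}"
}

job_banner
```

Printing the job name and ID at the top of the output file makes it easier to match an output file to its entry in the queue.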

|

|


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,67 @@ | ||

| ## Interactive jobs | ||

|

|

||

| Interactive jobs allow a user to interact with applications in the HPC environment in real time. With interactive jobs, users can request time to work on a compute node directly. Users can then run graphical user interface (GUI) applications, execute scripts, or run other commands directly on a compute node. Common reasons for running interactive jobs include debugging, designing workflows, or a preference for using an application's GUI. | ||

|

|

||

| ### General Interactive Jobs | ||

|

|

||

| <iframe width="560" height="315" src="https://www.youtube.com/embed/s53sjDubBpo" frameborder="0" allow="autoplay; encrypted-media" allowfullscreen></iframe> | ||

|

|

||

| --- | ||

|

|

||

| To run an interactive job on Research Computing resources, request an interactive session by utilizing the `sinteractive` command. The `sinteractive` command creates a job with parameters provided through flags run with the command. The command will then put the user onto the compute node to interactively utilize their resource allotment. | ||

|

|

||

| Any resource that could be specified in a job script or with `sbatch` can also be requested with `sinteractive`. The primary flags we recommend users specify are the `qos` flag and the `time` flag, which set the quality of service and the amount of time for your job, respectively. We run the `sinteractive` command as such: | ||

|

|

||

| ```bash | ||

| sinteractive --qos=interactive --time=00:10:00 | ||

| ``` | ||

|

|

||

| This will start an interactive session that runs on one core of one node under the interactive quality of service for ten minutes. From here you can run any application or script you need. For example, typing `python` opens an interactive Python shell on a compute node (rather than on a login node, where this is forbidden). | ||
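Before starting heavy work, you may want to confirm that your shell really is inside a Slurm job and not still on a login node. The sketch below assumes that the session started by `sinteractive` exports `SLURM_JOB_ID`, as ordinary Slurm jobs do; the function name `in_slurm_job` is hypothetical.

```bash
#!/bin/bash
# Hypothetical helper: succeed only when running inside a Slurm job.
# Assumption: the interactive session exports SLURM_JOB_ID.
in_slurm_job() {
    [ -n "${SLURM_JOB_ID:-}" ]
}

if in_slurm_job; then
    echo "Inside Slurm job ${SLURM_JOB_ID} on $(uname -n)"
else
    echo "Not inside a Slurm job; request an interactive session first"
fi
```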

|

|

||

| ### Interactive GUI Applications | ||

|

|

||

| <iframe width="560" height="315" src="https://www.youtube.com/embed/DFnHsMxPC5w" frameborder="0" allow="autoplay; encrypted-media" allowfullscreen></iframe> | ||

|

|

||

| --- | ||

|

|

||

| To run an interactive GUI application, we must install an X Window server application and enable X11 forwarding on our personal computer. | ||

|

|

||

| #### Windows setup | ||

|

|

||

| On Windows, we must first install an X Window server application to allow Summit to forward the GUI information to your local system. For Windows, we will use an application called Xming to accomplish this. [Download Xming here](https://sourceforge.net/projects/xming/). | ||

|

|

||

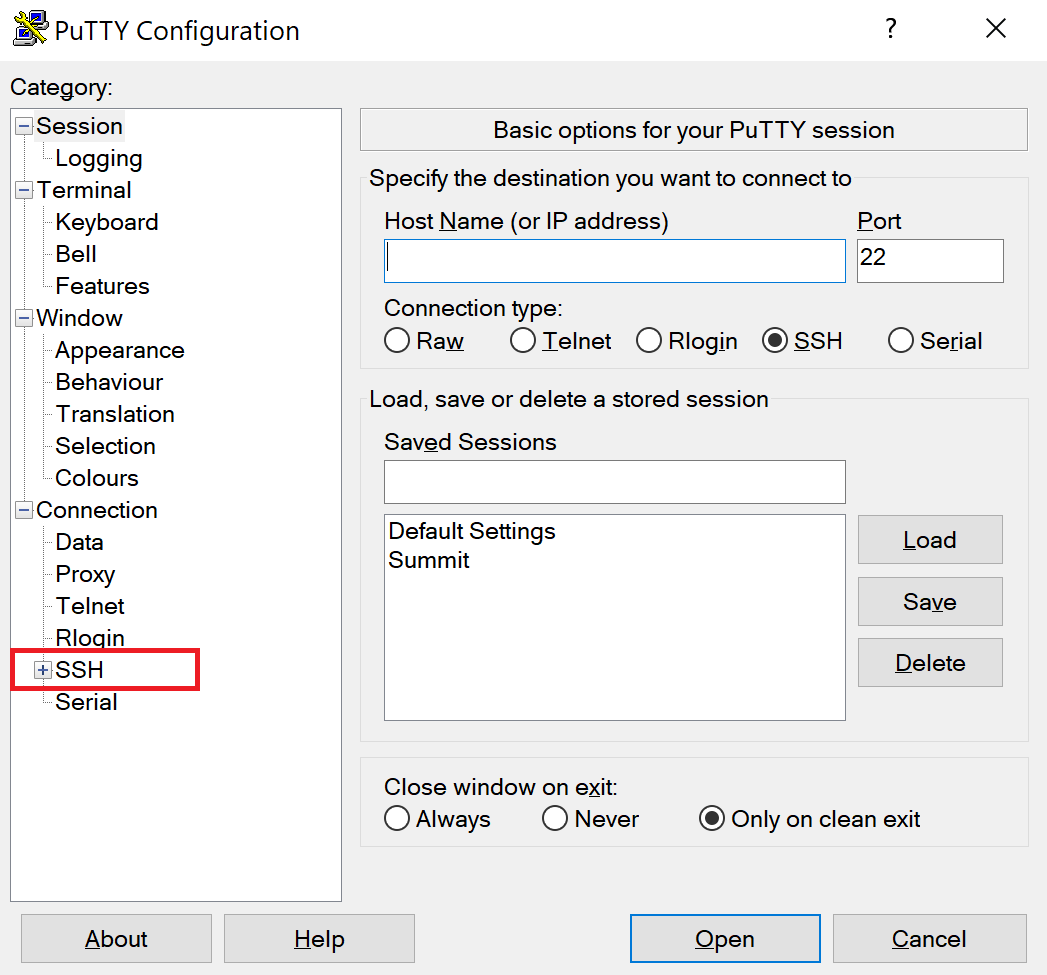

| Next, we must enable X11 forwarding in the PuTTY application. Download and install the [PuTTY application](https://www.chiark.greenend.org.uk/~sgtatham/putty/latest.html) if you have not done so already. | ||

|

|

||

|  | ||

|

|

||

| Expand the SSH tab on the left side of the application and click X11. | ||

|

|

||

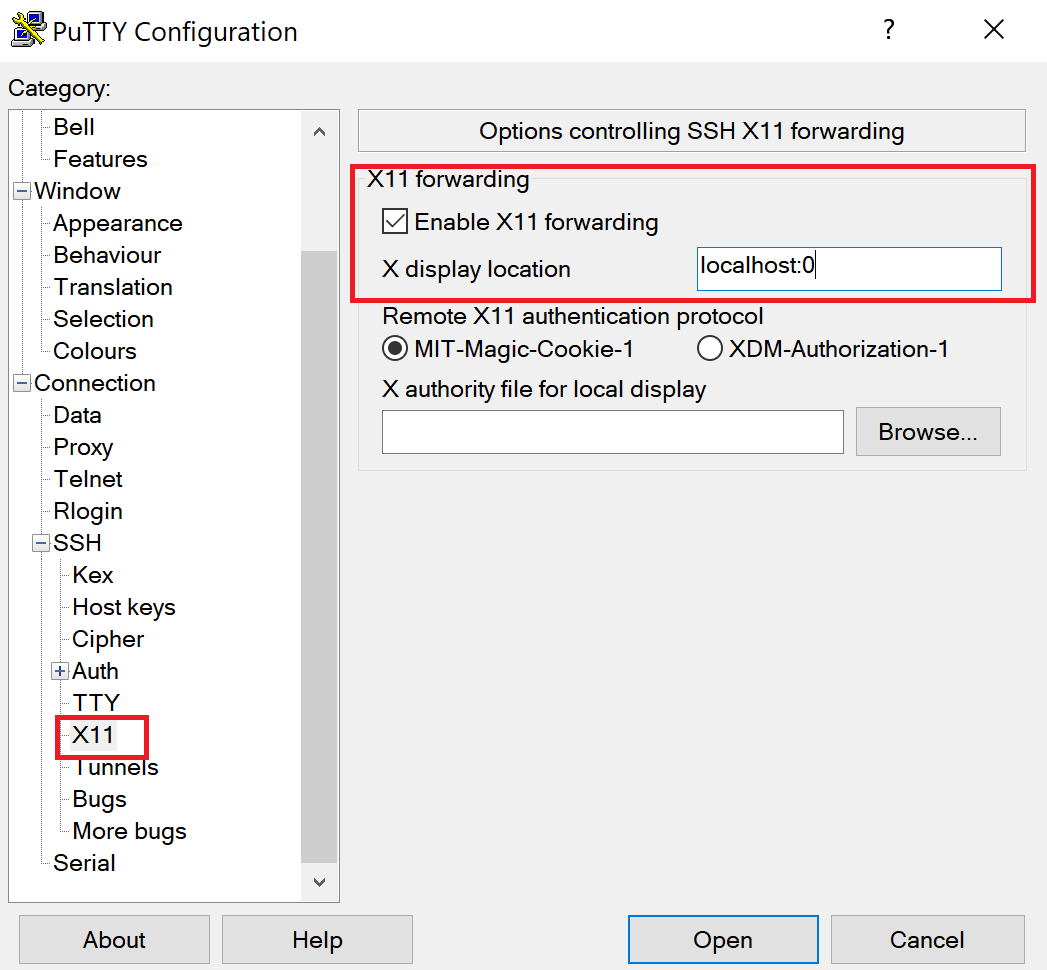

|  | ||

|

|

||

| In the X11 menu, check the "Enable X11 Forwarding" checkbox and type "localhost:0" in the X display location field. Clicking "Open" opens a terminal window where you can log in. | ||

|

|

||

| #### macOS setup | ||

|

|

||

| On macOS, we also need to install an X Window server application to allow Summit to forward GUI information to your local system. For macOS, we will use an application called XQuartz to accomplish this. [Download and install XQuartz here](https://www.xquartz.org/). | ||

|

|

||

| Opening the application will bring up a terminal window. In this window, type: | ||

|

|

||

| ```bash | ||

| ssh login.rc.colorado.edu -l your_rc-username -X | ||

| ``` | ||

|

|

||

| #### Running GUI Applications | ||

|

|

||

| Once you have logged into Summit with X11 forwarding enabled, you should be able to launch a GUI application by starting an interactive job and running your selected application. The X Window server application installed on your local system will display the window generated on the cluster. | ||

|

|

||

| If you plan on submitting your interactive job from a compile node, you must connect to the scompile node with X11 forwarding enabled: | ||

|

|

||

| ```bash | ||

| ssh scompile -X | ||

| ``` | ||

|

|

||

| From here you will be able to submit your interactive job like normal. | ||
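If a GUI application fails to open, a quick first check is whether X11 forwarding was actually set up for your session. SSH sets the `DISPLAY` environment variable when forwarding is active, so an empty value usually means the `-X` flag (or the PuTTY checkbox) was missed somewhere along the way. The function name `x11_forwarding_active` below is hypothetical.

```bash
#!/bin/bash
# Hypothetical helper: succeed when DISPLAY is set, which SSH does
# when X11 forwarding has been negotiated for the session.
x11_forwarding_active() {
    [ -n "${DISPLAY:-}" ]
}

if x11_forwarding_active; then
    echo "X11 forwarding looks active (DISPLAY=${DISPLAY})"
else
    echo "DISPLAY is not set; reconnect with X11 forwarding enabled"
fi
```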

|

|

||

|

|

||

|

|


| Original file line number | Diff line number | Diff line change |

|---|---|---|

| @@ -0,0 +1,65 @@ | ||

| ## Job Resources | ||

|

|

||

| Slurm allows for resources to be specified on a job with flags. Below is a reference to some of these Slurm resource flags as well as a reference to partition and quality of service which have Research Computing specific parameters. | ||

|

|

||

| ### Slurm Resource Flags | ||

|

|

||

| Job scripts, the `sbatch` command, and the `sinteractive` command support many different resource requests in the form of flags. To review all options for sbatch, please visit the Slurm [sbatch page](http://slurm.schedmd.com/sbatch.html). Below, we have listed a few you may want to consider when submitting your job script. | ||

|

|

||

| | Type | Description | Flag | | ||

| | :--------------------- | :-------------------------------------------------- | :------------------------- | | ||

| | [Allocations]() | Specify an allocation account if you have multiple | --account=account_name | | ||

| | [Partitions]() | Specify a partition | --partition=partition_name | | ||

| | Sending email | Receive email at beginning or end of job completion | --mail-type=type | | ||

| | Email address | Email address to receive the email | --mail-user=user | | ||

| | Number of nodes | The number of nodes needed to run the job | --nodes=nodes | | ||

| | Number of tasks | The total number of cores needed to run the job | --ntasks=processes | | ||

| | [Quality of service]() | Specify a QOS | --qos=qos | | ||

| | Wall time | The max. amount of time your job will run for | --time=wall time | | ||

| | Job Name               | Name your job so you can identify it in the queue   | --job-name=jobname         | | ||

|

|

||

| ### Partitions | ||

|

|

||

| On Summit, nodes with the same hardware configuration are grouped into partitions. You will need to specify a partition using `--partition` in your job script in order for your job to run on the appropriate type of node. | ||

|

|

||

| These are the partitions available on Summit. | ||

|

|

||

| | Partition | Description | # of nodes | cores/node | RAM/core (GB) | Billing weight | Default and Max Walltime | | ||

| | --------- | ----------------- | ---------- | ---------- | ------------- | -------------- | ------------------------ | | ||

| | shas | Haswell (default) | 380 | 24 | 4.84 | 1 | 4H, 24H | | ||

| | sgpu | GPU-enabled | 10 | 24 | 4.84 | 2.5 | 4H, 24H | | ||

| | smem | High-memory | 5 | 48 | 42.7 | 6 | 4H, 7D | | ||

| | sknl | Phi (KNL) | 20 | 68 | TBD | 0.1 | 4H, 24H | | ||

|

|

||

| In addition to these partitions, Research Computing also provides specialized partitions for interactive and test jobs. Each of these must be paired with its corresponding Quality of Service. | ||

|

|

||

| | Partition | Description | Max Nodes | Max cores | RAM/core (GB) | Billing weight | Default and Max Walltime | | ||

| | ---------------- | ----------------- | --------- | --------- | ------------- | -------------- | ------------------------ | | ||

| | shas-testing * | Haswell (default) | 24 | 24 | 4.84 | 1 | 0.5H, 0.5H | | ||

| | shas-interactive | Haswell (default) | 1 | 1 | 4.84 | 1 | 1H, 4H | | ||

| | sgpu-testing | GPU-enabled | 1 | 24 | 4.84 | 2.5 | 0.5H, 0.5H | | ||

| | sknl-testing | Phi (KNL) | 1 | 24 | TBD | 1 | 0.5H, 0.5H | | ||

|

|

||

| *The _shas-testing_ partition is limited to 24 cores in total. These cores can be spread across multiple nodes, but only 24 are available to the partition. | ||

|

|

||

| To run a job longer than 24 hours on the *shas*, *sgpu*, or *sknl* partitions, use the *long* QOS. | ||
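For wall times beyond 24 hours, Slurm's `--time` flag also accepts the form `days-hours:minutes:seconds`, which is often easier to read than a large hour count. The following sketch converts a whole number of hours into that form; the function name `hours_to_walltime` is hypothetical, and it expects a plain integer without leading zeros.

```bash
#!/bin/bash
# Hypothetical helper: convert whole hours into a Slurm --time value.
# Usage: hours_to_walltime <hours>
hours_to_walltime() {
    local hours="$1"
    local days=$(( hours / 24 ))
    local rem=$(( hours % 24 ))
    if [ "$days" -gt 0 ]; then
        # days-hours:minutes:seconds
        printf '%d-%02d:00:00\n' "$days" "$rem"
    else
        # hours:minutes:seconds
        printf '%02d:00:00\n' "$rem"
    fi
}

# Example of use on the command line:
#   sbatch --qos=long --time="$(hours_to_walltime 48)" <your-job-script>
```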

|

|

||

| More details about each type of node can be found [here](https://www.colorado.edu/rc/resources/summit/specifications). | ||

|

|

||

| ### Quality of Service | ||

|

|

||

| On Summit, a Quality of Service (QoS) is used to constrain or modify the characteristics that a job can have. For example, by selecting the *testing* QoS, a user can obtain higher queue priority for a job, with the trade-off that the maximum allowed wall time is reduced from what would otherwise be allowed on that partition. We recommend specifying a QoS as well as a partition for every job. | ||

|

|

||

| The QoS options available on Summit are: | ||

|

|

||

| | QOS name | Description | Max walltime | Max jobs/user | Node limits | Priority boost | | ||

| | ----------- | ------------------------------------- | --------------- | ------------- | ------------------------- | ------------------------------- | | ||

| | normal* | default | see table above | n/a | 256/user | 0 | | ||

| | testing | For quick turnaround when testing | 30M | 1 | 2/user; max 12 cores/node | QoS has dedicated cores | | ||

| | interactive | For interactive jobs (command or GUI) | 4H | 1 | 1 core | QoS has dedicated cores | | ||

| | long | Longer wall times | 7D | n/a | 22/user; 40 total | 0 | | ||

| | condo | Condo purchased nodes only | 7D | n/a | n/a | Equiv. of 1 day queue wait time | | ||

|

|

||

| *The _normal_ QOS is the default QOS if no other is specified. | ||

|

|

||

| **The testing and interactive QOS must be paired with a testing or interactive partition. Jobs that use the testing or interactive QOS will fail if paired with any other partition.** |
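The pairing rule can be checked before submission with a small helper. This is a sketch only: the function name `qos_partition_ok` is hypothetical, and it assumes the naming pattern visible in the tables above, where testing partitions end in `-testing` and interactive partitions end in `-interactive`.

```bash
#!/bin/bash
# Hypothetical helper: succeed when a QoS/partition pair is consistent.
# Assumption: testing partitions end in "-testing" and interactive
# partitions end in "-interactive", as in the partition tables.
# Usage: qos_partition_ok <qos> <partition>
qos_partition_ok() {
    local qos="$1" partition="$2"
    case "$qos:$partition" in
        testing:*-testing|interactive:*-interactive) return 0 ;;
        testing:*|interactive:*) return 1 ;;
        *) return 0 ;;   # other QoS values are not restricted by this rule
    esac
}

qos_partition_ok testing shas-testing && echo "testing + shas-testing: ok"
qos_partition_ok testing shas || echo "testing + shas: mismatch"
```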
