The project aims to build a trained neural network, based on LSTM layers and an attention mechanism, that generates realistic handwritten text from input text.

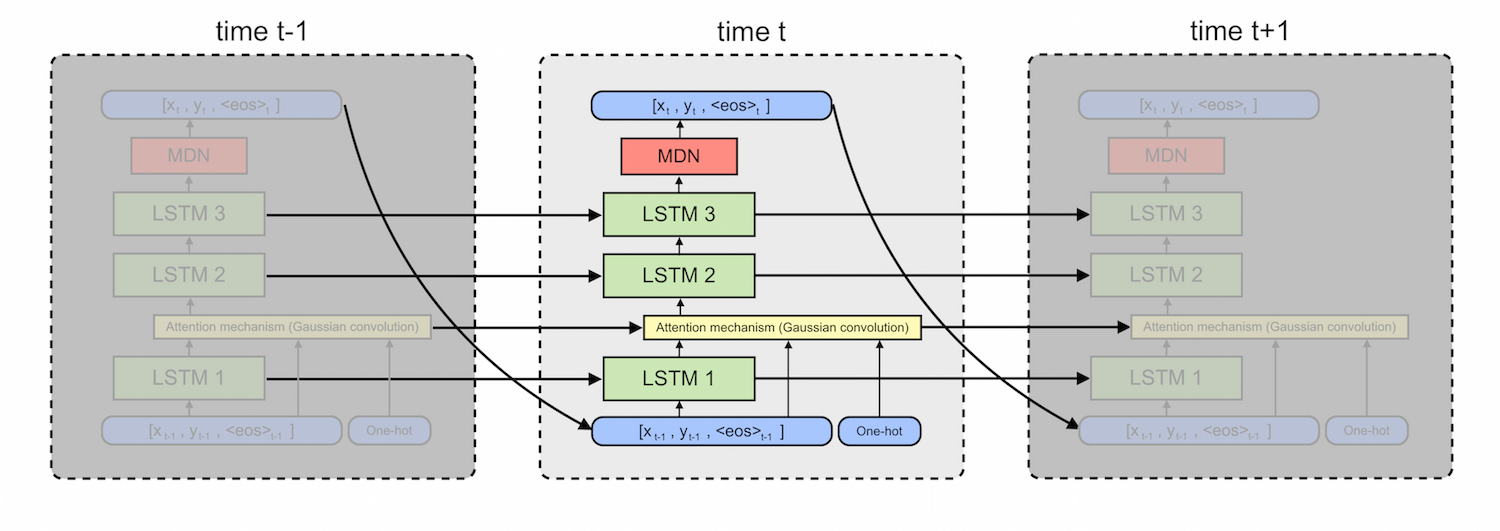

The project is an implementation of Alex Graves's 2014 paper, Generating Sequences With Recurrent Neural Networks. It takes input text and produces realistic-looking handwriting using stacked LSTM layers together with an attention mechanism. The output is a sequence of x, y coordinates that can be plotted to render the sentence in longhand.

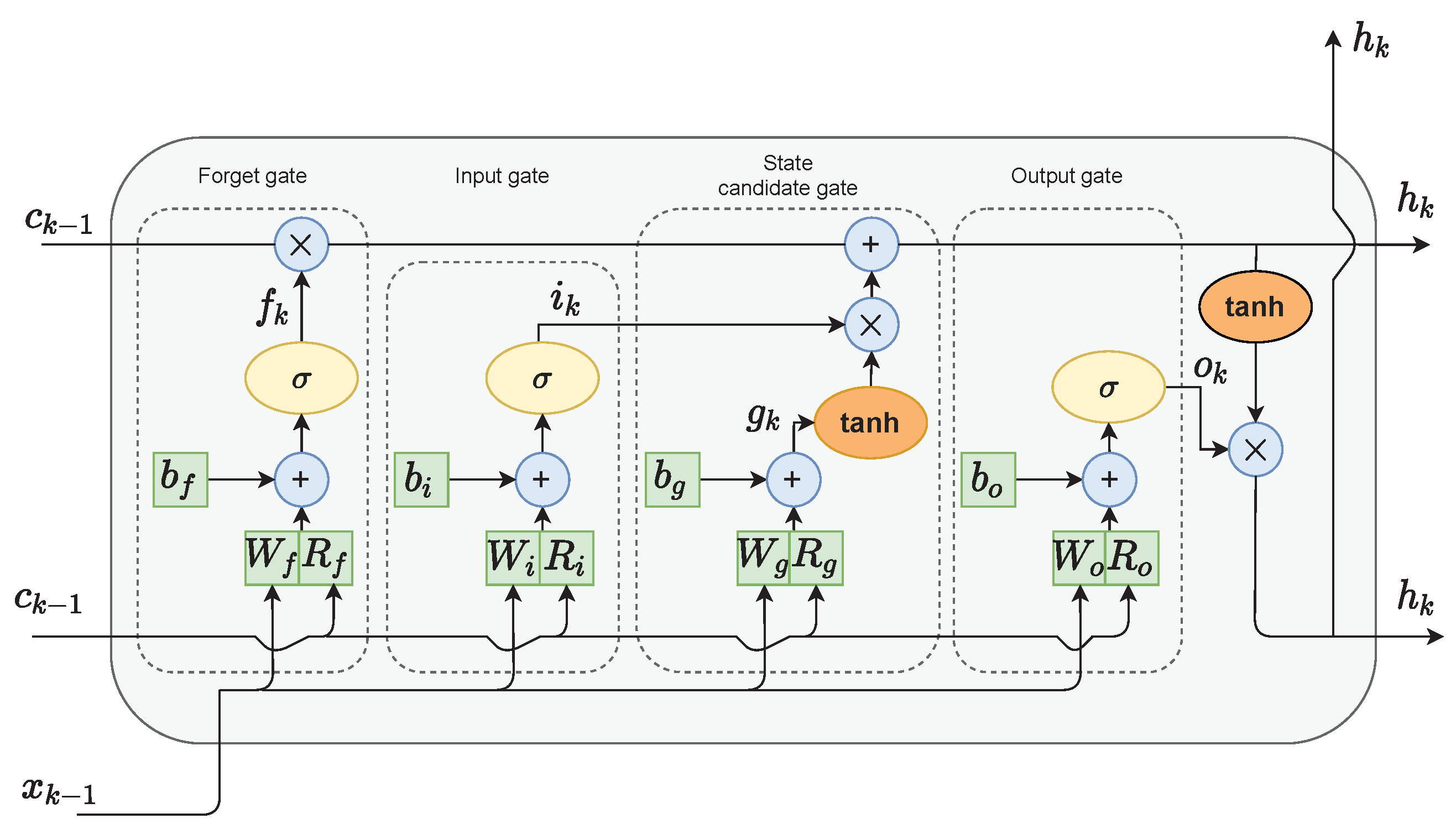

The model uses Long Short-Term Memory (LSTM) layers and an attention mechanism.

An LSTM can use its memory to generate complex, realistic sequences containing long-range structure. It uses purpose-built memory cells to store information, which makes it better than a standard RNN at finding and exploiting long-range dependencies in the data.

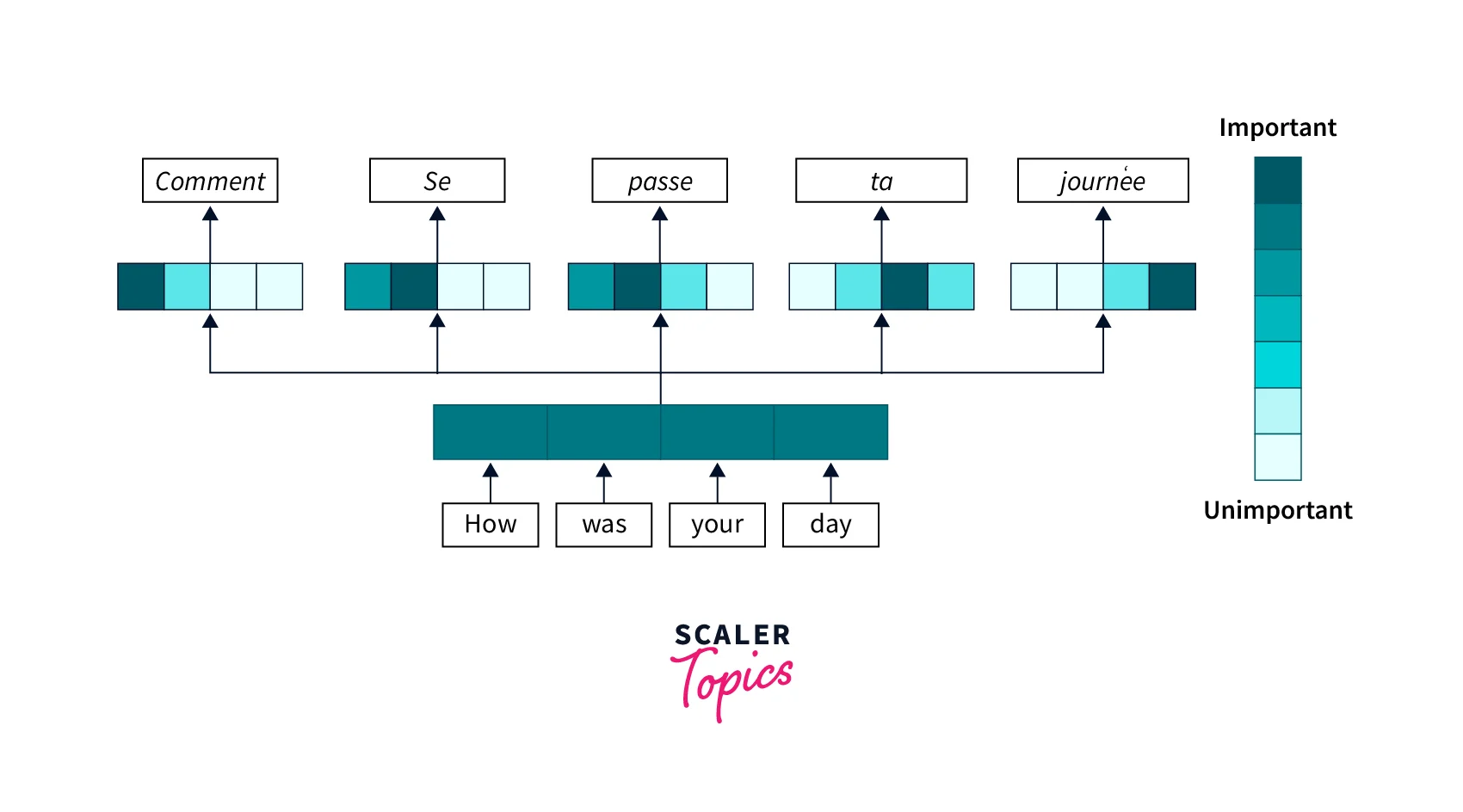

The number of coordinates used to write each character varies greatly with style, size, pen speed, etc., so the text and the pen trace are not aligned in advance. The attention mechanism creates a soft window that dynamically determines an alignment between the text and the pen locations. That is, it helps the model learn to decide which character to write next.
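The soft window can be sketched in a few lines of NumPy. This is a minimal illustration of the Gaussian window described in the Graves paper, not this project's actual code: the function name `soft_window`, the argument names, and the 0-based character positions are assumptions made for the example.

```python
import numpy as np

def soft_window(alpha, beta, kappa, onehots):
    """Compute the soft window over the text for one time step.

    alpha, beta, kappa: arrays of shape (K,) holding the importance,
        width and location of K window Gaussians (hypothetical names).
    onehots: array of shape (U, V), one one-hot row per character.
    Returns (phi, w): phi has shape (U,), w has shape (V,).
    """
    u = np.arange(onehots.shape[0])  # character positions 0..U-1 (assumed 0-based)
    # phi[u] = sum_k alpha_k * exp(-beta_k * (kappa_k - u)^2)
    phi = np.sum(
        alpha[:, None] * np.exp(-beta[:, None] * (kappa[:, None] - u[None, :]) ** 2),
        axis=0,
    )
    # The window vector is the phi-weighted sum of the character encodings.
    w = phi @ onehots
    return phi, w

# Example: a single Gaussian centred on the third character of a 5-character text.
phi, w = soft_window(
    alpha=np.array([1.0]),
    beta=np.array([1.0]),
    kappa=np.array([2.0]),
    onehots=np.eye(5),
)
```

As `kappa` increases over time, the peak of `phi` slides along the text, which is how the network moves from one character to the next.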

Mixture Density Networks are neural networks that can quantify their own uncertainty, which helps capture the randomness of handwriting. They output the parameters μ (mean), σ (standard deviation), and ρ (correlation) for several multivariate Gaussian components. They also estimate a weight π for each of these distributions, which gauges the contribution of each distribution to the output.
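To make the role of these parameters concrete, here is a hedged NumPy sketch that turns a raw output vector into valid mixture parameters and samples one pen point. The ordering of the raw vector, the function names `mdn_params` and `sample_point`, and the end-of-stroke sign convention (taken from the Graves paper) are assumptions for illustration; the project's own layer may differ.

```python
import numpy as np

def mdn_params(raw):
    """Split a raw vector of length 1 + 6*M into mixture parameters.

    Assumed layout: [eos, pi (M), mu_x (M), mu_y (M), sigma_x (M), sigma_y (M), rho (M)].
    """
    eos_hat = raw[0]
    pi_hat, mu_x, mu_y, sx_hat, sy_hat, rho_hat = np.split(raw[1:], 6)
    eos = 1.0 / (1.0 + np.exp(eos_hat))   # end-of-stroke probability in (0, 1)
    pi = np.exp(pi_hat - pi_hat.max())    # numerically stable softmax
    pi = pi / pi.sum()                    # weights sum to 1
    sigma_x = np.exp(sx_hat)              # standard deviations must be positive
    sigma_y = np.exp(sy_hat)
    rho = np.tanh(rho_hat)                # correlation must lie in (-1, 1)
    return eos, pi, mu_x, mu_y, sigma_x, sigma_y, rho

def sample_point(eos, pi, mu_x, mu_y, sigma_x, sigma_y, rho, rng):
    """Draw one (x, y, eos) sample from the mixture."""
    k = rng.choice(len(pi), p=pi)         # pick a component by its weight
    cov = [
        [sigma_x[k] ** 2, rho[k] * sigma_x[k] * sigma_y[k]],
        [rho[k] * sigma_x[k] * sigma_y[k], sigma_y[k] ** 2],
    ]
    x, y = rng.multivariate_normal([mu_x[k], mu_y[k]], cov)
    pen_up = int(rng.random() < eos)      # Bernoulli draw for the pen lift
    return x, y, pen_up

# Example with 20 components, so the raw vector has 1 + 6*20 = 121 entries.
rng = np.random.default_rng(0)
params = mdn_params(np.zeros(121))
point = sample_point(*params, rng)
```

Sampling from the mixture instead of taking the most likely point is what lets the generated handwriting vary from run to run.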

The network consists of 3 stacked LSTM layers. Their output is fed to a mixture density layer, which provides the mean, standard deviation, correlation, and weights of 20 mixture components.

The model consists of 3 LSTM layers stacked on top of each other, along with additional input from the character sequence, mediated by the window layer.

The inputs (x, y, eos) are taken from the IAM-OnDB online handwriting database, where x and y are the pen coordinates and eos marks the points in the sequence where the pen is lifted off the whiteboard.
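As an illustration of how the eos flag is used, the sketch below splits a sequence of (x, y, eos) points into separate strokes, which is the form needed to plot the handwriting without joining pen lifts. It assumes absolute coordinates and that eos is 1 on the last point of each stroke; if the data holds offsets instead, a cumulative sum over x and y would be needed first. The function name `split_strokes` is hypothetical.

```python
import numpy as np

def split_strokes(points):
    """Split an (N, 3) array of (x, y, eos) rows into a list of (n_i, 2) strokes."""
    points = np.asarray(points, dtype=float)
    # Index just past every point where the pen is lifted.
    ends = np.flatnonzero(points[:, 2] == 1) + 1
    strokes = np.split(points[:, :2], ends)
    # Drop the empty trailing piece produced when the last point has eos = 1.
    return [s for s in strokes if len(s) > 0]

# Example: two strokes of two points each.
strokes = split_strokes([
    [0.0, 0.0, 0],
    [1.0, 1.0, 1],
    [2.0, 0.0, 0],
    [3.0, 1.0, 1],
])
```

Each returned stroke can then be drawn as its own line, for example with one `plot` call per stroke in a plotting library.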

Onehots is the one-hot representation of the input text.
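A one-hot representation can be built as in the following sketch. The alphabet and the function name `encode_text` are assumptions for the example; the project's real character set is not given here.

```python
import numpy as np

def encode_text(text, alphabet):
    """Return a (len(text), len(alphabet)) one-hot matrix for `text`.

    Raises KeyError if `text` contains a character missing from `alphabet`.
    """
    index = {ch: i for i, ch in enumerate(alphabet)}
    onehots = np.zeros((len(text), len(alphabet)), dtype=np.float32)
    for pos, ch in enumerate(text):
        onehots[pos, index[ch]] = 1.0  # exactly one active entry per character
    return onehots

# Example with a tiny hypothetical alphabet of three symbols.
onehots = encode_text("ab a", alphabet="ab ")
```

Each row corresponds to one character of the input, so the matrix has as many rows as the text has characters.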

Some of the ways in which this model can be improved are listed below:

- Controlling the width of the output handwriting and introducing variance to it for a more realistic look.

- Generating handwriting in the style of a particular writer. This can be achieved with primed sampling.

- Mohammed Bhadsorawala

- Sanika Kumbhare

- SRA VJTI - Eklavya 2023

- Heartfelt gratitude to our mentors Lakshaya Singhal and Advait Dhamorikar for guiding us at every point of our journey. The project wouldn't have been possible without them.

Deep Learning courses