Dataset: Titanic

- Feature preprocessing

  - Categorical

  - Numerical

  - Ordinal

  - Text: see the NLP session

  - Date or Time: see the time series session

- Feature generation

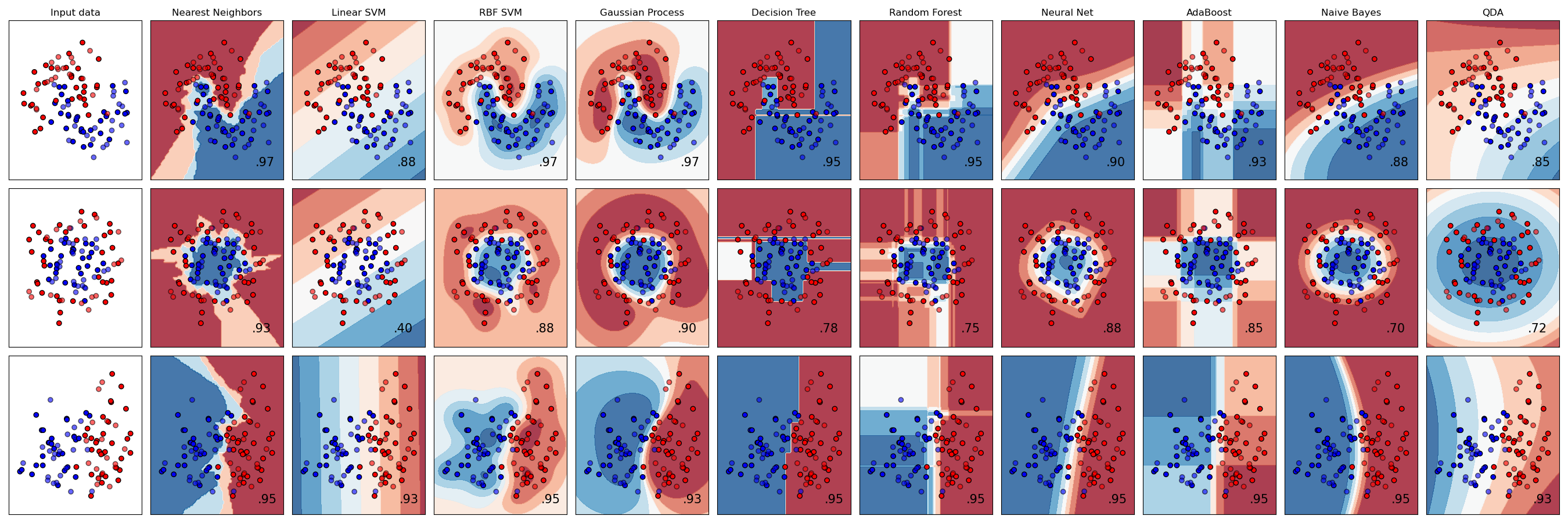

- Models

- Validation

- Classification metrics

- Conclusion

👉 Use label encoding for tree-based models (Random Forest, Gradient Boosting...)

👉 Use one-hot encoding for non-tree-based models (Linear models, Neural Networks, Support Vector Machines...)
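The two categorical encodings can be sketched in plain Python as follows. This is a minimal illustration, not the notebook's own code: the helper names `label_encode` and `one_hot_encode` are hypothetical, and the sample values stand in for the Titanic `Embarked` column.

```python
def label_encode(values):
    """Map each distinct category to an integer code (sorted for determinism)."""
    categories = sorted(set(values))
    mapping = {cat: code for code, cat in enumerate(categories)}
    return [mapping[v] for v in values], mapping


def one_hot_encode(values):
    """Turn each category into its own 0/1 indicator column."""
    categories = sorted(set(values))
    rows = [[1 if v == cat else 0 for cat in categories] for v in values]
    return rows, categories


# Toy sample standing in for the Titanic "Embarked" column (an assumption).
embarked = ["S", "C", "S", "Q"]

codes, mapping = label_encode(embarked)        # one integer column
onehot, columns = one_hot_encode(embarked)     # one 0/1 column per category
```

Label encoding keeps a single column, which trees can split on directly. One-hot encoding avoids implying an order between categories, which matters for models that treat the codes as magnitudes.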

Integer or decimal numbers, e.g. age, measurements, prices, ...

Ordered categories. We cannot assume that the intervals between them are equal, e.g. driving licence, education level, ticket type.

- If you have the price of a house and its area in square metres, you can add the price per square metre.

- If you have the distance along the x and y axes, you can add the straight-line distance using the Pythagorean theorem.

- If you have prices, you can add the fractional part, because people perceive it very subjectively.
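The three ideas above could look roughly like this in Python. It is only a sketch: the field names (`price`, `area_m2`, `dx`, `dy`) and the helper `add_generated_features` are hypothetical, and the fractional part is expressed in whole cents to sidestep floating-point noise.

```python
import math


def add_generated_features(row):
    """Return a copy of `row` with three derived features added."""
    out = dict(row)
    # Price per square metre from total price and area.
    out["price_per_m2"] = row["price"] / row["area_m2"]
    # Straight-line distance from the x and y offsets (Pythagoras).
    out["distance"] = math.hypot(row["dx"], row["dy"])
    # Fractional part of the price, in whole cents.
    out["price_cents"] = round(row["price"] * 100) % 100
    return out


# Made-up example record (an assumption, not data from the Titanic set).
house = {"price": 199999.99, "area_m2": 80.0, "dx": 3.0, "dy": 4.0}
features = add_generated_features(house)
```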

| Model | Comment | Library | More info |
|---|---|---|---|
| Decision Tree | Simple and explainable. | Sklearn | |
| Linear models | Simple and explainable. | Sklearn | |
| Random Forest | Good starting point (tree ensemble). | Sklearn | |
| Gradient Boosting | Usually the best (tree ensemble). | XGBoost, LightGBM, CatBoost | |
| Neural Network | Good if you have a lot of data. | Fast.ai v2 | blog |

Jeremy Howard on twitter: Our advice for tabular modeling

We have two approaches to tabular modelling: decision tree ensembles, and neural networks. And we have mentioned two different decision tree ensembles: random forests, and gradient boosting. Each is very effective, but each also has compromises:

Random forests are the easiest to train, because they are extremely resilient to hyperparameter choices and require very little preprocessing. They are very fast to train, and should not overfit if you have enough trees. But they can be a little less accurate, especially if extrapolation is required, such as predicting future time periods.

Gradient boosting machines are in theory just as fast to train as random forests, but in practice you will have to try lots of different hyperparameters. They can overfit. At inference time they will be slower, because they cannot operate in parallel. But they are often a little bit more accurate than random forests.

| | sklearn Random Forest | XGBoost Gradient Boosting | LightGBM Gradient Boosting | Try |
|---|---|---|---|---|
| 🔷 Number of trees | n_estimators | num_round 💡 | num_iterations 💡 | 100 |
| 🔷 Max depth of the tree | max_depth | max_depth | max_depth | 7 |
| 🔶 Min cases per final tree leaf | min_samples_leaf | min_child_weight | min_data_in_leaf | |
| 🔷 % of rows used to build the tree | max_samples | subsample | bagging_fraction | 0.8 |
| 🔷 % of feats used to build the tree | max_features | colsample_bytree | feature_fraction | |
| 🔷 Speed of training | NOT IN FOREST | eta | learning_rate | |
| 🔶 L1 regularization | NOT IN FOREST | alpha | lambda_l1 | |
| 🔶 L2 regularization | NOT IN FOREST | lambda | lambda_l2 | |
| Random seed | random_state | seed | _seed | |

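- 🔷: Increasing this parameter pushes the model toward overfitting. Increase it if the model underfits, decrease it if the model overfits.

- 🔶: Increasing this parameter pushes the model toward underfitting (regularization). Increase it if the model overfits, decrease it if the model underfits.

- 💡: For Gradient Boosting, early stopping may work better than fixing the number of trees in advance.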
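As an illustration of the sklearn Random Forest column, the sketch below plugs the suggested starting values into `RandomForestClassifier`. The data is synthetic (generated with `make_classification`) purely so the example runs on its own. It is an assumption standing in for the real features, not the Titanic set.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split

# Synthetic stand-in data (an assumption; replace with your own features).
X, y = make_classification(n_samples=500, n_features=10, random_state=0)
X_train, X_valid, y_train, y_valid = train_test_split(
    X, y, test_size=0.2, random_state=0
)

model = RandomForestClassifier(
    n_estimators=100,  # number of trees
    max_depth=7,       # max depth of each tree
    max_samples=0.8,   # fraction of rows drawn per tree (uses the default bootstrap=True)
    random_state=0,    # random seed, for reproducibility
)
model.fit(X_train, y_train)

# Accuracy on the held-out validation split.
valid_accuracy = model.score(X_valid, y_valid)
```

The values are starting points to tune against a validation set, not recommended final settings.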

Neural networks take the longest time to train, and require extra preprocessing such as normalisation; this normalisation needs to be used at inference time as well. They can provide great results, and extrapolate well, but only if you are careful with your hyperparameters, and are careful to avoid overfitting.

We suggest starting your analysis with a random forest. This will give you a strong baseline, and you can be confident that it's a reasonable starting point. You can then use that model for feature selection and partial dependence analysis, to get a better understanding of your data.

From that foundation, you can try Gradient Boosting and Neural Nets, and if they give you significantly better results on your validation set in a reasonable amount of time, you can use them.

| Categorical Ordinal | | |
|---|---|---|
| Numerical | Nothing | |

Map data to a normal distribution: Box-Cox

A Box-Cox transformation is a general way to transform non-normally distributed variables toward a normal shape.

| Lambda value (λ) | Transformed data |
|---|---|
| -3 | Y⁻³ = 1/Y³ |
| -2 | Y⁻² = 1/Y² |
| -1 | Y⁻¹ = 1/Y¹ |
| -0.5 | Y⁻⁰·⁵ = 1/√Y |
| 0 | log(Y) |
| 0.5 | Y⁰·⁵ = √Y |
| 1 | Y¹ |
| 2 | Y² |
| 3 | Y³ |
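The table lists the simplified power form Y^λ. The standard Box-Cox definition also subtracts 1 and divides by λ, so that the transform tends smoothly to log(Y) as λ approaches 0. A minimal sketch of that definition, with `box_cox` as a hypothetical helper name:

```python
import math


def box_cox(y, lam):
    """Box-Cox transform of a strictly positive value y for a given lambda."""
    if y <= 0:
        raise ValueError("Box-Cox requires strictly positive values")
    if lam == 0:
        return math.log(y)          # limiting case of the formula below
    return (y ** lam - 1) / lam     # shifted and scaled power transform


values = [1.0, 4.0, 9.0]
sqrt_like = [box_cox(v, 0.5) for v in values]  # square-root-shaped transform
logged = [box_cox(v, 0) for v in values]       # plain natural log
```

In practice λ is usually estimated from the data rather than picked by hand; `scipy.stats.boxcox` does this when no lambda is passed.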
