
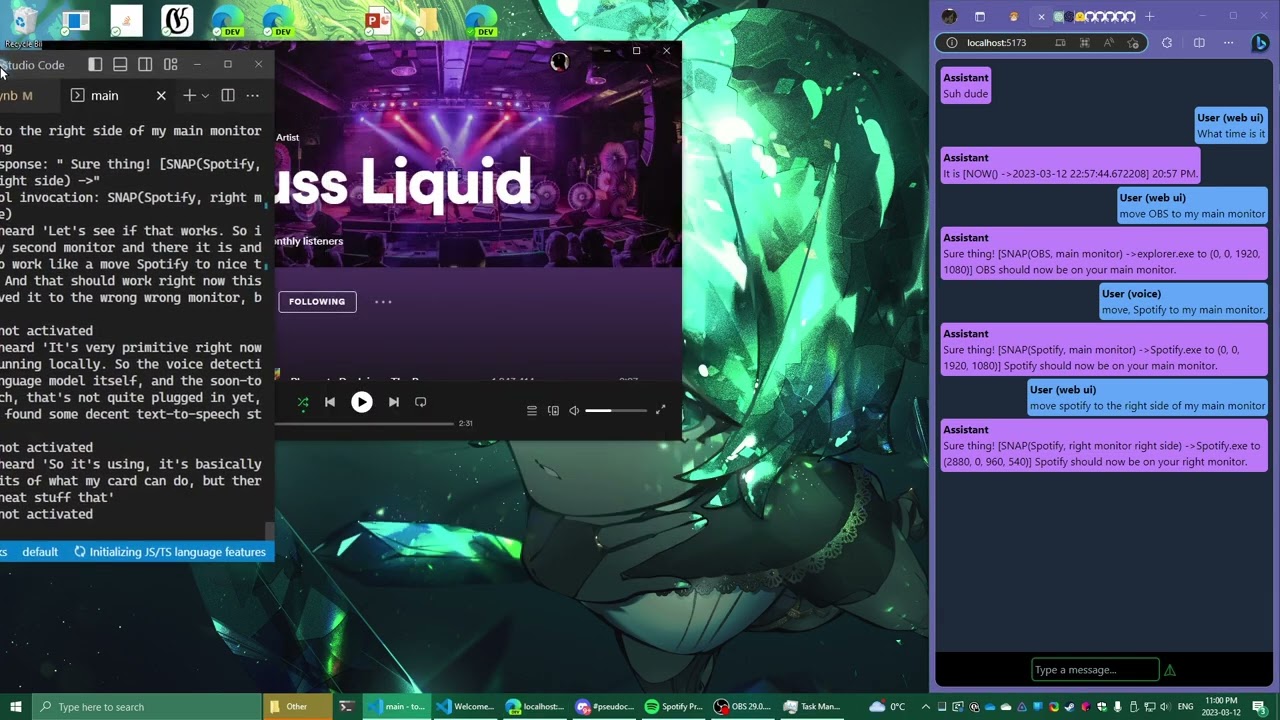

Uses Toolformer-style prompting to create a copilot for your operating system.

Currently it only supports moving windows around. Example:

User:

move notepad, main monitor, top right

Assistant: Okay, moving notepad... [SNAP(notepad, main monitor top right) ->moved notepad to main monitor top right] done!

Runs locally using pythia-2.8B-deduped. This would work a lot better with the ChatGPT API, but I have a good graphics card for testing, and I think it's important to develop these tools to run locally instead of relying only on third-party inference.

Because local models are less capable, I use a few crude workarounds to make it work.

I tried having the language model pick the process name that best matched the user input, but it wasn't very good at it. Better prompts would probably help, but I used a fuzzy string matcher instead.
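A minimal sketch of the fuzzy-matching step, assuming Python's standard-library `difflib` as the matcher (the README doesn't say which one the project uses). `best_process_match` is a hypothetical helper, and the process list is hardcoded for illustration; in practice it would come from the OS.

```python
import difflib
from typing import List, Optional


def best_process_match(query: str, process_names: List[str], cutoff: float = 0.4) -> Optional[str]:
    """Return the process name closest to `query`, or None if nothing is close enough."""

    def normalize(name: str) -> str:
        # Lowercase and drop a trailing ".exe" so "Notepad" lines up with "notepad.exe".
        name = name.lower().strip()
        return name[:-4] if name.endswith(".exe") else name

    # Map normalized names back to the original process names.
    normalized = {normalize(p): p for p in process_names}
    matches = difflib.get_close_matches(normalize(query), list(normalized), n=1, cutoff=cutoff)
    return normalized[matches[0]] if matches else None


processes = ["notepad.exe", "Spotify.exe", "chrome.exe", "explorer.exe"]
print(best_process_match("notepad", processes))  # notepad.exe
print(best_process_match("spotfy", processes))   # Spotify.exe
```

A string matcher is deterministic and cheap, which suits a small local model that tends to guess wrong on this kind of lookup.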

The same goes for converting natural-language positions into window coordinates.
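One way to do this without the language model is a fixed lookup from position words to regions of the monitor. The sketch below assumes a simple 2x2 grid; `Rect`, `REGIONS`, and `position_to_rect` are hypothetical names, and the monitor geometry is passed in by hand rather than queried from Windows.

```python
from typing import Dict, NamedTuple, Tuple


class Rect(NamedTuple):
    x: int
    y: int
    width: int
    height: int


# Hypothetical mapping: position words -> (column, row, columns spanned, rows spanned)
# on a 2x2 grid laid over the monitor.
REGIONS: Dict[str, Tuple[int, int, int, int]] = {
    "top left": (0, 0, 1, 1),
    "top right": (1, 0, 1, 1),
    "bottom left": (0, 1, 1, 1),
    "bottom right": (1, 1, 1, 1),
    "left": (0, 0, 1, 2),
    "right": (1, 0, 1, 2),
    "top": (0, 0, 2, 1),
    "bottom": (0, 1, 2, 1),
    "full": (0, 0, 2, 2),
}


def position_to_rect(phrase: str, monitor: Rect) -> Rect:
    """Map a phrase like 'main monitor top right' onto a rectangle within `monitor`."""
    phrase = phrase.lower()
    # Check longer keys first so "top right" wins over "top" or "right".
    for key in sorted(REGIONS, key=len, reverse=True):
        if key in phrase:
            col, row, cols, rows = REGIONS[key]
            half_w, half_h = monitor.width // 2, monitor.height // 2
            return Rect(monitor.x + col * half_w, monitor.y + row * half_h, cols * half_w, rows * half_h)
    raise ValueError(f"unrecognized position: {phrase!r}")


main = Rect(0, 0, 1920, 1080)
print(position_to_rect("main monitor top right", main))
# Rect(x=960, y=0, width=960, height=540)
```

Offsetting by `monitor.x` and `monitor.y` lets the same function place windows on a secondary monitor that sits to the right of or below the main one.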

The important part is that the agent invokes the tool itself, and that the tool's parameters are passed as natural language.
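A minimal sketch of how a Toolformer-style call like the one in the example transcript could be executed, assuming generation pauses once the model emits `->`. The regex, `TOOLS`, `snap`, and `run_pending_tool` are hypothetical; the README doesn't show the project's parsing code. The arguments stay as natural-language strings, which a matcher like the ones described above would then resolve.

```python
import re
from typing import Callable, Dict, List

# Matches an unfinished call at the end of the text, e.g. "[SNAP(notepad, main monitor top right) ->"
CALL_PATTERN = re.compile(r"\[(?P<name>[A-Z_]+)\((?P<args>[^)]*)\)\s*->\s*$")


def snap(target: str, position: str) -> str:
    # Hypothetical stand-in for the real window-moving code.
    return f"moved {target} to {position}"


TOOLS: Dict[str, Callable[..., str]] = {"SNAP": snap}


def run_pending_tool(generated: str) -> str:
    """If `generated` ends in an unfinished tool call, run it and append the result."""
    match = CALL_PATTERN.search(generated)
    if match is None:
        return generated  # no pending call; keep generating normally
    tool = TOOLS.get(match.group("name"))
    if tool is None:
        return generated  # unknown tool name; leave the text untouched
    # Split on the first comma only: target first, free-form position second.
    args: List[str] = [a.strip() for a in match.group("args").split(",", 1)]
    # Append the result and close the bracket so the model can continue its reply.
    return f"{generated}{tool(*args)}]"


text = "Okay, moving notepad... [SNAP(notepad, main monitor top right) ->"
print(run_pending_tool(text))
# Okay, moving notepad... [SNAP(notepad, main monitor top right) ->moved notepad to main monitor top right]
```

The completed text is fed back to the model, which then finishes its reply (for example with "done!").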

Check out main.ipynb for a demo. Windows only for now.

Credit to minosvasilias for their prompt.

Credit to tatellos for their Whisper mic code.

Credit to coqui-ai for their TTS code.

Check out LangChain.