
Commit

This commit does not belong to any branch on this repository, and may belong to a fork outside of the repository.

Add terraform deployment example and documentation

This adds a reference implementation of a terraform deployment script.


Showing 27 changed files with 2,207 additions and 20 deletions.


@@ -4,3 +4,4 @@ target/

    .terraform*
    .config
    terraform.tfstate*
    .update_scheduler_ips.zip


@@ -0,0 +1,68 @@

# Turbo Cache's Terraform Deployment
This directory contains a reference implementation and starting point for a full AWS Terraform deployment of Turbo Cache's cache and remote execution system.

## Prerequisites - Set Up a Hosted Zone / Base Domain
You must first set up a Route53 hosted zone in AWS, because the deployment generates SSL certificates and needs a domain to register them under.

1. Log in to AWS and go to [Route53](https://console.aws.amazon.com/route53/v2/hostedzones)
2. Click `Create hosted zone`
3. Enter the domain (or subdomain) you plan to use as the name, and make sure it is a `Public hosted zone`
4. Click into the hosted zone you just created, expand `Hosted zone details`, and copy the `Name servers`
5. In the DNS server where your domain is currently hosted (it may be another Route53 hosted zone), create a new `NS` record for the same domain/subdomain you used in Step 3. Its value should be the `Name servers` from Step 4

It may take a few minutes for the new record to propagate.
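
To check from a terminal whether the delegation from Step 5 is live, you can compare the hosted zone's name servers with what public DNS returns for the domain. This is a minimal sketch, assuming the AWS CLI and `dig` are installed; the script name, the `ns_match`/`check_delegation` helpers, and the `DOMAIN`/`HOSTED_ZONE_ID` arguments are hypothetical.

```sh
#!/usr/bin/env bash
# check_delegation.sh (hypothetical name): compare the hosted zone's name
# servers (Step 4) with the NS records public DNS returns for the domain.
# Usage: ./check_delegation.sh DOMAIN HOSTED_ZONE_ID
set -eu

# Lower-case, strip trailing dots, drop blank lines, then sort and dedupe.
normalize_ns() {
  tr 'A-Z' 'a-z' | sed -e 's/\.$//' -e '/^$/d' | sort -u
}

# Succeeds when both newline-separated lists name the same servers.
ns_match() {
  [ "$(printf '%s\n' "$1" | normalize_ns)" = "$(printf '%s\n' "$2" | normalize_ns)" ]
}

check_delegation() {
  domain="$1"
  zone_id="$2"
  # With --output text the name servers come back tab-separated on one line.
  expected=$(aws route53 get-hosted-zone --id "$zone_id" \
    --query 'DelegationSet.NameServers' --output text | tr '\t' '\n')
  actual=$(dig +short NS "$domain")
  if ns_match "$expected" "$actual"; then
    echo "Delegation for $domain is live."
  else
    echo "Delegation for $domain has not propagated yet." >&2
    return 1
  fi
}

# Only run when called with both arguments, so the functions can be sourced.
if [ "$#" -eq 2 ]; then
  check_delegation "$1" "$2"
fi
```

For example, `./check_delegation.sh example.com Z0123456789ABC` (both placeholder values); rerun it until it reports the delegation as live.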

## Terraform Setup
1. [Install Terraform](https://www.terraform.io/downloads)
2. Open a terminal and run `terraform init` in this directory
3. Run `terraform apply -var base_domain=INSERT_DOMAIN_NAME_YOU_SETUP_IN_PREREQUISITES_HERE`
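
Terraform automatically loads a `terraform.tfvars` file from the working directory, so instead of repeating `-var base_domain=...` on every run you can persist the value once. A small sketch of that; the `write_tfvars` helper and the script are hypothetical, and `example.com` stands in for your domain.

```sh
#!/usr/bin/env bash
# Persist base_domain in terraform.tfvars (read automatically by
# `terraform plan`/`apply`), then initialize and apply.
# Usage: ./setup.sh DOMAIN
set -eu

# write_tfvars DOMAIN [FILE]: hypothetical helper; overwrites FILE.
write_tfvars() {
  domain="$1"
  file="${2:-terraform.tfvars}"
  # Reject empty values and values containing spaces.
  case "$domain" in
    ""|*" "*) echo "invalid domain: '$domain'" >&2; return 1 ;;
  esac
  printf 'base_domain = "%s"\n' "$domain" > "$file"
}

if [ "$#" -ge 1 ]; then
  write_tfvars "$1"
  terraform init
  terraform apply
fi
```

Run it from this directory, e.g. `./setup.sh example.com`; later applies then only need `terraform apply`.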

It will take some time to apply; when it finishes, everything should be running. The endpoints are:
```
CAS: grpcs://cas.INSERT_DOMAIN_NAME_YOU_SETUP_IN_PREREQUISITES_HERE
Scheduler: grpcs://scheduler.INSERT_DOMAIN_NAME_YOU_SETUP_IN_PREREQUISITES_HERE
```

As a reference, you should be able to build and test this project with Bazel using something like:
```sh
bazel test //... \
  --remote_cache=grpcs://cas.INSERT_DOMAIN_NAME_YOU_SETUP_IN_PREREQUISITES_HERE \
  --remote_executor=grpcs://scheduler.INSERT_DOMAIN_NAME_YOU_SETUP_IN_PREREQUISITES_HERE
```
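
To avoid retyping these flags, they can also live in a `.bazelrc` under a named config. Because `test` inherits `build` options, `bazel test --config=turbo_cache //...` then picks them up while plain `bazel build` stays local. A small sketch that generates those lines; the config name `turbo_cache` and the script are hypothetical.

```sh
#!/usr/bin/env bash
# Print .bazelrc lines that point Bazel at this deployment's endpoints,
# scoped to a named config.
set -eu

# remote_lines DOMAIN: the two options, active only with --config=turbo_cache.
remote_lines() {
  printf 'build:turbo_cache --remote_cache=grpcs://cas.%s\n' "$1"
  printf 'build:turbo_cache --remote_executor=grpcs://scheduler.%s\n' "$1"
}

# Usage: ./remote_bazelrc.sh example.com >> .bazelrc
if [ "$#" -eq 1 ]; then
  remote_lines "$1"
fi
```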

|

|

||

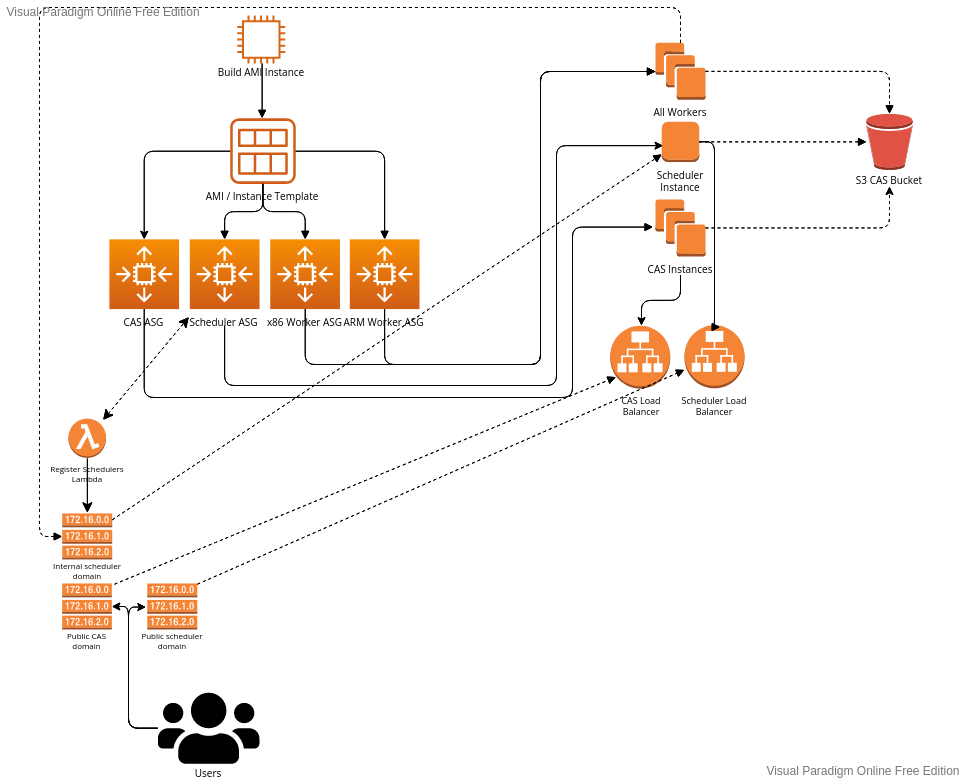

| ## Server configuration | ||

|  | ||

|

|

||

| ## Instances | ||

| All instances use the same configuration for the AMI. There are technically two AMI's but only because by default this solution will spawn workers for x86 servers and ARM servers, so two AMIs are required. | ||
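
The two AMIs are named `turbo_cache_arm_base` and `turbo_cache_x86_base` (from the `aws_ami_from_instance` resource in this commit). A sketch for listing them and for mapping a machine's `uname -m` output to the matching `arm`/`x86` key; the `ami_key` and `list_base_amis` helpers are hypothetical.

```sh
#!/usr/bin/env bash
# List the two base AMIs, or print which one matches the local machine.
# Usage: ./amis.sh --list | --local
set -eu

# ami_key MACHINE: map a `uname -m` value to the for_each key ("arm" or "x86").
ami_key() {
  case "$1" in
    aarch64|arm64) echo arm ;;
    x86_64|amd64) echo x86 ;;
    *) echo "unsupported architecture: $1" >&2; return 1 ;;
  esac
}

# Images owned by this account whose name matches turbo_cache_*_base.
list_base_amis() {
  aws ec2 describe-images --owners self \
    --filters 'Name=name,Values=turbo_cache_*_base' \
    --query 'Images[].[Name,ImageId,Architecture]' --output text
}

if [ "${1:-}" = "--list" ]; then
  list_base_amis
elif [ "${1:-}" = "--local" ]; then
  echo "turbo_cache_$(ami_key "$(uname -m)")_base"
fi
```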

### CAS
The CAS is used only as a public interface to the S3 data. All the other services talk to S3 directly, so they don't need to go through the CAS instance.

#### More optimal configuration
You can reduce cost and increase reliability by running the CAS on the same machine as the client that speaks the remote execution protocol (e.g. Bazel). Point that client at `localhost`, and the local CAS will translate between the Bazel Remote Execution Protocol API and S3.

In Bazel you can do this by creating an executable file at `tools/bazel` in your workspace root. This file can be a script (e.g. bash or Python) that starts the local proxy as a background service and then invokes the real Bazel executable with the proper flags configured.
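
A sketch of such a `tools/bazel` wrapper. Bazel's launcher (including Bazelisk) runs `tools/bazel` when it exists and passes the real binary's path in `BAZEL_REAL`. The proxy invocation (`turbo-cache <config.json>`), the config file location next to the wrapper, and port `50051` are assumptions; adapt them to your local CAS config.

```sh
#!/usr/bin/env bash
# tools/bazel: start a local Turbo Cache CAS proxy, then run the real Bazel
# with --remote_cache pointing at it. Port and config path are assumptions.
set -e

LOCAL_CAS_PORT="${LOCAL_CAS_PORT:-50051}"
LOCAL_CAS_CONFIG="${LOCAL_CAS_CONFIG:-$(dirname "$0")/turbo_cache_local.json}"

# Only commands that accept build options get the flag;
# e.g. `bazel version` would reject --remote_cache.
wants_remote_flags() {
  case "$1" in
    build|test|run|coverage) return 0 ;;
    *) return 1 ;;
  esac
}

local_flags() {
  echo "--remote_cache=grpc://localhost:${LOCAL_CAS_PORT}"
}

start_proxy() {
  # Start the proxy in the background unless one is already running.
  if ! pgrep -f "turbo-cache ${LOCAL_CAS_CONFIG}" >/dev/null; then
    nohup turbo-cache "$LOCAL_CAS_CONFIG" >/tmp/turbo-cache-local.log 2>&1 &
    sleep 1  # crude: give the proxy a moment to bind its port
  fi
}

main() {
  cmd="$1"
  shift
  if wants_remote_flags "$cmd"; then
    start_proxy
    # Command options must follow the command name, not precede it.
    exec "$BAZEL_REAL" "$cmd" "$(local_flags)" "$@"
  fi
  exec "$BAZEL_REAL" "$cmd" "$@"
}

# BAZEL_REAL is set by the launcher; without it, only define the functions.
if [ -n "${BAZEL_REAL:-}" ]; then
  if [ "$#" -eq 0 ]; then exec "$BAZEL_REAL"; fi
  main "$@"
fi
```

Remember to `chmod +x tools/bazel`. This simplified version assumes the command is the first argument, so invocations with startup options before the command (`bazel --output_base=... build`) are passed through without the flag.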

### Scheduler
The scheduler is currently the system's only single point of failure; only one scheduler is supported at a time.

The workers look up the scheduler through a Route53 DNS record. That record is set by a lambda function configured to run every time an instance change happens in the scheduler's auto-scaling group.

We don't use a load balancer here, mostly for cost reasons and because there's no real gain from one: all the traffic stays inside the VPC, so we don't need to encrypt it.
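
The lambda's source is not part of this excerpt. As a rough shell equivalent of the DNS update it performs, the sketch below looks up the `InService` instances of an auto-scaling group and UPSERTs their private IPs into a Route53 record. It assumes an `A` record, a 60-second TTL, and the AWS CLI; the script, the `change_batch`/`update_record` helpers, and their arguments are hypothetical.

```sh
#!/usr/bin/env bash
# Point a Route53 record at the private IPs of an ASG's InService instances.
# Usage: ./update_scheduler_ips.sh HOSTED_ZONE_ID RECORD_NAME ASG_NAME
set -eu

# change_batch NAME TTL IP...: print a Route53 UPSERT change batch (A record).
change_batch() {
  name="$1"
  ttl="$2"
  shift 2
  records=""
  for ip in "$@"; do
    records="${records:+$records,}{\"Value\": \"$ip\"}"
  done
  printf '{"Changes": [{"Action": "UPSERT", "ResourceRecordSet": {"Name": "%s", "Type": "A", "TTL": %s, "ResourceRecords": [%s]}}]}\n' \
    "$name" "$ttl" "$records"
}

update_record() {
  zone_id="$1"
  record="$2"
  asg="$3"
  ids=$(aws autoscaling describe-auto-scaling-groups --auto-scaling-group-names "$asg" \
    --query 'AutoScalingGroups[0].Instances[?LifecycleState==`InService`].InstanceId' \
    --output text)
  if [ -z "$ids" ] || [ "$ids" = "None" ]; then
    echo "no InService instances in $asg" >&2
    return 1
  fi
  # $ids and $ips are intentionally unquoted: one word per instance / IP.
  ips=$(aws ec2 describe-instances --instance-ids $ids \
    --query 'Reservations[].Instances[].PrivateIpAddress' --output text)
  aws route53 change-resource-record-sets --hosted-zone-id "$zone_id" \
    --change-batch "$(change_batch "$record" 60 $ips)"
}

if [ "$#" -eq 3 ]; then
  update_record "$1" "$2" "$3"
fi
```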

### Workers
By default (this can be changed), worker instances in this configuration are only 1 or 2 CPU machines, all with NVMe drives. This is also for cost reasons, since NVMe drives are much faster and often cheaper than EBS volumes.

When an instance spawns, it looks up the available properties of the node and reports them to the scheduler. For example, if the instance has two cores, it tells the scheduler it has two cores.
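
A sketch of the kind of node inspection described above: read the core count and architecture and format them as properties to report. The property names `cpu_count`/`cpu_arch`, the JSON shape, and the `node_properties` helper are illustrative assumptions, not necessarily what the worker configuration in this commit uses.

```sh
#!/usr/bin/env bash
# Collect basic node properties a worker could report to the scheduler.
# Usage: ./node_properties.sh --print
set -eu

# node_properties CORES ARCH: format the properties as a JSON object.
node_properties() {
  printf '{"cpu_count": "%s", "cpu_arch": "%s"}\n' "$1" "$2"
}

if [ "${1:-}" = "--print" ]; then
  # nproc: CPUs available to this process; uname -m: machine architecture.
  node_properties "$(nproc)" "$(uname -m)"
fi
```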

## Security
The security permissions of each instance group are very strict. The major vulnerabilities are that, by default, all instances have public IPs and all of them accept incoming traffic on port 22 (SSH) for debugging.

Because there is a real risk of this configuration ending up in production, the two S3 buckets (the ELB access logs bucket and the S3 CAS bucket) cannot be deleted while they have content.
If you would like to use `terraform destroy`, you will need to manually purge these buckets or change the Terraform files to force destroy them.
We chose the safer route, even if it makes the default developer experience slightly more difficult.
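
A sketch of the manual-purge option: empty each bucket with `aws s3 rm --recursive`, then destroy. The bucket names are placeholders (look them up in the S3 console or via `terraform state list`), the script and helpers are hypothetical, and `DRY_RUN=1` only prints the commands.

```sh
#!/usr/bin/env bash
# Empty the protected S3 buckets, then run `terraform destroy`.
# Usage: ./purge_and_destroy.sh BUCKET... (DRY_RUN=1 prints instead of running)
set -eu

# run CMD...: execute CMD, or just print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

purge_buckets() {
  for bucket in "$@"; do
    # Removes current objects only; a versioned bucket would also need its
    # object versions and delete markers removed.
    run aws s3 rm "s3://$bucket" --recursive
  done
}

if [ "$#" -gt 0 ]; then
  purge_buckets "$@"
  run terraform destroy
fi
```

`terraform destroy` still needs `base_domain`, either via `-var` or a `terraform.tfvars` file.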

## Future work / TODOs
* Currently S3 objects are never deleted. Depending on the configuration, this needs to be done carefully; the best approach is likely a service that runs continuously.
* Automatically scaling up the instances is not configured. The scheduler needs an endpoint that exposes a parsable (e.g. JSON) feed, which a lambda can read and publish to `CloudWatch`; then a scaling rule should be added to that ASG.
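
Since the scheduler endpoint does not exist yet, this only sketches the publishing half of that TODO: given a queue depth (however it ends up being read from the scheduler), push it to CloudWatch as a custom metric that an ASG scaling policy could track. The namespace `TurboCache`, the metric name `QueuedActions`, and the helpers are hypothetical.

```sh
#!/usr/bin/env bash
# Publish a scheduler queue-depth value as a custom CloudWatch metric.
# Usage: ./publish_queue_depth.sh QUEUED_ACTIONS (DRY_RUN=1 prints instead)
set -eu

metric_namespace="TurboCache"    # hypothetical
metric_name="QueuedActions"      # hypothetical

# is_count VALUE: succeed only for a non-negative integer.
is_count() {
  case "$1" in
    ""|*[!0-9]*) return 1 ;;
    *) return 0 ;;
  esac
}

# run CMD...: execute CMD, or just print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

publish() {
  is_count "$1" || { echo "not a count: '$1'" >&2; return 1; }
  run aws cloudwatch put-metric-data --namespace "$metric_namespace" \
    --metric-name "$metric_name" --value "$1" --unit Count
}

if [ "$#" -eq 1 ]; then
  publish "$1"
fi
```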

## Useful tips
You can add `-var terminate_ami_builder=false` to the `terraform apply` command to make it easier to modify, apply, and test your changes to these `.tf` files.
This keeps the AMI builder instances from being terminated, which costs more money, but means Terraform will not create a new AMI each time you run the command.
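
For that edit/apply/test loop, a tiny wrapper can pass both variables on every run. The script name and `dev_apply` helper are hypothetical, `example.com` is a placeholder, and `DRY_RUN=1` only prints the command.

```sh
#!/usr/bin/env bash
# dev_apply.sh (hypothetical): apply with terminate_ami_builder=false so the
# AMI builder instances stay up between iterations on the .tf files.
# Usage: ./dev_apply.sh DOMAIN [extra terraform apply args...]
set -e

# run CMD...: execute CMD, or just print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

dev_apply() {
  domain="$1"
  shift
  run terraform apply -var "base_domain=$domain" -var terminate_ami_builder=false "$@"
}

if [ "$#" -ge 1 ]; then
  dev_apply "$@"
fi
```

For example, `./dev_apply.sh example.com -auto-approve` skips the interactive confirmation.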


@@ -0,0 +1,139 @@

    # Copyright 2022 Nathan (Blaise) Bruer. All rights reserved.
    # -- Begin Base AMI ---

    resource "aws_instance" "build_turbo_cache_instance" {
      for_each = {
        arm = {
          "instance_type": var.build_arm_instance_type,
          "ami": var.build_base_ami_arm,
        }
        x86 = {
          "instance_type": var.build_x86_instance_type,
          "ami": var.build_base_ami_x86,
        }
      }

      ami                         = each.value["ami"]
      instance_type               = each.value["instance_type"]
      associate_public_ip_address = true
      key_name                    = aws_key_pair.turbo_cache_key.key_name
      iam_instance_profile        = aws_iam_instance_profile.builder_profile.name

      vpc_security_group_ids = [
        aws_security_group.allow_ssh_sg.id,
        aws_security_group.ami_builder_instance_sg.id,
        aws_security_group.allow_aws_ec2_and_s3_endpoints.id,
      ]

      root_block_device {
        volume_size = 8
        volume_type = "gp3"
      }

      tags = {
        "turbo_cache:instance_type" = "ami_builder",
      }

      connection {
        host        = coalesce(self.public_ip, self.private_ip)
        agent       = true
        type        = "ssh"
        user        = "ubuntu"
        private_key = data.tls_public_key.turbo_cache_pem.private_key_openssh
      }

      provisioner "local-exec" {
        command = <<EOT
          set -ex
          SELF_DIR=$(pwd)
          cd ../../
          rm -rf $SELF_DIR/.terraform-turbo-cache-builder
          mkdir -p $SELF_DIR/.terraform-turbo-cache-builder
          find . ! -ipath '*/target*' -and ! -ipath '*/.*' -and ! -ipath './bazel-*' -type f -print0 | tar cvf $SELF_DIR/.terraform-turbo-cache-builder/file.tar.gz --null -T -
        EOT
      }

      provisioner "file" {
        source      = "./scripts/create_filesystem.sh"
        destination = "create_filesystem.sh"
      }

      provisioner "remote-exec" {
        # Moving common temp folder locations to the NVMe drives (if available)
        # greatly reduces the amount of data written to the EBS volume. This also
        # makes the AMI/EBS snapshot much faster to create, since the blocks on
        # the EBS drive were not changed.
        # When the instance starts, we need to give Amazon a little time to
        # install the keys for all the apt packages.
        inline = [
          <<EOT
            set -eux &&
            `# When the instance first starts up, AWS may not have finished adding the certs to the` &&
            `# apt servers, so we sleep for a few seconds` &&
            sleep 5 &&
            sudo apt update &&
            sudo apt install -y jq &&
            sudo mv ~/create_filesystem.sh /root/create_filesystem.sh &&
            sudo chmod +x /root/create_filesystem.sh &&
            sudo /root/create_filesystem.sh /mnt/data &&
            sudo rm -rf /tmp/* &&
            sudo mkdir -p /mnt/data/tmp &&
            sudo chmod 777 /mnt/data/tmp &&
            sudo mount --bind /mnt/data/tmp /tmp &&
            sudo chmod 777 /tmp &&
            sudo mkdir -p /mnt/data/docker &&
            sudo mkdir -p /var/lib/docker &&
            sudo mount --bind /mnt/data/docker /var/lib/docker
          EOT
        ]
      }

      provisioner "file" {
        source      = "./.terraform-turbo-cache-builder/file.tar.gz"
        destination = "/tmp/file.tar.gz"
      }

      provisioner "remote-exec" {
        inline = [
          <<EOT
            set -eux &&
            mkdir -p /tmp/turbo-cache &&
            cd /tmp/turbo-cache &&
            tar xvf /tmp/file.tar.gz &&
            sudo apt install -y docker.io awscli &&
            cd /tmp/turbo-cache &&
            sudo docker build -t turbo-cache-runner -f ./deployment-examples/docker-compose/Dockerfile . &&
            container_id=$(sudo docker create turbo-cache-runner) &&
            `# Copy the compiled binary out of the container` &&
            sudo docker cp $container_id:/usr/local/bin/turbo-cache /usr/local/bin/turbo-cache &&
            `# Remove all containers and images, as they are no longer needed` &&
            sudo docker rm $(sudo docker ps -a -q) &&
            sudo docker rmi $(sudo docker images -q) &&
            `` &&
            sudo mv /tmp/turbo-cache/deployment-examples/terraform/scripts/scheduler.json /root/scheduler.json &&
            sudo mv /tmp/turbo-cache/deployment-examples/terraform/scripts/cas.json /root/cas.json &&
            sudo mv /tmp/turbo-cache/deployment-examples/terraform/scripts/worker.json /root/worker.json &&
            sudo mv /tmp/turbo-cache/deployment-examples/terraform/scripts/start_turbo_cache.sh /root/start_turbo_cache.sh &&
            sudo chmod +x /root/start_turbo_cache.sh &&
            sudo mv /tmp/turbo-cache/deployment-examples/terraform/scripts/turbo-cache.service /etc/systemd/system/turbo-cache.service &&
            sudo systemctl enable turbo-cache &&
            sync
          EOT
        ]
      }
    }

    resource "aws_ami_from_instance" "base_ami" {
      for_each = {
        arm = "arm",
        x86 = "x86"
      }

      name               = "turbo_cache_${each.key}_base"
      source_instance_id = aws_instance.build_turbo_cache_instance[each.key].id
      # Rebooting the instance terminates it because of the turbo-cache.service file,
      # so whether the builder instance terminates is controlled by whether it reboots.
      snapshot_without_reboot = !var.terminate_ami_builder
    }

    # -- End Base AMI ---
