Machine Learning is a subfield of computer science that gives computers the ability to learn without being programmed.

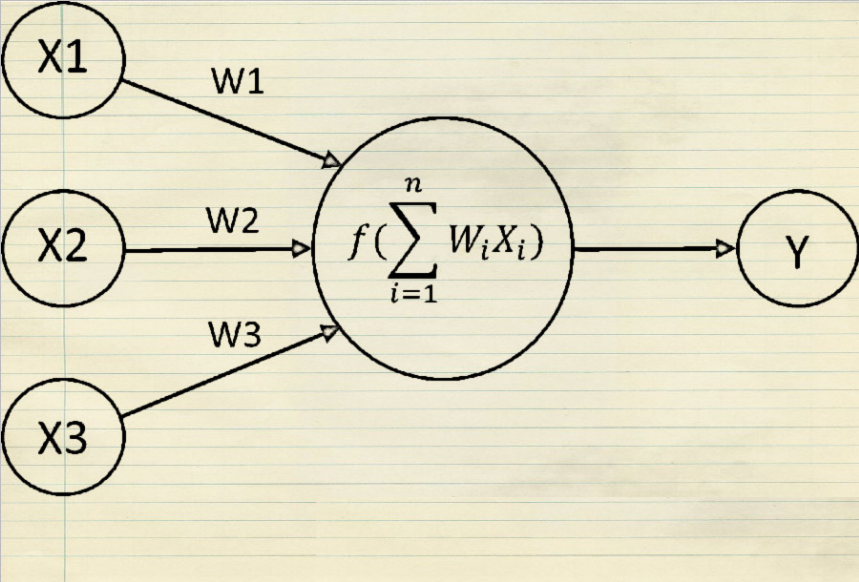

Perceptron is the simplest possible Neural Network. A neuron takes inputs, does some math with them, and produces one output.

Each input is multiplied by a weight and all the weighted inputs are added together with a bias.

The sum is passed through an activation function

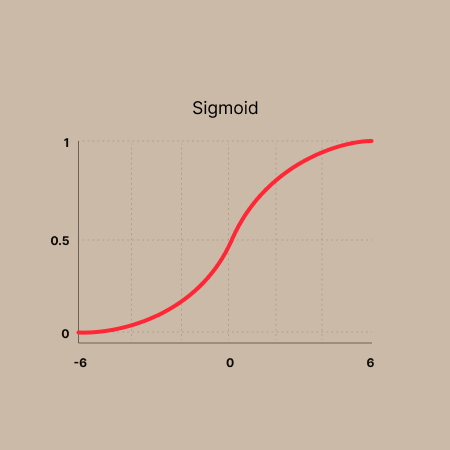

The activation function maps the weighted sum in between 0 to 1 or -1 to 1 etc. It is used to determine the output of neural network like yes or no. A commonly used activation function is the sigmoid function:

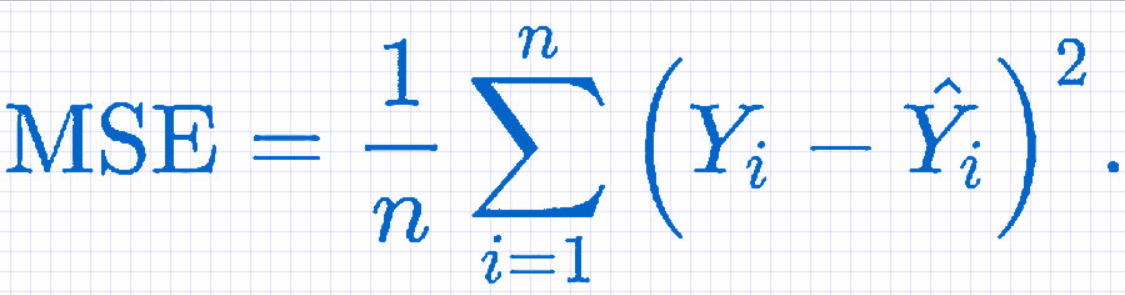

The loss function uses the weight and bias from the model and returns an error, based on how well the line fits a plot. It measures how well the neural network models the training data. When training, we aim to minimize this loss between the predicted and target outputs.A commonly used loss function is MSE (Mean Squared Error):

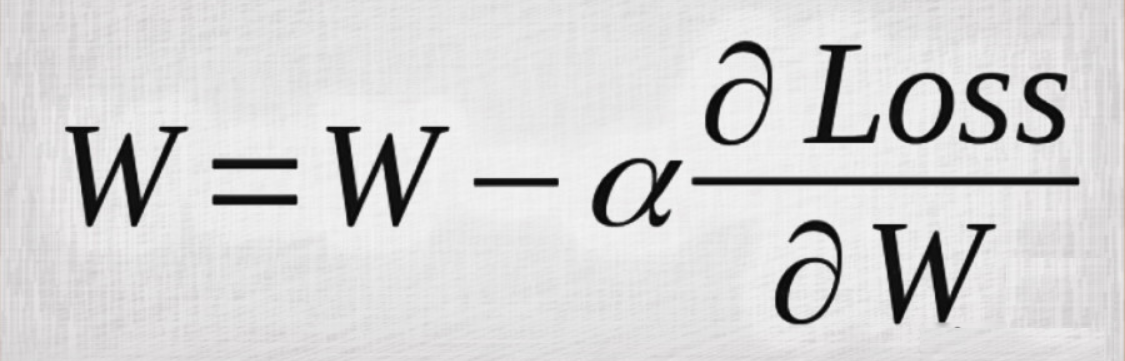

Gradient Descent is a popular algorithm for solving AI problems. It tells us how to change our weights and biases to minimize loss. A commonly optimization algorithm called stochastic gradient descent (SGD)