LF-DFnet: Light Field Image Super-Resolution Using Deformable Convolution, TIP 2021

News: We recommend our newly-released repository BasicLFSR for the implementation of our LF-DFnet. BasicLFSR is an open-source and easy-to-use toolbox for LF image SR. A number of milestone methods have been implemented (retrained) in a unified framework in BasicLFSR.

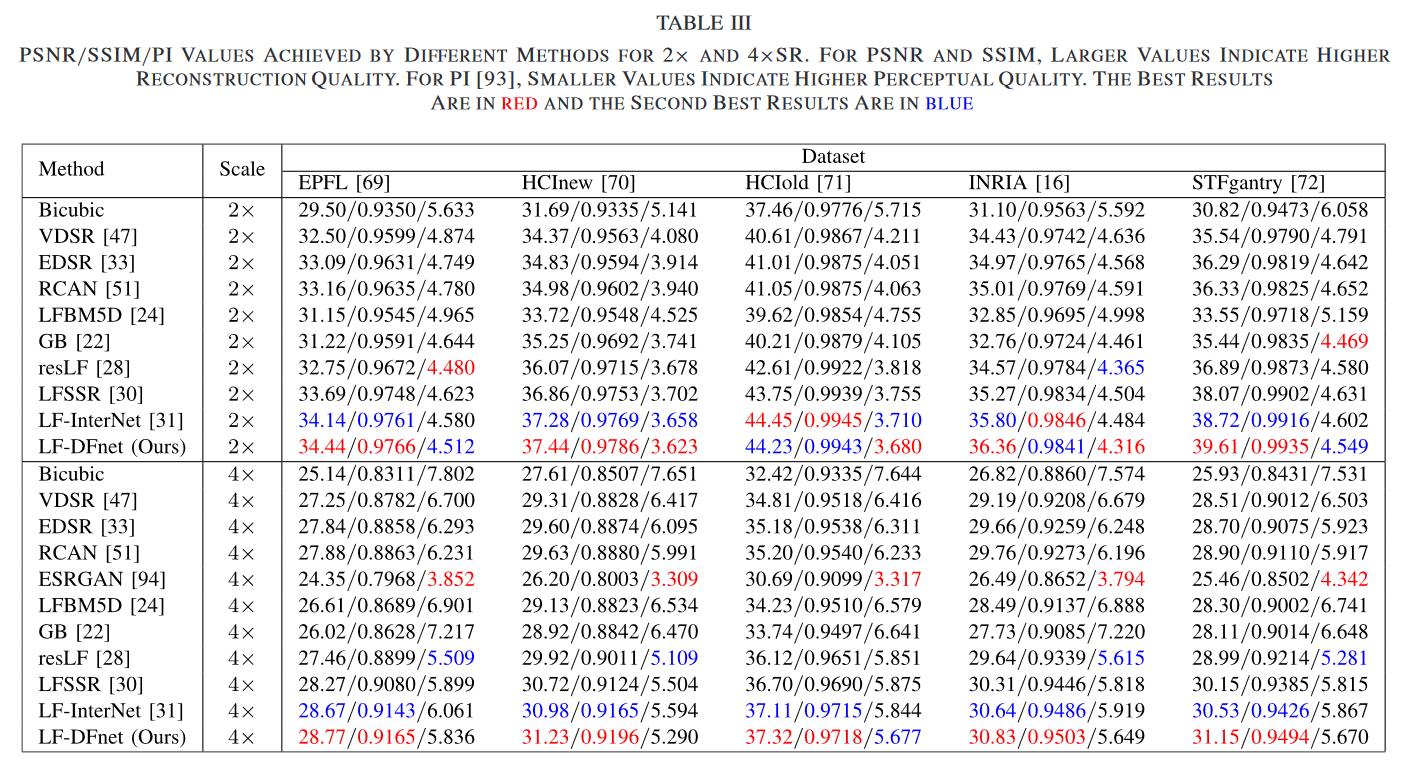

We share the super-resolved LF images generated by our LF-DFnet on all 5 datasets for 4xSR, so that researchers can compare their algorithms to our LF-DFnet without performing inference. Results are available at Baidu Drive (Key: nudt).

We used the EPFL, HCInew, HCIold, INRIA and STFgantry datasets for both training and testing. Please first download our datasets via Baidu Drive (key: nudt) or OneDrive, then place the 5 datasets in the folder ./Datasets/.

- PyTorch 1.3.0, torchvision 0.4.1. The code is tested with python=3.7, cuda=9.0.

- Matlab (For training/test data generation and result image generation)
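Before building anything, it can help to confirm that the installed packages match the versions listed above. The following is a minimal sketch, not part of the repository: the helper names `base_version`, `matches` and `installed_version` are hypothetical, and it assumes that each package exposes a `__version__` attribute (as `torch` and `torchvision` do).

```python
import sys

# Versions listed in the requirements above.
EXPECTED = {"torch": "1.3.0", "torchvision": "0.4.1"}


def base_version(version):
    """Drop a local build suffix such as '+cu92' from a version string."""
    return str(version).split("+")[0]


def matches(found, wanted):
    """Return True if an installed version equals the wanted one."""
    return found is not None and base_version(found) == wanted


def installed_version(module_name):
    """Return the module's __version__, or None if it cannot be imported."""
    try:
        module = __import__(module_name)
    except ImportError:
        return None
    return getattr(module, "__version__", None)


if __name__ == "__main__":
    print("python", sys.version.split()[0])
    for name, wanted in EXPECTED.items():
        found = installed_version(name)
        status = "ok" if matches(found, wanted) else "differs from tested version"
        print(name, found, "(expected", wanted + "):", status)
```

A mismatch does not necessarily mean the code will fail; it only indicates that the setup differs from the one the code was tested with.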

- Cd to `code/dcn`.

- For Windows users, run `cmd make.bat`. For Linux users, run `bash make.sh`. The scripts will build DCN automatically and create some folders. See `test.py` for example usage.

- Run `GenerateTrainingData.m` to generate training data. The generated data will be saved in `./Data/TrainData_UxSR_AxA/` (U=2,4; A=3,5,7,9).

- Run `train.py` to perform network training. Note that the training settings in `train.py` should match the generated training data. Checkpoints will be saved to `./log/`.
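Because the training settings must match the generated data, it may be useful to derive the data folder from the scale factor U and angular resolution A, or to read both back from a folder name. The sketch below follows the `UxSR_AxA` naming pattern described in this README; the function names `data_dir` and `parse_data_dir` are hypothetical and do not exist in the repository.

```python
import re

VALID_SCALES = (2, 4)        # U: upscaling factors listed in this README
VALID_ANGRES = (3, 5, 7, 9)  # A: angular resolutions listed in this README


def data_dir(split, scale, angres, root="./Data"):
    """Build a folder name such as ./Data/TrainData_4xSR_5x5/."""
    if split not in ("Train", "Test"):
        raise ValueError("split must be 'Train' or 'Test'")
    if scale not in VALID_SCALES:
        raise ValueError("unsupported scale factor: %r" % (scale,))
    if angres not in VALID_ANGRES:
        raise ValueError("unsupported angular resolution: %r" % (angres,))
    return "%s/%sData_%dxSR_%dx%d/" % (root, split, scale, angres, angres)


def parse_data_dir(path):
    """Recover (split, scale, angres) from a generated data folder name."""
    found = re.search(r"(Train|Test)Data_(\d+)xSR_(\d+)x(\d+)", path)
    if found is None:
        raise ValueError("not a generated data folder: %r" % (path,))
    split, scale, rows, cols = found.groups()
    if rows != cols:
        raise ValueError("angular resolution must be square (AxA)")
    return split, int(scale), int(rows)


if __name__ == "__main__":
    folder = data_dir("Train", 4, 5)
    print(folder)
    print(parse_data_dir(folder))
```

Comparing the parsed values against the scale factor and angular resolution configured for training is one way to catch a mismatch before a long run starts.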

- Run `GenerateTestData.m` to generate input LFs of the test set. The generated data will be saved in `./Data/TestData_UxSR_AxA/` (U=2,4; A=3,5,7,9).

- Run `test.py` to perform network inference. The PSNR and SSIM values of each dataset will be printed on the screen.

- Run `GenerateResultImages.m` to convert '.mat' files in `./Results/` to '.png' images in `./SRimages/`.
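For readers comparing their own results against the printed scores, the standard PSNR definition can be sketched as follows. This is an illustrative implementation of the textbook formula on flat sequences of pixel values, not the evaluation code used by `test.py`; details such as the color channel evaluated or border cropping may differ in the repository, and the `peak` parameter is an assumption (1.0 for images normalized to [0, 1]).

```python
import math


def psnr(reference, estimate, peak=1.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel sequences."""
    if len(reference) != len(estimate) or len(reference) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error over all pixels.
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)


if __name__ == "__main__":
    ground_truth = [0.0, 0.0, 0.0, 0.0]
    restored = [0.1, 0.1, 0.1, 0.1]
    # A uniform error of 0.1 gives an MSE of 0.01, i.e. about 20 dB.
    print(psnr(ground_truth, restored))
```

Higher values indicate a closer match to the ground truth; identical inputs yield infinity.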

If you find this work helpful, please consider citing the following paper:

    @article{LF-DFnet,
      author  = {Wang, Yingqian and Yang, Jungang and Wang, Longguang and Ying, Xinyi and Wu, Tianhao and An, Wei and Guo, Yulan},
      title   = {Light Field Image Super-Resolution Using Deformable Convolution},
      journal = {IEEE Transactions on Image Processing},
      volume  = {30},
      pages   = {1057-1071},
      year    = {2021},
    }

The DCN part of our code is adapted from DCNv2 and D3Dnet. We thank the authors for sharing their code.

Any questions regarding this work can be addressed to wangyingqian16@nudt.edu.cn.