🌟For the benchmark result📊, please check our website.👈👈👈

| Scenario name | Modality | Task type | Performance metrics | Client number | Sample number |
|---|---|---|---|---|---|
| celeba | Image | Binary Classification (Smiling vs. Not smiling) | Accuracy | 894 | 20,028 |
| femnist | Image | Multiclass Classification (62 classes) | Accuracy | 178 | 40,203 |
| | Text | Next-word Prediction | Accuracy | 813 | 27,738 |

| Scenario name | Modality | Task type | Performance metrics | Client number | Sample number |
|---|---|---|---|---|---|
| breast_horizontal | Medical | Binary Classification | AUC | 2 | 569 |
| default_credit_horizontal | Tabular | Binary Classification | AUC | 2 | 22,000 |
| give_credit_horizontal | Tabular | Binary Classification | AUC | 2 | 150,000 |
| student_horizontal | Tabular | Regression (Grade Estimation) | MSE | 2 | 395 |
| vehicle_scale_horizontal | Image | Multiclass Classification (4 classes) | Accuracy | 2 | 846 |

| Scenario name | Modality | Task type | Performance metrics | Vertical split details |
|---|---|---|---|---|
| breast_vertical | Medical | Binary Classification | AUC | A: 10 features, 1 label; B: 20 features |
| default_credit_vertical | Tabular | Binary Classification | AUC | A: 13 features, 1 label; B: 10 features |
| dvisits_vertical | Tabular | Regression (Number of consultations Estimation) | MSE | A: 3 features, 1 label; B: 9 features |
| give_credit_vertical | Tabular | Binary Classification | AUC | A: 5 features, 1 label; B: 5 features |
| motor_vertical | Sensor data | Regression (Temperature Estimation) | MSE | A: 4 features, 1 label; B: 7 features |
| student_vertical | Tabular | Regression (Grade Estimation) | MSE | A: 6 features, 1 label; B: 7 features |
| vehicle_scale_vertical | Image | Multiclass Classification (4 classes) | Accuracy | A: 9 features, 1 label; B: 9 features |

- Python
  - We recommend Python 3.9. It should also work on Python>=3.6; feel free to contact us if you encounter any problems.
  - You can easily set up a sandboxed Python environment with conda: `conda create -n flbenchmark python=3.9`

- Command line tools: `git`, `wget`, `unzip`

- Docker Engine

- NVIDIA Container Toolkit if you want to use GPU.

pip install flbenchmark colink
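Before running the benchmark, the prerequisites listed above can be checked with a short script. The following is a minimal sketch, not part of the project: the function names `missing_tools` and `python_ok` are hypothetical, and it assumes Docker Engine is reachable through the `docker` command. It does not check the NVIDIA Container Toolkit.

```python
import shutil
import sys

# Command line tools named in the requirements, plus the Docker CLI
# (assumption: Docker Engine is invoked as `docker`).
REQUIRED_TOOLS = ("git", "wget", "unzip", "docker")


def missing_tools(tools=REQUIRED_TOOLS, which=shutil.which):
    """Return the tools from `tools` that cannot be found on PATH."""
    return [tool for tool in tools if which(tool) is None]


def python_ok(version_info=sys.version_info, minimum=(3, 6)):
    """Return True if the interpreter is at least the minimum version."""
    return tuple(version_info[:2]) >= minimum


if __name__ == "__main__":
    print("Python version OK:", python_ok())
    print("Missing tools:", missing_tools() or "none")
```

The `which` parameter exists only so the lookup can be replaced in tests; in normal use the defaults are enough.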

We highly recommend using cloud servers (e.g. AWS) to run the benchmark. Here we provide an auto-deploy script for AWS.

- Set up a web server (e.g. Apache2, Nginx, or the PHP built-in web server) with PHP support, serving the files in the controller folder.

- Change `CONTROLLER_URL="http://172.31.2.2"` in install_auto.sh to the controller's web URL.

- Launch as many servers as the benchmark needs (e.g. 179 servers for femnist) on AWS and set the user data to the following script (remember to replace `http://172.31.2.2` with the controller's web URL).

      #!/bin/bash
      sudo -i -u ubuntu << EOF
      echo "Hello World" > testfile.txt
      wget http://172.31.2.2/install_auto.sh
      bash install_auto.sh > user_data.out
      EOF

- Wait for the servers to finish setting up. Once they are done, all servers' IPs are listed under controller/servers.

- Get the server list via `python get_server_list.py`, and copy `server_list.json` to the exp folder.

- Change the working directory to exp for later steps.

- Register users via

python register_users.py. - Launch corresponding framework operators

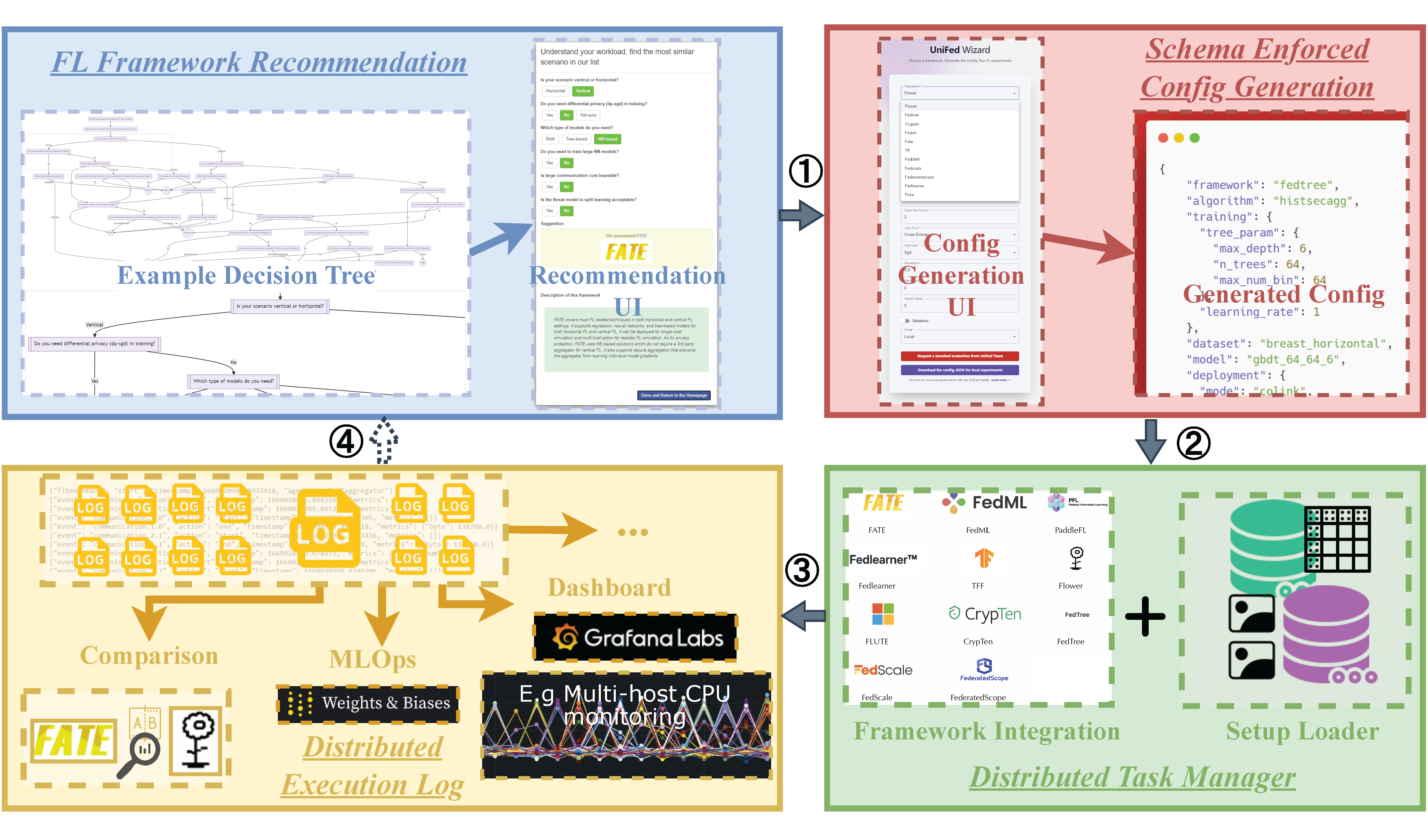

./start_po.a <unifed_protocol_name> <repo_url>. For example,./start_po.a unifed.flower https://github.com/CoLearn-Dev/colink-unifed-flower.git.

- Generate exp/config_auto.json from the wizard.

- Start an evaluation via `python run_task_auto.py`.
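The user data script in the steps above has to carry the controller's web URL. As a minimal sketch of that substitution, the helper below fills the URL into the same script text. The name `render_user_data` and the URL check are illustrative assumptions, not part of the project's tooling.

```python
# The user data script from the steps above, with the controller URL
# left as a placeholder.
USER_DATA_TEMPLATE = """#!/bin/bash
sudo -i -u ubuntu << EOF
echo "Hello World" > testfile.txt
wget {controller_url}/install_auto.sh
bash install_auto.sh > user_data.out
EOF
"""


def render_user_data(controller_url):
    """Return the user data script pointing at the given controller URL."""
    url = controller_url.rstrip("/")
    if not url.startswith(("http://", "https://")):
        raise ValueError("controller_url must start with http:// or https://")
    return USER_DATA_TEMPLATE.format(controller_url=url)


if __name__ == "__main__":
    # Example address taken from the steps above; replace with your own.
    print(render_user_data("http://172.31.2.2"))
```

The printed text can then be pasted into the user data field when launching the instances.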

Alternatively, you can deploy manually on your own cluster. Prepare as many Ubuntu 20.04 LTS servers as the benchmark needs and set up the environment on each of them. Here we provide a script to set up the environment.

- Download install.sh to the home directory.

- Change `SERVER_IP="172.16.1.1"` in install.sh to the corresponding server IP.

- Execute install.sh.

- Record all servers' IPs and the contents of `~/server/host_token.txt` in the following JSON format.

      {
        "test-0": [
          "http://172.31.4.48:80",
          "eyJ0eXAiOiJKV1QiLCJhbGciOiJIUzI1NiJ9.eyJwcml2aWxlZ2UiOiJob3N0IiwidXNlcl9pZCI6IjAyMGJhNzkyNzk0ZTlmMWUwZWZmNTEyOGM4NDdjZmE0MmRlNTllY2I1ODM4MzU4MDBmN2QwMzM1Yzg2YWFjZTViOSIsImV4cCI6MTY4OTM0ODAyM30.UP5JUYdbL-MkZDTSVuBHnIHoun1VkfcRgsBLV119v6A"
        ],
        "test-1": [
          "http://172.31.15.143:80",
          "eyJ0eXAiOiJKV1QiLCJhbGciOiJIUzI1NiJ9.eyJwcml2aWxlZ2UiOiJob3N0IiwidXNlcl9pZCI6IjAyNzJhNjgxNDg0NDMwNTFmZTI2NDFlYmZiNjM2MDgxODM5YmQ5NDdkZGFhNTcwYjY3MjU0MTI2NjU5YzBmZjVjYSIsImV4cCI6MTY4OTM0ODAzNH0.2HHQYzcMjif0ZkhSyltlOSC1ydsgWS8H_no5wWvohw0"
        ]
      }

- Change the working directory to exp for later steps.

- Put the JSON file describing the servers from the last step at exp/server_list.json.

- Register users via `python register_users.py` and record the user id for later use.

- Launch the corresponding framework operators via `./start_po.a <unifed_protocol_name> <repo_url>`. For example, `./start_po.a unifed.crypten https://github.com/CoLearn-Dev/colink-unifed-crypten.git`.

- Generate exp/config.json from the wizard. Remember to replace the `user_id` with the user id you got from the previous steps.

- Start an evaluation via `python run_task.py`.
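Since the server list for a manual deployment is written by hand, a quick structural check can catch mistakes before the later steps read it. The sketch below assembles and validates a dictionary in the JSON format shown above. The helper names `build_server_list` and `validate_server_list` are hypothetical, port 80 is assumed because the example entries use it, and the token check assumes host tokens are JWTs with three dot-separated segments, as in the example.

```python
import json


def build_server_list(records):
    """Build the server list from (name, ip, token) records.

    Assumption: every server listens on port 80, as in the example above.
    """
    return {name: [f"http://{ip}:80", token] for name, ip, token in records}


def validate_server_list(servers):
    """Return a list of problems; an empty list means the structure looks right."""
    if not isinstance(servers, dict):
        return ["top level must be a JSON object mapping names to [url, token]"]
    problems = []
    for name, entry in servers.items():
        if not isinstance(entry, list) or len(entry) != 2:
            problems.append(f"{name}: expected a two-element list [url, token]")
            continue
        url, token = entry
        if not isinstance(url, str) or not url.startswith(("http://", "https://")):
            problems.append(f"{name}: first element should be an http(s) URL")
        # Assumption: host tokens are JWTs (three dot-separated segments).
        if not isinstance(token, str) or token.count(".") != 2:
            problems.append(f"{name}: second element does not look like a JWT")
    return problems


if __name__ == "__main__":
    # Placeholder token; use the contents of ~/server/host_token.txt instead.
    servers = build_server_list([("test-0", "172.31.4.48", "header.payload.signature")])
    print(json.dumps(servers, indent=2))
    print("Problems:", validate_server_list(servers) or "none")
```

To check an existing file, load it with `json.load` and pass the result to `validate_server_list`.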

This project is licensed under Apache License Version 2.0. By contributing to the project, you agree to the license and copyright terms therein and release your contribution under these terms.